Recurrent neural network

A recurrent neural network (RNN) is a class of neural network where connections between units form a directed cycle. This creates an internal state of the network which allows it to exhibit dynamic temporal behavior. Unlike feedforward neural networks, RNNs can use their internal memory to process arbitrary sequences of inputs. This makes them applicable to tasks such as unsegmented connected handwriting recognition, where they have achieved the best known results.[1]
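The role of the internal state can be illustrated with a minimal sketch of a simple (Elman-style) recurrent update, which is only one of several RNN variants. The function names, the weight values, and the choice of `tanh` are assumptions made for illustration and do not come from the excerpt.

```python
import math


def rnn_step(x, h, w_xh, w_hh, b):
    """One recurrent update: h_new = tanh(W_xh x + W_hh h + b)."""
    h_new = []
    for j in range(len(h)):
        total = b[j]
        total += sum(w_xh[j][i] * x[i] for i in range(len(x)))
        # The recurrent term feeds the previous state back in (the "cycle").
        total += sum(w_hh[j][k] * h[k] for k in range(len(h)))
        h_new.append(math.tanh(total))
    return h_new


def run_rnn(sequence, w_xh, w_hh, b):
    """Fold a sequence of any length into a fixed-size hidden state."""
    h = [0.0] * len(b)  # the internal state starts at zero
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b)
    return h


# Hypothetical, untrained weights: 1 input unit, 2 hidden units.
W_XH = [[0.5], [-0.3]]
W_HH = [[0.1, 0.2], [0.0, 0.4]]
B = [0.0, 0.1]

state_a = run_rnn([[1.0], [0.0]], W_XH, W_HH, B)
state_b = run_rnn([[0.0], [1.0]], W_XH, W_HH, B)
state_long = run_rnn([[1.0], [0.0], [1.0], [1.0]], W_XH, W_HH, B)
```

Because the state carries over between steps, the same inputs presented in a different order (`state_a` versus `state_b`) lead to different final states, and sequences of different lengths are handled by the same weights.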

Neural Network Package Torch7

This package provides an easy way to build and train simple or complex neural networks. A network is composed of Modules, and several sub-classes of Module are available: container classes like Sequential, Parallel and Concat, which can contain simple layers like Linear, Mean, Max and Reshape, as well as convolutional layers and transfer functions like Tanh. Loss functions are implemented as sub-classes of Criterion.

Dimensionality reduction

In machine learning and statistics, dimensionality reduction or dimension reduction is the process of reducing the number of random variables under consideration,[1] and can be divided into feature selection and feature extraction.[2] The main linear technique for dimensionality reduction, principal component analysis, performs a linear mapping of the data to a lower-dimensional space in such a way that the variance of the data in the low-dimensional representation is maximized. In practice, the correlation matrix of the data is constructed and the eigenvectors of this matrix are computed. The eigenvectors that correspond to the largest eigenvalues (the principal components) can now be used to reconstruct a large fraction of the variance of the original data.
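The eigenvector step can be sketched in plain Python. This is an illustrative sketch under stated assumptions, not a reference implementation: it uses the covariance matrix of mean-centered data (the correlation matrix is the same computation on standardized data), it finds only the first principal component, and it swaps in power iteration for a full eigendecomposition. The data set and all function names are hypothetical.

```python
import math


def covariance_matrix(rows):
    """Return the sample covariance matrix and the mean-centered rows."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    cov = [[sum(c[a] * c[b] for c in centered) / (n - 1) for b in range(d)]
           for a in range(d)]
    return cov, centered


def top_eigenvector(matrix, iterations=200):
    """Power iteration: repeatedly apply the matrix and renormalize.

    Assumes the start vector is not orthogonal to the leading eigenvector.
    """
    d = len(matrix)
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iterations):
        w = [sum(matrix[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient v^T M v gives the matching eigenvalue.
    eigenvalue = sum(v[a] * sum(matrix[a][b] * v[b] for b in range(d))
                     for a in range(d))
    return eigenvalue, v


# Hypothetical 2-D data lying close to a straight line.
DATA = [[2.0, 1.9], [0.0, 0.1], [-1.0, -1.1], [1.0, 0.9], [-2.0, -1.8]]

cov, centered = covariance_matrix(DATA)
eigenvalue, component = top_eigenvector(cov)

# Map each 2-D point to a single coordinate along the principal component.
projected = [sum(c[j] * component[j] for j in range(len(component)))
             for c in centered]

# Fraction of the total variance kept by the 1-D representation.
explained = eigenvalue / sum(cov[j][j] for j in range(len(cov)))
```

For data like this, where the two variables move together, `explained` is close to 1, which is the sense in which a single component can account for a large fraction of the variance.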

Applications of adaptive systems - Piki

Asking what you can do with adaptive systems such as neural networks is a bit like asking what you can do with computer programming. The answer is the same: more or less anything that deals with information.

The B2B2C model

Jessica Tayenjam is a consultant at Rapid Innovation Group, where she helps high-potential, technology-enabled companies mitigate market risk and create scalable growth. She is one of the speakers at our Startup 101 course on Saturday 9th March. Here, she shares her thoughts on the B2B2C model. Many definitions of B2B2C businesses focus on e-commerce relationships where the ‘C’ is the buyer. However, I think there is a more interesting segment of B2B2C: a business selling a product to another business which its customers will engage with.

Deep learning

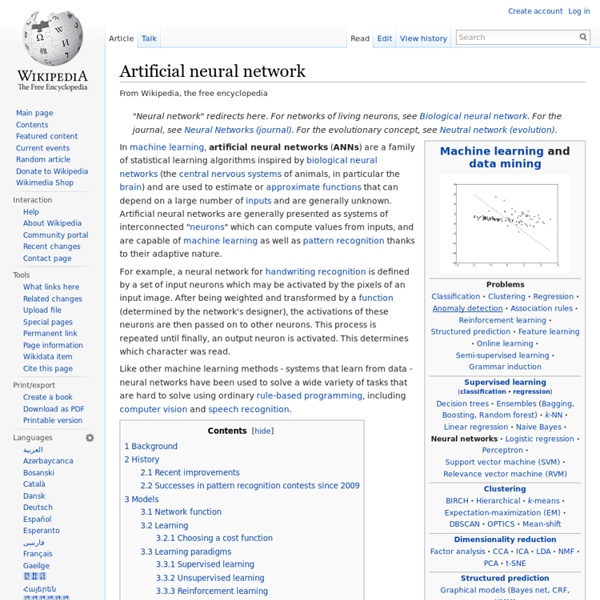

Deep learning (also known as deep structured learning or differential programming) is part of a broader family of machine learning methods based on artificial neural networks with representation learning. Learning can be supervised, semi-supervised or unsupervised.[1][2][3] Deep learning architectures such as deep neural networks, deep belief networks, recurrent neural networks and convolutional neural networks have been applied to fields including computer vision, speech recognition, natural language processing, audio recognition, social network filtering, machine translation, bioinformatics, drug design, medical image analysis, material inspection and board game programs, where they have produced results comparable to and in some cases surpassing human expert performance.[4][5][6] Artificial neural networks (ANNs) were inspired by information processing and distributed communication nodes in biological systems.

Self-organizing map

A self-organizing map (SOM) or self-organizing feature map (SOFM) is a type of artificial neural network (ANN) that is trained using unsupervised learning to produce a low-dimensional (typically two-dimensional), discretized representation of the input space of the training samples, called a map. Self-organizing maps are different from other artificial neural networks in the sense that they use a neighborhood function to preserve the topological properties of the input space. This makes SOMs useful for visualizing low-dimensional views of high-dimensional data, akin to multidimensional scaling. The model was first described as an artificial neural network by the Finnish professor Teuvo Kohonen, and is sometimes called a Kohonen map or network.[1][2] Like most artificial neural networks, SOMs operate in two modes: training and mapping.
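The two modes and the neighborhood function can be sketched as follows. This is a minimal illustration under several assumptions that the excerpt does not specify: a one-dimensional map rather than the typical two-dimensional one, a Gaussian neighborhood function, and linearly decaying learning rate and radius. All names and parameter values are hypothetical.

```python
import math
import random


def best_matching_unit(weights, sample):
    """Mapping mode: index of the node whose weight vector is closest."""
    def sq_dist(w):
        return sum((wi - si) ** 2 for wi, si in zip(w, sample))
    return min(range(len(weights)), key=lambda i: sq_dist(weights[i]))


def train_som(samples, n_nodes=5, epochs=50, lr0=0.5, sigma0=2.0, seed=0):
    """Training mode: pull the winning node and its map neighbors
    toward each sample."""
    rng = random.Random(seed)
    dim = len(samples[0])
    weights = [[rng.random() for _ in range(dim)] for _ in range(n_nodes)]
    for epoch in range(epochs):
        frac = 1.0 - epoch / epochs
        lr = lr0 * frac                  # learning rate shrinks over time
        sigma = max(sigma0 * frac, 0.3)  # so does the neighborhood radius
        for sample in samples:
            bmu = best_matching_unit(weights, sample)
            for i, w in enumerate(weights):
                # Gaussian neighborhood: nodes near the winner on the map
                # move more than nodes far from it.
                influence = math.exp(-((i - bmu) ** 2) / (2 * sigma ** 2))
                for j in range(dim):
                    w[j] += lr * influence * (sample[j] - w[j])
    return weights


# Hypothetical 2-D samples forming two loose groups inside the unit square.
SAMPLES = [[0.1, 0.1], [0.15, 0.05], [0.9, 0.95], [0.85, 0.9]]

som_weights = train_som(SAMPLES)
node_for_first = best_matching_unit(som_weights, SAMPLES[0])
node_for_last = best_matching_unit(som_weights, SAMPLES[-1])
```

Updating neighbors together with the winning node is what ties positions on the map to positions in the input space; without the `influence` term the procedure would reduce to plain competitive learning with no topological ordering.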

Social Curation Service Pearltrees Revamps Web and Mobile Apps

Pearltrees, the service that allows you to arrange Web content, photos and more (‘pearls’) into mindmap-style ‘trees’, has updated its Web and mobile apps today in order to bring a more seamless user experience and new features to the platform. The company said the Web platform has been fully redesigned and rebuilt in HTML5, making it more easily accessible on a range of different devices, as well as introducing new features also now found in its iOS and Android apps. It seems it’s becoming a bit of a habit for Pearltrees to significantly revamp its website at about this time each year, and this time around it’s gone all-out to make collections, and collecting, “simpler, more accessible and more shareable,” CEO and co-founder Patrice Lamothe said.

Neural Network Tutorial

I have been interested in artificial intelligence and artificial life for years and I read most of the popular books printed on the subject. I developed a grasp of most of the topics, yet neural networks always seemed to elude me. Sure, I could explain their architecture, but as to how they actually worked and how they were implemented… well, that was a complete mystery to me, as much magic as science.