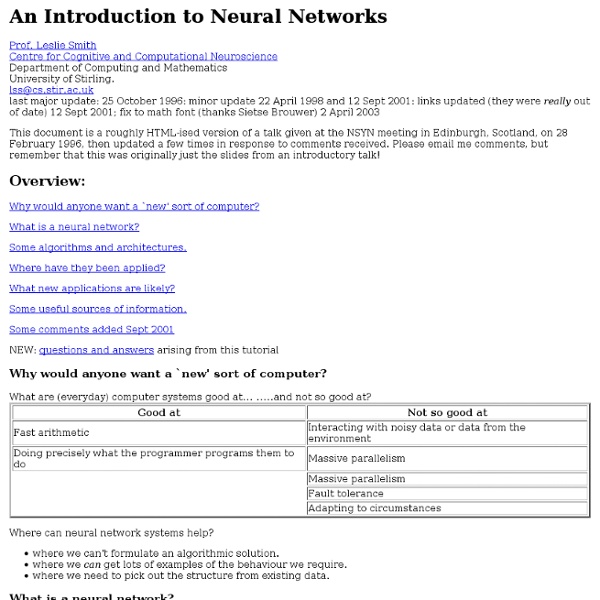

A Non-Mathematical Introduction to Using Neural Networks The goal of this article is to help you understand what a neural network is, and how it is used. Most people, even non-programmers, have heard of neural networks. There are many science fiction overtones associated with them. And like many things, sci-fi writers have created a vast, but somewhat inaccurate, public idea of what a neural network is. Most laypeople think of neural networks as a sort of artificial brain. Neural networks are one small part of AI. The human brain really should be called a biological neural network (BNN). There are some basic similarities between biological neural networks and artificial neural networks. Like I said, neural networks are designed to accomplish one small task. The task that neural networks accomplish very well is pattern recognition. Figure 1: A Typical Neural Network As you can see, the neural network above is accepting a pattern and returning a pattern. Neural Network Structure Neural networks are made of layers of similar neurons. Conclusion

New Pattern Found in Prime Numbers (PhysOrg.com) -- Prime numbers have intrigued curious thinkers for centuries. On one hand, prime numbers seem to be randomly distributed among the natural numbers with no other law than that of chance. But on the other hand, the global distribution of primes reveals a remarkably smooth regularity. This combination of randomness and regularity has motivated researchers to search for patterns in the distribution of primes that may eventually shed light on their ultimate nature. In a recent study, Bartolo Luque and Lucas Lacasa of the Universidad Politécnica de Madrid in Spain have discovered a new pattern in primes that has surprisingly gone unnoticed until now. They found that the distribution of the leading digit in the prime number sequence can be described by a generalization of Benford’s law. “Mathematicians have studied prime numbers for centuries,” Lacasa told PhysOrg.com. The set of all primes - like the set of all integers - is infinite.
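To get a feel for what "the distribution of the leading digit in the prime number sequence" means, one can simply tabulate the first digit of every prime below some bound. The Python sketch below does that and prints the classical Benford probabilities, log10(1 + 1/d), alongside for comparison. It is only an illustration: the function names and the bound of one million are assumptions made here, and the generalized form of Benford's law used by Luque and Lacasa is not given in the excerpt, so it is not reproduced.

```python
import math
from collections import Counter


def primes_below(n):
    """Return all primes < n using a simple Sieve of Eratosthenes."""
    if n < 3:
        return []
    sieve = bytearray([1]) * n
    sieve[0] = sieve[1] = 0
    for i in range(2, math.isqrt(n - 1) + 1):
        if sieve[i]:
            # Cross out every multiple of i, starting at i*i.
            sieve[i * i :: i] = bytearray(len(range(i * i, n, i)))
    return [i for i in range(n) if sieve[i]]


def leading_digit_counts(numbers):
    """Count how often each digit 1..9 appears as the first digit."""
    counts = Counter(int(str(x)[0]) for x in numbers)
    return {d: counts.get(d, 0) for d in range(1, 10)}


def benford(d):
    """Classical Benford probability for leading digit d (1..9)."""
    return math.log10(1 + 1 / d)


if __name__ == "__main__":
    bound = 10**6  # illustrative bound, not taken from the study
    counts = leading_digit_counts(primes_below(bound))
    total = sum(counts.values())
    print("digit  primes   classical Benford")
    for d in range(1, 10):
        print(f"{d:>5}  {counts[d] / total:.4f}   {benford(d):.4f}")
```

Running it with different bounds shows how the observed frequencies shift as the range of primes changes, which is the kind of behaviour a generalization of Benford's law would need to account for.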

Hammack Home This book is an introduction to the standard methods of proving mathematical theorems. It has been approved by the American Institute of Mathematics' Open Textbook Initiative. Also see the Mathematical Association of America Math DL review (of the 1st edition), and the Amazon reviews. The second edition is identical to the first edition, except some mistakes have been corrected, new exercises have been added, and Chapter 13 has been extended. (The Cantor-Bernstein-Schröder theorem has been added.) Order a copy from Amazon or Barnes & Noble for $13.75 or download a pdf for free here. Part I: Fundamentals Part II: How to Prove Conditional Statements Part III: More on Proof Part IV: Relations, Functions and Cardinality Thanks to readers around the world who wrote to report mistakes and typos! Instructors: Click here for my page for VCU's MATH 300, a course based on this book.

Brain size and evolution - complexity, "behavioral complexity", and brain size From Serendip Organisms have indeed gotten more "complex" over evolutionary time, at least on a broad scale. Organisms differ in "behavioral complexity". Organisms differ in brain size. There is some relation between "behavioral complexity" and brain size, but humans do not have the largest brains. There is a better relation between "behavioral complexity" and brain size in relation to body size. from Harry J. Jerison, Paleoneurology and the Evolution of Mind, Scientific American, January, 1976. Why should such a relation exist? Is the slope of the line 2/3 (surface to volume) or 3/4 (metabolic rate)? I'm still more interested in whether there is life on Mars.

The human brain can create structures in up to 11 dimensions Neuroscientists have used a classic branch of maths in a totally new way to peer into the structure of our brains. What they've discovered is that the brain is full of multi-dimensional geometrical structures operating in as many as 11 dimensions. We're used to thinking of the world from a 3-D perspective, so this may sound a bit tricky, but the results of this new study could be the next major step in understanding the fabric of the human brain - the most complex structure we know of. This latest brain model was produced by a team of researchers from the Blue Brain Project, a Swiss research initiative devoted to building a supercomputer-powered reconstruction of the human brain. The team used algebraic topology, a branch of mathematics used to describe the properties of objects and spaces regardless of how they change shape. "We found a world that we had never imagined," says lead researcher, neuroscientist Henry Markram from the EPFL institute in Switzerland.

Max Planck Neuroscience on Nautilus: Surprising Network Activity in the Immature Brain One of the outstanding mysteries of the cerebral cortex is how individual neurons develop the proper synaptic connections to form large-scale, distributed networks. Now, an international team of scientists has gained novel insights from studying spontaneously generated patterns of activity arising from local connections in the early developing visual cortex. These early activity patterns serve as a template for the subsequent development of the long-range neural connections that are a defining feature of mature distributed networks. In a recently published Nature Neuroscience article, scientists at the Max Planck Florida Institute for Neuroscience, Frankfurt Institute for Advanced Studies, Goethe University of Frankfurt, and the University of Minnesota detail how they investigated the visual cortex of the ferret, an ideal model system to explore the early development of networks in the cortex. Lead image credit: sdecoret / Shutterstock

emotional intelligence | NeuroBollocks Prism Brain Mapping is an online assessment package that promises… Well… it promises all kinds of things, from “Enhanced selling skills” to “Developing female leaders” to “360 degree assessments”, whatever the hell that’s supposed to mean. It appears to be a pretty big deal, with practitioners all around the world and a certification program for new ‘practitioners’. So, what is it? It’s basically a re-packaging of some old psychometric tests with a neuroscience-y sounding spin. Or in their words: “It represents a simple, yet comprehensive, synthesis of research by some of the world’s leading neuroscientists into how the human brain works, and why people, who have similar backgrounds, intelligence, experience, skills, and knowledge, behave in very different ways.” The central idea seems to be to divide the brain up into four colour-coded segments, like so: …and then produce a matching colour-coded report that divides the responses up into several behavioural domains:

Time on the Brain: How You Are Always Living In the Past, and Other Quirks of Perception I always knew we humans have a rather tenuous grip on the concept of time, but I never realized quite how tenuous it was until a couple of weeks ago, when I attended a conference on the nature of time organized by the Foundational Questions Institute. This meeting, even more than FQXi’s previous efforts, was a mashup of different disciplines: fundamental physics, philosophy, neuroscience, complexity theory. Crossing academic disciplines may be overrated, as physicist-blogger Sabine Hossenfelder has pointed out, but it sure is fun. Like Sabine, I spend my days thinking about planets, dark matter, black holes—they have become mundane to me. Neuroscientist Kathleen McDermott of Washington University began by quoting famous memory researcher Endel Tulving, who called our ability to remember the past and to anticipate the future “mental time travel.” McDermott outlined the case of Patient K.C., who has even worse amnesia than the better-known H.M. on whom the film Memento was based.

How To Stop Checking Your Phone: 4 Secrets From Research If I told you we check our phones 5 billion times a minute you’d probably just shrug and agree. We’ve seen the shocking stats over and over and at this point no number would surprise us. But there’s one study that haunts me… Here’s what NYU professor Adam Alter told me: There’s a study that was done asking people, mainly young adults, to make a decision: if you had to break a bone or break your phone what would you prefer? May I suggest that this has finally gotten out of hand? We’re not looking at the problem correctly. You do not have a short attention span. Have you had multiple car crashes this week because you can’t pay attention to the road? See? That’s not a short attention span. This thing you call “your life” is made of memories. We have a “mind control” problem. So what do we do? Nah. Good. Well, I lied. 3) Mindfulness