Category:Machine learning. Machine Learning authors/titles recent submissions. Artificial Intelligence and Machine Learning.

A Gaussian Mixture Model Layer Jointly Optimized with Discriminative Features within A Deep Neural Network Architecture. Ehsan Variani, Erik McDermott, Georg Heigold. ICASSP, IEEE (2015).

Adaptation algorithm and theory based on generalized discrepancy. Corinna Cortes, Mehryar Mohri, Andrés Muñoz Medina. Proceedings of the 21st ACM Conference on Knowledge Discovery and Data Mining (KDD 2015).

Adding Third-Party Authentication to Open edX: A Case Study. John Cox, Pavel Simakov. Proceedings of the Second (2015) ACM Conference on Learning @ Scale, ACM, New York, NY, USA, pp. 277-280.

An Exploration of Parameter Redundancy in Deep Networks with Circulant Projections. Yu Cheng, Felix X.

Machine Learning (Theory). Metacademy. Machine Learning.

A new digital ecology is evolving, and humans are being left behind. This is an excellent point.

You mean something similar to Valve's fee on the Steam marketplace? They take a 10% cut of every transaction, no matter how big or small. Unfortunately, it (the Tobin tax mentioned in the article) is being resisted by some very powerful people. Not really, that's more akin to the capital gains tax already in place. Short-term sales (less than a year between purchase and sale) are taxed as regular income, while long-term sales (more than a year between purchase and sale) are taxed at a significantly lower rate. The problem is that because these taxes are based on a percentage, it's fairly easy for the algorithms to overcome the higher tax rate with small margins and massive trade volume.
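The difference between a percentage tax on gains and a per-transaction levy can be made concrete with a little arithmetic. The sketch below uses made-up figures (a $10,000 trade earning a 0.01% edge, a million trades a year, a 40% tax on gains, and a 0.1% levy on each trade's value); these are assumptions for illustration, not actual tax rates or real trading data, and the model ignores fees, losses, and holding periods. The function names are hypothetical.

```python
# Hypothetical comparison of two tax designs applied to a thin-margin,
# high-volume trading strategy. Every number below is an illustrative assumption.


def gross_profit(notional: float, margin: float, trades: int) -> float:
    """Pre-tax profit when each trade of size `notional` earns a fractional `margin`."""
    return notional * margin * trades


def net_after_gains_tax(notional: float, margin: float, trades: int, gains_rate: float) -> float:
    """A percentage tax on gains scales profit by (1 - gains_rate); it never flips the sign."""
    return gross_profit(notional, margin, trades) * (1.0 - gains_rate)


def net_after_transaction_tax(notional: float, margin: float, trades: int, txn_rate: float) -> float:
    """A Tobin-style levy takes a fraction of every trade's notional value, so the
    strategy loses money whenever margin < txn_rate, no matter how many trades it makes."""
    levy = notional * txn_rate * trades
    return gross_profit(notional, margin, trades) - levy


notional = 10_000.0  # hypothetical dollar value of each trade
margin = 0.0001      # hypothetical 0.01% edge per trade
trades = 1_000_000   # hypothetical number of trades per year

print(f"gross profit:          {gross_profit(notional, margin, trades):>14,.0f}")
print(f"after 40% gains tax:   {net_after_gains_tax(notional, margin, trades, 0.40):>14,.0f}")
print(f"after 0.1% trade levy: {net_after_transaction_tax(notional, margin, trades, 0.001):>14,.0f}")
```

With these made-up inputs the gains-taxed profit stays positive (about 600,000) while the per-trade levy turns it into a loss (about -9,000,000). That asymmetry is why a transaction tax, unlike a percentage tax on gains, can make thin-margin, high-volume strategies unprofitable.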

For the algorithms, however, this is a major roadblock.

Machine learning textbook.

Autopoietic Computing. Proposed by darklight@darkai.org on 12/30/2013. Reality augmented autopoietic social structures. A self-replicating machine is a machine that can make a copy of itself.
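A software analogue of a self-replicating machine is a quine: a program whose output is an exact copy of its own source code. The following is an illustrative Python sketch, not something taken from the Autopoietic Computing proposal; the variable name `s` is arbitrary, and the comment line is embedded in the string so that even the comment is reproduced.

```python
# A self-replicating (quine) program: running it prints its own source exactly.
s = '# A self-replicating (quine) program: running it prints its own source exactly.\ns = %r\nprint(s %% s)'
print(s % s)
```

Running it prints the same three lines. `%r` inserts the string's own `repr`, quotes and escaped newlines included, and `%%` collapses to a single `%`, so the printed `print(s % s)` line matches the last source line.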

Just as biological entities have DNA that operates on the principle of self-replication, the same fundamental process that takes place in biological organisms can take place in artificial lifeforms. This fundamental phenomenon allows robots to essentially create clones of themselves. Computing software protocols like Bitcoin rely on decentralized, self-replicating nodes to create a unified, shared blockchain that acts as the public ledger for the network.

Quantum Machine Learning Singularity from Google, Kurzweil and D-Wave? D-Wave's 512-qubit system can speed up the solution of Google's machine learning algorithms by a factor of 50,000 in 25% of the problem cases.

This could make it the fastest system for solving Google's problems.

Meet the algorithm that can learn “everything about anything”. The most recent advances in artificial intelligence research are pretty staggering, thanks in part to the abundance of data available on the web.

The Future of Machine Intelligence. Ben Goertzel, March 20, 2009.

In early March 2009, 100 intellectual adventurers journeyed from various corners of Europe, Asia, America and Australasia to the Crowne Plaza Hotel in Arlington, Virginia, to take part in the Second Conference on Artificial General Intelligence, AGI-09: a conference aimed explicitly at the grand goal of the AI field, the creation of thinking machines with general intelligence at the human level and ultimately beyond.

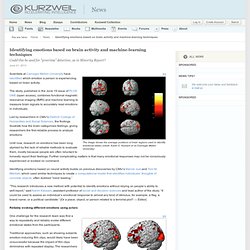

While the majority of the crowd hailed from academic institutions, major firms like Google, GE, AT&T and Autodesk were also represented, along with a substantial contingent of entrepreneurs involved with AI startups, and independent researchers. Since I was the chair of the conference and played a large role in its organization (along with a number of extremely competent and passionate colleagues), my opinion must be considered rather subjective... but, be that as it may, my strong feeling is that the conference was an unqualified success!

Identifying emotions based on brain activity and machine-learning techniques. The image shows the average positions of brain regions used to identify emotional states (credit: Karim S. Kassam et al./Carnegie Mellon University).

Scientists at Carnegie Mellon University have identified which emotion a person is experiencing based on brain activity. The study, published in the June 19 issue of PLOS ONE (open access), combines functional magnetic resonance imaging (fMRI) and machine learning to measure brain signals and accurately read emotions in individuals. Led by researchers in CMU’s Dietrich College of Humanities and Social Sciences, the findings illustrate how the brain categorizes feelings, giving researchers the first reliable process to analyze emotions. Until now, research on emotions has long been stymied by the lack of reliable methods to evaluate them, mostly because people are often reluctant to honestly report their feelings.

As Machines Get Smarter, Evidence They Learn Like Us. The brain performs its canonical task, learning, by tweaking its myriad connections according to a secret set of rules.

To unlock these secrets, scientists 30 years ago began developing computer models that try to replicate the learning process. Now, a growing number of experiments are revealing that these models behave strikingly similarly to actual brains when performing certain tasks. Researchers say the similarities suggest a basic correspondence between the brains’ and computers’ underlying learning algorithms.

Newest 'machine-learning' Questions.

An Introduction to Deep Learning (in Java): From Perceptrons to Deep Networks. In recent years, there’s been a resurgence in the field of Artificial Intelligence.

It’s spread beyond the academic world, with major players like Google, Microsoft, and Facebook creating their own research teams and making some impressive acquisitions. Some of this can be attributed to the abundance of raw data generated by social network users, much of which needs to be analyzed, to the rise of advanced data science solutions, and to the cheap computational power available via GPGPUs.

Baidu says its massive deep-learning system is nearly complete. Chinese search engine company Baidu is working on a massive computing cluster for deep learning that will be 100 times larger than the cat-recognizing system Google famously built in 2012 and that should be complete in six months, Baidu Chief Scientist and machine learning expert Andrew Ng told Bloomberg News in an article published on Wednesday.

The size Ng is referring to is measured in neural connections, not sheer server or node count, and will be achieved through heavy use of graphics processing units, or GPUs. That Baidu is at work on such a system is hardly surprising: Ng actually helped build that system at Google (as part of a project dubbed Google Brain) and has been one of the leading voices in the deep learning community for years.

Google preps wave of machine learning apps. Google is preparing to unleash a wave of apps that get intelligence from its mammoth machine learning models.

The apps will all rely on the neural networks Google has been developing internally to allow its systems to automatically classify information that has traditionally been tough for computers to parse. This includes human speech and unlabeled images, Jeffrey Dean, a fellow in Google's Systems Infrastructure Group who helped create MapReduce and GFS, told the GigaOm Structure conference in San Francisco on Wednesday.

Recommender Systems - past, present, and future. PyBrain. SDKs for building machine learning applications. Machine Learning cheat sheet. Machine learning in Python. School of Engineering - Stanford Engineering Everywhere.

Remembering objects lets computers learn like a child (05 June 2013).

Always seeing the world with fresh eyes can make it hard to find your way around. Giving computers the ability to recognise objects as they scan a new environment will let them navigate much more quickly and understand what they are seeing. Renato Salas-Moreno at Imperial College London and colleagues have added object recognition to a computer vision technique called simultaneous localisation and mapping (SLAM).

Twitter Data Analytics. Published by Springer. Shamanth Kumar, Fred Morstatter, and Huan Liu, Data Mining and Machine Learning Lab, School of Computing, Informatics, and Decision Systems Engineering, Arizona State University. Social media has become a major platform for information sharing.

Neural Network. Neural networks and deep learning.
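The perceptron is where tutorials such as "From Perceptrons to Deep Networks" begin. As a minimal sketch (in Python rather than that article's Java, and using the textbook Rosenblatt update rule rather than code from any source listed here; `predict` and `train_perceptron` are hypothetical names), a single perceptron can learn logical AND:

```python
# Minimal single-layer perceptron trained with the classic Rosenblatt update rule.


def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum plus bias is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, labels, epochs=20, lr=1.0):
    """Shift the weights toward each misclassified example.

    Starting from zero weights, in exact arithmetic a positive learning rate only
    rescales the weights, so lr=1.0 keeps the numbers exact without changing predictions.
    """
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Logical AND is linearly separable, so a single perceptron can learn it.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 0, 0, 1]
w, b = train_perceptron(X, y)
print([predict(w, b, x) for x in X])  # [0, 0, 0, 1]
```

A single perceptron cannot learn functions that are not linearly separable, such as XOR; stacking layers of such units, trained with other methods, is the step that leads toward deep networks.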

AIspace. These tools are for learning and exploring concepts in artificial intelligence. They were developed at the Laboratory for Computational Intelligence at the University of British Columbia under the direction of Alan Mackworth and David Poole. They are part of the online resources for Artificial Intelligence: Foundations of Computational Agents. If you are teaching or learning about AI, you may use these tools under the terms of use. Feedback is welcome.

Gaussian Processes for Machine Learning: Contents. Carl Edward Rasmussen and Christopher K. I. Williams. MIT Press, 2006. ISBN-10 0-262-18253-X, ISBN-13 978-0-262-18253-9.

This book is © Copyright 2006 by Massachusetts Institute of Technology. The MIT Press have kindly agreed to allow us to make the book available on the web. The whole book is available as a single PDF file.

To create a super-intelligent machine, start with an equation. Intelligence is a very difficult concept and, until recently, no one has succeeded in giving it a satisfactory formal definition. Most researchers have given up grappling with the notion of intelligence in full generality and instead focus on related but more limited concepts, but I argue that mathematically defining intelligence is not only possible, but crucial to understanding and developing super-intelligent machines.

Apache Mahout: Scalable machine learning and data mining. Machine learning. The International Machine Learning Society - About. Beijing.
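The "start with an equation" excerpt argues that intelligence can be defined mathematically. To make that claim concrete, here is one published formal definition, Legg and Hutter's universal intelligence measure; it is offered as an illustration and is not necessarily the equation the article itself presents.

```latex
\Upsilon(\pi) \;=\; \sum_{\mu \in E} 2^{-K(\mu)}\, V^{\pi}_{\mu},
\qquad
V^{\pi}_{\mu} \;=\; \mathbb{E}\!\left[\sum_{i=1}^{\infty} r_i\right] \le 1
```

Here π is the agent, E is the set of computable environments, K(μ) is the Kolmogorov complexity of environment μ (so simpler environments carry more weight), and V^π_μ is the agent's expected total reward in μ, with rewards bounded so that this sum is at most 1. An agent scores highly only if it performs well across many environments, weighted toward the simple ones.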