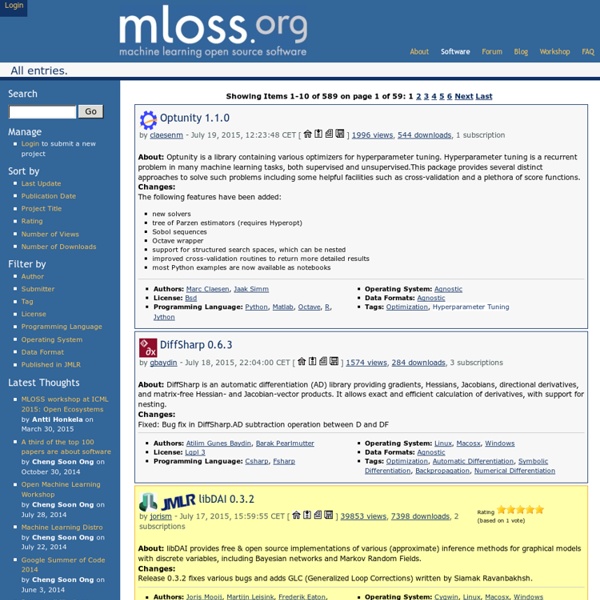

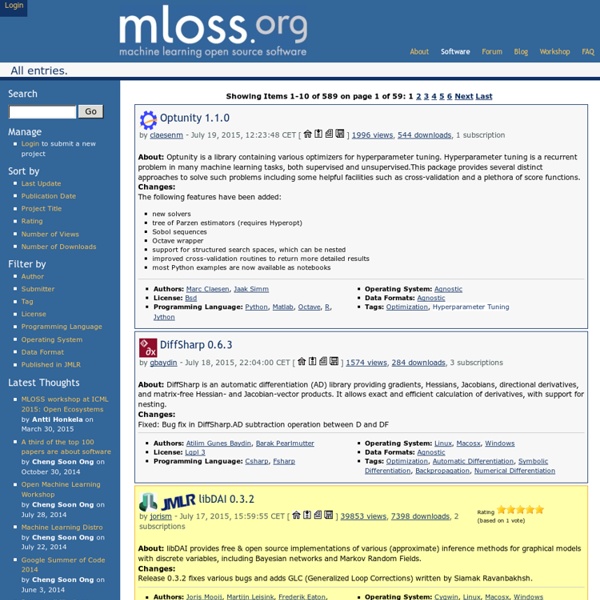


Dirichlet Process, Infinite Mixture Models, and Clustering

    # Generate some fake data with some uniform random means
    generateFakeData <- function( num.vars=3, n=100, num.clusters=5, seed=NULL ) {
      if(is.null(seed)){
        set.seed(runif(1,0,100))
      } else {
        set.seed(seed)
      }
      data <- data.frame(matrix(NA, nrow=n, ncol=num.vars+1))
      mu <- NULL
      for(m in 1:num.vars){
        mu <- cbind(mu, rnorm(num.clusters, runif(1,-10,15), 5))
      }
      for (i in 1:n) {
        cluster <- sample(1:num.clusters, 1)
        data[i, 1] <- cluster
        for(j in 1:num.vars){
          data[i, j+1] <- rnorm(1, mu[cluster,j], 1)
        }
      }
      data$X1 <- factor(data$X1)
      var.names <- paste("VAR", seq(1,ncol(data)-1), sep="")
      names(data) <- c("cluster", var.names)
      return(data)
    }

    # Set up a procedure to calculate the cluster means using squared distance
    dirichletClusters <- function(orig.data, disp.param = NULL, max.iter = 100, tolerance = .001) {
      n <- nrow( orig.data )
      data <- as.matrix( orig.data )
      pick.clusters <- rep(1, n)
      k <- 1
      mu <- matrix( apply(data,2,mean), nrow=1, ncol=ncol(data) )
      is.converged <- FALSE
      iteration <- 0
      ss.old <- Inf
      ss.curr <- Inf
      # The main loop is cut off in the source; only these fragments survive:
      #   while ( ! ...
      #     k <- k + 1
    }
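The listing above breaks off inside the main loop, so the following is only a minimal sketch of how such a loop could look. It assumes a DP-means-style hard clustering and is not the original author's code. The function name `dpMeansSketch` and the parameter `lambda` (a squared-distance threshold that plays the role of `disp.param`) are hypothetical names introduced for illustration.

```r
# Hypothetical sketch of a DP-means-style loop; NOT the original author's code.
# `lambda` is an assumed squared-distance threshold for opening a new cluster.
dpMeansSketch <- function(x, lambda, max.iter = 100, tolerance = 1e-3) {
  x <- as.matrix(x)
  n <- nrow(x)
  assignments <- rep(1L, n)
  mu <- matrix(colMeans(x), nrow = 1)  # start with one cluster at the grand mean
  ss.old <- Inf
  for (iteration in seq_len(max.iter)) {
    for (i in seq_len(n)) {
      # Squared Euclidean distance from observation i to every current center
      xi <- matrix(x[i, ], nrow(mu), ncol(x), byrow = TRUE)
      d <- rowSums((mu - xi)^2)
      if (min(d) > lambda) {
        mu <- rbind(mu, x[i, ])        # too far from all centers: open a new cluster
        assignments[i] <- nrow(mu)
      } else {
        assignments[i] <- which.min(d)
      }
    }
    # Drop clusters that ended up empty and relabel the rest as 1..k
    used <- sort(unique(assignments))
    assignments <- match(assignments, used)
    # Recompute each center as the mean of its members
    mu <- do.call(rbind, lapply(seq_along(used), function(j) {
      colMeans(x[assignments == j, , drop = FALSE])
    }))
    # Objective: within-cluster sum of squares plus a penalty of lambda per cluster
    ss.curr <- sum((x - mu[assignments, , drop = FALSE])^2) + lambda * nrow(mu)
    if (abs(ss.old - ss.curr) < tolerance) break
    ss.old <- ss.curr
  }
  list(centers = mu, clusters = assignments, iterations = iteration)
}

# Two small, well-separated groups of points in two dimensions
x <- rbind(
  cbind(c(0, 0.1, -0.1, 0, 0.2),  c(0, 0.1, 0, -0.1, 0)),
  cbind(c(10, 10.1, 9.9, 10, 10.2), c(10, 10.1, 10, 9.9, 10))
)
fit <- dpMeansSketch(x, lambda = 25)
```

In this sketch the number of clusters is not fixed in advance: a point opens a new cluster whenever its squared distance to every existing center exceeds `lambda`. A larger `lambda` therefore tends to give fewer clusters, and a smaller one gives more.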
