Mechanize. Stateful programmatic web browsing in Python, after Andy Lester’s Perl module WWW::Mechanize.

The examples below are written for a website that does not exist (example.com), so they cannot be run. There are also some working examples that you can run.

    import re
    import mechanize

    br = mechanize.Browser()
    br.open("
    # follow second link with element text matching regular expression
    response1 = br.follow_link(text_regex=r"cheese\s*shop", nr=1)
    assert br.viewing_html()
    print br.title()
    print response1.geturl()
    print response1.info()  # headers
    print response1.read()  # body

    br.select_form(name="order")
    # Browser passes through unknown attributes (including methods)
    # to the selected HTMLForm.
    br["cheeses"] = ["mozzarella", "caerphilly"]  # (the method here is __setitem__)
    # Submit current form.

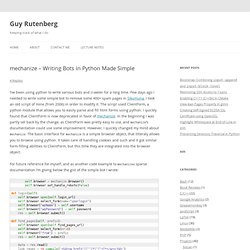

Mechanize – Writing Bots in Python Made Simple by Guy Rutenberg. I’ve been using Python to write various bots and crawlers for a long time.

A few days ago I needed to write a simple bot to remove some 400+ spam pages in Sikumuna. I took an old script of mine (from 2006) in order to modify it. The script used ClientForm, a Python module that allows you to easily parse and fill HTML forms. I quickly found that ClientForm is now deprecated in favor of mechanize. In the beginning I was somewhat put off by the change, as ClientForm was pretty easy to use, and mechanize’s documentation could use some improvement.

However, I quickly changed my mind about mechanize. For future reference for myself, and as another code example to supplement mechanize’s sparse documentation, I’m giving below the gist of the simple bot I wrote. This isn’t a complete code example, as the rest of the code is just mundane, but you can clearly see how simple it is to use mechanize.
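The bot's original code did not survive in this excerpt, so what follows is only a minimal sketch of the kind of bot described: log in once, then open each page and submit its first form. The function names, the form positions (`nr=0`) and the field names `username` and `password` are hypothetical; a real site will use its own. The browser is passed in as an argument so the logic can be exercised without network access.

```python
def make_browser():
    """Create a mechanize.Browser (imported lazily; requires the mechanize package)."""
    import mechanize
    return mechanize.Browser()


def remove_pages(browser, login_url, username, password, page_urls):
    """Log in, then submit the first form found on each page in page_urls.

    `browser` is expected to behave like mechanize.Browser: it needs
    open(), select_form(), item assignment for form fields, and submit().
    """
    # Log in. Assumption: the login form is the first form on the page
    # and its fields are called "username" and "password".
    browser.open(login_url)
    browser.select_form(nr=0)
    browser["username"] = username
    browser["password"] = password
    browser.submit()

    processed = []
    for url in page_urls:
        browser.open(url)
        # Assumption: the first form on each page is the delete confirmation.
        browser.select_form(nr=0)
        browser.submit()
        processed.append(url)
    return processed
```

With mechanize installed, a call might look like `remove_pages(make_browser(), login_url, "bot", "secret", urls)`, where all four values are placeholders for the target site's own details.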

Mechanize — Documentation. Full API documentation is in the docstrings and the documentation of urllib2.

The documentation in these web pages is in need of reorganisation at the moment, after the merge of ClientCookie and ClientForm into mechanize.

Tests and examples

Examples

The front page has some introductory examples. The examples directory in the source packages contains a couple of silly, but working, scripts to demonstrate basic use of the module. See also the forms examples (these examples use the forms API independently of mechanize.Browser).

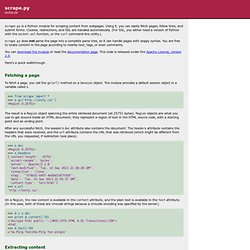

Tests

To run the tests:

    python test.py

There are some tests that try to fetch URLs from the internet.

    python test.py discover --tag internet

The urllib2 interface

mechanize exports the complete interface of urllib2.

    import mechanize
    response = mechanize.urlopen("
    response.read()

Compatibility

These notes explain the relationship between mechanize, ClientCookie, ClientForm, cookielib and urllib2, and which to use when. API differences between mechanize and urllib2:

Julian_Todd / Python mechanize cheat sheet.

Scrape.py. Scrape.py is a Python module for scraping content from webpages.

Using it, you can easily fetch pages, follow links, and submit forms. Cookies, redirections, and SSL are handled automatically. (For SSL, you either need a version of Python with the socket.ssl function, or the curl command-line utility.)

Webscraping with Python. WebScraping. Documentation. Documentation / 3rd party libraries.

Beautiful Soup: We called him Tortoise because he taught us. You didn't write that awful page. You're just trying to get some data out of it. Beautiful Soup is here to help. Since 2004, it's been saving programmers hours or days of work on quick-turnaround screen scraping projects.

Beautiful Soup is a Python library designed for quick turnaround projects like screen-scraping. Beautiful Soup provides a few simple methods and Pythonic idioms for navigating, searching, and modifying a parse tree: a toolkit for dissecting a document and extracting what you need. Beautiful Soup parses anything you give it, and does the tree traversal stuff for you. Valuable data that was once locked up in poorly-designed websites is now within your reach.

Getting and giving support

If you have questions, send them to the discussion group. If you use Beautiful Soup as part of your work, please consider a Tidelift subscription.
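To make the "navigating, searching, and modifying a parse tree" description above concrete, here is a small sketch. It assumes the `beautifulsoup4` package is installed (imported as `bs4`) and uses Python's built-in `html.parser`. The HTML snippet and its URLs are invented for illustration.

```python
from bs4 import BeautifulSoup

# Made-up page content, used only to demonstrate the API.
html = """
<html><head><title>Cheese Shop</title></head>
<body>
<p class="intro">We stock <a href="/cheddar">cheddar</a> and
<a href="/brie">brie</a>.</p>
</body></html>
"""

soup = BeautifulSoup(html, "html.parser")

# Navigating: attribute access walks down to the first matching tag.
title = soup.title.string

# Searching: find_all() returns every matching tag in document order.
links = [a["href"] for a in soup.find_all("a")]

# Modifying: assigning to .string replaces a tag's text content.
first_link = soup.find("a")
first_link.string = "Cheddar"

print(title)
print(links)
print(first_link)
```

The same three operations apply to pages fetched with mechanize: the body returned by `response.read()` can be handed to `BeautifulSoup` in place of the literal string used here.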