Synaptic Web. Intro to scikit-learn (II), SciPy2013 Tutorial, Part 1 of 2. Swarm intelligence. Swarm intelligence (SI) is the collective behavior of decentralized, self-organized systems, natural or artificial.

The concept is employed in work on artificial intelligence. The expression was introduced by Gerardo Beni and Jing Wang in 1989, in the context of cellular robotic systems.[1] The application of swarm principles to robots is called swarm robotics, while 'swarm intelligence' refers to the more general set of algorithms. 'Swarm prediction' has been used in the context of forecasting problems.

Example algorithms: particle swarm optimization, ant colony optimization, artificial bee colony algorithm, bacterial colony optimization, differential evolution. The artificial bee colony (ABC) algorithm is a meta-heuristic algorithm introduced by Karaboga in 2005[5] that simulates the foraging behaviour of honey bees. Differential evolution is similar to genetic algorithms and pattern search. Machine Learning Cheat Sheet (for scikit-learn). Neural Networks for Machine Learning.

Easy, High-Level Introduction. By Pete McCollum Saipan59@juno.com Introduction This article focuses on a particular type of neural network model, known as a "feed-forward back-propagation network".

This model is easy to understand and can be readily implemented as a software simulation. First we will discuss the basic concepts behind this type of NN, then we'll get into some of the more practical application ideas. Complex Problems. The field of neural networks is related to artificial intelligence, machine learning, parallel processing, statistics, and other fields. Consider an image processing task such as recognizing an everyday object projected against a background of other objects. A fundamental difference between the image recognition problem and the addition problem is that the former is best solved in a parallel fashion, while simple mathematics is best done serially. The Feed-Forward Neural Network Model. When to use (or not!). Robotics Applications. Low-level w/ full algorithms. A single-layer network has severe restrictions: the class of tasks that can be accomplished is very limited.

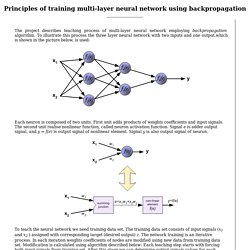

In this chapter we will focus on feed-forward networks with layers of processing units. Minsky and Papert (Minsky & Papert, 1969) showed that a two-layer feed-forward network can overcome many restrictions, but did not present a solution to the problem of how to adjust the weights from input to hidden units. An answer to this question was presented by Rumelhart, Hinton and Williams in 1986 (Rumelhart, Hinton, & Williams, 1986), and similar solutions appear to have been published earlier (Werbos, 1974; Parker, 1985; Cun, 1985). More low-level w/ great visuals. The project describes the teaching process of a multi-layer neural network employing the backpropagation algorithm.

To illustrate this process, a three-layer neural network with two inputs and one output, shown in the picture below, is used. Each neuron is composed of two units. The first unit adds the products of weight coefficients and input signals. The second unit realises a nonlinear function, called the neuron activation function. Signal e is the adder output signal, and y = f(e) is the output signal of the nonlinear element. To teach the neural network we need a training data set. Propagation of signals through the hidden layer. OCR w/ Ruby. Introduction to Neural Networks. The utility of artificial neural network models lies in the fact that they can be used to infer a function from observations.
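The two-unit neuron described in the backpropagation walkthrough (an adder producing e, followed by an activation producing y = f(e)) can be sketched in a few lines of Python. This is only an illustration: the sigmoid activation, the layer sizes (3, 2 and 1 neurons) and every weight value are assumptions chosen for the example, not taken from the original project.

```python
import math


def sigmoid(e):
    """Assumed activation function f; the source does not name one."""
    return 1.0 / (1.0 + math.exp(-e))


def neuron(weights, inputs, activation=sigmoid):
    """One neuron: the adder computes e, the nonlinear unit returns y = f(e)."""
    e = sum(w * x for w, x in zip(weights, inputs))
    return activation(e)


def forward(layers, inputs):
    """Propagate the input signals through each layer in turn."""
    signal = list(inputs)
    for layer in layers:
        signal = [neuron(weights, signal) for weights in layer]
    return signal


# Hypothetical weights: two inputs -> 3 neurons -> 2 neurons -> 1 output.
layers = [
    [[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]],
    [[0.2, -0.7, 0.5], [0.9, 0.4, -0.3]],
    [[0.6, -0.5]],
]

output = forward(layers, [1.0, 0.0])
print(output)
```

Each inner list holds the weights of one neuron, so a layer is simply a list of neurons. Training would adjust these weights from the error at the output, which this sketch does not attempt.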

This is particularly useful in applications where the complexity of the data or task makes the design of such a function by hand impractical. Neural Networks are being used in many businesses and applications. Their ability to learn by example makes them attractive in environments where the business rules are either not well defined or are hard to enumerate and define. Many people believe that Neural Networks can only solve toy problems. In this module you will find an implementation of neural networks using the Backpropagation algorithm. Backpropagation is a supervised learning technique (described by Paul Werbos in 1974, and further developed by David E. Modeling the OCR problem using Neural Networks. Let's imagine that we have to implement a program to identify simple patterns (triangles, squares, crosses, etc).

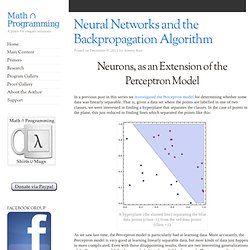

Neurons w/ Python. Neurons, as an Extension of the Perceptron Model In a previous post in this series we investigated the Perceptron model for determining whether some data was linearly separable.

That is, given a data set where the points are labelled in one of two classes, we were interested in finding a hyperplane that separates the classes. In the case of points in the plane, this just reduced to finding lines which separated the points like this: A hyperplane (the slanted line) separating the blue data points (class -1) from the red data points (class +1) As we saw last time, the Perceptron model is particularly bad at learning data.
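As a rough sketch of the hyperplane search described above, the classic perceptron update rule can be written as follows. The function names, the toy data points and the epoch limit are all assumptions made for illustration; this is not the code from the original post.

```python
def predict(weights, bias, point):
    """Return +1 or -1 depending on which side of the hyperplane the point lies."""
    s = sum(w * x for w, x in zip(weights, point)) + bias
    return 1 if s >= 0 else -1


def train_perceptron(points, labels, epochs=100):
    """Perceptron rule: nudge the hyperplane toward each misclassified point."""
    weights = [0.0] * len(points[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for point, label in zip(points, labels):
            if predict(weights, bias, point) != label:
                weights = [w + label * x for w, x in zip(weights, point)]
                bias += label
                mistakes += 1
        if mistakes == 0:
            # Every point is on the correct side, so a separating line was found.
            break
    return weights, bias


# Hypothetical, linearly separable toy data in the plane.
points = [(2.0, 3.0), (3.0, 4.0), (-1.0, -2.0), (-2.0, -1.0)]
labels = [1, 1, -1, -1]

weights, bias = train_perceptron(points, labels)
print(weights, bias)
```

The loop stops early only when the data are linearly separable; otherwise it simply runs out of epochs, which is one way to see the model's limits.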

PyBrain. Competitions. Scikit-learn.