Machine Learning Video Library - Learning From Data (Abu-Mostafa) Machine Learning Cheat Sheet (for scikit-learn) The sport of data science. Machine Learning Repository: Data Sets. Neural Networks for Machine Learning - View Thread. Learning From Data - Fall Session. More resources for deep learning. Gnumpy. (Russian / Romanian / Belarussian translations by various people) Gnumpy is free software, but if you use it in scientific work that gets published, you should cite this tech report in your publication.

Download: gnumpy.py (also be sure to have the most recent version of Cudamat). Documentation: here. Do you want both the compute power of GPUs and the programming convenience of Python numpy? Gnumpy + Cudamat will bring you that. Gnumpy is a simple Python module that interfaces in a way almost identical to numpy, but does its computations on your computer's GPU.

Gnumpy runs on top of, and therefore requires, the excellent cudamat library, written by Vlad Mnih. Gnumpy can run in simulation mode: everything happens on the CPU, but the interface is the same. See also this presentation by Xavier Arrufat, introducing numpy at the Python for Data Analysis meetup in Barcelona, 2013. Recent changes: Home page. The Next Generation of Neural Networks. U.S. Census Return Rate Challenge (Visualization Competition) Note: The prediction phase of this competition has ended.

Please join the visualization competition which ends on Nov. 11, 2012. This challenge is to develop a statistical model to predict census mail return rates at the Census block group level of geography. The Census Bureau will use this model for planning purposes for the decennial census and for demographic sample surveys. The model-based estimates of predicted mail return will be publicly released in a later version of the Census "planning database" containing updated demographic data. Participants are encouraged to develop and evaluate different statistical approaches to proposing the best predictive model for geographic units. Please note also that as described in the rules, only US citizens and residents are eligible for prizes.

GE Quest - Let the Quests Begin! Neural Networks for Machine Learning - View Thread. Introduction to Information Retrieval. Resources for neural networks. CosmoLearning Computer Science. Machine Learning Repository. Www.ipam.ucla.edu/publications/gss2012/gss2012_10595.pdf.

Kaggle: making data science a sport. PyBrain. Deep Belief Networks. A tutorial on Deep Learning. Complex probabilistic models of unlabeled data can be created by combining simpler models.

Mixture models are obtained by averaging the densities of simpler models and "products of experts" are obtained by multiplying the densities together and renormalizing. A far more powerful type of combination is to form a "composition of experts" by treating the values of the latent variables of one model as the data for learning the next model.
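The difference between averaging and multiplying can be seen in a small sketch. It assumes two hypothetical "experts" that are discrete distributions over three outcomes (the tutorial abstract speaks of densities in general); the function names and numbers are illustrative only.

```python
def mixture(p, q, weight=0.5):
    """Mixture model: a weighted average of two distributions."""
    return [weight * a + (1.0 - weight) * b for a, b in zip(p, q)]


def product_of_experts(p, q):
    """Product of experts: multiply elementwise, then renormalize to sum to 1."""
    unnormalized = [a * b for a, b in zip(p, q)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


# Two hypothetical experts over the same three outcomes (assumed values).
expert_a = [0.7, 0.2, 0.1]
expert_b = [0.6, 0.1, 0.3]

mix = mixture(expert_a, expert_b)
poe = product_of_experts(expert_a, expert_b)

print("mixture:", mix)
print("product of experts:", poe)
```

In this example both experts favour the first outcome, so the product concentrates more probability on it than the mixture does: an outcome must be plausible under every expert to keep a high probability after multiplication.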

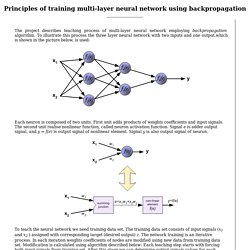

The first half of the tutorial will show how deep belief nets -- directed generative models with many layers of hidden variables -- can be learned one layer at a time by composing simple, undirected, product-of-experts models that have only one hidden layer. It will also explain why composing directed models does not work. Deep Learning. Backpropagation. The project describes the teaching process of a multi-layer neural network employing the backpropagation algorithm.

To illustrate this process, a three-layer neural network with two inputs and one output, shown in the picture below, is used. Each neuron is composed of two units. The first unit adds the products of the weight coefficients and the input signals. The second unit realises a nonlinear function, called the neuron activation function. PyBrain. Introduction to Neural Networks. CS-449: Neural Networks, Fall 99. Instructor: Genevieve Orr, Willamette University. Lecture notes prepared by Genevieve Orr, Nici Schraudolph, and Fred Cummins.
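The two-unit neuron from the backpropagation description above (a summing unit followed by an activation unit) can be sketched as follows. The sigmoid is an assumed choice of activation function, since the snippet does not name one, and the weights and inputs are made-up values.

```python
import math


def weighted_sum(weights, inputs):
    """First unit: add the products of weight coefficients and input signals."""
    return sum(w * x for w, x in zip(weights, inputs))


def sigmoid(e):
    """Second unit: a nonlinear activation function (assumed here to be a sigmoid)."""
    return 1.0 / (1.0 + math.exp(-e))


def neuron(weights, inputs, activation=sigmoid):
    """A neuron is the composition of the two units."""
    return activation(weighted_sum(weights, inputs))


# Two inputs, as in the network described above; the weights are hypothetical.
y = neuron([0.5, -0.5], [1.0, 1.0])
print(y)
```

With these values the weighted sum is zero, so the sigmoid returns its midpoint.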

An introduction to neural networks. Andrew Blais, Ph.D. (onlymice@gnosis.cx), David Mertz, Ph.D. (mertz@gnosis.cx), Gnosis Software, Inc., June 2001. A convenient way to introduce neural nets is with a puzzle that they can be used to solve. Suppose that you are given, for example, 500 characters of code that you know to be either C, C++, Java or Python. According to a simplified account, the human brain consists of about ten billion neurons, and a neuron is, on average, connected to several thousand other neurons. Threshold logic units: The first step toward neural nets is to abstract from the biological neuron, and to focus on its character as a threshold logic unit (TLU). Neural Network Basics. David Leverington, Associate Professor of Geosciences. The Feedforward Backpropagation Neural Network Algorithm. Although the long-term goal of the neural-network community remains the design of autonomous machine intelligence, the main modern application of artificial neural networks is in the field of pattern recognition (e.g., Joshi et al., 1997).

In the sub-field of data classification, neural-network methods have been found to be useful alternatives to statistical techniques such as those which involve regression analysis or probability density estimation (e.g., Holmström et al., 1997). UFLDL Tutorial - Ufldl. Comp.ai.neural-nets FAQ, Part 1 of 7: Introduction. Learning From Data - Online Course. A real Caltech course, not a watered-down version on YouTube & iTunes. Free, introductory Machine Learning online course (MOOC). Taught by Caltech Professor Yaser Abu-Mostafa. Lectures recorded from a live broadcast, including Q&A. Prerequisites: basic probability, matrices, and calculus. 8 homework sets and a final exam. Discussion forum for participants. Topic-by-topic video library for easy review. Outline: This is an introductory course in machine learning (ML) that covers the basic theory, algorithms, and applications.

ML is a key technology in Big Data, and in many financial, medical, commercial, and scientific applications. Deep Learning Tutorial - www.socher.org. Slides Updated Version of Tutorial at NAACL 2013 See Videos.

Thoughts on Machine Learning – the statistical software R. This is going to be an ongoing article series about various aspects of Machine Learning. In the first post of the series I’m going to explain why I decided to learn and use R, and why it is probably the best statistical software for Machine Learning at this time. R vs. popular programming languages like Java: Implementing Machine Learning algorithms is not an easy task because it requires a deep understanding of the inner workings of the algorithm. Furthermore, the details of how a Machine Learning algorithm is implemented can make a big difference, because applying advanced mathematical tricks can enhance the performance of the algorithm substantially. Machine learning in Python — scikit-learn 0.12.1 documentation. A Brief Introduction to Neural Networks · D. Kriesel. Manuscript Download - Zeta2 Version. Filenames are subject to change.

Thus, if you place links, please do so with this subpage as target. Original Version? EBookReader Version? The original version is the two-column layout you've been used to. For print, the eBookReader version obviously is less attractive. During every release process from now on, the eBookReader version is going to be automatically generated from the original content. Linear Classification - The Perceptron (Abu-Mostafa). Neural Networks. Artificial Neural Networks: Architectures and Applications by Kenji Suzuki (ed.) - InTech, 2013. Artificial neural networks may be the single most successful technology in the last two decades.

The purpose of this book is to provide recent advances in architectures, methodologies, and applications of artificial neural networks. CSC321 Winter 2012: lectures. Tuesday January 8: First class meeting. Explaining how the course will be taught. For the rest of this schedule, students are to study the listed material before the class meeting. Python Vs. Octave. [Python] Neuron Module. Basic Perceptron Learning for AND Gate. Mathesaurus. Deep Learning Tutorials — DeepLearning v0.1 documentation.
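As a rough illustration of the threshold logic unit and the "Basic Perceptron Learning for AND Gate" entry listed above, here is a minimal sketch of the classic perceptron learning rule. It is not taken from any of the linked pages; the function names, the learning rate of 1.0, and the zero initial weights are all assumptions made for the example.

```python
def tlu(weights, threshold, inputs):
    """Threshold logic unit: fire (1) when the weighted sum reaches the threshold."""
    activation = sum(w * x for w, x in zip(weights, inputs))
    return 1 if activation >= threshold else 0


def train_perceptron(samples, learning_rate=1.0, max_epochs=100):
    """Perceptron rule: nudge weights and threshold after each misclassified sample."""
    weights = [0.0, 0.0]   # assumed starting point
    threshold = 0.0
    for _ in range(max_epochs):
        errors = 0
        for inputs, target in samples:
            error = target - tlu(weights, threshold, inputs)
            if error != 0:
                errors += 1
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                threshold -= learning_rate * error
        if errors == 0:    # every sample classified correctly, stop early
            break
    return weights, threshold


# Truth table of the AND gate: (inputs, expected output).
AND_GATE = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, threshold = train_perceptron(AND_GATE)
predictions = [tlu(weights, threshold, inputs) for inputs, _ in AND_GATE]
print(weights, threshold, predictions)
```

Because AND is linearly separable, a single unit is enough; the same loop would not converge on a function such as XOR.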