President Biden Is Man, Woman And 40 Years Old - Why We Need Algorithmic Transparency. Did you know that the German Chancellor Angela Merkel is a boy and that Joe Biden is both a "man" and a "woman"?

Those are the insights you will get if you feed Angela and Joe's images into Microsoft's Azure Vision API. Following some online mockery about Angela Merkel's misjudged gender, Microsoft changed its algorithm, and Angela was female again. All good? Far from it!

Mathematicians Boycott Police Work. Mathematicians at universities across the country are halting collaborations with police departments across the U.S.

A June 15 letter was sent to the trade journal Notices of the American Mathematical Society, announcing the boycott. Typically, mathematicians work with police departments to build algorithms, conduct modeling work, and analyze data.

Several prominent academic mathematicians want to sever ties with police departments across the U.S., according to a letter submitted to Notices of the American Mathematical Society on June 15. The letter arrived weeks after widespread protests against police brutality, and has inspired over 1,500 other researchers to join the boycott. These mathematicians are urging fellow researchers to stop all work related to predictive policing software, which broadly includes any data analytics tools that use historical data to help forecast future crime, potential offenders, and victims.

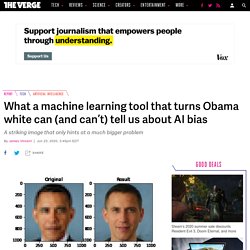

What a machine learning tool that turns Obama white can (and can’t) tell us about AI bias. It’s a startling image that illustrates the deep-rooted biases of AI research.

Input a low-resolution picture of Barack Obama, the first black president of the United States, into an algorithm designed to generate depixelated faces, and the output is a white man. It’s not just Obama, either. Get the same algorithm to generate high-resolution images of actress Lucy Liu or congresswoman Alexandria Ocasio-Cortez from low-resolution inputs, and the resulting faces look distinctly white. As one popular tweet quoting the Obama example put it: “This image speaks volumes about the dangers of bias in AI.”

Mathematicians urge cutting ties with police. Nationwide protests against anti-Black racism and police violence have sparked demands and reckonings in several corners of higher education and academe.

The latest to join the fray is the discipline of mathematics.

Understanding and Reducing Bias in Machine Learning. ‘.. even after the observation of the frequent or constant conjunction of objects, we have no reason to draw any inference concerning any object beyond those of which we have had experience’ (Hume, A Treatise of Human Nature)

Mireille Hildebrandt, a lawyer and philosopher working at the intersection of law and technology, has written and spoken extensively on the issue of bias and fairness in machine learning algorithms.

In an upcoming paper on agnostic and bias-free machine learning (Hildebrandt, 2019), she argues that bias-free machine learning doesn’t exist and that a productive bias is necessary for an algorithm to be able to model the data and make relevant predictions. The three major types of bias that can occur in a predictive system can be laid out as:

Healthcare Algorithms Are Biased, and the Results Can Be Deadly. In October 2019, a group of researchers from several universities published a damning revelation: a commercial algorithm widely used by health organizations was biased against black patients.

The algorithm, later identified as being provided by health-services company Optum, helped providers determine which patients were eligible for extra care. According to the researchers' findings, the algorithm gave higher priority to white patients when it came to treating complex conditions, including diabetes and kidney problems.

A biased medical algorithm favored white people for health-care programs. A study has highlighted the risks inherent in using historical data to train machine-learning algorithms to make predictions.

The news: An algorithm that many US health providers use to predict which patients will most need extra medical care privileged white patients over black patients, according to researchers at UC Berkeley, whose study was published in Science. Effectively, it bumped whites up the queue for special treatments for complex conditions like kidney problems or diabetes.

The study: The researchers dug through almost 50,000 records from a large, undisclosed academic hospital. They found that white patients were given higher risk scores, and were therefore more likely to be selected for extra care (like more nursing or dedicated appointments), than black patients who were in fact equally sick. The researchers calculated that the bias cut the proportion of black patients who got extra help by more than half.

Five Cognitive Biases In Data Science (And how to avoid them)

Retrain your mind.

Recently, I was reading Rolf Dobelli’s The Art of Thinking Clearly, which made me think about cognitive biases in a way I never had before.

Twelve Million Phones, One Dataset, Zero Privacy. These are the actual locations for millions of Americans.

At the New York Stock Exchange … in the beachfront neighborhoods of Los Angeles … in secure facilities like the Pentagon … at the White House … and at Mar-a-Lago, President Trump’s Palm Beach resort.

One nation, tracked. An investigation into the smartphone tracking industry from Times Opinion.