Wiki Homepage - Spark. Welcome to the Apache Spark wiki.

This wiki is for pages that change frequently or benefit from being easier to edit than pages on the Spark website.

Spark-Job-Tools. Spark Rule Systems. Rule-Pattern How2s. GitHub - mikeaddison93/sbt-spark-submit: sbt plugin for spark-submit. Start/Deploy Apache Spark application programmatically using spark launcher. Sometimes we need to start our Spark application from another Scala/Java application.

Spark-EMR-Hadoop. Scala-Spark-Blogs. Spark Submit Templates. Spark Packages. DataFrames. Complex Event Processing (CEP) SPARQL Rule and Pruning. Spark-SQL and SPARQL. SparkR. Spark-Web-Frameworks. Performance and Optimization. Tableau - Spark SQL. Mikeaddison93/spark-avro. Mikeaddison93/sparql-playground. Submit a Streaming Step - Amazon Elastic MapReduce. This section covers the basics of submitting a Streaming step to a cluster.

A Streaming application reads input from standard input and then runs a script or executable (called a mapper) against each input. The result from each of the inputs is saved locally, typically on a Hadoop Distributed File System (HDFS) partition. After all the input is processed by the mapper, a second script or executable (called a reducer) processes the mapper results. The results from the reducer are sent to standard output. You can chain together a series of Streaming steps, where the output of one step becomes the input of another step.
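The mapper/reducer contract described above can be illustrated with the classic word count. This is a minimal sketch, not the AWS example itself. It assumes tab-separated `key<TAB>value` records, which is the Hadoop Streaming default. The function names are hypothetical. `run_locally` only imitates the framework's sort-by-key between the two phases, so the logic can be tested without a cluster.

```python
from itertools import groupby
from typing import Iterable, Iterator, List


def mapper(lines: Iterable[str]) -> Iterator[str]:
    """Mapper: emit one 'word<TAB>1' record per word in each input line."""
    for line in lines:
        for word in line.lower().split():
            yield f"{word}\t1"


def reducer(sorted_records: Iterable[str]) -> Iterator[str]:
    """Reducer: sum the counts for each word; records must arrive sorted by key."""
    pairs = (record.rstrip("\n").split("\t", 1) for record in sorted_records)
    for word, group in groupby(pairs, key=lambda pair: pair[0]):
        yield f"{word}\t{sum(int(count) for _, count in group)}"


def run_locally(lines: Iterable[str]) -> List[str]:
    """Simulate one Streaming step in-process: map, sort by key, then reduce."""
    mapped = sorted(mapper(lines), key=lambda record: record.split("\t", 1)[0])
    return list(reducer(mapped))
```

In a real Streaming step these would be two separate scripts. One would print every record of `mapper(sys.stdin)` and the other every record of `reducer(sys.stdin)`, and the framework would perform the sort between them.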

The mapper and the reducer can each be referenced as a file or you can supply a Java class. Submit a Streaming Step Using the Console. Bigtop - Apache Bigtop. Useful Developer Tools - Spark. Reducing Build Times.

How to build a Spark fat jar in Scala and submit a job. Are you looking for a ready-to-use solution to submit a job in Spark?

These are short instructions on how to start a Spark Scala project in order to build a fat jar that can be executed in a Spark environment. I assume you have already installed Maven (and the Java JDK) and Spark (locally or on a real cluster). You can either compile the project from your shell (as I’ll show here) or “import an existing Maven project” with Eclipse and build it from there (read this other article to see how).
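Once the fat jar is built, it is normally passed to `spark-submit`. The following is a minimal Python sketch, assuming `spark-submit` is on the `PATH`, that assembles the command line. The jar path `target/my-app-1.0-jar-with-dependencies.jar`, the class `com.example.WordCount` and the helper name are hypothetical. The flags used (`--class`, `--master`, `--deploy-mode`, `--conf`) are standard `spark-submit` options.

```python
from typing import Dict, List, Optional


def build_spark_submit(
    app_jar: str,
    main_class: str,
    master: str = "local[*]",
    deploy_mode: Optional[str] = None,
    conf: Optional[Dict[str, str]] = None,
    app_args: Optional[List[str]] = None,
) -> List[str]:
    """Build a spark-submit argument list for a fat jar (hypothetical helper)."""
    cmd = ["spark-submit", "--class", main_class, "--master", master]
    if deploy_mode:
        # "client" or "cluster"; omitted means spark-submit's default (client).
        cmd += ["--deploy-mode", deploy_mode]
    # Sort the properties so the generated command is deterministic.
    for key, value in sorted((conf or {}).items()):
        cmd += ["--conf", f"{key}={value}"]
    # Everything after the jar is passed to the application's main method.
    cmd.append(app_jar)
    cmd += app_args or []
    return cmd
```

From another application, the list can be run with `subprocess.run(cmd, check=True)`. On the JVM side, the `SparkLauncher` class mentioned above plays a similar role.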

Setup Eclipse to start developing in Spark Scala and build a fat jar. I suggest two ways to start developing Spark in Scala, both with Eclipse. One is to download the full pre-configured Eclipse, which already includes the Scala IDE, from the site scala-ide.org. The other consists of updating your existing Eclipse by adding the Scala plugin (detailed instructions below).

This basically allows you to create Scala projects and run them locally. In either case, at the end of the procedure you have to import a project template into Eclipse as an “existing Maven project” in order to start developing in Spark (the template is linked at the bottom of this article). Now I’ll illustrate how to integrate the Scala plugin into your existing Eclipse installation.

In this example I used Eclipse Kepler EE. From the site, copy the link to the latest version for Kepler. If it is not present, follow the “Older versions” link on the page and choose the right Scala version for you. Make sure you have Java JDK 1.7 installed and that Eclipse is pointing at it. Meniluca :D. How to run SparkR in Eclipse on Windows environment. SparkR was officially merged into Apache Spark 1.4.0 on June 11, 2015.

I could not find any post or link on Google that directly shows how to run SparkR in Eclipse in a Windows environment. There are a couple of posts showing how to run SparkR from the command line on Windows, and a few others on running SparkR in RStudio. I have set up a few Windows machines locally to run SparkR in Eclipse and in RStudio. Here I document the process of running SparkR in Eclipse. Since I have 64-bit Windows 7 and Windows 8, I use the 64-bit version of Eclipse as the example. The basic steps are: SPARK Plugin for Eclipse: Installation Instructions and User Guide. Installation of the plugin is fairly simple, but it will require you to download and set up the Eclipse (version 3.0) program.

(If you have already installed Eclipse 3.0, skip ahead to Install the SPARK-IDE plugin. 2.x versions of Eclipse will not work with this plugin.) If you do not already have Eclipse, please download it from www.eclipse.org. At the time of these instructions, the most recent release is Eclipse 3.0.1, which can be downloaded from here: If you are a Java developer, then the "Eclipse SDK" release is probably best for you. If you are not a Java developer, then you should click on "View all platforms for release 3.0.1" and scroll down to the "Platform Runtime Binary" releases. The download process is likely to take a long time. On the Windows platform there is no installer, and I assume the same is true for other platforms. Apache Spark™ - Lightning-Fast Cluster Computing. Vagrant. Python 2.7 - Why can't PySpark find py4j.java_gateway? Spark Tutorial (Part I): Setting Up Spark and IPython Notebook within 10 minutes.

Introduction: The objective of this post is to share a step-by-step procedure for setting up a local data science environment. The environment consists of IPython Notebook (Anaconda Analytics) and can scale up by parallelizing/distributing tasks through Apache Spark, either on the local machine or on a remote cluster.

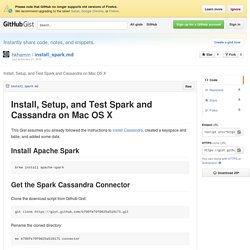
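With this kind of setup, moving from the local machine to a remote cluster comes down to changing the Spark master URL. The following is a minimal sketch assuming Spark's standalone cluster manager (YARN and Mesos URLs are not covered). The hostname `spark-master.example.com` and the helper name are hypothetical. `local[*]` and `spark://HOST:PORT` are Spark's standard master URL forms, and 7077 is the standalone master's default port.

```python
from typing import Optional


def spark_master_url(
    cluster_host: Optional[str] = None, port: int = 7077, local_threads: str = "*"
) -> str:
    """Pick a master URL: local threads by default, or a standalone cluster master."""
    if cluster_host is None:
        # local[*] runs Spark in-process with one worker thread per available core.
        return f"local[{local_threads}]"
    # A standalone master listens on port 7077 unless configured otherwise.
    return f"spark://{cluster_host}:{port}"
```

In a notebook, the result would be passed as `SparkContext(master=spark_master_url(), appName="notebook")`. The rest of the code stays the same when `cluster_host` is later set.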

Anaconda Analytics is one of the most popular Python IDEs in the Python data science community. It features the interactivity of the web-based IPython Notebook (gallery), ease of setup, and a comprehensive collection of built-in Python modules. Apache Spark, on the other hand, is described as lightning-fast cluster computing and a complementary piece to Apache Hadoop. For Python users, there are a number of advantages to using the web-based IPython Notebook for data science projects rather than the console-based ipython/pyspark. Install Apache Spark and Anaconda (IPython Notebook) on the local machine. Optional prerequisites. Reference. Install, Setup, and Test Spark and Cassandra on Mac OS X. This Gist assumes you have already followed the instructions to install Cassandra, created a keyspace and table, and added some data.

Install Apache Spark: brew install apache-spark. Get the Spark Cassandra Connector: clone the download script from GitHub Gist with git clone. Configuring IPython Notebook Support for PySpark · John Ramey. 01 Feb 2015. Apache Spark is a great way to perform large-scale data processing. Lately, I have begun working with PySpark, a way of interfacing with Spark through Python. After a discussion with a coworker, we were curious whether PySpark could run from within an IPython Notebook. It turns out that this is fairly straightforward to do by setting up an IPython profile.
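The IPython-profile approach above essentially puts Spark's Python sources and its bundled py4j zip on `sys.path` before `pyspark` is imported. A missing py4j entry is one common cause of the "Why can't PySpark find py4j.java_gateway?" error mentioned earlier. The following minimal sketch could live in a profile startup file. It assumes the usual binary-distribution layout `$SPARK_HOME/python/lib/py4j-*-src.zip`. The function names are hypothetical. The py4j version in the file name differs between releases, so the sketch matches the file with a glob.

```python
import glob
import os
import sys
from typing import List, Optional


def pyspark_paths(spark_home: str) -> List[str]:
    """Return the directory and py4j zip PySpark needs on sys.path."""
    python_dir = os.path.join(spark_home, "python")
    # The py4j version varies per Spark release, so match any version.
    py4j_zips = sorted(glob.glob(os.path.join(python_dir, "lib", "py4j-*-src.zip")))
    if not py4j_zips:
        raise FileNotFoundError(f"no py4j-*-src.zip found under {python_dir}/lib")
    # If several zips are present, take the lexicographically last one.
    return [python_dir, py4j_zips[-1]]


def add_pyspark_to_path(spark_home: Optional[str] = None) -> List[str]:
    """Prepend PySpark's paths to sys.path, defaulting to $SPARK_HOME."""
    spark_home = spark_home or os.environ.get("SPARK_HOME")
    if not spark_home:
        raise EnvironmentError("SPARK_HOME is not set")
    paths = pyspark_paths(spark_home)
    # Insert in reverse so the final order is [python_dir, py4j_zip, ...].
    for path in reversed(paths):
        if path not in sys.path:
            sys.path.insert(0, path)
    return paths
```

After `add_pyspark_to_path()` runs, `import pyspark` should resolve against the chosen Spark installation.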

Here’s the tl;dr summary: install Spark, create a PySpark profile for IPython, add some config, and run a simple word count example. The steps below were successfully executed using Mac OS X 10.10.2 and Homebrew. Many thanks to my coworker Steve Wampler, who did much of the work. Mikeaddison93/spark-csv. Running Spark on EC2 - Spark 1.5.1 Documentation. The spark-ec2 script, located in Spark’s ec2 directory, allows you to launch, manage and shut down Spark clusters on Amazon EC2. It automatically sets up Spark and HDFS on the cluster for you. This guide describes how to use spark-ec2 to launch clusters, how to run jobs on them, and how to shut them down. It assumes you’ve already signed up for an EC2 account on the Amazon Web Services site. spark-ec2 is designed to manage multiple named clusters. You can launch a new cluster (telling the script its size and giving it a name), shut down an existing cluster, or log into a cluster. Create an Amazon EC2 key pair for yourself.
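The launch / log in / shut down workflow above maps onto three spark-ec2 actions: `launch`, `login` and `destroy`. The following minimal Python sketch builds those command lines. It assumes the script is run from Spark's `ec2` directory, and that the AWS credentials the script reads (`AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY`) are already exported. The key pair name, `.pem` file, cluster name and helper name are hypothetical. The `-k` (key pair), `-i` (identity file) and `-s` (number of slaves) options follow the spark-ec2 usage in the 1.x documentation.

```python
from typing import List, Optional

# Only the actions described in the text above; spark-ec2 supports others too.
ACTIONS = ("launch", "login", "destroy")


def spark_ec2_command(
    action: str,
    cluster_name: str,
    key_pair: str,
    identity_file: str,
    slaves: Optional[int] = None,
    script: str = "./spark-ec2",
) -> List[str]:
    """Build a spark-ec2 command line for a named cluster (hypothetical helper)."""
    if action not in ACTIONS:
        raise ValueError(f"unsupported action {action!r}; expected one of {ACTIONS}")
    cmd = [script, "-k", key_pair, "-i", identity_file]
    if action == "launch" and slaves is not None:
        # Cluster size only matters when creating the cluster.
        cmd += ["-s", str(slaves)]
    cmd += [action, cluster_name]
    return cmd
```

Each list can then be executed with `subprocess.run(cmd, check=True)`. Keeping the cluster name as a parameter matches spark-ec2's support for several named clusters.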

Launching a Spark/Shark Cluster on EC2. This section will walk you through the process of launching a small cluster using your own Amazon EC2 account and our scripts and AMI (new to AMIs? See this intro to AMIs). 1. Prerequisites: the cluster setup script we’ll use below requires Python 2.x and has been tested to work on Linux or OS X. We will use the Bash shell in our examples below.