The Psychology of Web Performance - how slow response times affect user psychology. Summary: Users experience psychological and physiological effects when interacting with web pages: frustration when they cannot complete their tasks, and greater engagement on faster web sites.

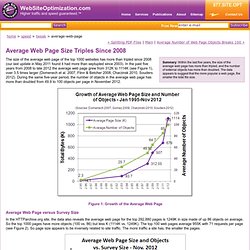

Learn how web page response times affect user psychology and behavior.

Average Web Page Size Septuples Since 2003 - web page statistics and survey trends for page size and web objects. Summary: Within the last five years, the size of the average web page has more than tripled, and the number of external objects has more than doubled.

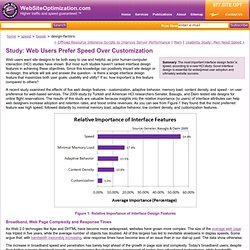

The data appears to suggest that the more popular a web page, the smaller the total file size.

Study: Web Users Prefer Speed Over Customization. A recent study examined the effects of five web design features - customization, adaptive behavior, memory load, content density, and speed - on user preference for web-based services.

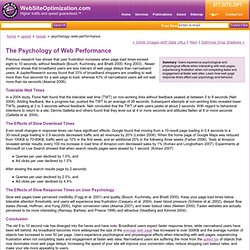

The 2009 study by Turkish and American HCI researchers Seneler, Basoglu, and Daim tested site designs for online flight reservations. Its results are valuable because knowing how much users value each interface attribute can help web designers increase adoption and retention rates and boost online revenues. As Figure 1 shows, the most preferred feature was high speed, followed distantly by minimal memory load, adaptive behavior, low content density, and customization features.

Figure 1: Relative Importance of Interface Design Features.

How Google Analytics Gets Information. When the Google Analytics code gathers information about each pageview, how does it send that information to the data collection servers for processing?

The information is sent in the query string of a request for the __utm.gif file. This file is requested for every single pageview, transaction, and event. When these requests are processed, Google Analytics strings the individual actions together into a visit. Many organizations store a copy of every tracking request sent to the Google Analytics data collection servers; this is done with the _setLocalRemoteServerMode() function in ga.js. Once you have a local copy of the Google Analytics tracking requests, you can process them with Angelfish Software.
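Because every hit is just a GET request for __utm.gif with the data in its query string, a locally stored copy of those requests can be decoded with ordinary URL tools. The following is a minimal sketch, assuming the full request URL has already been extracted from your log. The sample URL and its values are made up, the function name is hypothetical, and the parameter names shown (utmwv version, utmn cache-buster, utmhn hostname, utmp page path, utmac account) reflect the classic ga.js protocol as commonly documented, not a complete reference.

```python
from urllib.parse import urlsplit, parse_qs


def parse_utm_gif(request_url: str) -> dict:
    """Return the query-string parameters of a __utm.gif hit as a flat dict.

    parse_utm_gif is a hypothetical helper, not part of any Google library.
    """
    parts = urlsplit(request_url)
    if not parts.path.endswith("/__utm.gif"):
        raise ValueError("not a __utm.gif tracking request")
    # parse_qs percent-decodes values and returns a list per key;
    # keep_blank_values=True retains empty parameters such as "utmr=".
    params = parse_qs(parts.query, keep_blank_values=True)
    return {key: values[0] for key, values in params.items()}


# Hypothetical hit; host and parameter values are illustrative only.
sample = ("http://www.google-analytics.com/__utm.gif"
          "?utmwv=5.2.4&utmn=123456789&utmhn=example.com"
          "&utmp=%2Fpricing.html&utmac=UA-00000-1")
hit = parse_utm_gif(sample)
print(hit["utmp"])  # /pricing.html
```

Grouping decoded hits into visits would additionally require the cookie values carried in each request, which this sketch does not attempt.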

Cookies in Google Analytics. Cookies are at the heart of Google Analytics, not just because they are delicious, but because they provide a critical link in tracking return visitors and attribution. There is shockingly little documentation on the cookies the tracking code creates, what they store, or how they work. But they are so integral to the Google Analytics reports that it is worth lifting the hood to understand exactly what is going on.
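To make this concrete: in classic ga.js tracking, the __utma visitor cookie is commonly documented as six dot-separated fields: a domain hash, a random visitor ID, the Unix timestamps of the first, previous, and current visits, and a session count. The following is a minimal sketch, assuming that layout (it does not apply to Universal Analytics cookies) and using a made-up cookie value; the class and function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class UtmaCookie:
    """Fields of a classic __utma cookie, under the assumed six-field layout."""
    domain_hash: str
    visitor_id: str
    first_visit: datetime
    previous_visit: datetime
    current_visit: datetime
    session_count: int


def _ts(value: str) -> datetime:
    # Timestamps are assumed to be Unix epoch seconds.
    return datetime.fromtimestamp(int(value), tz=timezone.utc)


def parse_utma(value: str) -> UtmaCookie:
    """Split a __utma value into its fields (hypothetical helper)."""
    fields = value.split(".")
    if len(fields) != 6:
        raise ValueError(f"expected 6 dot-separated fields, got {len(fields)}")
    domain_hash, visitor_id, first, previous, current, sessions = fields
    return UtmaCookie(domain_hash, visitor_id, _ts(first), _ts(previous),
                      _ts(current), int(sessions))


# Made-up value: first visit on 2012-01-01 UTC, third session two days later.
cookie = parse_utma("173272373.1846567923.1325376000.1325462400.1325548800.3")
print(cookie.session_count)  # 3
print(cookie.first_visit.isoformat())  # 2012-01-01T00:00:00+00:00
```

Reading the visit timestamps this way shows why the cookie matters for return-visitor reporting: the gap between the previous and current visit and the session count both live on the visitor's machine, not on Google's servers.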

Update: Universal Analytics uses a different cookie "recipe" than the one outlined in this article.

Facebook + Twitter's Influence on Google's Search Rankings. The author's views are entirely his or her own (excluding the unlikely event of hypnosis) and may not always reflect the views of Moz.

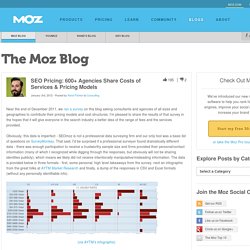

Since last December's admission from Google's and Bing's search teams regarding the direct impact of Twitter and Facebook on search rankings, marketers have been asking two questions:

SEO Pricing: 600+ Agencies Share Costs of Services & Pricing Models. Near the end of December 2011, we ran a survey on this blog asking consultants and agencies of all sizes and geographies to contribute their pricing models and cost structures.

I'm pleased to share the results of that survey in the hope that it will give everyone in the search industry a better idea of the range of fees charged and services provided. Obviously, this data is imperfect: SEOmoz is not a professional survey firm, and our only tool was a basic list of questions on SurveyMonkey. That said, I'd be surprised if a professional surveyor found dramatically different data. Participation was high enough to give a trustworthy sample size, and firms provided their personal and contact information (I recognized many of them while digging through the responses, but obviously will not be sharing identities publicly), so we likely did not receive intentionally manipulative or misleading information. (via AYTM's infographic)

Rich snippets: testing tool improvements, breadcrumbs, and events. Webmaster Level: All. Since the initial rollout of rich snippets in 2009, webmasters have shown a great deal of interest in adding markup to their web pages to improve their listings in search results.

When webmasters add markup using microdata, microformats, or RDFa, Google is able to understand the content on web pages and show search result snippets that better convey the information on the page. Thanks to steady adoption by webmasters, we now see more than twice as many searches with rich snippets in the results in the US, and a four-fold increase globally, compared to one year ago. Here are three recent product updates.

Testing tool improvements. Despite the healthy adoption rate by webmasters so far, implementing the rich snippets markup correctly can still be a major challenge.

Introducing Rich Snippets. Webmaster Level: All.

Specify your canonical. Carpe diem on any duplicate content worries: we now support a format that allows you to publicly specify your preferred version of a URL.
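The format referred to here is a `<link rel="canonical" href="...">` element placed in the `<head>` of each duplicate page, pointing at the preferred URL. As a minimal sketch, assuming you want to check which preferred URL a page declares, the Python below uses only the standard library's html.parser; the class and function names and the example.com URLs are hypothetical.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Records the href of the first <link rel="canonical"> element seen."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names for us.
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        # rel may hold several space-separated tokens, in any case.
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_tokens and attributes.get("href"):
            self.canonical = attributes["href"]


def find_canonical(html: str):
    """Return the declared canonical URL, or None if the page has none."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonical


# Hypothetical duplicate page (e.g. /widgets?sort=price) naming its preferred URL.
page = """<html><head>
<title>Widgets sorted by price</title>
<link rel="canonical" href="https://www.example.com/widgets">
</head><body>...</body></html>"""
print(find_canonical(page))  # https://www.example.com/widgets
```

A real audit would also fetch each URL variant and resolve relative href values (for example with urllib.parse.urljoin); both steps are left out to keep the sketch short.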

If your site has identical or vastly similar content that's accessible through multiple URLs, this format gives you more control over the URL returned in search results. It also helps make sure that properties such as link popularity are consolidated to your preferred version.

Sitemaps.org - Home.

The Web Robots Pages.

The Periodic Table Of SEO Ranking Factors.

The Fresh Rank Algorithm, Is It More Important Than PageRank.

New York Times Exposes J.C. Penney Link Scheme That Causes Plummeting Rankings in Google. Today, the New York Times published an article about a search engine optimization investigation of J.C. Penney. Perplexed by how well jcpenney.com did in unpaid (organic) search results for practically everything the retailer sold, the Times asked someone familiar with the world of search engine optimization (SEO) to look into it a bit more.

The investigation found that thousands of seemingly unrelated web sites (many of which seemed to contain only links) were linking to the J.C. Penney web site, and most of those links had very descriptive anchor text.

How Does Google Work? Learn How Google Works: Search Engine + AdWords. The following infographic was created years ago, when Google had a content-first focus on search. In the years since, the rise of mobile devices has caused Google to shift to a user-first approach to search. We created a newer infographic to reflect the modern search landscape here.

PageRank. Mathematical PageRanks for a simple network, expressed as percentages. (Google uses a logarithmic scale.)

Google Raters - All About Google Quality Raters. Wow, my post about how Google makes algorithm changes sure got a LOT of attention. While I happen to think the post itself was pretty darn informative (if I can be so humble…lol), it turns out that most folks visiting just wanted a copy of the 2011 Google Quality Raters Handbook.
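The PageRank entry above refers to PageRanks for a simple network expressed as percentages. The following is a minimal power-iteration sketch of the classic PageRank formulation, not Google's production ranking. It assumes a damping factor of 0.85 (the value suggested in the original PageRank paper) and spreads the rank of dangling pages (pages with no outlinks) evenly across all pages. The four-page link graph is hypothetical, and the logarithmic scale mentioned in the caption is not modeled.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             tol: float = 1e-12, max_iter: int = 200) -> dict[str, float]:
    """Power-iteration PageRank; the returned scores sum to 1."""
    # Every page that appears as a source or as a target is a node.
    nodes = sorted(set(links) | {t for targets in links.values() for t in targets})
    if not nodes:
        return {}
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(max_iter):
        # Rank held by dangling pages is redistributed evenly to all pages.
        dangling = sum(rank[node] for node in nodes if not links.get(node))
        base = (1.0 - damping) / n + damping * dangling / n
        new_rank = {node: base for node in nodes}
        # Each page passes damping * its rank, split equally among its outlinks.
        for source, targets in links.items():
            if targets:
                share = damping * rank[source] / len(targets)
                for target in targets:
                    new_rank[target] += share
        delta = sum(abs(new_rank[node] - rank[node]) for node in nodes)
        rank = new_rank
        if delta < tol:  # stop once scores no longer change meaningfully
            break
    return rank


# Hypothetical site: A links to B and C, B to C, C to A, and D to C.
site = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
ranks = pagerank(site)
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{page}: {score:.1%}")
```

In this toy graph C collects links from three pages and ends up with the highest score, while D, which nothing links to, keeps only the baseline (1 - 0.85) / 4 share. That matches the intuition behind the J.C. Penney story: inbound links, not page content, drive the score.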