Pipeline. Probabilistic programming. Online courses. Machine Learning. Data science. Dataviz. R. Python. Django. Wordpress. Hadoop + Strata. Genetic programming. Github. Color themes for papers. Programacion Android. Mobile Game Analytics: Miniclip’s Story. API.AI. Python for R Users. CoderProg - Ebooks & Elearning For Programming. Automated Machine Learning: An Interview with Randy Olson, TPOT Lead Developer. Read an insightful interview with Randy Olson, Senior Data Scientist at University of Pennsylvania Institute for Biomedical Informatics, and lead developer of TPOT, an open source Python tool that intelligently automates the entire machine learning process.

Automated machine learning has become a topic of considerable interest over the past several months. A recent KDnuggets blog competition focused on this topic, and generated a handful of interesting ideas and projects. Of note, our readers were introduced to Auto-sklearn, an automated machine learning pipeline generator, via the competition, and learned more about the project in a follow-up interview with its developers. Prior to that competition, however, KDnuggets readers were introduced to TPOT, "your data science assistant," an open source Python tool that intelligently automates the entire machine learning process. Randy agreed to take some time out to discuss TPOT and automated machine learning with our readers. Related: Contest Winner: Winning the AutoML Challenge with Auto-sklearn. This post is the first place prize recipient in the recent KDnuggets blog contest.

Auto-sklearn is an open-source Python tool that automatically determines effective machine learning pipelines for classification and regression datasets. It is built around the successful scikit-learn library and won the recent AutoML challenge. By Matthias Feurer, Aaron Klein and Frank Hutter, University of Freiburg. Editor's note: This blog post was the winner in the recent KDnuggets Automated Data Science and Machine Learning blog contest. Despite the great success of machine learning (ML) in many fields (e.g., computer vision, speech recognition or machine translation), it is very hard for novice users to effectively apply ML: they need to decide between dozens of available ML algorithms and preprocessing methods, and tune the hyperparameters of the selected approaches for the dataset at hand.

Algorithm and hyperparameter selection. IPython Books - Introduction to Machine Learning in Python with scikit-learn. Are categorical variables getting lost in your random forests? To understand what's causing the difference, we need to study the logic of tree-building algorithms.

The tree building algorithm At the heart of the tree-building algorithm is a subalgorithm that splits the samples into two bins by selecting a variable and a value. This splitting algorithm considers each of the features in turn, and for each feature selects the value of that feature that minimizes the impurity of the bins.

We won't get into the details of how this is calculated (and there's more than one way), except to say that you can consider a bin that contains mostly positive or mostly negative samples more pure than one that contains a mixture. Weight of Evidence (WOE) and Information Value (IV) Explained. In this article, we will cover the concept of weight of evidence and information value and how they are used in predictive modeling process along with details of how to compute them using SAS, R and Python.

Logistic regression model is one of the most commonly used statistical technique for solving binary classification problem. It is an acceptable technique in almost all the domains. These two concepts - weight of evidence (WOE) and information value (IV) evolved from the same logistic regression technique. These two terms have been in existence in credit scoring world for more than 4-5 decades.

They have been used as a benchmark to screen variables in the credit risk modeling projects such as probability of default. The weight of evidence tells the predictive power of an independent variable in relation to the dependent variable. Distribution of Goods - % of Good Customers in a particular groupDistribution of Bads - % of Bad Customers in a particular groupln - Natural Log 1. 2. 1.

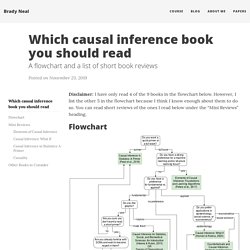

Which causal inference book you should read. Disclaimer: I have only read 4 of the 9 books in the flowchart below.

However, I list the other 5 in the flowchart because I think I know enough about them to do so. You can read short reviews of the ones I read below under the “Mini Reviews” heading.