How to Perform Feature Selection for Regression Data

Feature selection is the process of identifying and selecting a subset of input variables that are most relevant to the target variable.

Perhaps the simplest case of feature selection is the one where there are numerical input variables and a numerical target for regression predictive modeling. This is because the strength of the relationship between each input variable and the target can be calculated (a measure called correlation), and the input variables can then be compared relative to each other. In this tutorial, you will discover how to perform feature selection with numerical input data for regression predictive modeling. After completing this tutorial, you will know:

1. How to evaluate the importance of numerical input data using the correlation and mutual information statistics.
2. How to perform feature selection for numerical input data when fitting and evaluating a regression model.
3. How to tune the number of features selected in a modeling pipeline using a grid search.
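The correlation-based ranking described above can be sketched in plain Python. In scikit-learn, SelectKBest combined with f_regression (correlation-based) or mutual_info_regression plays this role; the version below uses only the standard library so that the scoring step is visible. The toy data, the choice of k = 2 and the helper names pearson and select_k_best are illustrative assumptions.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def select_k_best(columns, y, k):
    """Score each input column by |r| with the target; return the top-k indices and all scores."""
    scores = [abs(pearson(col, y)) for col in columns]
    ranked = sorted(range(len(columns)), key=lambda j: scores[j], reverse=True)
    return sorted(ranked[:k]), scores


# Hypothetical toy data: 5 numerical inputs, target driven by inputs 1 and 3 only.
rng = random.Random(1)
n = 200
columns = [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(5)]
y = [2.0 * columns[1][i] - 1.5 * columns[3][i] + rng.gauss(0, 0.1) for i in range(n)]

selected, scores = select_k_best(columns, y, k=2)
print("selected:", selected)
print("scores:", [round(s, 2) for s in scores])
```

Because |r| only captures linear association, swapping the scoring function for a mutual information estimate is the usual way to pick up nonlinear relationships; k itself can then be tuned with a grid search, as the tutorial outline suggests.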

Let’s get started.

lmSubsets: Exact Variable-Subset Selection in Linear Regression

The R package lmSubsets for flexible and fast exact variable-subset selection is introduced and illustrated in a weather forecasting case study.
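Exact subset selection means comparing every candidate subset of regressors under a criterion such as BIC. A brute-force sketch in plain Python follows, assuming a Gaussian linear model, synthetic data and hypothetical helper names (ols_rss, bic, best_subset). It fits all 2^p subsets, which is only practical for a handful of variables; dedicated packages such as lmSubsets are built to be much faster than this.

```python
import itertools
import math
import random


def ols_rss(cols, y):
    """Least-squares fit of y on an intercept plus the given columns; returns the residual sum of squares."""
    n = len(y)
    X = [[1.0] * n] + [list(c) for c in cols]  # design matrix stored column by column
    p = len(X)
    # Normal equations: (X^T X) b = X^T y
    A = [[sum(X[i][k] * X[j][k] for k in range(n)) for j in range(p)] for i in range(p)]
    rhs = [sum(X[i][k] * y[k] for k in range(n)) for i in range(p)]
    # Gaussian elimination with partial pivoting (assumes X has full column rank)
    for i in range(p):
        piv = max(range(i, p), key=lambda r: abs(A[r][i]))
        A[i], A[piv] = A[piv], A[i]
        rhs[i], rhs[piv] = rhs[piv], rhs[i]
        for r in range(i + 1, p):
            f = A[r][i] / A[i][i]
            for j in range(i, p):
                A[r][j] -= f * A[i][j]
            rhs[r] -= f * rhs[i]
    b = [0.0] * p
    for i in reversed(range(p)):
        b[i] = (rhs[i] - sum(A[i][j] * b[j] for j in range(i + 1, p))) / A[i][i]
    return sum((y[k] - sum(b[j] * X[j][k] for j in range(p))) ** 2 for k in range(n))


def bic(cols, y):
    """Gaussian BIC up to an additive constant: n*ln(RSS/n) + ln(n)*(number of coefficients)."""
    n = len(y)
    return n * math.log(ols_rss(cols, y) / n) + math.log(n) * (len(cols) + 1)


def best_subset(columns, y):
    """Evaluate every subset of columns and return the one with the lowest BIC."""
    best_score, best_idx = bic([], y), ()
    for size in range(1, len(columns) + 1):
        for idx in itertools.combinations(range(len(columns)), size):
            score = bic([columns[j] for j in idx], y)
            if score < best_score:
                best_score, best_idx = score, idx
    return list(best_idx), best_score


# Hypothetical toy data: 4 candidate regressors, target uses regressors 0 and 2.
rng = random.Random(0)
n = 100
columns = [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(4)]
y = [3.0 * columns[0][i] - 2.0 * columns[2][i] + rng.gauss(0, 0.3) for i in range(n)]

subset, score = best_subset(columns, y)
print("best subset:", subset, "BIC:", round(score, 1))
```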

How to Choose a Feature Selection Method For Machine Learning

Last Updated on August 20, 2020

Feature selection is the process of reducing the number of input variables when developing a predictive model.

It is desirable to reduce the number of input variables both to reduce the computational cost of modeling and, in some cases, to improve the performance of the model. Statistics-based feature selection methods evaluate the relationship between each input variable and the target variable using statistics, and select the input variables that have the strongest relationship with the target variable. These methods can be fast and effective, although the choice of statistical measure depends on the data type of both the input and output variables. As such, it can be challenging for a machine learning practitioner to select an appropriate statistical measure for a dataset when performing filter-based feature selection.
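The pairing of data types with statistical measures can be written down as a small lookup table. The pairings below summarize common practice for filter methods (Pearson or Spearman for numerical/numerical, ANOVA or Kendall when one side is categorical, chi-squared or mutual information for categorical/categorical); treat them as a starting point rather than a definitive rule. The names FILTER_STATISTICS and suggest_statistics are hypothetical.

```python
# Candidate filter statistics keyed by (input variable type, output variable type).
# These pairings summarise common practice; they are a starting point, not a rule.
FILTER_STATISTICS = {
    ("numerical", "numerical"): ["Pearson correlation (linear)", "Spearman rank correlation (nonlinear)"],
    ("numerical", "categorical"): ["ANOVA F-test (linear)", "Kendall rank correlation (nonlinear)"],
    ("categorical", "numerical"): ["ANOVA F-test (linear)", "Kendall rank correlation (nonlinear)"],
    ("categorical", "categorical"): ["Chi-squared test", "Mutual information"],
}


def suggest_statistics(input_type, output_type):
    """Return candidate statistics for filter-based selection, given the two data types."""
    key = (input_type.lower(), output_type.lower())
    if key not in FILTER_STATISTICS:
        raise ValueError(f"unsupported data-type pair: {key}")
    return FILTER_STATISTICS[key]


# Example: numerical inputs with a numerical target (the regression case)
print(suggest_statistics("numerical", "numerical"))
```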

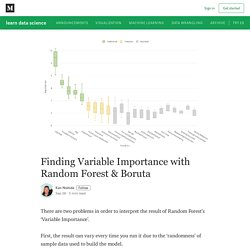

Let’s get started. This tutorial is divided into 4 parts.

Finding Variable Importance with Random Forest & Boruta

There are two problems when interpreting the result of Random Forest’s ‘Variable Importance’.

First, the result can vary every time you run it due to the ‘randomness’ of the sample data used to build the model. Second, it does not tell you which variables are actually meaningful for predicting the outcome and which are not.

Feature Selection using Genetic Algorithms in R

This is a post about feature selection using genetic algorithms in R, in which we will quickly review:

1. What are genetic algorithms?
2. GA in ML?
3. What does a solution look like?
4. GA process and its operators
5. The fitness function
6. Genetic Algorithms in R!
7. Try it yourself
8. Relating concepts

Animation source: "Flexible Muscle-Based Locomotion for Bipedal Creatures" - Thomas Geijtenbeek

BounceR 0.1.2: Automated Feature Selection

Feature Selection: Select Important Variables with Boruta Package

Variable Selection using Cross-Validation (and Other Techniques)

A natural technique to select variables in the context of generalized linear models is to use a stepwise procedure.

It is natural, but controversial, as discussed by Frank Harrell in a great post that is well worth reading; Frank lists about ten points against stepwise procedures. To illustrate this issue of variable selection, consider a dataset I’ve used many times on the blog, MYOCARDE=read.table( " head=TRUE,sep=";"), which contains observations of people admitted to the E.R. because of a (potential) infarction; we want to understand who survived and to build a predictive model.
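The forward stepwise idea can be sketched in plain Python: start from the intercept-only model, add the variable that lowers AIC the most, and stop when no addition helps. To stay dependency-free, this sketch swaps in ordinary least-squares regression on synthetic data instead of the logistic regression used on the MYOCARDE data; the helper names are hypothetical. In R, stats::step() runs this kind of AIC-driven search on glm fits.

```python
import math
import random


def ols_rss(cols, y):
    """Least-squares fit of y on an intercept plus the given columns; returns the residual sum of squares."""
    n = len(y)
    X = [[1.0] * n] + [list(c) for c in cols]  # design matrix stored column by column
    p = len(X)
    # Normal equations: (X^T X) b = X^T y
    A = [[sum(X[i][k] * X[j][k] for k in range(n)) for j in range(p)] for i in range(p)]
    rhs = [sum(X[i][k] * y[k] for k in range(n)) for i in range(p)]
    # Gaussian elimination with partial pivoting (assumes X has full column rank)
    for i in range(p):
        piv = max(range(i, p), key=lambda r: abs(A[r][i]))
        A[i], A[piv] = A[piv], A[i]
        rhs[i], rhs[piv] = rhs[piv], rhs[i]
        for r in range(i + 1, p):
            f = A[r][i] / A[i][i]
            for j in range(i, p):
                A[r][j] -= f * A[i][j]
            rhs[r] -= f * rhs[i]
    b = [0.0] * p
    for i in reversed(range(p)):
        b[i] = (rhs[i] - sum(A[i][j] * b[j] for j in range(i + 1, p))) / A[i][i]
    return sum((y[k] - sum(b[j] * X[j][k] for j in range(p))) ** 2 for k in range(n))


def aic(cols, y):
    """Gaussian AIC up to an additive constant: n*ln(RSS/n) + 2*(number of coefficients)."""
    n = len(y)
    return n * math.log(ols_rss(cols, y) / n) + 2 * (len(cols) + 1)


def forward_stepwise(columns, y):
    """Greedy forward selection starting from the intercept-only model."""
    selected, current = [], aic([], y)
    while len(selected) < len(columns):
        remaining = [j for j in range(len(columns)) if j not in selected]
        # Score every one-variable extension of the current model
        score, best_j = min((aic([columns[i] for i in selected + [j]], y), j) for j in remaining)
        if score >= current:
            break  # no remaining variable lowers AIC, so stop
        selected.append(best_j)
        current = score
    return selected, current


# Hypothetical toy data: 4 candidate regressors, target uses regressors 0 and 2.
rng = random.Random(0)
n = 100
columns = [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(4)]
y = [3.0 * columns[0][i] - 2.0 * columns[2][i] + rng.gauss(0, 0.3) for i in range(n)]

selected, final_aic = forward_stepwise(columns, y)
print("selection order:", selected, "AIC:", round(final_aic, 1))
```

Replacing the penalty 2 by ln(n) turns the criterion into BIC, which penalizes extra variables more heavily.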

What if we use a forward stepwise logistic regression here?

> reg0=glm(PRONO~1,data=MYOCARDE,family=binomial)
> reg1=glm(PRONO~. …

… or Schwarz Bayesian Information Criterion. With those two approaches, we have the same story: the most important variable (that is, the one with the highest predictive value) is REPUL. Now, what about using cross-validation here? … -fold cross-validation (see Shao (1997)) …

Feature Selection methods with example (Variable selection methods)

One of the best ways I use to learn machine learning is by benchmarking myself against the best data scientists in competitions.

It gives you a lot of insight into how you perform against the best on a level playing field. Initially, I believed that machine learning was all about algorithms: know which one to apply when, and you will come out on top. When I got there, I realized that was not the case; the winners were using the same algorithms as many other people. Next, I thought surely these people must have better, more powerful machines.

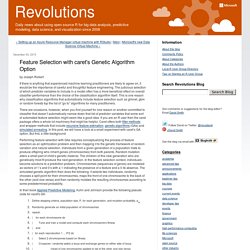

Feature Selection with caret's Genetic Algorithm Option

By Joseph Rickert

If there is anything that experienced machine learning practitioners are likely to agree on, it would be the importance of careful and thoughtful feature engineering.

The judicious selection of which predictor variables to include in a model often has a more beneficial effect on overall classifier performance than the choice of the classification algorithm itself. This is one reason why classification algorithms that automatically include feature selection, such as glmnet, gbm or random forests, top the list of “go to” algorithms for many practitioners. There are occasions, however, when you find yourself, for one reason or another, committed to a classifier that doesn’t automatically narrow down the list of predictor variables, and some sort of automated feature selection might seem like a good idea. If you are an R user, the caret package offers a whole lot of machinery that might be helpful.

Introduction to Feature selection for bioinformaticians using R, correlation matrix filters, PCA & backward selection

Bioinformatics is increasingly becoming a data mining field.

Every passing day, genomics and proteomics yield bucketloads of multivariate data (genes, proteins, DNA, identified peptides, structures), and every one of these biological data units is described by a number of features: length, physicochemical properties, scores, etc. Careful consideration of which features to select when trying to reduce the dimensionality of a specific dataset is, therefore, critical if one wishes to analyze and understand their impact on a model, or to identify which attributes produce a specific biological effect. For instance, consider a predictive model $C_1A_1 + C_2A_2 + C_3A_3 + \dots + C_nA_n = S$, where the $C_i$ are constants, the $A_i$ are features or attributes, and $S$ is the predicted output (retention time, toxicity, score, etc.).
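In a linear model like the one above, two strongly correlated attributes carry largely redundant information and make their constants $C_i$ hard to interpret. A minimal greedy correlation filter can be sketched in plain Python: scan the attributes in order and keep one only if its absolute correlation with every attribute already kept is at most 0.70, the cut-off named in this section's R example. The keep-first rule, the toy data and the function names are assumptions; caret's findCorrelation() in R uses a different rule to decide which member of a correlated pair to drop.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_filter(columns, threshold=0.70):
    """Greedy filter: keep a column only if |r| with every already-kept column is <= threshold."""
    kept = []
    for j, col in enumerate(columns):
        if all(abs(pearson(col, columns[k])) <= threshold for k in kept):
            kept.append(j)
    return kept


# Hypothetical attributes: A1 is a noisy copy of A0, A2 is independent,
# and A3 is only moderately related to A0.
rng = random.Random(2)
n = 200
a0 = [rng.uniform(-1, 1) for _ in range(n)]
a1 = [v + rng.gauss(0, 0.05) for v in a0]
a2 = [rng.uniform(-1, 1) for _ in range(n)]
a3 = [0.5 * a0[i] + rng.uniform(-1, 1) for i in range(n)]

kept = correlation_filter([a0, a1, a2, a3])
print("kept attribute indices:", kept)
```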

One of the simplest and most powerful filter approaches is the use of correlation matrix filters.

Correlation Matrix R Example: Removing Features with More than 0.70 Correlation

How to perform feature selection (pick important variables) - Boruta in R?

Variable selection is an important aspect of model building that every analyst must learn.

After all, it helps in building predictive models free from correlated variables, biases and unwanted noise. Many novice analysts assume that keeping all (or more) variables will result in the best model, because no information is lost. Sadly, that is not true! How many times has removing a variable from a model increased your model’s accuracy? It has certainly happened to me. In this article, we’ll focus on understanding the theory and practical aspects of using the Boruta package.
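Boruta's core idea is to compare each real feature against shadow features: shuffled copies that keep each feature's distribution but destroy its relationship with the target. A feature that repeatedly beats the best shadow is likely to be genuinely relevant, and repeating the comparison also addresses the run-to-run variability of random forest importance mentioned earlier. The sketch below makes two simplifications that differ from the actual Boruta package: importance is measured by absolute Pearson correlation instead of random forest importance, and a feature is confirmed when it beats the best shadow in at least 90% of iterations instead of via Boruta's statistical test. The data and function names are hypothetical.

```python
import math
import random


def abs_corr(x, y):
    """Absolute Pearson correlation; stands in here for random forest importance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return abs(sxy) / math.sqrt(sxx * syy)


def boruta_like(columns, y, n_iter=20, keep_frac=0.9, seed=0):
    """Count how often each feature beats the best shadow feature; confirm frequent winners."""
    rng = random.Random(seed)
    real = [abs_corr(col, y) for col in columns]  # fixed here; RF importance would vary per run
    hits = [0] * len(columns)
    for _ in range(n_iter):
        shadows = []
        for col in columns:
            shadow = list(col)
            rng.shuffle(shadow)  # same values, relationship with y destroyed
            shadows.append(shadow)
        best_shadow = max(abs_corr(s, y) for s in shadows)
        for j, score in enumerate(real):
            if score > best_shadow:
                hits[j] += 1
    confirmed = [j for j, h in enumerate(hits) if h >= keep_frac * n_iter]
    return confirmed, hits


# Hypothetical toy data: 5 features, target depends on features 0 and 3.
rng = random.Random(3)
n = 200
columns = [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(5)]
y = [2.0 * columns[0][i] + columns[3][i] + rng.gauss(0, 0.5) for i in range(n)]

confirmed, hits = boruta_like(columns, y)
print("confirmed:", confirmed, "hits:", hits)
```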