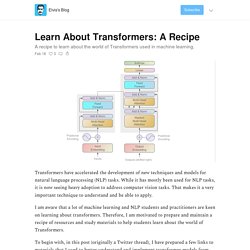

Learn About Transformers: A Recipe - Elvis's Blog.

Transformers have accelerated the development of new techniques and models for natural language processing (NLP) tasks.

While transformers have mostly been used for NLP tasks, they are now seeing heavy adoption in computer vision as well. That makes the transformer an important technique to understand and be able to apply. I am aware that many machine learning and NLP students and practitioners are keen on learning about transformers. I am therefore motivated to prepare and maintain a recipe of resources and study materials to help them learn about the world of transformers. To begin with, in this post (originally a Twitter thread), I have collected a few links to materials that I used to better understand and implement transformer models from scratch. Publishing them as a post gives me an easy way to keep the study material up to date.

🧠 High-level Introduction

First, try to get a very high-level introduction to transformers.

🎨 The Illustrated Transformer

Under the Hood of LSTMs.

Understanding Convolution in Deep Learning - Tim Dettmers.

Explaining RNNs without neural networks.

Terence Parr. Terence teaches in the University of San Francisco's MS in Data Science program, and you might know him as the creator of the ANTLR parser generator.

Vanilla recurrent neural networks (RNNs) form the basis of more sophisticated models, such as LSTMs and GRUs. There are lots of great articles, books, and videos that describe the functionality, mathematics, and behavior of RNNs, so don't worry, this isn't yet another rehash.

CNN Explainer.

Lil'Log.

GAN Lab: Play with Generative Adversarial Networks in Your Browser!

What is a GAN?

Many machine learning systems look at some kind of complicated input (say, an image) and produce a simple output (a label like "cat"). By contrast, the goal of a generative model is something like the opposite: take a small piece of input (perhaps a few random numbers) and produce a complex output, like an image of a realistic-looking face. A generative adversarial network (GAN) is an especially effective type of generative model, introduced only a few years ago, which has been a subject of intense interest in the machine learning community. You might wonder why we want a system that produces realistic images, or plausible simulations of any other kind of data. Besides the intrinsic intellectual challenge, this turns out to be a surprisingly handy tool, with applications ranging from art to enhancing blurry images.

How does a GAN work? The first idea, not new to GANs, is to use randomness as an ingredient.
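The GAN Lab excerpt stops before describing how a GAN is trained. In the standard formulation, a generator turns random noise into samples, a discriminator estimates the probability that a sample is real, and the two are trained against each other. This is a minimal plain-Python sketch of just the two loss functions, computed from discriminator probabilities. It uses the widely used "non-saturating" generator loss. It is not code from GAN Lab, and the probabilities in the usage lines are made-up numbers.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy loss for the discriminator.

    d_real: discriminator probabilities D(x) on real samples.
    d_fake: discriminator probabilities D(G(z)) on generated samples.
    The discriminator wants D(x) close to 1 and D(G(z)) close to 0.
    """
    eps = 1e-12  # guard against log(0)
    real_term = -sum(math.log(p + eps) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + eps) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants D(G(z)) close to 1."""
    eps = 1e-12
    return -sum(math.log(p + eps) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs on a batch of real and generated samples.
d_real = [0.9, 0.8, 0.95]
d_fake = [0.2, 0.1, 0.3]
print("D loss:", discriminator_loss(d_real, d_fake))
print("G loss:", generator_loss(d_fake))
```

A discriminator that is confident and correct gets a small loss, while the generator's loss stays large. Training alternates between lowering each loss in turn.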

Deploying Deep Learning Models On Web And Mobile

This project was completed by Nidhin Pattaniyil and Reshama Shaikh.

This article details how to create a web and mobile app image classifier and is deep-learning-language agnostic. Our example uses the fastai library, but a model weights file from any deep learning library can be used to create a web and mobile app using our methods.

How do Convolutional Neural Networks work?

Nine times out of ten, when you hear about deep learning breaking a new technological barrier, Convolutional Neural Networks are involved.

Also called CNNs or ConvNets, these are the workhorse of the deep neural network field. They have learned to sort images into categories even better than humans in some cases. If there's one method out there that justifies the hype, it is CNNs. What's especially cool about them is that they are easy to understand, at least when you break them down into their basic parts.

X's and O's

Features

CNNs compare images piece by piece.

The Illustrated Transformer – Jay Alammar – Visualizing machine learning one concept at a time.

In the previous post, we looked at Attention, a ubiquitous method in modern deep learning models.

Attention is a concept that helped improve the performance of neural machine translation applications. In this post, we will look at The Transformer, a model that uses attention to boost the speed with which these models can be trained. The Transformer outperforms the Google Neural Machine Translation model in specific tasks. The biggest benefit, however, comes from how The Transformer lends itself to parallelization. The Transformer was proposed in the paper Attention is All You Need.

CNNs, Part 1: An Introduction to Convolutional Neural Networks - victorzhou.com.

There's been a lot of buzz about Convolutional Neural Networks (CNNs) in the past few years, especially because of how they've revolutionized the field of Computer Vision.

In this post, we'll build on basic background knowledge of neural networks and explore what CNNs are, understand how they work, and build a real one from scratch (using only numpy) in Python. This post assumes only a basic knowledge of neural networks. My introduction to Neural Networks covers everything you'll need to know, so you might want to read that first.

Making Anime Faces With StyleGAN.
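The victorzhou.com tutorial builds its convolution layer with numpy. This is a dependency-free sketch of the same idea. The function slides a small filter over an image and, at each position, sums the element-wise products, with stride 1 and no padding. Like most deep learning libraries, it does not flip the kernel, so it is strictly a cross-correlation. The image and the vertical-edge filter values are illustrative assumptions, not taken from the post.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with stride 1 and no padding ("valid").

    Both arguments are lists of lists of numbers. The kernel is not flipped,
    matching what deep learning libraries call convolution.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel with the image patch under it and sum.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# A 3x6 image: dark left half (0), bright right half (10).
image = [
    [0, 0, 0, 10, 10, 10],
    [0, 0, 0, 10, 10, 10],
    [0, 0, 0, 10, 10, 10],
]
# A 3x3 vertical-edge filter (hypothetical example values).
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
print(conv2d_valid(image, vertical_edge))  # -> [[0, 30, 30, 0]]
```

The output is zero over flat regions and peaks where the window straddles the dark-to-bright boundary. A CNN layer learns many such filters instead of using hand-picked values.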

The Building Blocks of Interpretability.

Building Custom Deep Learning Based OCR models.

OCR provides us with different ways to see an image and to find and recognize the text in it.

When we think about OCR, we inevitably think of lots of paperwork: bank cheques and legal documents, ID cards and street signs. In this blog post, we will try to predict the text present in number plate images. What we are dealing with is an optical character recognition library that leverages deep learning and an attention mechanism to predict what a particular character or word in an image is, if there is one at all. There are lots of big words in there, so we'll take it step by step and explore the state of OCR technology and the different approaches used for these tasks. If you are already familiar with the terms above, you can skip directly to the code section of the article or check the GitHub repository.

Transformers from scratch.

I will assume a basic understanding of neural networks and backpropagation.

If you'd like to brush up, this lecture will give you the basics of neural networks and this one will explain how these principles are applied in modern deep learning systems. A working knowledge of PyTorch is required to understand the programming examples, but these can also be safely skipped.

Self-attention

The fundamental operation of any transformer architecture is the self-attention operation.

1803.09820.

Generative Adversarial Networks - The Story So Far.
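Returning to the self-attention operation from Transformers from scratch: in its most basic form, self-attention maps each input vector $x_i$ to an output $y_i = \sum_j w_{ij} x_j$. The weights come from the dot products $x_i^\top x_j$, passed through a softmax over $j$. This plain-Python sketch implements only that unparameterized version. Full transformer layers add learned query, key, and value projections and scale the dot products, and none of that is shown here. The example sequence is arbitrary.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)  # subtracting the max does not change the result
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def basic_self_attention(xs):
    """Unparameterized self-attention over a sequence of vectors `xs`.

    For each position i: raw weights x_i . x_j, normalized with a softmax
    over j, then y_i = sum_j w_ij * x_j (a weighted average of the inputs).
    """
    outputs = []
    for x_i in xs:
        weights = softmax([dot(x_i, x_j) for x_j in xs])
        y_i = [sum(w * x_j[k] for w, x_j in zip(weights, xs))
               for k in range(len(x_i))]
        outputs.append(y_i)
    return outputs

sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for y in basic_self_attention(sequence):
    print([round(v, 3) for v in y])
```

Every output is a softmax-weighted average of the inputs. As a result, reordering the input sequence only reorders the outputs: self-attention on its own has no notion of position.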

When Ian Goodfellow dreamt up the idea of Generative Adversarial Networks (GANs) over a mug of beer back in 2014, he probably didn't expect to see the field advance so fast:

In case you don't see where I'm going here, the images you just saw were utterly, undeniably, 100% … fake.

Also, I don't mean these were photoshopped, CGI-ed, or (fill in the blank with whatever Nvidia's calling their fancy new tech at the moment). I mean that these images are entirely generated through addition, multiplication, and splurging ludicrous amounts of cash on GPU computation. The algorithm that makes this stuff work is called a generative adversarial network (which is the long way of writing GAN, for those of you still stuck in machine learning acronym land), and over the last few years, there have been more innovations dedicated to making it work than there have been privacy scandals at Facebook.

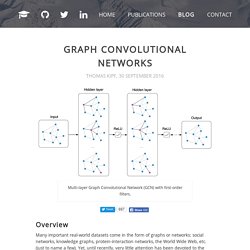

I'm not going to explain concepts like transposed convolutions and Wasserstein distance in detail.

PhD Student @ University of Amsterdam.

Multi-layer Graph Convolutional Network (GCN) with first-order filters.

Overview

Many important real-world datasets come in the form of graphs or networks: social networks, knowledge graphs, protein-interaction networks, the World Wide Web, etc. (just to name a few). Yet, until recently, very little attention has been devoted to the generalization of neural network models to such structured datasets. In the last couple of years, a number of papers have revisited this problem of generalizing neural networks to work on arbitrarily structured graphs (Bruna et al., ICLR 2014; Henaff et al., 2015; Duvenaud et al., NIPS 2015; Li et al., ICLR 2016; Defferrard et al., NIPS 2016; Kipf & Welling, ICLR 2017). Some of them now achieve very promising results in domains previously dominated by, e.g., kernel-based methods, graph-based regularization techniques and others. See also a review/discussion post by Ferenc Huszar: How powerful are Graph Convolutions?

WTF is image classification?
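The GCN model of Kipf & Welling (ICLR 2017), cited in the overview above, stacks layers that apply the propagation rule $H^{(l+1)} = \sigma(\tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H^{(l)} W^{(l)})$. Here $\tilde{A} = A + I$ adds self-loops, and $\tilde{D}$ is the diagonal degree matrix of $\tilde{A}$. This sketch implements one such layer with plain lists, using ReLU as $\sigma$. The graph, features, and weights are hypothetical. A real implementation would use sparse matrix operations and learn $W$ by gradient descent.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def normalized_adjacency(adj):
    """Return D^-1/2 (A + I) D^-1/2, the symmetric normalization with self-loops."""
    n = len(adj)
    a_tilde = [[adj[i][j] + (1 if i == j else 0) for j in range(n)]
               for i in range(n)]
    degrees = [sum(row) for row in a_tilde]
    return [[a_tilde[i][j] / math.sqrt(degrees[i] * degrees[j])
             for j in range(n)] for i in range(n)]

def gcn_layer(adj, features, weights):
    """One graph-convolution layer: ReLU(A_hat @ H @ W)."""
    a_hat = normalized_adjacency(adj)
    pre_activation = matmul(matmul(a_hat, features), weights)
    return [[max(0.0, v) for v in row] for row in pre_activation]

# Hypothetical 3-node path graph 0 - 1 - 2 with 2 input features per node.
adj = [[0, 1, 0],
       [1, 0, 1],
       [0, 1, 0]]
features = [[1.0, 0.0],
            [0.0, 1.0],
            [1.0, 1.0]]
# Illustrative weights mapping 2 input features to 1 output feature.
weights = [[1.0],
           [1.0]]
print(gcn_layer(adj, features, weights))
```

Each node's new feature mixes its own features with its neighbours', weighted by the normalized adjacency. Stacking $L$ layers therefore lets information travel up to $L$ hops across the graph.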

MIT 6.S094: Deep Learning for Self-Driving Cars.

DeepTraffic is a deep reinforcement learning competition. The goal is to create a neural network to drive a vehicle (or multiple vehicles) as fast as possible through dense traffic. Here are the basic steps: change parameters in the code box (read the documentation for hints), then click the white "Apply Code" button. In version 2.0, you can create visualizations of your best submission.

How convolutional neural networks see the world.

Famous Convolutional Neural Network Architectures - #1 - Predictive Programmer.

Variational Autoencoders Explained.

You know every image of a digit should contain, well, a single digit. An input in $\mathbb{R}^{28 \times 28}$ doesn't explicitly contain that information. But it must reside somewhere... That somewhere is the latent space. You can think of the latent space as $\mathbb{R}^{k}$, where every vector contains $k$ pieces of essential information needed to draw an image. Let's say the first dimension contains the number represented by the digit. We can think of the process that generated the images as a two-step process: first decide on the essential attributes (a latent vector $z$), then turn them into an image $x$. A VAE tries to model this process: given an image $x$, we want to find at least one latent vector which is able to describe it, one vector that contains the instructions to generate $x$. Let's pour some intuition into the equation $P(x) = \int P(x \mid z)\, P(z)\, dz$: the integral means we should search over the entire latent space for candidates. For every candidate $z$, we ask ourselves: can $x$ be generated using the instructions of $z$?
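The search over the latent space can be read literally as a sampling procedure. Draw candidates $z$ from the prior $P(z)$ and average how well each one explains $x$, i.e. $P(x \mid z)$. This sketch does that for a deliberately tiny, assumed model where the exact answer is known, so the estimate can be checked. The model has a 1-D standard-normal prior and a Gaussian "decoder" $P(x \mid z) = \mathcal{N}(z, \sigma^2)$. The code illustrates the integral; it is not how VAEs are trained.

```python
import math
import random

def gaussian_pdf(x, mean, std):
    """Density of a 1-D normal distribution N(mean, std^2) at x."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))

def estimate_marginal_likelihood(x, decoder_std, num_samples, seed=0):
    """Naive Monte Carlo estimate of P(x) = integral of P(x|z) P(z) dz.

    Toy model (an assumption for illustration): prior P(z) = N(0, 1) and
    "decoder" P(x|z) = N(z, decoder_std^2).
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_samples):
        z = rng.gauss(0.0, 1.0)                   # a candidate from the prior
        total += gaussian_pdf(x, z, decoder_std)  # how well z explains x
    return total / num_samples

x, decoder_std = 1.5, 0.5
estimate = estimate_marginal_likelihood(x, decoder_std, num_samples=100_000)
# For this linear-Gaussian toy model, P(x) is exactly N(x; 0, 1 + decoder_std^2).
exact = gaussian_pdf(x, 0.0, math.sqrt(1.0 + decoder_std ** 2))
print(f"Monte Carlo: {estimate:.4f}  exact: {exact:.4f}")
```

In a realistic latent space, most prior samples explain a given $x$ poorly, so this naive estimate needs an impractical number of samples. That is part of why a VAE also learns an encoder that proposes likely $z$ for each $x$.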

The VAE training objective is to maximize $P(x)$.

Tutorial - What is a variational autoencoder? – Jaan Altosaar.

Why do deep learning researchers and probabilistic machine learning folks get confused when discussing variational autoencoders? What is a variational autoencoder? Why is there unreasonable confusion surrounding this term? There is a conceptual and language gap. The sciences of neural networks and probability models do not have a shared language. My goal is to bridge this idea gap and allow for more collaboration and discussion between these fields, and provide a consistent implementation (Github link).
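Maximizing $P(x)$ directly would require integrating over the whole latent space, which is intractable for a neural-network decoder. Variational autoencoders instead maximize the evidence lower bound (ELBO), $\log P(x) \ge \mathbb{E}_{q(z \mid x)}[\log P(x \mid z)] - \mathrm{KL}(q(z \mid x) \,\|\, P(z))$, where $q(z \mid x)$ is the encoder's distribution. A common choice is to make $q$ a diagonal Gaussian and the prior $\mathcal{N}(0, I)$, though it is not the only one. Under that choice, the KL term has the closed form $\tfrac{1}{2}\sum_k (\sigma_k^2 + \mu_k^2 - 1 - \log \sigma_k^2)$. This sketch computes it, assuming the encoder outputs a mean and a log-variance per latent dimension.

```python
import math

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ) in closed form.

    mu and log_var are equal-length lists with one entry per latent dimension.
    Predicting log-variance (an assumed parameterization) keeps sigma^2 positive.
    """
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mu, log_var))

print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))  # -> 0.0, same distribution
print(kl_to_standard_normal([1.0, 0.0], [0.0, 0.0]))  # -> 0.5, mean shifted by 1
```

During training, this term pulls each encoding towards the prior. The reconstruction term $\mathbb{E}_{q}[\log P(x \mid z)]$ pulls the other way, towards encodings that describe $x$ well.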

Brain of mat kelcey.

The first thing I thought when we set up our bee hive was "I wonder how you could count the number of bees coming and going?" After a little research, I discovered that it seems no one has a good non-intrusive system for doing it yet. It can apparently be useful for all sorts of hive health checking. The first thing to do was collect some sample data. A Raspberry Pi, a standard Pi camera and a solar panel are pretty simple to get going, and at 1 frame every 10 seconds you get 5,000+ images over a day (6am to 9pm).

Identifying dog breeds using Keras.

Convolutional neural networks for artistic style transfer - Harish Narayanan.

How HBO's Silicon Valley built "Not Hotdog" with mobile TensorFlow, Keras & React Native.

3. The DeepDog Architecture Design

Our final architecture was spurred in large part by the publication on April 17 of Google's MobileNets paper, promising a new neural architecture with Inception-like accuracy on simple problems like ours, with only 4M or so parameters.
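The excerpt credits MobileNets with a network of only about 4M parameters but does not say where the savings come from. The MobileNets paper builds its network from depthwise separable convolutions: a per-channel $k \times k$ filter followed by a $1 \times 1$ convolution that mixes channels. This sketch compares weight counts for one hypothetical layer with a 3×3 kernel and 512 input and 512 output channels, ignoring biases. It does not reproduce the DeepDog architecture itself.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a k x k convolution mapping c_in to c_out channels (no bias)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k, c_in, c_out):
    """Depthwise k x k conv (one filter per input channel) + 1x1 pointwise conv."""
    depthwise = k * k * c_in   # spatial filtering, channels kept separate
    pointwise = c_in * c_out   # 1x1 conv that mixes channels
    return depthwise + pointwise

k, c_in, c_out = 3, 512, 512  # hypothetical layer size
std = standard_conv_params(k, c_in, c_out)
sep = depthwise_separable_params(k, c_in, c_out)
print(std, sep, round(std / sep, 1))  # -> 2359296 266752 8.8
```

For this layer, the separable version needs about 8.8 times fewer weights. Savings of this kind, repeated across layers, are what make a small parameter budget possible.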

Recommending music on Spotify with deep learning – Sander Dieleman.

This summer, I'm interning at Spotify in New York City, where I'm working on content-based music recommendation using convolutional neural networks. In this post, I'll explain my approach and show some preliminary results.

2D Visualization of a Convolutional Neural Network.

Estimating an Optimal Learning Rate For a Deep Neural Network.

Starting deep learning hands-on: image classification on CIFAR-10.

Want to know how Deep Learning works? Here's a quick guide for everyone.

Why do we normalize images by subtracting the dataset's image mean and not the current image mean in deep learning? - Cross Validated.

Applied Deep Learning - Part 1: Artificial Neural Networks.

Deep reinforcement learning: where to start.

AlphaGo Zero: Learning from scratch.

The $1700 great Deep Learning box: Assembly, setup and benchmarks.

Chihuahua or muffin? My search for the best computer vision API.

[1404.7828] Deep Learning in Neural Networks: An Overview.

Coding the History of Deep Learning - FloydHub Blog.

The 9 Deep Learning Papers You Need To Know About (Understanding CNNs Part 3) – Adit Deshpande – CS Undergrad at UCLA ('19)

Learning Deep Learning with Keras.

Deep Learning with Python.

Deep learning is applicable to a widening range of artificial intelligence problems, such as image classification, speech recognition, text classification, question answering, text-to-speech, and optical character recognition.

It is the technology behind photo tagging systems at Facebook and Google, self-driving cars, speech recognition systems on your smartphone, and much more. In particular, deep learning excels at solving machine perception problems: understanding the content of image data, video data, or sound data. Here's a simple example: say you have a large collection of images, and you want tags associated with each image, for example, "dog," "cat," etc.

Deep_Learning_Project.

Sometimes, we tend to get lost in the jargon and confuse things easily, so the best way to go about this is getting back to our basics.

Image Classification using Deep Neural Networks - A beginner friendly approach using TensorFlow.

Note: You can find the entire source code on this GitHub repo.

The limitations of deep learning.

A Peek at Trends in Machine Learning – Andrej Karpathy – Medium.

A Brief History of CNNs in Image Segmentation: From R-CNN to Mask R-CNN.

Python Programming Tutorials.

AI, Deep Learning, and Machine Learning: A Primer – Andreessen Horowitz.

The Difference Between AI, Machine Learning, and Deep Learning?

Deep Reinforcement Learning: Pong from Pixels.

The Truth About Deep Learning - Quantified.

Guest Post (Part I): Demystifying Deep Reinforcement Learning - Nervana.

Neural networks and deep learning.

Deep Learning.

Deeplearning.

Greylock talk - Google Slides.

What deep learning really means.

Practical Deep Learning For Coders - 18 hours of lessons for free.

1704.01568.

A 'Brief' History of Neural Nets and Deep Learning, Part 1 – Andrey Kurenkov's Web World.