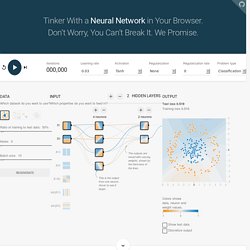

Neural Networks Demystified - lumiverse.

A Neural Network Playground. Um, What Is a Neural Network?

It’s a technique for building a computer program that learns from data. It is based very loosely on how we think the human brain works. First, a collection of software “neurons” is created and connected together, allowing them to send messages to each other. Next, the network is asked to solve a problem, which it attempts to do over and over, each time strengthening the connections that lead to success and diminishing those that lead to failure.

Deep Learning.

CS231n Convolutional Neural Networks for Visual Recognition.

Deep Learning. Can I get a PDF of this book?

No, our contract with MIT Press forbids distribution of too easily copied electronic formats of the book. Google employees who would like a paper copy of the book can send Ian the name of the printer nearest their desk and he will send a print job to that printer containing as much of the book as you would like to read. Why are you using HTML format for the drafts?

Home - TensorFlow.

Theoretical Motivations for Deep Learning. This post is based on the lecture “Deep Learning: Theoretical Motivations” given by Dr. Yoshua Bengio at Deep Learning Summer School, Montreal 2015. I highly recommend the lecture for a deeper understanding of the topic.

Machine Learning for Developers by Mike de Waard. Most developers these days have heard of machine learning, but when trying to find an 'easy' way into this technique, most people find themselves scared off by the abstractness of the concept of machine learning and by terms such as regression, unsupervised learning, probability density function and many other definitions.

If one switches to books, there are titles such as An Introduction to Statistical Learning with Applications in R and Machine Learning for Hackers, which use the programming language R for their examples. However, R is not really a language in which one writes programs for everyday use, as is done with, for example, Java, C# or Scala. This is why in this blog machine learning will be introduced using Smile, a machine learning library that can be used in both Java and Scala. These are languages that most developers have seen at least once during their studies or career. As a final note I'd like to thank the following people: Features Model.

Unsupervised Feature Learning and Deep Learning Tutorial.

Blog – A Gentle Guide to Machine Learning. Machine Learning is a subfield within Artificial Intelligence that builds algorithms that allow computers to learn to perform tasks from data instead of being explicitly programmed.

Got it? We can make machines learn to do things! The first time I heard that, it blew my mind. That means that we can program computers to learn things by themselves!

A Visual Introduction to Machine Learning. Finding better boundaries. Let's revisit the 73-m elevation boundary proposed previously to see how we can improve upon our intuition.

Clearly, this requires a different perspective. By transforming our visualization into a histogram, we can better see how frequently homes appear at each elevation. While the highest home in New York is 73 m, the majority of them seem to have far lower elevations. Your first fork. A decision tree uses if-then statements to define patterns in data.
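A single if-then rule of this kind can be sketched in a few lines of Python. This is only an illustration, not code from the linked article: the sample homes, the function names `predict_city` and `accuracy`, and the choice to treat the 73 m boundary as the cut-off are all assumptions made for the example.

```python
# Hypothetical sample data: (elevation in metres, actual city).
# These values are invented purely for illustration.
homes = [
    (4.0, "New York"),
    (12.0, "New York"),
    (73.0, "New York"),
    (80.0, "San Francisco"),
    (150.0, "San Francisco"),
    (20.0, "San Francisco"),
]

# Assumed cut-off: the 73 m elevation boundary mentioned in the excerpt.
ELEVATION_THRESHOLD_M = 73.0


def predict_city(elevation_m, threshold_m=ELEVATION_THRESHOLD_M):
    """Apply one if-then rule: homes above the threshold are guessed to be in San Francisco."""
    if elevation_m > threshold_m:
        return "San Francisco"
    return "New York"


def accuracy(samples, threshold_m=ELEVATION_THRESHOLD_M):
    """Return the fraction of samples the single rule classifies correctly."""
    correct = sum(predict_city(elev, threshold_m) == city for elev, city in samples)
    return correct / len(samples)


if __name__ == "__main__":
    for elev, city in homes:
        print(f"{elev:6.1f} m -> predicted {predict_city(elev)}, actual {city}")
    print(f"accuracy: {accuracy(homes):.2f}")
```

In this made-up sample the rule misclassifies the low-lying San Francisco home, which hints at why one boundary on elevation alone is rarely enough.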

For example, if a home's elevation is above some number, then the home is probably in San Francisco. In machine learning, these statements are called forks, and they split the data into two branches based on some value. That value between the branches is called a split point. Tradeoffs.

A Neural Network in 11 lines of Python - i am trask.

Deepdream/dream.ipynb at master · google/deepdream.

The Unreasonable Effectiveness of Recurrent Neural Networks. There’s something magical about Recurrent Neural Networks (RNNs).

I still remember when I trained my first recurrent network for Image Captioning. Within a few dozen minutes of training, my first baby model (with rather arbitrarily chosen hyperparameters) started to generate very nice-looking descriptions of images that were on the edge of making sense. Sometimes the ratio of how simple your model is to the quality of the results you get out of it blows past your expectations, and this was one of those times. What made this result so shocking at the time was that the common wisdom was that RNNs were supposed to be difficult to train (with more experience I’ve in fact reached the opposite conclusion). Fast forward about a year: I’m training RNNs all the time and I’ve witnessed their power and robustness many times, and yet their magical outputs still find ways of amusing me. We’ll train RNNs to generate text character by character and ponder the question “how is that even possible?”

Understanding Convolution in Deep Learning. Convolution is probably the most important concept in deep learning right now.

It was convolution and convolutional nets that catapulted deep learning to the forefront of almost any machine learning task there is. But what makes convolution so powerful? How does it work? In this blog post I will explain convolution and relate it to other concepts that will help you to understand convolution thoroughly. There are already some blog posts regarding convolution in deep learning, but I found all of them highly confusing, with unnecessary mathematical details that do not further the understanding in any meaningful way.

Synaptic - The javascript neural network library.

Deep Learning Framework.

Hacker's guide to Neural Networks. Hi there, I’m a CS PhD student at Stanford.

I’ve worked on Deep Learning for a few years as part of my research, and among several of my related pet projects is ConvNetJS - a JavaScript library for training Neural Networks. JavaScript allows one to nicely visualize what’s going on and to play around with the various hyperparameter settings, but I still regularly hear from people who ask for a more thorough treatment of the topic. This article (which I plan to slowly expand out to lengths of a few book chapters) is my humble attempt.

It’s on the web instead of PDF because all books should be, and eventually it will hopefully include animations/demos etc. My personal experience with Neural Networks is that everything became much clearer when I started ignoring full-page, dense derivations of backpropagation equations and just started writing code. Chapter 1: Real-valued Circuits. Base Case: Single Gate in the Circuit. f(x,y) = xy. The Goal.

Practical Machine Learning Problems - Machine Learning Mastery.

What is Machine Learning?

We can read authoritative definitions of machine learning, but really, machine learning is defined by the problem being solved. Therefore the best way to understand machine learning is to look at some example problems. In this post we will first look at some well-known and well-understood examples of machine learning problems in the real world.

Becoming a Data Scientist - Curriculum via Metromap ← Pragmatic Perspectives. Data Science, Machine Learning, Big Data Analytics, Cognitive Computing… well, all of us have been avalanched with articles, skills-demand infographics and points of view on these topics (yawn!). One thing is for sure: you cannot become a data scientist overnight. It’s a journey, and for sure a challenging one.

But how do you go about becoming one? Where to start? When do you start seeing light at the end of the tunnel?

Neural networks and deep learning.

Josephmisiti/awesome-machine-learning.

Stanford Large Network Dataset Collection.

Metacademy - Level-Up Your Machine Learning. Since launching Metacademy, I've had a number of people ask, "What should I do if I want to get 'better' at machine learning, but I don't know what I want to learn?" Excellent question! My answer: consistently work your way through textbooks. I then watch as they grimace in the same way an out-of-shape person grimaces when a healthy friend responds with, "Oh, I watch what I eat and consistently exercise." Progress requires consistent discipline, motivation, and an ability to work through challenges on your own. But why textbooks?

Algorithmia Blog - Create your own machine learning powered RSS reader in under 30 minutes.

Home - Toronto Deep Learning Demos.

Learning from the best. Guest contributor David Kofoed Wind is a PhD student in Cognitive Systems at The Technical University of Denmark (DTU): As a part of my master's thesis on competitive machine learning, I talked to a series of Kaggle Masters to try to understand how they were consistently performing well in competitions.

What I learned was a mixture of rather well-known tactics and less obvious tricks of the trade. In this blog post, I have picked some of their answers to my questions in an attempt to outline some of the strategies which are useful for performing well on Kaggle. As the name of this blog suggests, there is no free hunch, and reading this blog post will not make you a Kaggle Master overnight. Yet following the steps described below will most likely help with getting respectable results on the leaderboards. Feature engineering is often the most important part. For most Kaggle competitions the most important part is feature engineering, which is pretty easy to learn how to do. If we let.

20 short tutorials all data scientists should read (and practice) - Data Science Central.

The Numerical and Insightful Blog: A Gentle Introduction to Backpropagation. Why is this blog being written?

Neural networks have fascinated me ever since I became aware of them in the 1990s. They are often represented with a hypnotizing array of connections. In the last decade, deep neural networks have dominated pattern recognition, often replacing other algorithms for applications like computer vision and voice recognition. At least in specialized tasks, they indeed come close to mimicking the miraculous feats of cognition our brains are capable of.

Deep learning Reading List.