Untitled. Investigators Guide to RDM practice. Codebook cookbook: How to enter and document your data. Codebook cookbook How to enter and document your data 1.

Introduction Writing a codebook is an important step in the management of any data analysis project. The codebook will serve as a reference for the clinical team; it will help newcomers to the project to rapidly have a flavor of what is at stake and will serve as a communication tool with the statistical unit. 2. If you enter data in a spreadsheet such as Excel (as is currently popular in biomedical research) or a database program such as Access, there is much freedom in the way data can be entered. 2.1 Variables names A unique, unambiguous name should be given to each variable. 2.2 Variables labels A label is a description of the variable, such as a textual description or a reference to the question, if the item arises from a questionnaire. It is important to include a descriptive variable label for each variable in the file. Examples of variable labels are presented in Section 3.1.1 2.3 Variables codes 2.4 Variables formats 3. 4. 5. 6.

Identifiers for the 21st century: How to design, provision, and reuse identifiers to maximize data utility and impact. Journal article Open Access Julie McMurry; 40 additional authors (see file) In an era of big data, there is increasing optimism that data mining will yield valuable insights.

However, in the life sciences, relevant data is not only "big"; it is also highly decentralized across thousands of online databases. Sansone BioSharing. CEDAR - Center for Expanded Data Annotation and Retrieval. How to write a good codebook - McGill University. Codebook cookbook A guide to writing a good codebook for data analysis projects in medicine 1.

Introduction Writing a codebook is an important step in the management of any data analysis project. The codebook will serve as a reference for the clinical team; it will help newcomers to the project to rapidly have a flavor of what is at stake and will serve as a communication tool with the statistical unit. Indeed, when comes time to perform statistical analyses on your data, the statistician will be grateful to have a codebook that is readily usable, that is, a codebook that is easy to turn into code for whichever statistical analysis package he/she will use (SAS, R, Stata, or other). 2. Research Data Blog. [Reposted from The University of Edinburgh, like many other universities, is currently undertaking extensive work to build infrastructure that supports and enables good practice in the area of Research Data Management.

Research Data MANTRA - Library Training. Introduction During autumn and winter 2012-13, data librarians at the University of Edinburgh (Robin Rice and Anne Donnelly) led a pilot course for four University academic service librarians on Research Data Management (RDM) covering five topics involving reading assigments from the MANTRA course, reflective writing, and 2-hour face-to-face training sessions, including group exercises from the UK Data Archive (UKDA).

The course was deemed successful by participants and Information Services managers, and was delivered to all the University's academic service librarians. Metadata Standards. The Kepler Project — Kepler. VisTrailsWiki. Seaddata. Untitled. Home - Data Services - LibGuides at NIH Library. Data Science Seminars - BD2K Training Coordinating Center. The BD2K Guide to the Fundamentals of Data Science Series Every Friday beginning September 9, 2016 12pm - 1pm Eastern Time / 9am - 10am Pacific Time The Big Data to Knowledge (BD2K) Initiative presents this virtual lecture series on the data science underlying modern biomedical research.

Beginning in September 2016, the webinar series will consist of weekly presentations from experts across the country covering the basics of data management, representation, computation, statistical inference, data modeling, and other topics relevant to “big data” in biomedicine. The webinar series will provide essential training suitable for individuals at an introductory overview level. Please join our weekly meetings from your computer, tablet or smartphone. If you prefer to use your phone, you can also dial in using the following information. View all archived videos on our YouTube channel: BD2K Guide to the Fundamentals of Data Science.

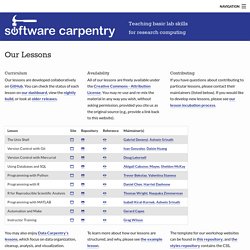

Our Lessons. Availability All of our lessons are freely available under the Creative Commons - Attribution License.

You may re-use and re-mix the material in any way you wish, without asking permission, provided you cite us as the original source (e.g., provide a link back to this website). Contributing If you have questions about contributing to particular lessons, please contact their maintainers (listed below). If you would like to develop new lessons, please see our lesson incubation process. Dryad Digital Repository - Dryad.

DataONE. The Dataverse Project - Dataverse.org. Dryad Digital Repository - Dryad. Data Curation Profiles. Knb.ecoinformatics. Statement on the Institute of Medicine Report on Sharing Clinical Trial Data. I want to thank the Institute of Medicine (IOM) for its important and thoughtful report, Sharing Clinical Trial Data – Maximizing Benefits, Minimizing Risk (link is external), which was commissioned by NIH and a number of other sponsors.

Data sharing is fundamental to NIH’s mission, and the responsible sharing of clinical trials data in ways that protects patient privacy has many important benefits. Through responsible data sharing, participants in clinical trials are assured that their contributions to research will have maximal effect, and researchers are able to accelerate efforts to prevent, detect, and treat disease by validating and advancing results.

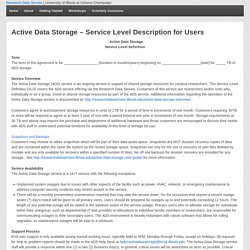

Data sharing also improves the efficiency of clinical research and bolsters safety in clinical trials by preventing unnecessary duplication of trials, including trials of products that have already been found to be unsafe or ineffective. Francis S. Collins, M.D., Ph.D. Data management backgrounder 107751. Active Data Storage – Service Level Description for Users. Active Data StorageService Level Definition Term The term of this agreement is for _________________ [duration in months/years] beginning on ___________________ [date] for _____ TB of ADS storage.

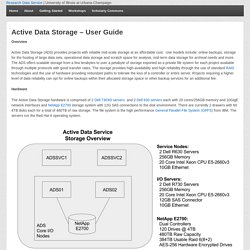

Service Overview The Active Data Storage (ADS) service is an ongoing service in support of shared storage resources for campus researchers. This Service Level Definition (SLD) covers the ADS service offering via the Research Data Service. Customers of this service are researchers and/or units who, individually or as a group, invest in shared storage resources as part of the ADS service. Active Data Storage – User Guide. Overview Active Data Storage (ADS) provides projects with reliable mid-scale storage at an affordable cost.

Use models include: online backups, storage for the hosting of large data sets, operational data storage and scratch space for analysis, mid-term data storage for archival needs and more. The ADS offers scalable storage from a few terabytes to over a petabyte of storage exported as a private file system for each project available through multiple protocols with good transfer rates. The storage provides high-availability and high-reliability through the use of standard RAID technologies and the use of hardware providing redundant paths to tolerate the loss of a controller or entire server.

Why Persistent Identifiers Deserve Their Own Festival. Mlanet. Welcome to the new MLANET Login information and help below. MLA members and registered guests: Your Username is now your email (not your old MLANET member ID)Your Password is unchanged from the one you had on the prior MLANET.If you do not remember your password, please reset your passord (requires email). Not a member? Take the next step in your career and join MLA now. Publishing Data Workflows. [Guest post from Angus Whyte, Digital Curation Centre] In the first week of March the 7th Plenary session of the Research Data Alliance got underway in Tokyo.

Plenary sessions are the fulcrum of RDA activity, when its many Working Groups and Interest Groups try to get as much leverage as they can out of the previous 6 months of voluntary activity, which is usually coordinated through crackly conference calls. The Digital Curation Centre (DCC) and others in Edinburgh contribute to a few of these groups, one being the Working Group (WG) on Publishing Data Workflows. Like all such groups it has a fixed time span and agreed deliverables. This WG completes its run at the Tokyo plenary, so there’s no better time to reflect on why DCC has been involved in it, how we’ve worked with others in Edinburgh and what outcomes it’s had. DCC takes an active part in groups where we see a direct mutual benefit, for example by finding content for our guidance publications.

Data Science Workflow: Overview and Challenges. By Philip Guo October 30, 2013 Comments (4) During my Ph.D., I created tools for people who write programs to obtain insights from data. Millions of professionals in fields ranging from science, engineering, business, finance, public policy, and journalism, as well as numerous students and computer hobbyists, all perform this sort of programming on a daily basis. Shortly after I wrote my dissertation in 2012, the term Data Science started appearing everywhere. Some industry pundits call data science the "sexiest job of the 21st century. " Registry of Research Data Repositories. ExAC Browser. Public_Access_Plans_US_Fed_Agencies. CENDI is collecting information on Federal Agency plans and guidance for implementation of Public Access. The site will capture information as it is released to the public by agencies.

The following sections are now available: Public Access Plans of U.S. Federal Agencies Award Dates Covered Under Public Access Policies for Publication and for Data Management Plans Public Access (PA) Plans of U.S. In a memo released by the Office of Science and Technology Policy (OSTP) on February 22, 2013, each Federal agency with over $100 million in annual conduct of research and development expenditures was directed to develop a plan to support increased public access to the results of research funded by the Federal Government.

Agencies with Public Access Plans which have been approved for public release are listed below. Department of Agriculture (Nov. 2014) [PA Plan] Data Curation Network. The Data Curation Network will enable academic institutions to better support researchers that are faced with a growing number of requirements to ethically share their research data. The Data Curation Network project brings together the perspectives of research data librarians, academic library administration, and data curation subject experts from six major academic institutions to develop a Data Curation Network model. Data curation services are currently provided by expert staff at each of our institutions to prepare digital research data for open access and reuse.

Our goal is to demonstrate that by sharing our data curation staff across a ‘network of expertise’ will enable academic libraries to collectively, and more effectively, curate a wider variety of data types (e.g., discipline, file format, etc.) that expands beyond what any single institution might offer alone. Scholarly Communication Program.

Integrating Data for Analysis, Anonymization, and SHaring. ConText is based on a negation algorithm called NegEx. ConText's input is a sentence with indexed clinical conditions; ConText's output for each indexed condition is the value for contextual features or modifiers. S&E Indicators 2016. SAMHSA. Publishers' Policies on PubMed Central, Miner Library. This is a selective list of publishers' policies issued in response to NIH's Public Access policy. UR authors publishing in journals from any of these publishers should verify that the policy listed here is still current before submitting their manuscript to PubMed Central (PMC) in compliance with the NIH Public Access policy.

Complying with the NIH Public Access Policy - Copyright Considerations and Options. Complying with the NIH Public Access Policy - Copyright Considerations and OptionsA SPARC/Science Commons/ARL joint white paper by Michael W. NIHComplianceFlowchart. Identify Submission Method. Enter a journal name below to see if it uses Submission Method A. Method A Journals These journals make the final published version of all NIH-funded articles available in PubMed Central (PMC) no later than 12 months after publication without author involvement. The start date shown for each journal is the earliest publication date that meets this requirement. When and How to Comply. Educating Yourself on Research Data Management: Resources and Opportunities - Google Sheets. Untitled. Checklists for Planning and Writing a Human Subjects Grant Application.

NIH Data Sharing Policy and Implementation Guidance. Doodling, Sketching and 'Mind Mapping' as Learning Tools. The broad concept of “drawing to learn” is gaining respect and popularity from classrooms to boardrooms. As Sunni Brown says in her TED talk, posted below, there has long been “a powerful cultural norm against doodling in settings where we are supposed to learn something,” but doodling — and its more formal cousins “sketchnoting,” “visual notetaking” and “mind mapping” — might instead be considered powerful and interesting ways to “help yourself think.”

Below, the latest in our Skills and Strategies series, which takes skills that students need and strategies that teachers can use across the curriculum and matches them to New York Times content. If you try any of the ideas below with something from The Times, let us know by emailing us a photo of your drawing at LNFeedback@nytimes.com, or by tweeting us at @nytimeslearning.

Sharing Research Data Management Practice. Bookmarks for dsalo tagged 'horrorstories' Bookmarks for kbriney tagged 'datahorrorstories' MEDLINE Databank Sources. The Digital Humanities CenterThe Digital Humanities Center. Research Data MANTRA. Public Health Information & Data Tutorial. The Public Health Information and Data Tutorial provides instruction for members of the public health workforce on issues related to information access and management. There are no copyright restrictions on the contents of this tutorial and users are free to adapt or duplicate any portion. The contributors and authors of this tutorial’s content represent city, county, state and federal agencies. They establish clear connections to recognized competencies in public health and provide examples representing much of the diversity inherent in the practice of public health. Agency for Healthcare Research & Quality. Mission. Research Tools & Data. Figshare.

By Dan Valen. Data Sharing Repositories. Discipline-Based Data Archives. Search. LONI Image Data Archive (IDA) Data repositories & atlases - Neuroinformatics and Image Analysis Laboratory - UoBergen. Search. NIH-Public-Access-Plan.pdf. NIH Data Sharing Policy and Implementation Guidance. DMP Requirements: DMPTool. A Beginner's Guide to Data Management - NIH Data Sharing Repositories. Data Management General Guidance: DMPTool. Research Data Services Data Management Consulting Group. BioLexicon. Deliver an effective and flexible data warehouse solution, Part 3: Design and implement a warehouse ETL process. Document steps used in data processing.