Machine Learning


Arthur Charpentier. Topic 4, Linear Models, Part 1: OLS. One could ask why we choose to minimize the mean squared error instead of something else.

After all, one could minimize the mean absolute value of the residuals instead. The only consequence of changing the quantity being minimized is that we leave the setting of the Gauss-Markov theorem, so our estimates are no longer guaranteed to be optimal (lowest variance) among linear unbiased estimators.

Before we continue, let's digress to illustrate maximum likelihood estimation with a simple example. Many people probably remember the formula of ethyl alcohol, so I decided to run an experiment to determine whether people remember the simpler formula of methanol (methyl alcohol): CH3OH. We surveyed 400 people and found that only 117 of them remembered the formula. Each answer can be modeled with a Bernoulli distribution:

$$P(x; \theta) = \theta^{x}(1-\theta)^{1-x}, \quad x \in \{0, 1\}$$

This distribution is exactly what we need: $x = 1$ means the respondent knew the formula, and the parameter $\theta$ is the probability that a person knows the formula of methyl alcohol.

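Assuming the 400 answers are independent, the likelihood of the observed data is

$$P(\mathbf{x}; \theta) = \prod_{i=1}^{400} \theta^{x_i}(1-\theta)^{1-x_i} = \theta^{117}(1-\theta)^{283}$$

Returning to linear regression, the model with Gaussian noise is

$$y = Xw + \epsilon, \quad \epsilon_i \sim \mathcal{N}(0, \sigma^2)$$

and maximizing its likelihood with respect to $w$ gives the same solution as least squares.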
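To check that the intuitive estimate 117/400 is indeed the maximum likelihood estimate for the survey above, here is a minimal Python sketch. It compares the closed-form maximizer (from setting the derivative of the log-likelihood to zero) with a brute-force grid search over θ; the grid resolution of 0.0001 is an arbitrary choice for illustration.

```python
import math

# Survey numbers quoted in the text: 117 of 400 respondents knew the formula.
n_total = 400
n_known = 117

def log_likelihood(theta: float) -> float:
    """Bernoulli log-likelihood: 117*log(theta) + 283*log(1 - theta)."""
    return n_known * math.log(theta) + (n_total - n_known) * math.log(1 - theta)

# Closed form: 117/theta - 283/(1 - theta) = 0  =>  theta = 117/400.
theta_closed_form = n_known / n_total

# Brute-force check on a grid of candidate values strictly inside (0, 1).
grid = [i / 10000 for i in range(1, 10000)]
theta_grid = max(grid, key=log_likelihood)

print(theta_closed_form)  # 0.2925
print(theta_grid)
```

Working with the log-likelihood rather than $\theta^{117}(1-\theta)^{283}$ directly avoids floating-point underflow and turns the product into a sum, without moving the maximizer.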

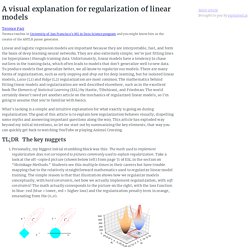

A visual explanation for regularization of linear models. Terence Parr. Terence teaches in the University of San Francisco's MS in Data Science program, and you might know him as the creator of the ANTLR parser generator.

Linear and logistic regression models are important because they are interpretable, fast, and form the basis of deep learning neural networks.
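As a hedged illustration of what regularizing a linear model does, here is a minimal NumPy sketch of L2 (ridge) regularization using its closed form $w = (X^\top X + \lambda I)^{-1} X^\top y$. The synthetic data, the λ values, and the absence of an intercept are all assumptions made for brevity; it is not taken from the article.

```python
import numpy as np

def ridge_fit(X: np.ndarray, y: np.ndarray, lam: float) -> np.ndarray:
    """Closed-form L2-regularized least squares: (X^T X + lam*I)^{-1} X^T y.

    No intercept term, for brevity; in practice you would center the data
    or leave the intercept unpenalized.
    """
    n_features = X.shape[1]
    A = X.T @ X + lam * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)

# Synthetic data (assumed for illustration): y = 3*x1 - 2*x2 + noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))
y = X @ np.array([3.0, -2.0]) + rng.normal(scale=0.5, size=100)

lambdas = [0.0, 10.0, 100.0, 1000.0]
norms = []
for lam in lambdas:
    w = ridge_fit(X, y, lam)
    norms.append(float(np.linalg.norm(w)))
    print(f"lambda={lam:>7}: w={np.round(w, 3)}, ||w||={norms[-1]:.3f}")
```

Increasing λ shrinks the coefficient vector toward zero, trading a little bias for lower variance; with λ = 0 the fit reduces to ordinary least squares.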

Introduction to Bagging and Ensemble Methods. The bias-variance trade-off is a challenge we all face while training machine learning algorithms.

Bagging is a powerful ensemble method that helps reduce variance and, by extension, prevent overfitting. Ensemble methods improve predictive performance by using a group (or "ensemble") of models which, when combined, outperform the individual models used separately. In this article we'll take a look at the inner workings of bagging and its applications, and implement the bagging algorithm using the scikit-learn library. In particular, we'll cover: An Overview of Ensemble Learning; Why Use Ensemble Learning? Let's get started.

A Pirate's Guide to Accuracy, Precision, Recall, and Other Scores. Whether you're inventing a new classification algorithm or investigating the efficacy of a new drug, getting results is not the end of the process.
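To make the inner workings of bagging concrete, here is a from-scratch NumPy sketch: train several base learners on bootstrap samples (rows drawn with replacement) and combine them by majority vote. The base learner is a hypothetical 1-D threshold "stump", the noisy synthetic data and `n_estimators=25` are arbitrary assumptions, and this is not the article's scikit-learn code; for real use, scikit-learn provides `sklearn.ensemble.BaggingClassifier`.

```python
import numpy as np

def fit_stump(x, y):
    """Fit a 1-D stump predicting 1 when x > threshold; pick the threshold
    (among observed values) with the lowest training error."""
    best_t, best_err = x[0], np.inf
    for t in np.unique(x):
        err = np.mean((x > t).astype(int) != y)
        if err < best_err:
            best_t, best_err = t, err
    return best_t

def bagging_fit(x, y, n_estimators=25, seed=0):
    """Train one stump per bootstrap sample of the training set."""
    rng = np.random.default_rng(seed)
    n = len(x)
    thresholds = []
    for _ in range(n_estimators):
        idx = rng.integers(0, n, size=n)  # bootstrap: n rows with replacement
        thresholds.append(fit_stump(x[idx], y[idx]))
    return np.array(thresholds)

def bagging_predict(thresholds, x):
    """Majority vote over all stumps."""
    votes = (x[:, None] > thresholds[None, :]).astype(int)  # (n_samples, n_estimators)
    return (votes.mean(axis=1) > 0.5).astype(int)

# Synthetic data (assumed): label is 1 when x > 5, with 10% of labels flipped.
rng = np.random.default_rng(42)
x_train = rng.uniform(0, 10, size=200)
y_train = (x_train > 5).astype(int)
flip = rng.random(200) < 0.1
y_train[flip] = 1 - y_train[flip]

thresholds = bagging_fit(x_train, y_train)
x_test = np.linspace(0, 10, 1001)
y_test = (x_test > 5).astype(int)
accuracy = np.mean(bagging_predict(thresholds, x_test) == y_test)
print(f"test accuracy: {accuracy:.3f}")
```

An odd number of estimators avoids ties in the vote; averaging over bootstrap fits is what dampens the variance of any single stump.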

Your last step is to determine the correctness of the results. There are a great number of methods and implementations for this task.
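As a small illustration of how such scores are computed for a binary classifier, here is a plain-Python sketch that derives accuracy, precision, recall and F1 from the confusion-matrix counts. The labels are made up, and returning 0.0 when a denominator is zero is an assumed convention.

```python
def classification_scores(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of predicted positives that are right
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of actual positives that are found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical labels: 3 true positives, 1 false negative, 1 false positive, 5 true negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
scores = classification_scores(y_true, y_pred)
print(scores)  # {'accuracy': 0.8, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
```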

Naïve Bayes for Machine Learning – From Zero to Hero. Before I dive into the topic, let us ask a question – what is machine learning all about and why has it suddenly become a buzzword?

Machine learning is fundamentally the “art of prediction”. It is all about predicting the future based on the past. The reason it is a buzzword is actually not about data, technology, computing power or any of that stuff. It’s just about human psychology! Yes, we humans are always curious about the future, aren’t we? So the art of prediction is just about hitting that sweet spot between timing and accuracy.

Probability Theory - A game of Randomness vs. It is not only important what happened in the past, but also how likely it is that it will be repeated in the future. Let’s take another classic example: rolling a die. The above examples are represented pictorially below. So what’s the math? Before going to Bayes’ theorem, we need to know about a few (more!) Now, Bayes’ theorem (named after Rev. Below is one simple way to explain the Bayes rule.
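The excerpt breaks off before the math. As a hedged illustration of how Bayes' rule turns into a Naive Bayes classifier, here is a minimal plain-Python sketch for categorical features: it scores each class by prior × product of per-feature likelihoods, assuming the features are independent given the class. The toy weather data, the function names, and Laplace smoothing with `alpha=1.0` are all illustrative assumptions, not the article's code.

```python
from collections import Counter, defaultdict

def train_naive_bayes(rows, labels):
    """Count class frequencies and, per class, how often each feature value occurs."""
    class_counts = Counter(labels)
    value_counts = defaultdict(Counter)  # (feature index, class) -> Counter of values
    for row, label in zip(rows, labels):
        for j, value in enumerate(row):
            value_counts[(j, label)][value] += 1
    # Number of distinct values per feature, needed for Laplace smoothing.
    n_values = [len({row[j] for row in rows}) for j in range(len(rows[0]))]
    return class_counts, value_counts, n_values

def predict_naive_bayes(model, row, alpha=1.0):
    """Pick the class maximizing P(class) * prod_j P(x_j | class)."""
    class_counts, value_counts, n_values = model
    total = sum(class_counts.values())
    best_class, best_score = None, -1.0
    for c, n_c in class_counts.items():
        score = n_c / total  # prior P(class)
        for j, value in enumerate(row):
            # Laplace-smoothed likelihood P(x_j = value | class)
            score *= (value_counts[(j, c)][value] + alpha) / (n_c + alpha * n_values[j])
        if score > best_score:
            best_class, best_score = c, score
    return best_class

# Toy data made up for illustration: (outlook, windy) -> activity.
rows = [("sunny", "no"), ("sunny", "yes"), ("rainy", "yes"), ("rainy", "no"),
        ("overcast", "no"), ("overcast", "yes"), ("sunny", "no")]
labels = ["play", "stay", "stay", "stay", "play", "play", "play"]

model = train_naive_bayes(rows, labels)
print(predict_naive_bayes(model, ("sunny", "no")))   # play
print(predict_naive_bayes(model, ("rainy", "yes")))  # stay
```

With many features, real implementations sum log-probabilities instead of multiplying, to avoid underflow.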

Machine Learning - OranLooney.com. ML From Scratch, Part 6: Principal Component Analysis. In the previous article in this series we distinguished between two kinds of unsupervised learning (cluster analysis and dimensionality reduction) and discussed the former in some detail.

In this installment we turn our attention to the latter. In dimensionality reduction we seek a function $f: \mathbb{R}^n \to \mathbb{R}^m$, where $n$ is the dimension of the original data and $m$ is less than or equal to $n$.
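One common choice of such a function is a linear projection onto the principal components. Below is a minimal NumPy sketch of PCA via the singular value decomposition of the centered data, mapping n-dimensional rows to m dimensions; the function name and the synthetic 3-D data (points spread mostly along one direction) are assumptions for illustration, not the series' own code.

```python
import numpy as np

def pca(X: np.ndarray, m: int):
    """Project X (n_samples x n) onto its top-m principal components.

    Returns the projected data (n_samples x m), the components (m x n),
    and the variance explained by each kept component.
    """
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by decreasing singular value.
    _, s, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:m]
    explained_variance = s**2 / (len(X) - 1)
    return X_centered @ components.T, components, explained_variance[:m]

# Synthetic data (assumed): 3-D points lying close to the line spanned by [1, 2, 0.5].
rng = np.random.default_rng(0)
t = rng.normal(size=(200, 1))
X = t @ np.array([[1.0, 2.0, 0.5]]) + 0.05 * rng.normal(size=(200, 3))

Z, components, var = pca(X, m=1)
print(Z.shape)  # (200, 1)
print(components, var)
```

The first component should align (up to sign) with the direction the data was generated along, and almost all of the variance survives the reduction from 3 to 1 dimension.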

Model-Based Machine Learning (Early Access): Table of Contents. A Guide to Bayesian Statistics - Count Bayesie. A Gentle Introduction to Bayes Theorem for Machine Learning. Last Updated on October 4, 2019. Bayes Theorem provides a principled way of calculating a conditional probability.

It is a deceptively simple calculation, yet it can easily be used to calculate the conditional probability of events where intuition often fails. Bayes Theorem also provides a way of thinking about the evaluation and selection of different models for a given dataset in applied machine learning. Choosing the model (or parameters) with the highest probability given the dataset is referred to as maximum a posteriori, or MAP for short, and provides a probabilistic framework for predictive modeling. In this post, you will discover Bayes Theorem for calculating conditional probabilities. After reading this post, you will know:

- An intuition for Bayes Theorem from the perspective of conditional probability.
- An intuition for Bayes Theorem from the perspective of machine learning.
- How to calculate conditional probability using Bayes Theorem for a real-world example.

Let’s get started.
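A classic case where intuition fails is a diagnostic test. The sketch below applies $P(H \mid E) = P(E \mid H)\,P(H) / P(E)$, expanding $P(E)$ with the law of total probability. The numbers (1% prevalence, 90% sensitivity, 5% false-positive rate) are hypothetical and chosen only to illustrate the calculation.

```python
def bayes_posterior(prior, likelihood, false_positive_rate):
    """P(H | E) = P(E | H) P(H) / P(E),
    where P(E) = P(E | H) P(H) + P(E | not H) P(not H)."""
    evidence = likelihood * prior + false_positive_rate * (1 - prior)
    return likelihood * prior / evidence

# Hypothetical test: 1% prevalence, 90% sensitivity, 5% false-positive rate.
posterior = bayes_posterior(prior=0.01, likelihood=0.9, false_positive_rate=0.05)
print(f"{posterior:.3f}")  # 0.154
```

Despite the "90% accurate" test, a positive result implies only about a 15% chance of having the condition, because true positives are swamped by false positives from the much larger healthy population.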

Accuracy, Precision, Recall or F1?

When I talk to organizations that are looking to bring data science into their processes, they often ask, “How do I get the most accurate model?”

I then ask in return, “What business challenge are you trying to solve using the model?” and get a puzzled look, because my question does not really answer theirs. I then need to explain why I asked before we start exploring whether accuracy is the be-all and end-all metric from which we should choose our “best” model.
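A quick way to see why accuracy alone can mislead is an imbalanced dataset. In this plain-Python sketch (all labels hypothetical), a useless model that always predicts the majority class beats a genuinely useful model on accuracy, while F1, computed here as $2TP / (2TP + FP + FN)$, ranks them the other way.

```python
def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def f1(y_true, y_pred):
    """F1 = 2TP / (2TP + FP + FN); defined as 0.0 here when there are no true positives."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0

# Hypothetical imbalanced test set: 95 negatives followed by 5 positives.
y_true = [0] * 95 + [1] * 5

# Baseline that always predicts the majority (negative) class.
always_negative = [0] * 100

# Hypothetical model: 6 false alarms among the negatives, finds 4 of the 5 positives.
some_model = [0] * 89 + [1] * 6 + [1] * 4 + [0] * 1

for name, y_pred in [("always negative", always_negative), ("some model", some_model)]:
    print(f"{name}: accuracy={accuracy(y_true, y_pred):.2f}, F1={f1(y_true, y_pred):.2f}")
```

Which metric to prefer depends on the business cost of false positives versus false negatives, which is exactly why the business question comes first.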

[1902.10730] Degenerate Feedback Loops in Recommender Systems. Principled Machine Learning: Practices and Tools for Efficient Collaboration. Machine learning projects are often harder than they should be.

We’re dealing with data and software, so it should be a simple matter of running the code, iterating through some algorithm tweaks, and after a while having a perfectly trained AI model. But fast forward three months: the training data might have been changed or deleted, and your understanding of the training scripts might be a vague memory of which one does what. Have you created a disconnect between the trained model and the process used to create it? How do you share work with colleagues for collaboration or replicate your results? As is true for software projects in general, what’s needed is better management of code versions and project assets. I myself am just beginning my journey to learn about machine learning tools.
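One small piece of managing project assets is recording exactly which data produced a model. The sketch below, which is an assumption of ours rather than the tooling the article describes, stores a SHA-256 hash of the training file next to the training parameters in a JSON file, so a later change or deletion of the data can be detected. The function names and the metadata layout are hypothetical.

```python
import hashlib
import json
import tempfile
from pathlib import Path

def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def write_run_metadata(data_path: Path, params: dict, out_path: Path) -> dict:
    """Record the exact data file and parameters behind a training run (hypothetical format)."""
    metadata = {
        "data_file": str(data_path),
        "data_sha256": file_sha256(data_path),
        "params": params,
    }
    out_path.write_text(json.dumps(metadata, indent=2))
    return metadata

# Usage with a throwaway file standing in for real training data.
with tempfile.TemporaryDirectory() as tmp:
    data = Path(tmp) / "train.csv"
    data.write_bytes(b"x,y\n1,2\n3,4\n")
    meta = write_run_metadata(data, {"learning_rate": 0.1}, Path(tmp) / "run.json")
    print(meta["data_sha256"][:12])
```

Committing such metadata files alongside the code ties each model back to its inputs; dedicated data-versioning tools build on the same content-hashing idea.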

Machine Learning Recipes with Josh Gordon. [1905.12787] The Theory Behind Overfitting, Cross Validation, Regularization, Bagging, and Boosting: Tutorial. 20 lines of code that will beat A/B testing every time.

A/B testing is used far too often, for something that performs so badly. It is defective by design: Segment users into two groups. Show the A group the old, tried and true stuff. Show the B group the new whiz-bang design with the bigger buttons and slightly different copy. After a while, take a look at the stats and figure out which group presses the button more often. In recent years, hundreds of the brightest minds of modern civilization have been hard at work not curing cancer. With a simple 20-line change to how A/B testing works, that you can implement today, you can always do better than A/B testing -- sometimes, two or three times better.

It can reasonably handle more than two options at once.

The multi-armed bandit problem takes its terminology from a casino. Like many techniques in machine learning, the simplest strategy is hard to beat.
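A simple bandit strategy is epsilon-greedy: most of the time show the option with the best observed success rate, and occasionally show a random one. The plain-Python simulation below is a hedged sketch under assumed conditions (three options with made-up click rates of 10%, 20% and 50%, ε = 0.1, 10,000 visitors); it is not the article's own code.

```python
import random

def epsilon_greedy(true_rates, n_rounds=10_000, epsilon=0.1, seed=0):
    """Simulate an epsilon-greedy bandit over arms whose success probabilities
    are unknown to the algorithm. Returns pulls and successes per arm."""
    rng = random.Random(seed)
    n_arms = len(true_rates)
    pulls = [0] * n_arms
    wins = [0] * n_arms
    for _ in range(n_rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: random arm
        else:
            # Exploit: best observed rate; untried arms score 1.0 so each gets tried.
            arm = max(range(n_arms), key=lambda a: wins[a] / pulls[a] if pulls[a] else 1.0)
        pulls[arm] += 1
        if rng.random() < true_rates[arm]:
            wins[arm] += 1
    return pulls, wins

pulls, wins = epsilon_greedy([0.10, 0.20, 0.50])
print(pulls, wins)
```

Unlike a fixed 50/50 A/B split, the traffic shifts toward the best option as evidence accumulates, while the ε share of exploration keeps checking the others and handles more than two options naturally.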

All you need to know about Linear Regression algebra to be interview-ready. Linear Regression (LR) is one of the simplest and most important algorithms in data science.

Whether you’re interviewing for a job in data science, data analytics, machine learning or quant research, you might end up having to answer specific algebra questions about LR. Here’s all you need to know to feel confident about your LR knowledge.
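Much of the LR algebra asked about in interviews revolves around the normal equations $X^\top X w = X^\top y$. Here is a minimal NumPy sketch on synthetic data (the true intercept 1, slope 2 and noise level are assumptions) that solves them directly, cross-checks with `np.linalg.lstsq`, and verifies the classic fact that OLS residuals are orthogonal to every column of the design matrix.

```python
import numpy as np

# Synthetic data (assumed for illustration): y = 1 + 2*x + Gaussian noise.
rng = np.random.default_rng(0)
x = rng.uniform(0, 10, size=50)
y = 1.0 + 2.0 * x + rng.normal(scale=1.0, size=50)

# Design matrix with a column of ones for the intercept.
X = np.column_stack([np.ones_like(x), x])

# Normal equations: w = (X^T X)^{-1} X^T y, solved without forming the inverse.
w_normal = np.linalg.solve(X.T @ X, X.T @ y)

# Same estimate via a least-squares solver (numerically more robust).
w_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

# Residuals are orthogonal to the columns of X: X^T (y - X w) = 0.
residuals = y - X @ w_normal
print(w_normal, w_lstsq, X.T @ residuals)
```

Because the ones column is part of X, orthogonality also implies that the residuals sum to zero whenever an intercept is fitted.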

A Tour of Machine Learning Algorithms. Last Updated on August 14, 2020. In this post, we will take a tour of the most popular machine learning algorithms. It is useful to tour the main algorithms in the field to get a feel for what methods are available. There are so many algorithms that it can feel overwhelming when algorithm names are thrown around and you are expected to just know what they are and where they fit.

I want to give you two ways to think about and categorize the algorithms you may come across in the field. The first is a grouping of algorithms by their learning style. The second is a grouping of algorithms by their similarity in form or function (like grouping similar animals together). Both approaches are useful, but we will focus on the grouping of algorithms by similarity and go on a tour of a variety of different algorithm types.

Random Forests for Complete Beginners - victorzhou.com. Machine Learning 101 – Onfido Tech. Dimensionality Reduction - Data Analytics Post. Dimensionality reduction: this term refers to any method for projecting data from a high-dimensional space into a lower-dimensional space. This operation is crucial in machine learning for fighting what is called the curse of dimensionality (the fact that high dimensions degrade the effectiveness of methods). Here the word “dimension” is used in the algebraic sense, i.e. the dimension of the vector space in which the feature vectors take their values.

Deep_Learning_Project. Sometimes, we tend to get lost in the jargon and confuse things easily, so the best way to go about this is to get back to basics.

Don’t forget the original premise of machine learning (and thus deep learning): if the input and output are related by a function $y = f(x)$, then even when we have $x$, there is no way to know $f$ exactly unless we know the underlying process itself. However, machine learning gives you the ability to approximate $f$ with a function $g$, and the process of trying out multiple candidates to identify the function $g$ that best approximates $f$ is called machine learning. OK, that was machine learning; how is deep learning different?
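The idea of trying out candidate functions $g$ to approximate an unknown $f$ can be sketched in a few lines of NumPy. Everything here is an assumption for illustration: $f$ is taken to be $\sin$ (only ever seen through noisy samples), the candidates are polynomials of degree 0 to 6 fitted by least squares, and the best candidate is chosen by its error on held-out validation data.

```python
import numpy as np

# Hypothetical "unknown process" f; in practice we only observe noisy samples of it.
def f(x):
    return np.sin(x)

rng = np.random.default_rng(0)
x_train = rng.uniform(0, 3, size=30)
y_train = f(x_train) + rng.normal(scale=0.1, size=30)
x_val = rng.uniform(0, 3, size=30)
y_val = f(x_val) + rng.normal(scale=0.1, size=30)

# Candidate family: polynomials g of degree 0..6, each fitted by least squares.
val_errors = {}
for degree in range(7):
    coeffs = np.polyfit(x_train, y_train, degree)
    val_errors[degree] = float(np.mean((np.polyval(coeffs, x_val) - y_val) ** 2))

# The selected g is the candidate with the lowest validation error.
best_degree = min(val_errors, key=val_errors.get)
print(best_degree, val_errors[best_degree])
```

In this framing, deep learning mainly enlarges the candidate family: instead of polynomials, $g$ ranges over functions representable by a neural network.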

Deep learning simply tries to expand the kinds of functions that can be approximated using the above-mentioned machine learning paradigm. If you want to know the mathematics behind it, read about VC dimension and how adding layers to a network affects it.

How to choose machine learning algorithms. Dataset exploration: Boston house pricing - Neural Thoughts. Let's start with something basic: data. Since in machine learning we solve problems by learning from data, we need to prepare and understand our data well. This time we explore the classic Boston house pricing dataset using Python and a few great libraries.

We'll learn the big picture of the process and a lot of small everyday tips.

[D] How to build a Portfolio as a Machine Learning/Data Science Engineer in industry? : MachineLearning. Pmigdal comments on [D] What would you include in a first ML course?

Big data and machine learning for Businesses. ML in JavaScript. Spotify’s Discover Weekly: How machine learning finds your new music. Applied Machine Learning: The Less Confusing Guide. The Hitchhiker’s Guide to Machine Learning in Python. Machine Learning and Artificial Intelligence. Course ENSAE: Machine learning in computational biology. Jean-Philippe Vert, ENSAE, Spring 2017. Modern technologies like DNA microarrays or high-throughput sequencing are revolutionising biology and medical research. By allowing the collection of large amounts of measurements at the molecular level on living organisms, they pave the way to a quantitative and rational analysis of biological systems.

Machine Learning. Ideas on interpreting machine learning. The AI Hierarchy of Needs – Hacker Noon. IBM Research: Machine learning applications. Machine Learning Crash Course: Part 4 - The Bias-Variance Dilemma · ML@B. By Daniel Geng and Shannon Shih, 13 Jul 2017. Machine Learning with JavaScript: Part 1 – Hacker Noon. 10 Machine Learning Examples in JavaScript. Machine learning libraries are becoming faster and more accessible with each passing year, showing no signs of slowing down. While traditionally Python has been the go-to language for machine learning, nowadays neural networks can run in any language, including JavaScript!

The web ecosystem has made a lot of progress in recent times and although JavaScript and Node.js are still less performant than Python and Java, they are now powerful enough to handle many machine learning problems. Web languages also have the advantage of being super accessible - all you need to run a JavaScript ML project is your web browser.

How to get started with Machine Learning on Bluemix. Machine Learning Exercises In Python, Part 1. A Course in Machine Learning. Machine Learning is Fun!

How to get started with machine learning?

Machine learning algorithm cheat sheet. A Visual Introduction to Machine Learning. Lian Li: Machine Learning with Node.js - JSUnconf 2016. Applying the magic of neural networks: Machine Learning with nodejs // slidr.io. A Few Useful Things to Know about Machine Learning.