Part 15 - For More Info about AI

Part 14 - The Chinese Room Objection

By Jack Copeland © Copyright B.J. Copeland, May 2000

One influential objection to strong AI, the Chinese room objection, originates with the philosopher John Searle. Searle claims to be able to prove that no computer program, not even one from the far-distant future, could possibly think or understand. His alleged proof rests on the fact that every operation a computer is able to carry out can equally well be performed by a human being working with paper and pencil: given the list of instructions making up a computer program, a human being can in principle obey each instruction by hand. Searle imagines himself, knowing no Chinese, following such a program for answering questions written in Chinese. He could produce appropriate Chinese replies while understanding nothing, and so, he argues, neither does a computer running the same program. Few accept Searle's objection, but there is little agreement as to exactly what is wrong with it.

Part 13 - Is Strong AI Possible?

The ongoing success of applied Artificial Intelligence and of cognitive simulation seems assured. Strong AI, however, which aims to duplicate human intellectual abilities, remains controversial. The difficulty of "scaling up" AI's relatively modest achievements so far cannot be overstated. Yet this lack of substantial progress may simply testify to the difficulty of strong AI, not to its impossibility.

Part 12 - Chess

Some of AI's most conspicuous successes have been in chess, its oldest area of research. In 1945 Turing predicted that computers would one day play "very good chess", an opinion echoed in 1949 by Claude Shannon of Bell Telephone Laboratories, another early theoretician of computer chess. By 1958 Simon and Newell were predicting that within ten years the world chess champion would be a computer, unless barred by the rules.

Part 11 - Nouvelle AI. Part 10 - Connectionism. By Jack Copeland © Copyright B.J.

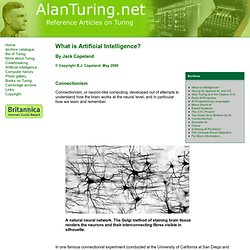

Copeland, May 2000 Connectionism Connectionism, or neuron-like computing, developed out of attempts to understand how the brain works at the neural level, and in particular how we learn and remember. A natural neural network. In one famous connectionist experiment (conducted at the University of California at San Diego and published in 1986), David Rumelhart and James McClelland trained a network of 920 artificial neurons to form the past tenses of English verbs. Part 9 - Top-Down AI vs. Bottom-up AI. By Jack Copeland © Copyright B.J.

Copeland, May 2000 Top-Down AI vs Bottom-Up AI Turing's manifesto of 1948 distinguished two different approaches to AI, which may be termed "top down" and "bottom up". Part 8 - The CYC Project. By Jack Copeland © Copyright B.J.

Part 7 - Expert Systems

An expert system is a computer program dedicated to solving problems and giving advice within a specialised area of knowledge. A good system can match the performance of a human specialist. The basic components of an expert system are a "knowledge base" or KB, which stores the specialist knowledge (often in the form of if-then rules), and an "inference engine", which applies that knowledge to the facts of a particular case to draw conclusions.
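To make the division of labour between the two components concrete, here is a minimal Python sketch. It assumes the KB is a list of if-then rules and that the inference engine uses simple forward chaining; that is one possible strategy, not the method of any system named in this article. The rules and fact names (`no_crank`, `battery_flat` and so on) are hypothetical, invented for illustration.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule pairs a set of conditions with the conclusion it licenses.
Rule = Tuple[FrozenSet[str], str]

# Knowledge base: hypothetical car-fault rules, invented for illustration.
KNOWLEDGE_BASE: List[Rule] = [
    (frozenset({"no_crank", "headlights_dim"}), "battery_flat"),
    (frozenset({"battery_flat"}), "advise_jump_start"),
    (frozenset({"no_crank", "headlights_bright"}), "check_starter_motor"),
]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Inference engine: repeatedly fire every rule whose conditions are all
    known, adding its conclusion, until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire the rule only if all its conditions hold and it adds something new.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    derived = forward_chain({"no_crank", "headlights_dim"}, KNOWLEDGE_BASE)
    print(sorted(derived))
    # ['advise_jump_start', 'battery_flat', 'headlights_dim', 'no_crank']
```

Keeping the rules as plain data means the KB can be extended without touching the engine. Forward chaining reasons from known facts towards conclusions; many real expert systems, MYCIN among them, instead reasoned backward from a goal.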

In 1965 the AI researcher Edward Feigenbaum and the geneticist Joshua Lederberg, both of Stanford University, began work on Heuristic Dendral (later renamed DENDRAL), the high-performance program that became the model for much of the ensuing work on expert systems. Work on MYCIN, an expert system for treating blood infections, began at Stanford in 1972. Janice Aikins' medical expert system Centaur (1983) was designed to determine the presence and severity of lung disease in a patient by interpreting measurements from pulmonary function tests.

Fuzzy logic

Part 6 - Microworld AI

The real world is full of distracting and obscuring detail: generally, science progresses by focussing on artificially simple models of reality (in physics, frictionless planes and perfectly rigid bodies, for example). In 1970 Marvin Minsky and Seymour Papert, of the MIT AI Laboratory, proposed that AI research should likewise focus on developing programs capable of intelligent behaviour in artificially simple situations known as micro-worlds. An early success of the micro-world approach was SHRDLU, written by Terry Winograd of MIT (details of the program were published in 1972).

A user could put questions to SHRDLU such as "Had you touched any pyramid before you put one on the green block?" Although SHRDLU was initially hailed as a major breakthrough, Winograd soon announced that the program was in fact a dead end. Roger Schank and his group at Yale applied a form of the micro-world approach to language processing.

Part 5 - AI Programming Languages

In the course of their work on the Logic Theorist and GPS, Newell, Simon and Shaw developed their Information Processing Language, or IPL, a computer language tailored for AI programming. At the heart of IPL was a highly flexible data structure they called a "list". In 1960 John McCarthy combined elements of IPL with elements of the lambda calculus, a powerful logical apparatus dating from 1936, to produce the language he called LISP (from LISt Processor).
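Why the list is so flexible can be sketched in Python by modelling it roughly the way LISP does: a chain of two-field "cons" cells, each holding a value and a link to the rest of the list. The names `cons`, `car` and `cdr` follow LISP convention, but the Python class and helper functions (`from_items`, `to_python`) are illustrative assumptions, not IPL or LISP code.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class Cons:
    """One list cell: a value (car) and a link to the rest of the list (cdr)."""
    car: Any
    cdr: Optional["Cons"]


def cons(head: Any, tail: Optional[Cons]) -> Cons:
    """Put head in front of tail; the tail is shared, not copied."""
    return Cons(head, tail)


def from_items(*items: Any) -> Optional[Cons]:
    """Build a list from Python values; None stands for the empty list (NIL)."""
    result: Optional[Cons] = None
    for item in reversed(items):
        result = cons(item, result)
    return result


def to_python(lst: Any) -> Any:
    """Convert a (possibly nested) cons list into nested Python lists for display."""
    if lst is not None and not isinstance(lst, Cons):
        return lst  # an atom, such as a number or a string
    out = []
    while lst is not None:
        out.append(to_python(lst.car))
        lst = lst.cdr
    return out


if __name__ == "__main__":
    # An element may itself be a list, so one structure can represent a tree,
    # here a symbolic expression.
    expr = from_items("plus", 1, from_items("times", 2, 3))
    print(to_python(expr))  # ['plus', 1, ['times', 2, 3]]

    # Adding to the front reuses the existing cells rather than copying them.
    longer = cons("minus", expr)
    print(longer.cdr is expr)  # True
```

Because cells can point to other lists, the same structure serves for sequences, trees and symbolic expressions alike, which is the kind of flexibility the paragraph above attributes to IPL's lists.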

The logic programming language PROLOG (from PROgrammation en LOGique) was conceived by Alain Colmerauer at the University of Marseilles, where the language was first implemented in 1973. Researchers at the Institute for New Generation Computer Technology in Tokyo have used PROLOG as the basis for sophisticated logic programming languages.

Part 4 - Early AI Programs

The first working AI programs were written in the UK by Christopher Strachey, Dietrich Prinz, and Anthony Oettinger. Strachey was at the time a teacher at Harrow School and an amateur programmer; he later became Director of the Programming Research Group at Oxford University. Strachey chose the board game of checkers (or draughts) as the domain for his experiment in machine intelligence. Prinz's chess program, also written for the Ferranti Mark I, first ran in November 1951. Turing started to program his Turochamp chess-player on the Ferranti Mark I but never completed the task.

Machine learning

Part 3 - Alan Turing and the Origins of AI

The earliest substantial work in the field was done by the British logician and computer pioneer Alan Mathison Turing.

In 1935, at Cambridge University, Turing conceived the modern computer in the form of an abstract universal computing machine, now known as the universal Turing machine. During the Second World War he was a leading cryptanalyst at the Government Code and Cypher School, Bletchley Park, where the Allies were able to decode a large proportion of the Wehrmacht's radio communications. At Bletchley Park Turing illustrated his ideas on machine intelligence by reference to chess. In London in 1947 he gave what was, so far as is known, the earliest public lecture to mention computer intelligence, saying "What we want is a machine that can learn from experience", and adding that the "possibility of letting the machine alter its own instructions provides the mechanism for this".

Part 2 - Strong AI, Applied AI and CS

Research in AI divides into three categories: "strong" AI, applied AI, and cognitive simulation or CS.

Part 1 - What is AI?

Artificial Intelligence (AI) is usually defined as the science of making computers do things that require intelligence when done by humans. AI has had some success in limited, or simplified, domains.