Camara lucida [en]

Kinect Physics Tutorial for Processing.

Chparsons/ofxCamaraLucida.

Geometric Operations - Affine Transformation. Common Names: Affine Transformation. Brief Description: In many imaging systems, detected images are subject to geometric distortion introduced by perspective irregularities wherein the position of the camera(s) with respect to the scene alters the apparent dimensions of the scene geometry.

Applying an affine transformation to a uniformly distorted image can correct for a range of perspective distortions by transforming the measurements from the ideal coordinates to those actually used. (For example, this is useful in satellite imaging where geometrically correct ground maps are desired.) Affine transformations form an important class of linear 2-D geometric transformations that map variables (e.g. pixel intensity values located at position (x1, y1) in an input image) into new variables (e.g. (x2, y2) in an output image) by applying a linear combination of translation, rotation, scaling and/or shearing (i.e. non-uniform scaling in some directions) operations.
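The mapping described above can be sketched in a few lines of Python. This is a minimal illustration, not the tutorial's own code: the coefficient names (`a11` to `a22`, `b1`, `b2`) and the helper functions are assumptions chosen for the example, and the rotation is taken as counter-clockwise in standard mathematical axes (y pointing up), which may differ from the convention used for image coordinates.

```python
import math


def affine(a11, a12, a21, a22, b1, b2):
    """Build a mapping (x1, y1) -> (x2, y2) with
    x2 = a11*x1 + a12*y1 + b1 and y2 = a21*x1 + a22*y1 + b2.
    The coefficient names are illustrative, not from the source."""
    def apply(point):
        x1, y1 = point
        return (a11 * x1 + a12 * y1 + b1,
                a21 * x1 + a22 * y1 + b2)
    return apply


def translation(tx, ty):
    # Identity linear part, offset only.
    return affine(1, 0, 0, 1, tx, ty)


def scaling(sx, sy):
    # Independent scale factors along each axis, no offset.
    return affine(sx, 0, 0, sy, 0, 0)


def rotation(theta):
    # Counter-clockwise rotation by theta radians (assumed convention).
    c, s = math.cos(theta), math.sin(theta)
    return affine(c, -s, s, c, 0, 0)


def shear_x(k):
    # Shift x in proportion to y.
    return affine(1, k, 0, 1, 0, 0)


def compose(*transforms):
    """Apply the given transforms from left to right."""
    def apply(point):
        for transform in transforms:
            point = transform(point)
        return point
    return apply


if __name__ == "__main__":
    origin = (0.0, 0.0)
    quarter_turn = rotation(math.pi / 2)
    shift = translation(1.0, 0.0)
    # The order of composition matters: these two results differ.
    print(compose(shift, quarter_turn)(origin))
    print(compose(quarter_turn, shift)(origin))
```

Composing a translation with a rotation in the two possible orders sends the origin to two different points, which shows why the order of combined transformations is significant.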

How It Works. Similarly, pure scaling is: [...] pairs. [...]

Kinect Sensor. The Kinect Services support the following features: depth image including Player Index, RGB image, tilt (get and set), microphone array (not in simulation), and skeleton tracking (not in simulation). You can specify the resolution of the Depth and RGB cameras independently via a config file, as well as the depth camera mode. The config file also specifies whether you want skeleton tracking to be performed or not.

If you do not use the skeleton data, you should not track it because there is a performance overhead. You cannot turn skeleton tracking on once the service is running, so it must be selected in the config file.

Kinect depth image.

Kinect: Simple way to get the Depth Stream (Source Code). On February 1st, Microsoft released the first official version of the Kinect SDK, and it is better than ever!

If you are starting to play with this cool “toy”, you are probably running into many problems along the way. I have been struggling with WPF and its way of painting stuff on the screen for a while now, and these two things are quite basic when we talk about the Kinect. So this afternoon, my friend Jesús Domínguez gave me a call asking for my old Kinect projects. He had just bought a new Kinect and wanted to use the latest SDK. I have always been interested in this device, so we spent this cold afternoon trying to simplify Microsoft’s code in order to give you the simplest, shortest, and easiest source code you can use to get the Depth stream from the Kinect.

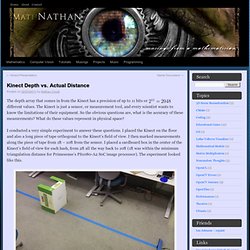

You can download the full source code from this link:

Here is all source code explained: namespace WPFKinectTest { /// <summary> /// Interaction logic for Window1.xaml /// </summary> public Window1() { InitializeComponent(); ///Authors: null);

Kinect Depth vs. Actual Distance. The depth array that comes in from the Kinect has a precision of up to 11 bits, or 2^11 = 2048 different values.

The Kinect is just a sensor, or measurement tool, and every scientist wants to know the limitations of their equipment. So the obvious questions are: what is the accuracy of these measurements? What do these values represent in physical space? I conducted a very simple experiment to answer these questions.

Experiment Layout. For each measurement I saved the RGB image, a color-mapped depth image, and a text file containing the raw values from the depth array of the ROI containing the box.

My findings tell me that the Kinect’s depth measurement as a function of distance is not linear; it appears to follow a logarithmic scaling. No interpolation or further calculation was done on the graph above; it is simply a line graph.

Getting started with Microsoft Kinect SDK - Depth. How to use the raw depth data that the Kinect provides to display and analyze a scene and create a live histogram of depth.
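As a rough illustration of the histogram idea, the sketch below bins one frame of raw depth readings into equal-width buckets. It is an assumption-laden example rather than the article's code: `depth_histogram` and its parameters are hypothetical names, the input is a plain list of integers rather than anything returned by the Kinect SDK, and the default range of 2048 values assumes 11-bit raw readings.

```python
def depth_histogram(raw_depths, bins=32, max_value=2048):
    """Count raw depth readings into `bins` equal-width buckets
    covering [0, max_value). Readings outside that range are skipped.
    max_value=2048 assumes 11-bit raw values (an assumption)."""
    if bins <= 0 or max_value <= 0:
        raise ValueError("bins and max_value must be positive")
    counts = [0] * bins
    for value in raw_depths:
        if 0 <= value < max_value:
            # Integer arithmetic keeps the bin index in 0..bins-1.
            counts[value * bins // max_value] += 1
    return counts


def render(counts, width=40):
    """Turn bucket counts into text bars, scaled to the largest bucket."""
    peak = max(counts) if counts and max(counts) > 0 else 1
    return ["#" * (count * width // peak) for count in counts]


if __name__ == "__main__":
    # Made-up sample frame, for illustration only.
    frame = [500, 510, 515, 900, 905, 1200, 2047, 2047]
    for bar in render(depth_histogram(frame, bins=8)):
        print(bar)
```

For a live display, the same function would be called once per incoming frame and the bars redrawn each time; how frames are obtained depends on the SDK version and is outside this sketch.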

UPDATE: A new version of the entire series for SDK 1.0 is being prepared and should be published soon. The first part is Getting started with Windows Kinect SDK 1.0.

This is the second installment of a series on getting started with the Kinect SDK. In the previous article, Getting started with Microsoft Kinect SDK, we covered how to power your Kinect, download and install the SDK, and use the video camera. Note: this tutorial uses Beta 2 of the SDK.

Neil Dodgson. Neil Dodgson is Professor of Graphics & Imaging at the University of Cambridge and Deputy Head of the Computer Laboratory.

With Peter Robinson and Alan Blackwell, he runs the Graphics & Interaction Research Group (Rainbow).

E-mail: nad (at) cl.cam.ac.uk
Office: SS10 in the William Gates Building
Telephone: +44-1223-334417
Laboratory address: see main contacts page

General information: Graphics & Interaction Research Group. Research: Publications, Subdivision surfaces, Aesthetic imaging, Stereoscopic 3D displays, PhD Students. Teaching: Research Skills, Advanced Graphics. Emmanuel College.