Hardware info. Laser illuminator: the illuminator uses an 830 nm laser diode.

There is no modulation - output level is constant. Output power measured at the illuminator output is around 60mW (Using Coherent Lasercheck). The laser is temperature stabilised with a small peltier element mounted between the illuminator and the aluminium mounting plate. The depth sensing appears somewhat sensitive to the relative position of the illuminator and sensor. Futuristic Push buttons. Accuracy / resolution of depth data? - OpenKinect. I Heart Robotics: Limitations of the Kinect. Or "Why do we still need other sensors if the Kinect is so awesome?

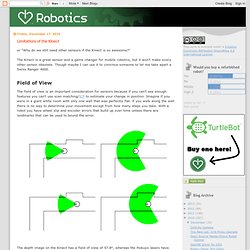

" The Kinect is a great sensor and a game changer for mobile robotics, but it won't make every other sensor obsolete. Though maybe I can use it to convince someone to let me take apart a Swiss Ranger 4000. Field of View The field of view is an important consideration for sensors because if you can't see enough features you can't use scan matching/ICP to estimate your change in position. Imagine if you were in a giant white room with only one wall that was perfectly flat. if you walk along the wall there is no way to determine your movement except from how many steps you take. The depth image on the Kinect has a field of view of 57.8°, whereas the Hokuyo lasers have between 240° and 270°, and the Neato XV-11's LIDAR has a full 360° view.

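To put the field-of-view figures quoted above into perspective, here is a minimal sketch. The helper names `view_width` and `headings_for_full_circle` are hypothetical and not taken from any of the linked pages. It computes how wide a strip a sensor sees at a given distance, and how many distinct headings a robot would need to cover a full 360° with no overlap between views.

```python
import math


def view_width(fov_deg, distance_m):
    """Width of the visible strip at distance_m; only valid for 0 < fov < 180 degrees."""
    if not 0 < fov_deg < 180:
        raise ValueError("formula only holds for 0 < fov < 180 degrees")
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def headings_for_full_circle(fov_deg):
    """Minimum number of non-overlapping headings needed to see all 360 degrees."""
    return math.ceil(360.0 / fov_deg)


# Field-of-view values as quoted in the excerpt above.
SENSORS = {
    "Kinect depth image": 57.8,
    "Hokuyo (narrow end)": 240.0,
    "Hokuyo (wide end)": 270.0,
    "Neato XV-11 LIDAR": 360.0,
}

for name, fov in SENSORS.items():
    print(f"{name}: {fov} deg, {headings_for_full_circle(fov)} heading(s) for full coverage")

# The Kinect sees a strip a little over 2 m wide at a distance of 2 m.
print(f"Kinect strip width at 2 m: {view_width(57.8, 2.0):.2f} m")
```

Under the no-overlap assumption the Kinect needs seven headings where a 360° LIDAR needs one. Real scan matching needs overlap between views, so the practical number would be higher.
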
In addition to being able to view more features, the wider field of view also allows the robot to efficiently build a map without holes. Range Environmental Computation and Thermodynamics. Compiling Kinect Fusion on Kubuntu 11.10. Currently I am writing my master's thesis using the Kinect and ROS.

I stumbled across the Kinect Fusion video last year and recently I found out it's open source and can be downloaded from the Point Cloud Library SVN. To build the Point Cloud Library under Kubuntu on your own, you will need to install a few additional things. System requirements: NVIDIA graphics card with CUDA cores. Master Thesis: Online Symbol Recognition Through Data Fusion of a 3D- and a Color Camera for an Autonomous Robot. Recently I finished my master's thesis, which was about using the Kinect to do sign recognition.

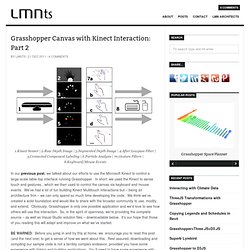

(sign on the right is out of range) The goal was to improve recognition speed by searching for symbols only on suitable surfaces (filtered by arrangement, distance, size and place). I wrote most of the algorithms on my own, except some stuff I used from OpenCV (e.g. warp perspective) and from ROS (gathering the images). The most interesting stuff begins in chapter 5. The document can be found here. Grasshopper Canvas with Kinect Interaction: Part 2. 1. Kinect Sensor | 2. Raw Depth Image | 3. Segmented Depth Image | 4. After Lowpass Filter | 5. Connected Component Labeling | 6. Particle Analysis | 7n. Gesture Filters | 8. Keyboard/Mouse Events. In our previous post, we talked about our efforts to use the Microsoft Kinect to control a large-scale table-top interface running Grasshopper.

In short: we used the Kinect to sense touch and gestures…which we then used to control the canvas via keyboard and mouse events. We’ve had a lot of fun building Kinect Multitouch Interactions but – being an architecture firm – we can only spend so much time developing the code. We think we’ve created a solid foundation and would like to share it with the broader community to use, modify, and extend. Obviously, Grasshopper is only one possible application and we’d love to see how others will use this interaction.
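
The pipeline stages listed in the Grasshopper excerpt above (segmented depth image, connected component labeling, particle analysis) can be illustrated with a small pure-Python sketch. This is not the authors' code: the function names, the depth band, and the tiny sample frame are all assumptions made for illustration, and the lowpass filter stage is left out for brevity.

```python
from collections import deque


def segment(depth_mm, near_mm, far_mm):
    """Keep only pixels whose depth lies inside the band [near_mm, far_mm]."""
    return [[near_mm <= d <= far_mm for d in row] for row in depth_mm]


def label_components(mask):
    """4-connected component labeling via breadth-first flood fill."""
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or labels[r][c]:
                continue
            count += 1
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not labels[ny][nx]):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count


def blob_sizes(labels, count):
    """Particle analysis reduced to its simplest form: pixel count per blob."""
    sizes = [0] * (count + 1)
    for row in labels:
        for value in row:
            sizes[value] += 1
    return sizes[1:]  # drop the background bucket (label 0)


# Hypothetical 3x5 depth frame in millimetres: two fingertips near 900 mm
# hovering over a table surface at 1500 mm.
depth = [
    [900, 900, 1500, 1500, 1500],
    [900, 1500, 1500, 905, 1500],
    [1500, 1500, 1500, 910, 905],
]

mask = segment(depth, near_mm=880, far_mm=920)
labels, count = label_components(mask)
sizes = blob_sizes(labels, count)
print(f"{count} blobs with sizes {sizes}")
```

A gesture filter would then work on per-blob properties such as size and centroid over time. Discarding blobs below a minimum size is a common way to suppress depth noise.
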

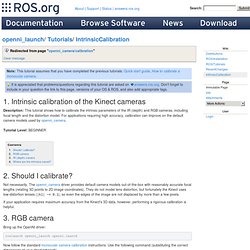

BE WARNED: Before you jump in and try this at home, we encourage you to read this post (and the next one) to get a sense of how we went about this. Openni_camera/calibration. Intrinsic calibration of the Kinect cameras Description: This tutorial shows how to calibrate the intrinsic parameters of the IR (depth) and RGB cameras, including focal length and the distortion model.

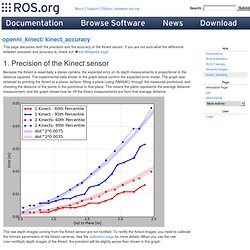

Openni_kinect/kinect_accuracy. This page discusses both the precision and the accuracy of the Kinect sensor.

If you are not sure what the difference between precision and accuracy is, check out this Wikipedia page. Precision of the Kinect sensor: Because the Kinect is essentially a stereo camera, the expected error on its depth measurements is proportional to the distance squared. The experimental data shown in the graph below confirm the expected error model. Kinect_calibration. Camera_calibration/Tutorials/MonocularCalibration. Description: This tutorial covers using the camera_calibration package's cameracalibrator.py node to calibrate a monocular camera with a raw image over ROS. Keywords: monocular, camera, calibrate. Tutorial Level: BEGINNER. Before Starting: Make sure that you have the following: a large checkerboard with known dimensions. Compiling: Start by getting the dependencies and compiling the driver. Kinect Alternative for Developers (XTION Pro). I Heart Robotics: Progress with RGB-D Sensors. RSS 2011 Workshop on RGB-D Cameras. Kinect with ROS.
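
The quadratic error model mentioned in the kinect_accuracy excerpt above follows from the stereo relation z = f·b/d. Differentiating with respect to the disparity d gives an error magnitude of |Δz| ≈ z²/(f·b)·Δd. The sketch below evaluates this. The default focal length (580 px), baseline (7.5 cm), and disparity step (1/8 px) are commonly quoted approximations for the Kinect. Treat them as assumptions, not as values from the linked page.

```python
def depth_sigma(z_m, focal_px=580.0, baseline_m=0.075, disparity_sigma_px=0.125):
    """Expected depth error (metres) at range z_m for a stereo-style sensor.

    Derived from z = f * b / d, so |dz/dd| = z**2 / (f * b).
    All default parameters are assumed approximate Kinect values.
    """
    return (z_m ** 2) / (focal_px * baseline_m) * disparity_sigma_px


for z in (1.0, 2.0, 4.0):
    print(f"z = {z:.1f} m -> expected error ~ {depth_sigma(z) * 1000:.1f} mm")
```

Whatever parameter values are used, doubling the distance quadruples the expected error. That is the property the excerpt says the experimental data confirm.
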

ROS Contest Projects. Matt's Webcorner - Kinect Sensor Programming. The Kinect is an attachment for the Xbox 360 that combines four microphones, a standard RGB camera, a depth camera, and a motorized tilt.

Although none of these individually are new, previously depth sensors have cost over $5000, and the comparatively cheap $150 price tag for the Kinect makes it highly accessible to hobbyists and academics. This has spurred a lot of work into creating functional drivers for many operating systems so the Kinect can be used outside of the Xbox 360. You can find a decent overview of the current state of people working on Kinect here. I decided to hack around with the Kinect partially because Pat Hanrahan bought us a Kinect and partially because I wanted to see if it had a good enough resolution to be used for my scene reconstruction algorithm.

ROS (Robot Operating System) and the Kinect. One of my master courses is called autonomous systems.

It's a course, where we do stuff with the Pioneer Robot from MobileRobots and ROS (Robot Operating System) from Willow Garage. There are groups with 2 to 4 people. Each of them got a topic. KinectHacks.net. Kinect connector pinout. Openni_kinect.

Kinect Calibration. OpenKinect. TheTechJournal.com. Introducing OpenNI. OpenNI/OpenNI - GitHub. OpenNI Downloads. OpenNI discussions - [OpenNI-dev] Passing the output buffer to the generators. Kinect tear down - I FixIt Yourself. [V-Sido] Control the Humanoid Robot by Kinect. Microsoft Kinect somatosensory game device full disassembly report _Microsoft XBOX - waybeta.

Der Fall Kinect - Page 1. As Kinect's release in November draws closer, developers are talking, both openly and off the record, about the new motion-sensing system.

They discuss what it can do, what it cannot do, and what we can expect from the system in the future. Microsoft itself is stepping up its marketing efforts. This week two articles appeared (one from T3 and another, more impressive one from Gizmodo) that gave us a first look inside the Kinect camera and supplied enough technical information to counter the rather unflattering "EyeToy HD" accusations the device has faced online since E3: Kinect is a state-of-the-art consumer motion-capture device with voice recognition and biometric person recognition. And Microsoft wants you to know that.

Video: Alternative uses for Microsoft Kinect.