5 Minute Overview of Pentaho Business Analytics. Mondrian - Interactive Statistical Data Visualization in JAVA. MESI. Many Eyes. Data warehouse. Entity–attribute–value model. Entity–attribute–value model (EAV) is a data model to describe entities where the number of attributes (properties, parameters) that can be used to describe them is potentially vast, but the number that will actually apply to a given entity is relatively modest.

In mathematics, this model is known as a sparse matrix. EAV is also known as object–attribute–value model, vertical database model and open schema. OpenReports. Jasperreports : JasperForge. JasperReports. It can be used in Java-enabled applications, including Java EE or web applications, to generate dynamic content.

It reads its instructions from an XML or .jasper file. JasperReports is part of the Lisog open source stack initiative. Features[edit] Public Data Explorer. DSPL Tutorial - DSPL: Dataset Publishing Language - Google Code. DSPL stands for Dataset Publishing Language.

Datasets described in DSPL can be imported into the Google Public Data Explorer, a tool that allows for rich, visual exploration of the data. Hans Rosling shows the best stats you've ever seen. Business analytics and business intelligence leaders - Pentaho. 03. Hello World Example. PLEASE NOTE: This tutorial is for a pre-5.0 version PDI.

If you are using PDI 5.0 or later, please use the following tutorial instead: Getting Started with PDI. Although this will be a simple example, it will introduce you to some of the fundamentals of PDI: Working with the Spoon tool Transformations Steps and Hops Predefined variables Previewing and Executing from Spoon Executing Transformations from a terminal window with the Pan tool. Loop over fields in a MySQL table to generate csv files. Dynamic SQL Queries in PDI a.k.a. Kettle. Email When doing ETL work every now and then the exact SQL query you want to execute depends on some input parameters determined at runtime.

This requirement comes up most frequently when SELECTing data. This article shows the techniques you can employ with the “Table Input” step in PDI to make it execute dynamic or parametrized queries. The samples you can get in the downloads section are self-contained and they use an in-memory database, so they work out of the box. Just download and run the samples.

Binding Field Values to the SQL Query The first approach to executing dynamic queries will be familiar to many readers who are used to executing SQL statements from code: you start by writing a skeleton of your query that contains placeholders. Slowly changing dimension. For example, you may have a dimension in your database that tracks the sales records of your company's salespeople.

Creating sales reports seems simple enough, until a salesperson is transferred from one regional office to another. How do you record such a change in your sales dimension? You could calculate the sum or average of each salesperson's sales, but if you use that to compare the performance of salespeople, that might give misleading information. If the salesperson was transferred and used to work in a hot market where sales were easy, and now works in a market where sales are infrequent, his/her totals will look much stronger than the other salespeople in their new region. Or you could create a second salesperson record and treat the transferred person as a new sales person, but that creates problems. Dealing with these issues involves SCD management methodologies referred to as Type 0 through 6. Type 0[edit] The Type 0 method is passive. Power Your Decisions With SAP Crystal Solutions. OpenMRS: ETL/Data Warehouse/Reporting. ETL Process.

The ETL (Extract, Transform, Load) process is comprised of several steps and its architecture depends on the specific data warehouse system.

In this post, an outline of the process will be given along with choices that are/could be used for OpenMRS. Data sources, staging area and data targets Data sources: The only data source for the moment is the OpenMRS database.Staging area: This refers to an intermediate area between the source database and the DW database. This is where the extracted data from the source systems are stored and manipulated through transformations.

At this time, there is no need for a sophisticated staging area, other than a few independent tables (called orphans), which are stored in the DW database.Data Targets: The DW database. Extraction. Another approach for reporting: A Data Warehouse System. Why would we want to build a data warehouse system?

We might consider doing this for some of the following reasons: An overview of the data warehouse How can the above requirements be met? What are the main components of such a system? DW Data Model. This post is going to describe the data model for the OpenMRS data warehouse.

It will be edited frequently to add documentation for the model and to modify it. Star Schemas. Building Reports (Step By Step Guide) - Documentation - OpenMRS Wiki. You can create three different types of reports: a Period Indicator Report, a Row-Per-Patient Report, or a Custom Report (Advanced).

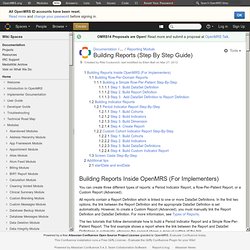

All reports contain a Report Definition which is linked to one or more DataSet Definitions. In the first two options, the link between the Report Definition and the appropriate DataSet Definition is set automatically. However, to create a Custom Report (Advanced), you must manually link the Report Definition and DataSet Definition. For more information, see Types of Reports. Openmrs-reporting-etl-olap - A data warehouse system for OpenMRS, based on other open source projects. Pentaho and OpenMRS Integration. Pentaho ETL and Designs for Dimensional Modeling (Design Page, R&D) - Projects - OpenMRS Wiki. Abstract OpenMRS has few tools in place allowing for easier analysis of concept, patient, location, encounter or visit data in an aggregated, dimensional manner.

OLAP (Online Analytical Processing) is one technology encompassed under the umbrella of business intelligence that facilitates rapid answers to multi-dimensional querying of data. Click on the image at right for a simple sample of what dimensional modeling looks like at a high level. This functionality extends beyond traditional reporting in several ways: The community edition of the Pentaho Business Intelligence suite includes Pentaho Analysis, an OLAP engine (specifically ROLAP) project named Mondrian. The project will include ongoing development of a set of prototype ETL transformations and models in order to flesh out detailed requirements and validate design decisions.

Project Champions Andrew Kanter Burke Mamlin Objectives There will be two sets of parallel objectives defined. Cohort Queries as a Pentaho Reporting Data Source - Projects - OpenMRS Wiki. Skip to end of metadataGo to start of metadata Abstract Pentaho Reporting Community Edition (CE) includes the Pentaho Report Designer, Pentaho Reporting Engine, Pentaho Reporting SDK and the common reporting libraries shared with the entire Pentaho BI Platform. This suite of open-source reporting tools allows you to create relational and analytical reports from a wide range of data-sources and output types including: PDF, Excel, HTML, Text, Rich-Text-File and XML and CSV outputs of your data.

The OpenFormula/Excel-formula expressions help you to create more dynamic reports exactly the way you want them. The open architecture and powerful API and extension points makes Pentaho Reporting a prime candidate for integration with OpenMRS. Welcome to the Pentaho Community. Concept Dictionary Creation and Maintenance Under Resource Constraints: Lessons from the AMPATH Medical Record System. Welcome to Apelon DTS. OpenMRS. Advanced Concept Management at OpenMRS. OpenMRS Database Schema. Main Page - MaternalConceptLab.