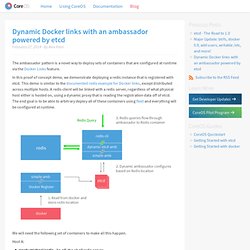

Hopsoft/docker-graphite-statsd.

Dynamic Docker links with an ambassador powered by etcd.

The ambassador pattern is a novel way to deploy sets of containers that are configured at runtime via the Docker Links feature.

In this proof-of-concept demo, we deploy a redis instance that is registered with etcd. The demo is similar to the documented redis example for Docker links, except that it is distributed across multiple hosts. A redis client is linked with a redis server, regardless of which physical host either one runs on, through a dynamic proxy that reads the registration data from etcd. The end goal is to be able to deploy all of these containers arbitrarily using fleet and have everything configured at runtime.
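The lookup step the dynamic proxy performs can be sketched as follows. This is a minimal illustration only: the key layout (`/services/<name>/host` and `/services/<name>/port`), the `REGISTRY` dictionary, and the function name `resolve_backend` are all hypothetical, and a plain dictionary stands in for etcd so the example runs without a cluster.

```python
# Hypothetical registration data. A real ambassador would read these keys
# from etcd; a dict stands in for it here so the sketch is self-contained.
REGISTRY = {
    "/services/redis/host": "10.0.0.12",
    "/services/redis/port": "6379",
}


def resolve_backend(registry, service):
    """Return (host, port) for a registered service, or None if it is absent."""
    prefix = f"/services/{service}"
    try:
        host = registry[f"{prefix}/host"]
        port = int(registry[f"{prefix}/port"])
    except KeyError:
        return None  # the service has not registered itself yet
    return host, port


if __name__ == "__main__":
    print(resolve_backend(REGISTRY, "redis"))
    # Re-registration on another host changes where the proxy forwards to.
    REGISTRY["/services/redis/host"] = "10.0.0.40"
    print(resolve_backend(REGISTRY, "redis"))
```

Because the client only ever talks to the proxy, moving the redis server to another host means updating the registration, not reconfiguring the client.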

We will need the following set of containers to make all this happen.

The Why and How of Ansible and Docker.

This is a guest post by Gerhard Lazu, a Rubyist with a strong devops background.

It originally appeared on The Changelog. There is a lot of interest from the tech community in both Docker and Ansible, and I am hoping that after reading this article you will share our enthusiasm. You will also gain practical insight into using Ansible and Docker to set up a complete server environment for a Rails application. Many reading this might be asking, “Why don’t you just use Heroku?” First of all, I can run Docker and Ansible on any host, with any provider. Why Ansible? After 4 years of heavy Chef usage, the infrastructure-as-code mentality becomes really tedious.

Using nginx, confd, and docker for zero-downtime web updates.

Update March 5, 2014: Added drone.io continuous delivery to this process, for git push to production deployment.

I’ve been really enjoying working with Docker recently, but one of my pain points has been how to update a website running in a docker container with no downtime. There are apps like which uses a custom node.js proxy that gets configuration from a redis instance. I don’t really want to maintain something so “heavy” for just a small website, so I’ve been searching for a better solution for a while. I even considered writing one of my own, and started a few times, only to stop — thinking that this was a wheel that didn’t need to be reinvented.

Recently I came across confd from Kelsey Hightower, a really slick tool that watches etcd for changes and then emits configuration files based on the contents of the keys you are watching. I set aside a few hours this morning to try it out, and I’m very pleasantly surprised by how well it all works.

Ansible & Docker - The Path to Continuous Delivery I.

If I had a Rails application requiring MySQL and Redis that I wanted to host myself, this is the quickest and simplest approach.
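The confd workflow described above (watch keys in etcd, then emit a configuration file from their contents) can be sketched roughly as follows. This is not confd's own template syntax or API: it uses Python's `string.Template`, and the key names, the `render_upstream` function, and the nginx upstream layout are assumptions chosen for illustration.

```python
from string import Template

# Hypothetical template for an nginx upstream block. confd itself uses its
# own template files; string.Template is only a stand-in for this sketch.
UPSTREAM_TEMPLATE = Template("upstream $name {\n$servers}\n")


def render_upstream(name, backends):
    """Render an upstream block from a mapping of etcd-style keys to addresses."""
    servers = "".join(
        f"    server {addr};\n" for addr in sorted(backends.values())
    )
    return UPSTREAM_TEMPLATE.substitute(name=name, servers=servers)


if __name__ == "__main__":
    # Hypothetical keys; in the real setup these values would live in etcd.
    keys = {
        "/app/upstream/a": "10.0.0.5:8080",
        "/app/upstream/b": "10.0.0.6:8080",
    }
    print(render_upstream("app", keys))
```

For a zero-downtime update, the new container would register its address under a new key, the file would be re-rendered, and nginx would be reloaded before the old key is removed.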

There are just 2 dependencies: Ansible & Docker. To make the introductions: Meet Ansible, a system orchestration tool. It has no dependencies other than python and ssh. It doesn’t require any agents to be set up on the remote hosts, and it doesn’t leave any traces after it runs either. Meet Docker, a utility for creating virtualized Linux containers for shipping self-contained applications.

The quickest way to set up Ansible on OS X:

    sudo easy_install pip
    sudo pip install setuptools ansible

Ansible will set up Docker on the remote system, so there’s only 1 thing left to do on my local system: set up DigitalOcean integration.

I have logged into my DigitalOcean account, generated a new API key and set it up in my shell environment. If you want to follow along, the Ansible configuration required to run all following examples is available at gerhard/ansible-docker.

Fast, isolated development environments using Docker.

Define your app's environment with Docker so it can be reproduced anywhere:

    FROM orchardup/python:2.7
    ADD . /code
    WORKDIR /code
    RUN pip install -r requirements.txt

Define the services that make up your app so they can be run together in an isolated environment:

    web:
      build: .
      command: python app.py
      links:
       - db
      ports:
       - "8000:8000"
    db:
      image: orchardup/postgresql

(No more installing Postgres on your laptop!) Then type `fig up`, and Fig will start and run your entire app.

There are commands to:

- start, stop and rebuild services
- view the status of running services
- tail running services' log output
- run a one-off command on a service

Baseimage-docker: A minimal Ubuntu base image modified for Docker-friendliness.

You learned about Docker.

It's awesome and you're excited. You go and create a Dockerfile:

    FROM ubuntu:16.04
    RUN apt-get install all_my_dependencies
    ADD my_app_files /my_app
    CMD ["/my_app/start.sh"]

Cool, it seems to work. Pretty easy, right? Not so fast. You just built a container which contains a minimal operating system, and which only runs your app.

"What do you mean?" Not quite. When your Docker container starts, only the CMD command is run. Furthermore, Ubuntu is not designed to be run inside Docker.

"What important system services am I missing?"

A correct init process. Main article: Docker and the PID 1 zombie reaping problem.

Here's how the Unix process model works. Most likely, your init process is not doing that at all. Furthermore, docker stop sends SIGTERM to the init process, which is then supposed to stop all services.

Syslog.
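The two init duties mentioned above, collecting exited children and passing SIGTERM on to running services, can be sketched in a few lines. This is a minimal illustration under assumptions, not the init system that baseimage-docker ships: the function names `reap_children` and `stop_children` are hypothetical, and the code only runs on Unix-like systems.

```python
import os
import signal


def reap_children():
    """Collect every child that has already exited, without blocking."""
    reaped = []
    while True:
        try:
            pid, _status = os.waitpid(-1, os.WNOHANG)
        except ChildProcessError:
            break  # this process has no children at all
        if pid == 0:
            break  # children exist, but none has exited yet
        reaped.append(pid)
    return reaped


def stop_children(pids):
    """Forward SIGTERM to each child, as an init process should on shutdown."""
    for pid in pids:
        try:
            os.kill(pid, signal.SIGTERM)
        except ProcessLookupError:
            pass  # the child is already gone
```

A real init would call `reap_children()` whenever it receives SIGCHLD and `stop_children()` from its own SIGTERM handler, then wait for the children to exit before exiting itself.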