Analysing press images: how to verify photos and videos on social media networks

This image was mistakenly broadcast by France 2, one of France’s largest TV channels.

The scene was described as having unfolded in Iran in December 2009. A quick look reveals several details that can be checked against that claim. Are Iranian police shields the same colour as in this image? Are Tehran’s pavements painted yellow? Is that really how young Iranians dress? In fact, the photo was taken in Honduras.

Another, far more recent example relates to the migrant crisis in Europe, a favourite theme for those who wish to mislead the European public. According to the caption posted to YouTube, the video depicts violence at the hands of migrants in Erfurt, a city in central Germany.

Google Maps, Google Earth and Google Street View

To really scrutinise a photo or a video, you have to get up close and personal. Time for a pop quiz! Here, no attempt has been made to mislead the viewer.

Who's the author?

Then, listen to the words.

Source: [decryptimages]
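For the Google Maps and Street View step above, it helps to jump straight from a claimed location to street-level imagery and compare it with the footage. The sketch below is a minimal illustration, assuming the publicly documented Google Maps URLs scheme (`api=1`, `map_action=pano`, `viewpoint`, `heading`, `pitch`, `fov` for Street View, and the `/maps/search/` form for a place name). The helper names `street_view_url` and `place_search_url` are hypothetical, and the Erfurt coordinates in the usage note are approximate, chosen only for illustration.

```python
from urllib.parse import urlencode


def street_view_url(lat: float, lng: float, heading: float = 0,
                    pitch: float = 0, fov: int = 90) -> str:
    """Return a Google Maps URLs link that opens Street View near (lat, lng).

    heading: compass direction of the camera in degrees (0 = north).
    pitch: up/down camera angle in degrees; fov: horizontal field of view.
    """
    if not (-90 <= lat <= 90 and -180 <= lng <= 180):
        raise ValueError("latitude/longitude out of range")
    params = {
        "api": "1",            # required by the Maps URLs scheme
        "map_action": "pano",  # ask for a Street View panorama
        "viewpoint": f"{lat},{lng}",
        "heading": heading,
        "pitch": pitch,
        "fov": fov,
    }
    # urlencode percent-encodes the comma in viewpoint as %2C
    return "https://www.google.com/maps/@?" + urlencode(params)


def place_search_url(query: str) -> str:
    """Return a Google Maps search link for a place name such as a city."""
    # urlencode turns spaces into '+' and commas into %2C
    return "https://www.google.com/maps/search/?" + urlencode({"api": "1", "query": query})
```

For instance, `place_search_url("Erfurt, Germany")` opens the claimed city, and `street_view_url(50.978, 11.029, heading=90)` opens a panorama looking east from a point near its centre. Matching shop fronts, street furniture or road markings in the footage against those panoramas is still a manual judgement.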

FotoForensics.

Guide To Using Reverse Image Search For Investigations

Reverse image search is one of the best-known and easiest digital investigative techniques: many web browsers offer a two-click “Search Google for image” option.

This method has also seen widespread use in popular culture, perhaps most notably in the MTV show Catfish, which exposes people in online relationships who use stolen photographs on their social media. However, if you only use Google for reverse image searching, you will be disappointed more often than not. Uploading a photograph in its original form to images.google.com alone may give you useful results for the most obviously stolen or popular images, but almost any sophisticated research project needs additional sites at your disposal, along with a lot of creativity. This guide walks through detailed strategies for using reverse image search in digital investigations, with an eye towards identifying people and locations and determining an image’s provenance.
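Search engines do not publish how they match images, but the basic idea of recognising the same photo after it has been resized, re-compressed or brightened can be illustrated with a perceptual hash. The sketch below implements the simple “average hash” (aHash) in pure Python on a grayscale image given as a list of pixel rows. It is a teaching example, not what Google or any other engine actually runs; the function names are hypothetical, and decoding a real JPEG into pixel rows is left to an imaging library.

```python
from __future__ import annotations


def average_hash(pixels: list[list[float]], size: int = 8) -> int:
    """Average hash (aHash) of a grayscale image given as rows of pixel values.

    The image is shrunk to a size x size grid by block averaging; each grid
    cell then becomes one bit: 1 if it is strictly brighter than the mean of
    all cells, else 0. The first cell ends up in the most significant bit.
    """
    h = len(pixels)
    w = len(pixels[0]) if h else 0
    if h < size or w < size:
        raise ValueError("image is smaller than the hash grid")
    cells = []
    for i in range(size):
        r0, r1 = i * h // size, (i + 1) * h // size
        for j in range(size):
            c0, c1 = j * w // size, (j + 1) * w // size
            block = [pixels[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes (0 means identical)."""
    return bin(a ^ b).count("1")
```

Because each bit only records whether a region is brighter or darker than average, a uniformly brightened copy or a copy at twice the resolution of a simple gradient hashes identically, while an inverted copy differs in every bit. What Hamming distance counts as “the same photo” is an assumption to tune for each investigation, not a fixed rule.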

A Brief Comparison of Reverse Image Searching Platforms – DomainTools Blog

Overview

This will be another in what we hope will be a long series of practical OSINT blog posts from the Security Research team here at DomainTools.

This time around I’ll briefly compare the reverse image search capabilities of some major image search engines: Google, Yandex, Bing, and TinEye. Hopefully you’re already familiar with these search engines, but if you aren’t, this post is a good crash course in the kind of results you can expect from each. First Emily will give an overview of reverse image searching, and then I’ll break down some comparisons between the most popular image search engines.

Reverse Image Searching

In security research, we deal with many types of data: IOCs, malware binaries, reports, and more. For some investigations, it may be enough to reverse image search using a regular search engine like Google or Bing.
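Comparing engines means submitting the same image to each of them. The minimal Python sketch below builds one search link per engine, assuming the image is already hosted at a public URL. The query-string patterns are commonly used but undocumented; they are assumptions rather than official APIs and may change or stop working at any time. The names `ENGINE_TEMPLATES` and `reverse_search_links` are hypothetical.

```python
from __future__ import annotations

from urllib.parse import quote

# Assumed, undocumented URL patterns; each engine may change them without notice.
ENGINE_TEMPLATES = {
    "google": "https://lens.google.com/uploadbyurl?url={}",
    "yandex": "https://yandex.com/images/search?rpt=imageview&url={}",
    "bing": "https://www.bing.com/images/search?view=detailv2&iss=sbi&q=imgurl:{}",
    "tineye": "https://tineye.com/search?url={}",
}


def reverse_search_links(image_url: str) -> dict[str, str]:
    """Map each engine name to a reverse image search link for image_url."""
    if not image_url.startswith(("http://", "https://")):
        raise ValueError("expected a publicly reachable http(s) image URL")
    # Encode every reserved character (including '/' and ':') so the image
    # URL survives being embedded as a single query-string value.
    encoded = quote(image_url, safe="")
    return {name: template.format(encoded) for name, template in ENGINE_TEMPLATES.items()}
```

Opening all four links side by side is a quick way to see where the engines disagree. Local files (such as an avatar saved during an investigation) still have to be uploaded through each site’s own interface.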

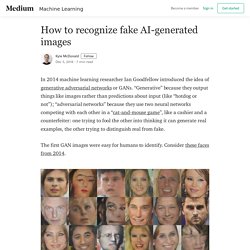

For example, during one investigation I came across an avatar used by the malware author I was tracking.

How to recognize fake AI-generated images - Kyle McDonald - Medium

In 2014, machine learning researcher Ian Goodfellow introduced the idea of generative adversarial networks, or GANs.

“Generative” because they output things like images rather than predictions about their input (like “hotdog or not”); “adversarial networks” because they use two neural networks competing with each other in a “cat-and-mouse game”, like a cashier and a counterfeiter: one tries to fool the other into accepting its fakes as real, while the other tries to tell real from fake. The first GAN images were easy for humans to identify. Consider these faces from 2014. But the latest examples of GAN-generated faces, published in October 2017, are more difficult to identify. Here are some things you can look for when trying to recognize an image produced by a GAN.

- Straight hair looks like paint
- Text is indecipherable: GANs trained on faces have a hard time capturing rare things in the background with lots of structure.

- Background is surreal
- Asymmetry
- Weird teeth
- Messy hair
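For readers who want the formal version of the cashier-and-counterfeiter game, Goodfellow et al.’s 2014 paper trains the two networks on a single minimax objective. Here the discriminator $D$ outputs the probability that its input is a real image, and the generator $G$ maps random noise $z$ to a synthetic image:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

$D$ raises $V$ by scoring real images close to 1 and generated ones close to 0; $G$ lowers it by producing images that $D$ scores as real.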