@moat, 15 March 2015, 18:58. Here is the latest issue of our digest of the most interesting material on data analysis and machine learning.

Reflecting back on one year of Kaggle contests

It’s been a year since I joined Kaggle for my first competition.

Back then I didn’t know what an Area Under the Curve was. How did I manage to predict my way to Kaggle Master?

Early start

Toying with datasets and tools

I was already downloading datasets from Kaggle purely for my own entertainment and study before I started competing. The dataset for the “Amazon.com – Employee Access Challenge” was one of the first datasets that caught my eye.
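For readers who, like the author back then, have not met the Area Under the (ROC) Curve: it equals the probability that a randomly chosen positive example is scored higher than a randomly chosen negative one. The sketch below computes it directly from that definition, counting ties as half a win. The function name `roc_auc` is our own; the quadratic pairwise loop is for clarity only, and library implementations use faster sorting-based methods.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    labels: 0/1 class labels; scores: predicted scores (higher = more positive).
    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

Three of the four positive/negative pairs are ordered correctly here, hence 0.75; a perfect ranking gives 1.0 and random scores hover around 0.5.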

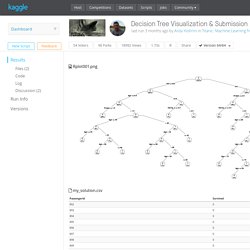

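III. Specialization.

The "Machine Learning" course, 2014.

Choosing the right estimator (scikit-learn 0.17 documentation).

Titanic: Machine Learning from Disaster. The tutorial's R session splits a passenger name on commas and periods into "Braund", " Mr", " Owen Harris", extracts the title " Mr", and reports the training data as a 'data.frame' of 891 obs. of 14 variables.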
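The R output above looks like a name split on the pattern `[,.]`, with the second piece taken as the passenger's title. A rough Python equivalent, assuming names follow the "Surname, Title. Given names" layout of the Titanic data, might look like this (`split_name` and `title_of` are our own illustrative helpers):

```python
import re


def split_name(name):
    # Split on every comma or period, like the R pattern "[,.]";
    # pieces keep their leading spaces, matching the R output.
    return re.split(r"[,.]", name)


def title_of(name):
    # Second piece is the title ("Mr", "Mrs", "Miss", ...), with spaces trimmed.
    return split_name(name)[1].strip()


print(split_name("Braund, Mr. Owen Harris"))  # ['Braund', ' Mr', ' Owen Harris']
print(title_of("Braund, Mr. Owen Harris"))    # Mr
```

Titles extracted this way are a common engineered feature for Titanic models, since they encode sex, age group, and social status at once.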

H2O.ai (0xData) - Fast Scalable Machine Learning.

Predictive Modeling Factories with H2O

H2O provides sophisticated, ready-to-use algorithms and the processing power to analyze bigger datasets, more variables, and more models.

Cisco saw a 15x increase in speed after implementing H2O in its Propensity to Buy (P2B) modeling factory. All collected data can be used without sampling, producing more accurate predictions. New buying patterns can be incorporated into P2B models immediately, which shortens overall modeling time and speeds up score publication, leaving more time for campaign planning and execution.

H2O provides the platform for businesses to make more informed marketing decisions and get accurate predictions of their future performance by delivering:

• Support for multiple data sources, including Hadoop, databases, and S3, and all common file types, to maximize data collection.

Using Gradient Boosted Trees to Predict Bike Sharing Demand.

Coursera.

Implementation of k-means Clustering - Edureka. In this blog post, you will learn what K-means clustering is and how it can be applied to crime data collected in various US states.

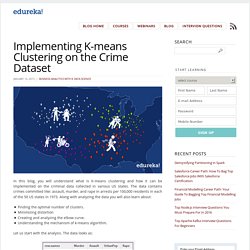
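The k-means loop alternates two steps: assign every point to its nearest centroid, then move each centroid to the mean of its points. The distortion (sum of squared distances to the nearest centroid) is what the elbow curve plots against k. The sketch below is plain Python on a hypothetical toy set of 2-D points, not the 1973 arrests data. It also assumes a deterministic farthest-point seeding (a simplification of k-means++) so that the result is reproducible; the Edureka post may initialize differently.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(cluster):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(cluster)
    return tuple(sum(coords) / n for coords in zip(*cluster))


def init_farthest(points, k):
    # Assumed seeding: start from the first point, then repeatedly add the
    # point farthest from all centroids chosen so far.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(sq_dist(p, c) for c in centroids)))
    return centroids


def kmeans(points, k, max_iter=100):
    centroids = init_farthest(points, k)
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            j = min(range(k), key=lambda i: sq_dist(p, centroids[i]))
            clusters[j].append(p)
        # Update step: each centroid moves to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        new = [mean(c) if c else centroids[i] for i, c in enumerate(clusters)]
        if new == centroids:  # converged: assignments can no longer change
            break
        centroids = new
    # Distortion: total squared distance from each point to its nearest centroid.
    distortion = sum(min(sq_dist(p, c) for c in centroids) for p in points)
    return centroids, distortion


# Hypothetical toy data with three well-separated groups.
points = [(1, 1), (1.5, 2), (1, 1.5), (8, 8), (8.5, 9), (9, 8), (1, 9), (1.5, 8.5), (2, 9)]
for k in range(1, 6):
    _, d = kmeans(points, k)
    print(k, round(d, 2))
```

On this data the distortion falls steeply up to k = 3 and only slightly afterwards; that bend is the "elbow" used to pick the number of clusters.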

The data records arrests per 100,000 residents for assault, murder, and rape in each of the 50 US states in 1973. Along with analyzing the data, you will also learn about:

• Finding the optimal number of clusters
• Minimizing distortion
• Creating and analyzing the elbow curve
• Understanding the mechanism of the k-means algorithm

Let us start with the analysis. The data looks as follows (click on the image to download the dataset).

Spark MLlib for Decision Trees and Naive Bayes.

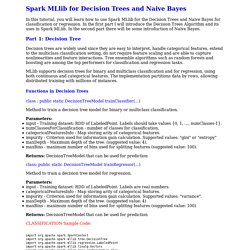

In this tutorial, you will learn how to use decision trees (for classification or regression) and Naive Bayes (for classification) in Spark MLlib.
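Decision trees pick each split by minimizing an impurity measure; for classification, MLlib offers `gini` and `entropy` (and `variance` for regression). The pure-Python sketch below is not MLlib code. It only illustrates the Gini criterion on a single continuous feature by scoring every candidate threshold, whereas MLlib evaluates a limited set of binned candidate splits (controlled by `maxBins`). The helper names `gini` and `best_split` are our own.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum of squared class proportions (0 for a pure node)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(xs, ys):
    """Return (weighted impurity, threshold) of the best split x <= t vs x > t.

    Every distinct feature value except the largest is tried as a threshold;
    the score is the size-weighted Gini impurity of the two children.
    """
    best = (float("inf"), None)
    for t in sorted(set(xs))[:-1]:
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if score < best[0]:
            best = (score, t)
    return best


print(best_split([1, 2, 3, 10, 11, 12], ["a", "a", "a", "b", "b", "b"]))  # (0.0, 3)
```

A tree grows by applying this search recursively to each child until a stopping rule (such as maximum depth) is met.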

In the first part I will introduce the decision tree algorithm and its use in Spark MLlib. The second part introduces Naive Bayes.

Part 1: Decision Tree

Decision trees are widely used because they are easy to interpret, handle categorical features, extend to the multiclass classification setting, do not require feature scaling, and are able to capture nonlinearities and feature interactions. Tree ensemble algorithms such as random forests and boosting are among the top performers for classification and regression tasks. MLlib supports decision trees for binary and multiclass classification and for regression, using both continuous and categorical features.

Functions in Decision Trees.

Neural networks and deep learning.

The human visual system is one of the wonders of the world.

Consider the following sequence of handwritten digits: Most people effortlessly recognize those digits as 504192. That ease is deceptive. In each hemisphere of our brain, humans have a primary visual cortex, also known as V1, containing 140 million neurons, with tens of billions of connections between them.

And yet human vision involves not just V1, but an entire series of visual cortices (V2, V3, V4, and V5) doing progressively more complex image processing. The difficulty of visual pattern recognition becomes apparent if you attempt to write a computer program to recognize digits like those above. Neural networks approach the problem in a different way: the idea is to take a large number of handwritten digits, known as training examples, and then develop a system that can learn from those training examples.
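"Learning from training examples" can be shown at the smallest possible scale: a single sigmoid neuron adjusting its weights by gradient descent until it reproduces its examples. The sketch below is not the book's digit-recognition code; it trains one neuron on the four examples of logical OR (a hypothetical toy task) and assumes a cross-entropy cost, for which the gradient with respect to the neuron's weighted input is simply `a - y`.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Output of one sigmoid neuron with weights w and bias b on input x."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_neuron(data, lr=0.5, epochs=2000):
    """Per-example (stochastic) gradient descent on the cross-entropy cost."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            delta = predict(w, b, x) - y  # dC/dz for sigmoid + cross-entropy
            w = [wi - lr * delta * xi for wi, xi in zip(w, x)]
            b -= lr * delta
    return w, b


# Training examples for logical OR: (inputs, desired output).
OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b = train_neuron(OR_DATA)
for x, y in OR_DATA:
    print(x, y, round(predict(w, b, x), 3))
```

A digit recognizer follows the same pattern, but with many neurons arranged in layers and pixel intensities as inputs.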

In this chapter we'll write a computer program implementing a neural network that learns to recognize handwritten digits.

StatLearning-SP Course Info.

Bayesian Methods for Hackers. An intro to Bayesian methods and probabilistic programming from a computation/understanding-first, mathematics-second point of view.
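In the computation-first spirit described above, a posterior can be approximated numerically instead of derived. The sketch below is our own illustration, not code from the book (which works with PyMC). It estimates a coin's bias from hypothetical data (7 heads in 10 flips) on a grid, assuming a uniform prior. With that prior the exact posterior is Beta(heads + 1, tails + 1), whose mean is (7 + 1) / (10 + 2), so the grid result can be checked.

```python
def grid_posterior(heads, flips, n_grid=1001):
    """Posterior over a coin's bias p on an evenly spaced grid, uniform prior."""
    grid = [i / (n_grid - 1) for i in range(n_grid)]
    # Unnormalized posterior = constant prior * binomial likelihood
    # (the binomial coefficient cancels in the normalization).
    unnorm = [p ** heads * (1 - p) ** (flips - heads) for p in grid]
    total = sum(unnorm)
    return grid, [u / total for u in unnorm]


grid, post = grid_posterior(7, 10)
posterior_mean = sum(p * w for p, w in zip(grid, post))
print(round(posterior_mean, 3))  # 0.667, matching (7 + 1) / (10 + 2)
```

Grids become impractical beyond a few parameters, which is where MCMC samplers such as those in PyMC take over.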

Prologue

The Bayesian method is the natural approach to inference, yet it is hidden from readers behind chapters of slow, mathematical analysis. The typical text on Bayesian inference spends two to three chapters on probability theory before explaining what Bayesian inference is. Unfortunately, due to the mathematical intractability of most Bayesian models, the reader is only shown simple, artificial examples.

This can leave the user with a so-what feeling about Bayesian inference. After some recent success of Bayesian methods in machine-learning competitions, I decided to investigate the subject again.

Kaggle: Your Home for Data Science.

Machine learning.