Inviting Machines Into Our Bodies. Chris Arkenberg. In what amounts to a fairly shocking reminder of how quickly our technologies are advancing and how deeply networked computation is being woven into our lives, security researchers have recently reported successfully compromising and remotely controlling two different medical implant devices.

Such implanted devices are becoming increasingly common. They use wireless communication both between their components and outward to monitors, which lets doctors change their settings non-invasively. Until recently this technology was mostly confined to advanced labs, but it is now moving steadily into our bodies. As these procedures become more common, researchers are considering the security implications of wiring human anatomy directly into the web of ubiquitous computation and networked communication.

Kevin Warwick. Kevin Warwick (born 9 February 1954) is a British engineer and professor of cybernetics at the University of Reading in the United Kingdom.

He is known for his studies on direct interfaces between computer systems and the human nervous system, and has also done research in the field of robotics. Biography: Kevin Warwick was born in 1954 in Coventry in the United Kingdom.

NLP and language learning.

NeuroSolutions: What is a Neural Network? A Non-Mathematical Introduction to Using Neural Networks. The goal of this article is to help you understand what a neural network is and how it is used.

Most people, even non-programmers, have heard of neural networks, and many science-fiction overtones are associated with them.

Neuromorphic architectures. Computers are far from the only systems capable of processing information.

Mechanical automata were the first systems capable of it: the ancestors of calculators were indeed automata built from mechanical parts, and they were not programmable. These automata were later replaced by non-programmable analog and digital electronic circuits.

Introduction to Feed-Forward Artificial Neural Networks. Let's dive into the world of pattern recognition.

More specifically, we will look at recognizing digits (0, 1, ..., 9). Imagine a program that must recognize a digit in an image. We show the program an image of a handwritten "1", for example, and it must be able to tell us "that's a 1". Suppose that the images shown to the program are all 200x300 pixels.

Artificial neural network. An artificial neural network is an interconnected group of nodes, akin to the vast network of neurons in a brain.

Here, each circular node represents an artificial neuron and an arrow represents a connection from the output of one neuron to the input of another. For example, a neural network for handwriting recognition is defined by a set of input neurons which may be activated by the pixels of an input image. After being weighted and transformed by a function (determined by the network's designer), the activations of these neurons are then passed on to other neurons. This process is repeated until, finally, an output neuron is activated; this determines which character was read.

Researchers Create Artificial Neural Network from DNA. Scientists at the California Institute of Technology (Caltech) have successfully created an artificial neural network from DNA molecules that is capable of brain-like behavior.

Hailing it as a “major step toward creating artificial intelligence,” the scientists report that, like a brain, the network can retrieve memories from incomplete patterns. Potential applications of such artificially intelligent biochemical networks with decision-making skills include medicine and biological research. The researchers predict that neural networks could eventually be developed that operate within cells to gather information for disease diagnosis.

Free Software.

Stanford's Artificial Neural Network Is The Biggest Ever.

Google and Neural Networks: Now Things Are Getting REALLY Interesting,… Back in October 2002, I appeared as a guest speaker at the Chicago (Illinois) URISA conference.

The topic I spoke about at that time was the commercial and governmental applicability of neural networks. Although the talk was well received (the audience actually clapped, some asked to have pictures taken with me, and nobody fell asleep), at the time it was regarded as, well, out there. After all, who the hell was talking about, much less knew anything about, neural networks? Fast forward to 2014 and here we are: Google recently (and quietly) acquired a start-up, DNNResearch, whose primary purpose is the commercial application and development of practical neural networks. Before you get all strange and creeped out, neural networks are not brains floating in vials, locked away in some weird, hidden laboratory à la The X-Files, cloaked in poor lighting (cue the evil laughter: BWAHAHAHA!).

That’s about it; no big announcement, little or no mention in any major publications. Google scientist Jeff Dean on how neural networks are improving everything Google does. Simon Dawson Google's goal: A more powerful search that full understands answers to commands like, "Book me a ticket to Washington DC.

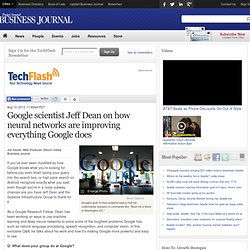

" Jon Xavier, Web Producer, Silicon Valley Business Journal If you've ever been mystified by how Google knows what you're looking for before you even finish typing your query into the search box, or had voice search on Android recognize exactly what you said even though you're in a noisy subway, chances are you have Jeff Dean and the Systems Infrastructure Group to thank for it. As a Google Research Fellow, Dean has been working on ways to use machine learning and deep neural networks to solve some of the toughest problems Google has, such as natural language processing, speech recognition, and computer vision. In this exclusive Q&A, he talks about his work and how it's making Google more powerful and easy to use. A Brain Cell is the Same as the Universe.

By Cliff Pickover, Reality Carnival. Physicists discover that the structure of a brain cell is the same as that of the entire universe.

IBM simulates 530 billion neurons, 100 trillion synapses on supercomputer. A network of neurosynaptic cores derived from long-distance wiring in the monkey brain: neurosynaptic cores are locally clustered into brain-inspired regions, and each core is represented as an individual point along the ring. Arcs are drawn from a source core to a destination core, with an edge color defined by the color assigned to the source core.

Crowd Computing and The Synaptic Web. A couple of days ago David Gelernter, a well-known computer science visionary who famously survived an attack by the Unabomber, wrote a piece on Wired called ‘The End of the Web, Search, and Computer as We Know It’. In it, he summarized one of his predictions: that the web will move from a static, document-oriented web to a network of streams.

Nova Spivack, my co-founder and CEO at Bottlenose, also wrote about this in more depth in his blog series The Stream.

What is a neural network? Definition from WhatIs.com. In information technology, a neural network is a system of programs and data structures that approximates the operation of the human brain. A neural network usually involves a large number of processors operating in parallel, each with its own small sphere of knowledge and access to data in its local memory. Typically, a neural network is initially "trained", or fed large amounts of data and rules about data relationships (for example, "A grandfather is older than a person's father"). A program can then tell the network how to behave in response to an external stimulus (for example, input from a computer user who is interacting with the network), or the network can initiate activity on its own (within the limits of its access to the external world).

In making determinations, neural networks use several principles, including gradient-based training, fuzzy logic, genetic algorithms, and Bayesian methods.
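The feed-forward process described earlier for handwriting recognition (input activations are weighted, transformed by a function, and passed on until an output neuron fires) and the gradient-based training named above can be illustrated with a minimal pure-Python sketch. It assumes a sigmoid as the transfer function and uses tiny truth-table data rather than 200x300-pixel digit images (such an image would simply become 60,000 input values). The names `forward`, `train_neuron`, `AND_SAMPLES` and `XOR_NET`, and all weights, are hypothetical and for illustration only; they are not taken from any of the sources quoted here.

```python
import math


def sigmoid(z):
    # Assumed transfer function: squashes a weighted sum into (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def forward(layers, inputs):
    """Feed-forward pass (illustrative sketch).

    `layers` is a list of layers; each layer is a list of (weights, bias)
    pairs, one pair per neuron. The outputs of one layer become the
    inputs of every neuron in the next layer.
    """
    activations = list(inputs)
    for layer in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(weights, activations)) + bias)
            for weights, bias in layer
        ]
    return activations


def train_neuron(samples, n_inputs, lr=1.0, epochs=5000):
    """Train a single sigmoid neuron by stochastic gradient descent
    on cross-entropy loss."""
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            out = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            # For a sigmoid output with cross-entropy loss, dLoss/dz = out - target.
            error = out - target
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


# Hypothetical toy training data: the logical AND truth table.
AND_SAMPLES = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

# Hand-picked (not trained) weights for a two-layer network computing XOR:
# the hidden neurons approximate OR and NAND, the output neuron approximates AND.
XOR_NET = [
    [([20, 20], -10), ([-20, -20], 30)],
    [([20, 20], -30)],
]

if __name__ == "__main__":
    w, b = train_neuron(AND_SAMPLES, n_inputs=2)
    and_net = [[(w, b)]]  # one layer containing a single output neuron
    for x, target in AND_SAMPLES:
        print(x, target, round(forward(and_net, x)[0], 3))
    for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
        print(x, round(forward(XOR_NET, x)[0], 3))
```

Training only a single neuron keeps the gradient step easy to read. A multi-layer network such as the hand-wired XOR example would normally be trained with backpropagation, which applies the same chain-rule idea layer by layer.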