Researchers Show How to "Steal" AI from Amazon's Machine Learning Service.

In the burgeoning field of computer science known as machine learning, engineers often refer to the artificial intelligences they create as “black box” systems: Once a machine learning engine has been trained from a collection of example data to perform anything from facial recognition to malware detection, it can take in queries (Whose face is that? Is this app safe?) and spit out answers without anyone, not even its creators, fully understanding the mechanics of the decision-making inside that box. But researchers are increasingly proving that even when the inner workings of those machine learning engines are inscrutable, they aren’t exactly secret. In fact, they’ve found that the guts of those black boxes can be reverse-engineered and even fully reproduced (stolen, as one group of researchers puts it) with the very same methods used to create them.

WaveNet: A Generative Model for Raw Audio.

Talking Machines

Allowing people to converse with machines is a long-standing dream of human-computer interaction. The ability of computers to understand natural speech has been revolutionised in the last few years by the application of deep neural networks (e.g., Google Voice Search).

However, generating speech with computers (a process usually referred to as speech synthesis or text-to-speech, TTS) is still largely based on so-called concatenative TTS, where a very large database of short speech fragments is recorded from a single speaker and the fragments are then recombined to form complete utterances. This makes it difficult to modify the voice (for example switching to a different speaker, or altering the emphasis or emotion of their speech) without recording a whole new database.

WaveNet changes this paradigm by directly modelling the raw waveform of the audio signal, one sample at a time.

The Neural Network Zoo - The Asimov Institute.

With new neural network architectures popping up every now and then, it’s hard to keep track of them all.

Knowing all the abbreviations being thrown around (DCIGN, BiLSTM, DCGAN, anyone?) can be a bit overwhelming at first. So I decided to compose a cheat sheet containing many of those architectures. Most of these are neural networks; some are completely different beasts. Though all of these architectures are presented as novel and unique, when I drew the node structures… their underlying relations started to make more sense.

One problem with drawing them as node maps: it doesn’t really show how they’re used. It should be noted that while most of the abbreviations used are generally accepted, not all of them are. Composing a complete list is practically impossible, as new architectures are invented all the time. For each of the architectures depicted in the picture, I wrote a very, very brief description.

AI & The Future Of Civilization.

More particularly, I see technology as taking human goals and making them able to be automatically executed by machines.

The human goals that we've had in the past have been things like moving objects from here to there and using a forklift rather than our own hands. Now, the things that we can do automatically are more intellectual kinds of things that have traditionally been the professions' work, so to speak. These are things that we are going to be able to do by machine. The machine is able to execute things, but something or someone has to define what its goals should be and what it's trying to execute. People talk about the future of the intelligent machines, and whether intelligent machines are going to take over and decide what to do for themselves.

Carpedm20/variational-text-tensorflow: TensorFlow implementation of Neural Variational Inference for Text Processing.

Understanding Convolutional Neural Networks for NLP – WildML.

When we hear about Convolutional Neural Networks (CNNs), we typically think of Computer Vision.

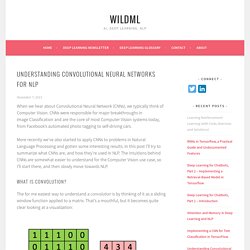

CNNs were responsible for major breakthroughs in Image Classification and are the core of most Computer Vision systems today, from Facebook’s automated photo tagging to self-driving cars. More recently we’ve also started to apply CNNs to problems in Natural Language Processing and gotten some interesting results. In this post I’ll try to summarize what CNNs are, and how they’re used in NLP. The intuitions behind CNNs are somewhat easier to understand for the Computer Vision use case, so I’ll start there, and then slowly move towards NLP.

What is Convolution? For me, the easiest way to understand a convolution is by thinking of it as a sliding window function applied to a matrix. Convolution with 3×3 Filter. Imagine that the matrix on the left represents a black and white image. You may be wondering what you can actually do with this. The GIMP manual has a few other examples. Narrow vs. .

Jtoy/awesome-tensorflow: TensorFlow - A curated list of dedicated resources.
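The sliding-window description of convolution in the WildML excerpt above can be sketched in a few lines of plain Python. This is a minimal illustration, not code from that post: the function name `convolve2d`, the 5×5 binary image, and the 3×3 filter values are all assumptions chosen for the example. It also assumes no padding and a stride of 1, and, as is common in CNN libraries, it does not flip the filter, so strictly speaking it computes a cross-correlation.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and, at each
    position, sum the element-wise products of the window and the kernel."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for i in range(out_rows):          # top edge of the window
        row = []
        for j in range(out_cols):      # left edge of the window
            total = 0
            for di in range(k_rows):
                for dj in range(k_cols):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Hypothetical 5x5 black-and-white image (1 = white, 0 = black).
image = [
    [1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 0, 0],
]

# Hypothetical 3x3 filter that picks out the corners and the centre.
kernel = [
    [1, 0, 1],
    [0, 1, 0],
    [1, 0, 1],
]

result = convolve2d(image, kernel)
for row in result:
    print(row)
# [4, 3, 4]
# [2, 4, 3]
# [2, 3, 4]
```

A 5×5 input with a 3×3 filter gives a 3×3 output because the window fits in only three positions along each axis when no padding is used.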

Requests for Research. Google DeepMind.

Deep Learning.

Can I get a PDF of this book?

No, our contract with MIT Press forbids distribution of too easily copied electronic formats of the book. Google employees who would like a paper copy of the book can send Ian the name of the printer nearest their desk and he will send a print job to that printer containing as much of the book as they would like to read.

Why are you using HTML format for the drafts?

This format is a sort of weak DRM. It's intended to discourage unauthorized copying/editing of the book. Unfortunately, the conversion from PDF to HTML is not perfect, and some things like subscript expressions do not render correctly. Printing seems to work best when done directly from the browser, using Chrome.

When will the book come out?

Pyevolve. I am trask. WildML – AI, Deep Learning, NLP. Semantic Analysis of the Reddit Hivemind.

Deep Learning with Spark and TensorFlow.

To learn more about Spark, attend Spark Summit East in New York in Feb 2016.

Neural networks have seen spectacular progress during the last few years and they are now the state of the art in image recognition and automated translation.

Keras Documentation.

Hello, TensorFlow!

The TensorFlow project is bigger than you might realize.

The fact that it's a library for deep learning, and its connection to Google, has helped TensorFlow attract a lot of attention.