Deep Web.

National Capital FreeNet, Ottawa's not-for-profit internet service provider (ISP). DSL ('Digital Subscriber Line') technology gives you high-speed internet access via fiber optics and copper.

(This web page is new. If it gives you problems, please tell us; we'd appreciate email to office@ncf.ca or a call to 613-721-1773. Thank you!)

DSL from NCF. NCF's purpose is to support people of Canada's national capital region in enjoying the benefits of the internet. To keep things simple and transparent, all plans are month-to-month pay-in-advance arrangements. Typically it takes about five business days to activate DSL.

Peer-to-peer. A peer-to-peer (P2P) network is one in which interconnected nodes ("peers") share resources amongst each other without the use of a centralized administrative system. In a peer-to-peer network, tasks (such as searching for files or streaming audio/video) are shared amongst multiple interconnected peers who each make a portion of their resources (such as processing power, disk storage or network bandwidth) directly available to other network participants, without the need for centralized coordination by servers.[1]

Historical development. While P2P systems had previously been used in many application domains,[2] the concept was popularized by file sharing systems such as the music-sharing application Napster (originally released in 1999).
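The peer-to-peer description above (peers offering their own resources and answering each other's requests without a coordinating server) can be illustrated with a minimal sketch. Everything named here is hypothetical: the `Peer` class, its `connect` and `search` methods, and the hop limit `ttl` are illustration only and are not taken from Napster or any other real P2P system. The lookup simply passes a query from neighbor to neighbor until some peer holds the file.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Peer:
    """Hypothetical node that both offers files and queries other peers."""

    name: str
    files: set[str] = field(default_factory=set)
    # Excluded from repr/compare because neighbor links are cyclic.
    neighbors: list[Peer] = field(default_factory=list, repr=False, compare=False)

    def connect(self, other: Peer) -> None:
        # Links are symmetric: each peer learns about the other.
        self.neighbors.append(other)
        other.neighbors.append(self)

    def search(
        self, filename: str, ttl: int = 3, seen: set[str] | None = None
    ) -> str | None:
        """Return the name of a peer holding `filename`, or None.

        The query travels from neighbor to neighbor; `ttl` caps the number
        of hops, and `seen` stops the query from revisiting a peer.
        """
        seen = set() if seen is None else seen
        if self.name in seen:
            return None
        seen.add(self.name)
        if filename in self.files:
            return self.name
        if ttl == 0:
            return None
        for peer in self.neighbors:
            owner = peer.search(filename, ttl - 1, seen)
            if owner is not None:
                return owner
        return None


if __name__ == "__main__":
    a, b, c = Peer("a"), Peer("b"), Peer("c", files={"song.ogg"})
    a.connect(b)
    b.connect(c)
    print(a.search("song.ogg"))
```

No peer in this sketch has a global index: `a` only knows `b`, and the file is found because `b` forwards the query to `c`. Real systems differ widely in how they route queries, so treat this as one simple possibility rather than a description of any particular network.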

Tim Berners-Lee's vision for the World Wide Web was close to a P2P network in that it assumed each user of the web would be an active editor and contributor, creating and linking content to form an interlinked "web" of links.

Database download. Wikipedia offers free copies of all available content to interested users. These databases can be used for mirroring, personal use, informal backups, offline use or database queries (such as for Wikipedia:Maintenance). All text content is multi-licensed under the Creative Commons Attribution-ShareAlike 3.0 License (CC-BY-SA) and the GNU Free Documentation License (GFDL). Images and other files are available under different terms, as detailed on their description pages. For our advice about complying with these licenses, see Wikipedia:Copyrights.

List of distributed computing projects.

Berkeley Open Infrastructure for Network Computing. BOINC has been developed by a team based at the Space Sciences Laboratory (SSL) at the University of California, Berkeley led by David Anderson, who also leads SETI@home.

As a high-performance distributed computing platform, BOINC has about 596,224 active computers (hosts) worldwide processing on average 9.2 petaFLOPS as of March 2013.[2] BOINC is funded by the National Science Foundation (NSF) through awards SCI/0221529,[3] SCI/0438443[4] and SCI/0721124.[5]

History. BOINC was originally developed to manage the SETI@home project. The original SETI client was non-BOINC software written exclusively for SETI@home. As one of the first volunteer grid computing projects, it was not designed with a high level of security. The BOINC project started in February 2002 and the first version was released on 10 April 2002.

Financial reports.

Wikipedia Statistics - Tables - Database size.

Cloud computing. Cloud computing metaphor: For a user, the network elements representing the provider-rendered services are invisible, as if obscured by a cloud.

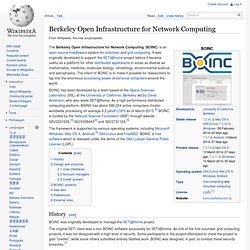

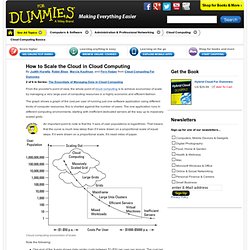

Cloud computing is a computing term or metaphor that evolved in the late 1990s, based on utility and consumption of computer resources. Cloud computing involves application systems which are executed within the cloud and operated through internet-enabled devices. Pure cloud computing does not rely on the use of cloud storage, as it will be removed upon the user's download action. Clouds can be classified as public, private and hybrid.[1][2]

How to Scale the Cloud in Cloud Computing. (2 of 6 in the series The Essentials of Managing Data in Cloud Computing.) From the provider's point of view, the whole point of cloud computing is to achieve economies of scale by managing a very large pool of computing resources in a highly economic and efficient fashion.
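The economies-of-scale argument above can be made concrete with a small sketch. The cost model is an assumption for illustration, not the article's own: a fixed infrastructure cost is spread evenly across all users, and each user adds a constant variable cost. The function name `cost_per_user` and all dollar figures are hypothetical.

```python
def cost_per_user(users: int, fixed_cost: float, variable_cost: float) -> float:
    """Assumed model: shared fixed cost divided among users, plus a per-user cost."""
    if users <= 0:
        raise ValueError("users must be a positive integer")
    return fixed_cost / users + variable_cost


if __name__ == "__main__":
    # Hypothetical figures: 1,000,000 fixed cost, 0.50 variable cost per user.
    # User counts step by powers of ten, as they would along a logarithmic axis.
    for exponent in range(1, 8):
        users = 10 ** exponent
        print(f"{users:>10,d} users: {cost_per_user(users, 1_000_000.0, 0.50):,.2f}")
```

Under this assumed model the per-user cost falls sharply at first and then flattens toward the variable cost, which is the general shape one would expect when a large shared pool of resources serves more and more users.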

The graph shows the cost per user of running just one software application using different kinds of computer resources; this is charted against the number of users. The one application runs in different computing environments, starting with inefficient dedicated servers all the way up to massively scaled grids. An important point to note is that the Y-axis of user populations is logarithmic. That means that the curve is much less steep than if it were drawn on a proportional scale of equal steps.

The Biggest Cost of Facebook's Growth.

Data store: Facebook’s data center in Prineville, Oregon, is one of several that will help the company cope with its always growing user base.

Facebook is the gateway to the Internet for a growing number of people. They message rather than e-mail; discover news and music through friends, rather than through conventional news or search sites; and use their Facebook ID to access outside websites and applications. As the keeper of so many people’s social graph, Facebook is in an incredibly powerful position—one reason its IPO this week is expected to be the largest ever for an Internet company. But potential investors should take note that there’s a flip side to Facebook’s explosive growth and power; that flip side, as one analyst put it, is its bid to become a core piece of the Internet’s infrastructure. Facebook’s own technology infrastructure is expensive to build and operate, and it must scale rapidly. To date, Facebook has been up to the infrastructure challenge.