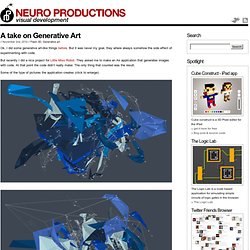

This is onformative, a studio for generative design. A take on Generative Art: OK, I did some generative art-like things before.

But it was never my goal; they were always somehow the side effect of experimenting with code. But recently I did a nice project for Little Miss Robot. They asked me to make an AIR application that generates images with code. At that point the code didn’t really matter. The only thing that counted was the result. Some of the types of pictures the application creates: (no source code for now, but think Perlin noise and Delaunay triangulation). I’ll update this post when the app is available.

This was a big difference in mindset for me. I looked around on the internet to see what was actually going on in the generative art scene. Some results of exploring that concept: Original (public domain) pictures: Source code: generative_art_source

Another thing I saw were beautiful 3D structures (mostly made with Structure Synth and rendered with Sunflow). So I thought it would be nice to make stuff like that in Flash.

Projects. Daniel Widrig. Daniel Widrig's Photostream. ComputationalMatter. Dynamic for the people. Fragments of time and space recorded with Kinect+SLR on NYC Subway [openFrameworks, Kinect] by @obviousjim + @alexicon3000. Candid shots from the Union Square NYC subway station, created using a Kinect and an SLR camera by James George in collaboration with Alexander Porter.

Video is forthcoming, but for now there are only the images below. We couldn’t wait to post these 3D fragments of time and space recorded in public. So good! Created using openFrameworks. For the full set of images, see James’ Flickr. /via @factoryfactory Previously: Sniff [openFrameworks]: Interactive projection of a dog that …

Hemesh and HemeshGui [Processing] - library by Frederik Vanhoutte (@wblut) /post by Amnon Owed. Info : Quayola.

Kinect - One Week Later [Processing, oF, Cinder, MaxMSP] - Now full speed ahead.. Last week we wrote about the wonderful work that happened over the weekend after the release of the open-source Xbox Kinect drivers.

![Kinect - One Week Later [Processing, oF, Cinder, MaxMSP] - Now full speed ahead..](http://cdn.pearltrees.com/s/pic/th/kinect-processing-cinder-10903470)

Today we look at what has happened since then and how the Microsoft gadget is being used in the creative code community. In case you missed our post from last week, you can see it here: Kinect – OpenSource [News].

Chris from ProjectAllusion.com got to play with the Kinect, and one late night he made this little demo in Processing using the hacked Kinect drivers. The Processing app sends out OSC with depth information based on the level of detail and the defined plane. The iPad app uses TouchOSC to send different values to the Processing app.

Daniel Reetz and Matti Kariluoma have been hacking a PowerShot A540 camera for infrared sensitivity, which lets you see the infrared dots the Kinect projects into space.

Philipp Robb has some early experiments with a Microsoft Kinect depth camera on a mobile robot base. It’s still very alpha.

Kinect - OpenSource [News] - amazing work created within a few days.. #of. There have been a number of exciting developments in the last few days related to the Xbox Kinect.

![Kinect - OpenSource [News] - amazing work created within a few days.. #of](http://cdn.pearltrees.com/s/pic/th/opensource-amazing-created-7414555)

For those who may not be aware of what it is, it’s a brand-new interface for Microsoft's Xbox games console. Kinect lets you play games without using a controller. By using projected infrared light, the device is able to map the environment and, via the Xbox's built-in software, recognise gestures of any kind (see video below). Only a few hours after the worldwide release of Kinect, the guys at NUI Group posted results first, but planned to release their driver as open source only once their $10k donation fund was filled. In the meantime, Hector Martin performed a quick hack of his own (three hours into the European launch) and released his results and code into the wild, named ‘libfreenect’.

Within a few hours of that, Theo Watson ported it, making Kinect run on OS X for the first time. Memo Akten wrote a little demo to analyse the depth map for gestural 3D interaction.

Moullinex - Catalina [Processing, Kinect] - Music video created using Kinect + Processing + Cinema 4D + After Effects. Music video for Catalina, a track off the Chocolat EP by Moullinex, released in January on Gomma Records, created using Kinect + Processing + Cinema 4D + After Effects.

![Moullinex - Catalina [Processing, Kinect] - Music video created using Kinect + Processing + Cinema 4D + After Effects](http://cdn.pearltrees.com/s/pic/th/moullinex-catalina-processing-10903426)

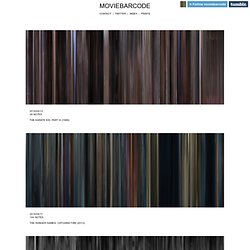

We started with the Kinect interface library developed for Processing and made available by Daniel Shiffman. Some modifications were introduced to get our 3D data into Cinema 4D. Each file represents one frame with a coordinate map (point index, x, y, z lines) in plaintext. A threshold filter was added so that we could filter out any points that were too far away.

Moviebarcode. 2014/04/12: The Karate Kid, Part III (1989); 2014/04/11: The Hunger Games: Catching Fire (2013); 2014/04/10: The Mark of Zorro (1940); 2014/04/09: Flandersui gae / Barking Dogs Never Bite (2000); 2014/04/08: The Last Airbender (2010).
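Going back to the Catalina export step (one plaintext file per frame with point index, x, y, z lines, plus a distance threshold), here is a rough Python sketch of what such a step might look like. It is not the authors' Processing code: the function names, the space-separated line layout, the three-decimal formatting and the use of z as the depth axis are all assumptions made for illustration.

```python
from typing import List, Tuple

# Assumption: a point is (x, y, z), with z treated as distance from the sensor.
Point = Tuple[float, float, float]


def filter_by_depth(points: List[Point], max_depth: float) -> List[Point]:
    """Keep only points whose depth (z) is at most max_depth."""
    return [p for p in points if p[2] <= max_depth]


def format_frame(points: List[Point]) -> str:
    """Render one frame as plaintext, one 'index x y z' line per point.

    The exact separator and precision are hypothetical; the source only
    says each line holds a point index and x, y, z.
    """
    return "\n".join(
        f"{i} {x:.3f} {y:.3f} {z:.3f}" for i, (x, y, z) in enumerate(points)
    )


if __name__ == "__main__":
    # Made-up sample frame, purely to show the flow.
    frame = [(0.0, 0.0, 1.0), (1.0, 2.0, 5.0), (-0.5, 0.25, 1.5)]
    near = filter_by_depth(frame, max_depth=2.0)
    print(format_frame(near))
```

Writing the result of `format_frame` to one file per frame would give a sequence that an importer on the 3D side could read back frame by frame.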

NODE10 - Forum for Digital Arts - Welcome. A collection of experiments using fancy shmancy code.