Deep learning. Branch of machine learning. Deep learning (also known as deep structured learning or differential programming) is part of a broader family of machine learning methods based on artificial neural networks with representation learning.

Learning can be supervised, semi-supervised or unsupervised.[1][2][3] Deep learning architectures such as deep neural networks, deep belief networks, recurrent neural networks and convolutional neural networks have been applied to fields including computer vision, speech recognition, natural language processing, audio recognition, social network filtering, machine translation, bioinformatics, drug design, medical image analysis, material inspection and board game programs, where they have produced results comparable to and in some cases surpassing human expert performance.[4][5][6]

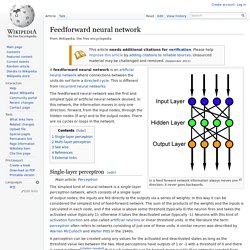

Feedforward neural network. In a feedforward network, information always moves in one direction; it never goes backwards.

A feedforward neural network is an artificial neural network where connections between the units do not form a directed cycle. This is different from recurrent neural networks. The feedforward neural network was the first and simplest type of artificial neural network devised. In this network, the information moves in only one direction, forward, from the input nodes, through the hidden nodes (if any) and to the output nodes. There are no cycles or loops in the network.

Artificial neural network. An artificial neural network is an interconnected group of nodes, akin to the vast network of neurons in a brain.
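As a minimal sketch of the one-directional flow described above for feedforward networks, the following Python passes activations from the input through each layer in order. The sigmoid activation, the layer sizes and all weight values are assumptions made for illustration; none of them come from the source.

```python
import math


def sigmoid(x):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Each unit takes a weighted sum of the inputs plus a bias, then applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(unit_weights, inputs)) + b)
        for unit_weights, b in zip(weights, biases)
    ]


def feedforward(inputs, layers):
    """Pass activations through the layers in order; nothing flows backwards."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical network: 2 inputs -> 2 hidden units -> 1 output, with made-up weights.
example_layers = [
    ([[0.5, -0.5], [0.3, 0.8]], [0.0, 0.1]),
    ([[1.0, -1.0]], [0.0]),
]
output = feedforward([1.0, 0.0], example_layers)
```

Because the loop visits each layer exactly once, the result depends only on the current input, which is the property that separates this design from a recurrent network.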

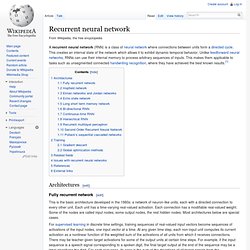

Here, each circular node represents an artificial neuron and an arrow represents a connection from the output of one neuron to the input of another. For example, a neural network for handwriting recognition is defined by a set of input neurons which may be activated by the pixels of an input image. After being weighted and transformed by a function (determined by the network's designer), the activations of these neurons are then passed on to other neurons. This process is repeated until finally, an output neuron is activated. This determines which character was read.

Recurrent neural network. A recurrent neural network (RNN) is a class of neural network where connections between units form a directed cycle.
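One way to picture a directed cycle is a single unit whose previous output is fed back as an extra input at the next time step. The sketch below assumes a tanh activation and hypothetical scalar weights (`w_in`, `w_rec`, `bias`); it illustrates the idea and is not a specific published architecture.

```python
import math


def rnn_step(x, h_prev, w_in, w_rec, bias):
    """One time step of a single recurrent unit: h = tanh(w_in*x + w_rec*h_prev + bias)."""
    return math.tanh(w_in * x + w_rec * h_prev + bias)


def run_rnn(sequence, w_in=0.5, w_rec=0.9, bias=0.0):
    """Feed a sequence through the unit, carrying the state from step to step."""
    h = 0.0  # initial state
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_in, w_rec, bias)
        states.append(h)
    return states


# A single pulse followed by zeros: the state stays non-zero after the input has gone.
states = run_rnn([1.0, 0.0, 0.0])
```

Setting `w_rec` to zero removes the cycle, and the unit then responds only to the current input.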

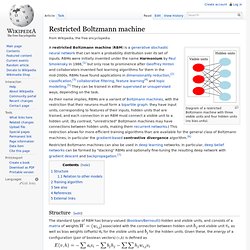

This creates an internal state of the network which allows it to exhibit dynamic temporal behavior. Unlike feedforward neural networks, RNNs can use their internal memory to process arbitrary sequences of inputs. This makes them applicable to tasks such as unsegmented connected handwriting recognition, where they have achieved the best known results.[1]

Restricted Boltzmann machine. Diagram of a restricted Boltzmann machine with three visible units and four hidden units (no bias units).

A restricted Boltzmann machine (RBM) is a generative stochastic neural network that can learn a probability distribution over its set of inputs. RBMs were initially invented under the name Harmonium by Paul Smolensky in 1986,[1] but only rose to prominence after Geoffrey Hinton and collaborators invented fast learning algorithms for them in the mid-2000s. RBMs have found applications in dimensionality reduction,[2] classification,[3] collaborative filtering, feature learning[4] and topic modelling.[5] They can be trained in either supervised or unsupervised ways, depending on the task.
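To make "stochastic" concrete, the sketch below samples the hidden units of a small RBM given a visible vector. It assumes binary units, no bias units, and the standard conditional P(h_j = 1 | v) = sigmoid(sum_i v_i W_ij); the 3-by-4 shape, the random weights and the function names are illustrative choices, and no training procedure is shown.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def hidden_probabilities(visible, weights):
    """P(h_j = 1 | v) for a binary RBM without bias units; weights[i][j] links v_i to h_j."""
    n_hidden = len(weights[0])
    return [
        sigmoid(sum(visible[i] * weights[i][j] for i in range(len(visible))))
        for j in range(n_hidden)
    ]


def sample_units(probabilities, rng):
    """Draw each unit independently as 1 with its given probability, else 0."""
    return [1 if rng.random() < p else 0 for p in probabilities]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
# Hypothetical weights: 3 visible units by 4 hidden units, small random values.
weights = [[rng.gauss(0.0, 0.1) for _ in range(4)] for _ in range(3)]
visible = [1, 0, 1]
probs = hidden_probabilities(visible, weights)
hidden = sample_units(probs, rng)
```

The hidden units can be sampled independently here because an RBM has no hidden-to-hidden connections, which is what the "restricted" refers to.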

Restricted Boltzmann machines can also be used in deep learning networks. In particular, deep belief networks can be formed by "stacking" RBMs and optionally fine-tuning the resulting deep network with gradient descent and backpropagation.[7]

Self-organizing map. A self-organizing map (SOM) or self-organizing feature map (SOFM) is a type of artificial neural network (ANN) that is trained using unsupervised learning to produce a low-dimensional (typically two-dimensional), discretized representation of the input space of the training samples, called a map.
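A single SOM training step can be sketched as follows: find the grid node whose weight vector is closest to a sample, then pull that node and its grid neighbors toward the sample. The Gaussian neighborhood, the `learning_rate` and `radius` values, and the 2-by-2 grid are assumptions for illustration; practical implementations usually shrink both parameters over time.

```python
import math


def best_matching_unit(grid, sample):
    """Return (row, col) of the node whose weight vector is closest to the sample."""
    best, best_dist = None, float("inf")
    for r, row in enumerate(grid):
        for c, weights in enumerate(row):
            dist = sum((w - s) ** 2 for w, s in zip(weights, sample))
            if dist < best_dist:
                best, best_dist = (r, c), dist
    return best


def som_update(grid, sample, learning_rate=0.5, radius=1.0):
    """Move every node toward the sample, scaled by a Gaussian of its grid distance to the BMU."""
    bmu_r, bmu_c = best_matching_unit(grid, sample)
    for r, row in enumerate(grid):
        for c, weights in enumerate(row):
            grid_dist_sq = (r - bmu_r) ** 2 + (c - bmu_c) ** 2
            influence = math.exp(-grid_dist_sq / (2 * radius ** 2))
            for k in range(len(weights)):
                weights[k] += learning_rate * influence * (sample[k] - weights[k])
    return bmu_r, bmu_c


# Hypothetical 2x2 map of 3-dimensional weight vectors.
grid = [
    [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]],
    [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0]],
]
bmu = som_update(grid, [0.9, 0.9, 0.9])
```

Because nearby nodes on the grid are updated together, neighboring nodes end up representing similar inputs.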

Self-organizing maps are different from other artificial neural networks in the sense that they use a neighborhood function to preserve the topological properties of the input space. This makes SOMs useful for visualizing low-dimensional views of high-dimensional data, akin to multidimensional scaling.

Connectionism. Connectionism is a set of approaches in the fields of artificial intelligence, cognitive psychology, cognitive science, neuroscience, and philosophy of mind, that models mental or behavioral phenomena as the emergent processes of interconnected networks of simple units.

There are many forms of connectionism, but the most common forms use neural network models.

Basic principles. The central connectionist principle is that mental phenomena can be described by interconnected networks of simple and often uniform units. The form of the connections and the units can vary from model to model. For example, units in the network could represent neurons and the connections could represent synapses.

Spreading activation. In most connectionist models, networks change over time.

Network science.

Network science is an interdisciplinary academic field which studies complex networks such as telecommunication networks, computer networks, biological networks, cognitive and semantic networks, and social networks.

The field draws on theories and methods including graph theory from mathematics, statistical mechanics from physics, data mining and information visualization from computer science, inferential modeling from statistics, and social structure from sociology. The United States National Research Council defines network science as "the study of network representations of physical, biological, and social phenomena leading to predictive models of these phenomena."

Small-world experiment. The "six degrees of separation" model. The small-world experiment comprised several experiments conducted by Stanley Milgram and other researchers examining the average path length for social networks of people in the United States.
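The quantity being estimated, average path length, can be computed directly when the whole network is known. The sketch below runs a breadth-first search from every node of a tiny, made-up acquaintance graph; the names and connections are hypothetical, and the original experiments estimated path lengths by forwarding letters rather than by computation.

```python
from collections import deque


def shortest_path_lengths(graph, source):
    """Breadth-first search: number of hops from source to every reachable node."""
    distances = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in distances:
                distances[neighbor] = distances[node] + 1
                queue.append(neighbor)
    return distances


def average_path_length(graph):
    """Mean shortest-path length over all ordered pairs of distinct, connected nodes."""
    total, pairs = 0, 0
    for source in graph:
        for target, dist in shortest_path_lengths(graph, source).items():
            if target != source:
                total += dist
                pairs += 1
    return total / pairs if pairs else 0.0


# Hypothetical chain of four acquaintances: ann - bob - cat - dan.
acquaintances = {
    "ann": ["bob"],
    "bob": ["ann", "cat"],
    "cat": ["bob", "dan"],
    "dan": ["cat"],
}
avg = average_path_length(acquaintances)
```

A small-world network is one where this average stays short even as the number of nodes grows large.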

The research was groundbreaking in that it suggested that human society is a small-world-type network characterized by short path-lengths. The experiments are often associated with the phrase "six degrees of separation", although Milgram did not use this term himself.

Historical context of the small-world problem. Mathematician Manfred Kochen and political scientist Ithiel de Sola Pool wrote a mathematical manuscript, "Contacts and Influences", while working at the University of Paris in the early 1950s, during a time when Milgram visited and collaborated in their research.

How to Burst the "Filter Bubble" that Protects Us from Opposing Views. The term “filter bubble” entered the public domain back in 2011 when the internet activist Eli Pariser coined it to refer to the way recommendation engines shield people from certain aspects of the real world.

Pariser used the example of two people who googled the term “BP”. One received links to investment news about BP while the other received links to the Deepwater Horizon oil spill, presumably as a result of some recommendation algorithm. This is an insidious problem. Much social research shows that people prefer to receive information that they agree with instead of information that challenges their beliefs. This problem is compounded when social networks recommend content based on what users already like and on what people similar to them also like.
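The feedback loop described above can be sketched with a toy recommender that scores unseen items by how similar their fans are to the current user. The Jaccard similarity measure, the user names and the item labels are all assumptions for illustration; real recommendation engines are far more elaborate and are not described in the source.

```python
def jaccard(a, b):
    """Overlap between two sets of liked items, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(user, likes, top_n=3):
    """Score items the user has not seen by the similarity of the other users who like them."""
    own = likes[user]
    scores = {}
    for other, other_likes in likes.items():
        if other == user:
            continue
        similarity = jaccard(own, other_likes)
        for item in other_likes - own:
            scores[item] = scores.get(item, 0.0) + similarity
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    # Items liked only by users with no overlap score zero and are never suggested.
    return [item for item, score in ranked[:top_n] if score > 0]


# Hypothetical data: alice and bob share tastes; carol likes entirely different items.
likes = {
    "alice": {"a1", "a2"},
    "bob": {"a1", "a2", "a3"},
    "carol": {"b1", "b2"},
}
suggestions = recommend("alice", likes)
```

In this toy data, alice is only ever offered what bob likes; carol's items never surface because the two users share nothing to begin with.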

This is the filter bubble: being surrounded only by people you like and content that you agree with. And the danger is that it can polarise populations, creating potentially harmful divisions in society.