Google creative lab & BERG develop interactive light interfaces. Dec 20, 2012. at the beginning of 2011 BERG started a wide-ranging conversation with the google creative lab, around near-future experiences of google and its products. during the discussions, a strong theme arose. both companies became curious about how it would feel to have google in the world, rather than on a screen. the question emerged: if google wasn’t trapped behind glass, what would it do?

LEGO calendar by vitamins digitally syncs to google calendar. Oct 01, 2013. made entirely of LEGO bricks, the cloud-based calendar by UK design studio vitamins is a wall-mounted time planner that syncs up with google calendar or iCal. coded using openFrameworks and openCV, it synchronizes all events and schedules to an online digital agenda as soon as users take a picture with a smartphone. the system makes the most of the tangibility of physical objects and the ubiquity of digital platforms, providing an interactive and tactile organizer for daily life. it works by sending photographs of the calendar to a special email address, where custom software scans the image, finds the position and color of every brick and then imports the updated data directly into iCal or google calendar. to see more on the ‘LEGO calendar’, watch the video below.
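The brick-scanning step described above can be illustrated with a small sketch. This is not the studio's code (the project itself is built with openFrameworks and openCV); it is a plain Python approximation that assumes the photo has already been cropped and aligned to the calendar grid, and that each cell holds one brick colour. The `PALETTE` values and the function names `mean_color`, `nearest_color` and `read_grid` are hypothetical, chosen only for illustration.

```python
from typing import Dict, List, Tuple

RGB = Tuple[int, int, int]
Image = List[List[RGB]]  # rows of pixels, each pixel an (r, g, b) tuple

# Hypothetical palette: brick colour name -> reference RGB value (assumed).
PALETTE: Dict[str, RGB] = {
    "empty": (200, 200, 200),
    "red": (200, 30, 30),
    "blue": (30, 60, 200),
    "green": (30, 160, 60),
}


def mean_color(image: Image, top: int, left: int, height: int, width: int) -> RGB:
    """Average the pixels of one rectangular grid cell."""
    total = [0, 0, 0]
    for y in range(top, top + height):
        for x in range(left, left + width):
            for channel in range(3):
                total[channel] += image[y][x][channel]
    count = height * width
    return (total[0] // count, total[1] // count, total[2] // count)


def nearest_color(color: RGB) -> str:
    """Return the palette name closest to `color` by squared RGB distance."""
    def distance(name: str) -> int:
        return sum((a - b) ** 2 for a, b in zip(color, PALETTE[name]))
    return min(PALETTE, key=distance)


def read_grid(image: Image, rows: int, cols: int) -> List[List[str]]:
    """Split the image into rows x cols cells and name each cell's colour."""
    cell_h = len(image) // rows
    cell_w = len(image[0]) // cols
    return [
        [
            nearest_color(mean_color(image, r * cell_h, c * cell_w, cell_h, cell_w))
            for c in range(cols)
        ]
        for r in range(rows)
    ]
```

In a sketch like this, each row of the returned grid could stand for a person or project and each column for a time slot, so the colour names map onto calendar entries. A real photo would also need perspective correction and lighting compensation, which this example leaves out.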

LEGO calendar by vitamins studio. video courtesy vitamins studio. Case studies (Berg) Google Creative Lab and BERG London carve interfaces out of light. The SMSlingshot. The SMSlingshot was a great success.

We travelled around the whole globe, showcasing and exhibiting in well-known galleries, big museums and little art fairs. To get a brief overview, we recommend this little video. Because of the increasing commercial interest in paving public space with digital advertising screens, the need for an accessible intervention device seemed obvious and necessary. The wish and habit to comment on (tag) the surrounding world is also an ancient and still living phenomenon that we try to preserve. Skintimacy, entre perception et intimité. Detours: Disney researchers turn houseplants into theremin. Augmented Reality Glove Lets You Use Your iPad Without Touching It. What if you could reach into your iPad and move around the objects on the screen with your hand?

Now you can do just that with the T(ether), an augmented reality glove that allows wearers to create virtual environments on the tablet with simple hand gestures. Users can manipulate the objects on the screen, such as picking them up, dropping them down, pushing them aside, or creating a new object by tracing the shape’s outline. Microsoft Augmented Reality Concept Fuses Virtual World with Reality. Microsoft researchers have created a new augmented reality concept by improving how virtual simulations react in the physical world.

The Kinect sensor is used in a process called Kinect Fusion, which allows projections of objects to react to different surfaces. Kinect Fusion is possible with the Beamatron, a device consisting of the Kinect sensor and a projector. The device is attached to a spinning head on the ceiling, which lets it take detailed maps of physical spaces. This technology projects objects anywhere in a room and allows realistic movement. These Guys Turned A Rock Climbing Wall Into A Big Video Game. Indoor rock climbing is a pretty excellent sport.

It’s great exercise, it works your brain, and you can feel yourself getting better each time you reach the top. But once you’ve mastered the fastest/hardest/most creative routes up a given wall, that wall becomes… pretty boring. Perhaps a massive, virtual chain saw heading in your direction will liven things up a bit? SimX Brings Augmented Reality to the Medical Field - TechCrunch Disrupt. Street-art. Murmur. Murmur is an architectural prosthesis that enables the communication between passers-by and the wall upon which it is connected. The installation simulates the movement of sound waves, building a luminous bridge between the physical and the virtual worlds.

There is a magical effect, a mystery in the way that sound waves move. Murmur focuses on this movement, thus creating an unconventional dialogue between the public and the wall. Collaboration Murmur is a collaboration between Chevalvert, 2Roqs, Polygraphik and Splank. Video. Augmented Structures / Frequency. Augmented bubbles as shadows projected on the wall. Keyfleas - Interactive augmented projection by Miles Peyton (@mlsptn) Created by Miles Peyton, a first-year student at Carnegie Mellon University, Keyfleas is an experiment in interactive augmented projection.

Inspired by the work of Chris Sugrue where light bugs crawl out of the screen and onto the viewer’s hand, Miles has used Processing and Box2D to create an augmented projection on the keyboard. As the user types, the “fleas” swarm around the pressed key, avoiding the letters. Miles describes the experience as “It’s okay if you feel something nibbling at your fingers.”

Revel: Programming the Sense of Touch. AIREAL: Interactive Tactile Experiences in Free Air. 3D Printed Interactive Speakers. This app turns your selfies into augmented reality GIFs. The future isn't filtered, it's chaotic – at least, that's the premise behind to.be camera, a new app that launched earlier this month.

Described as an "augmented reality camera", the free-to-download app lets you record video GIFs and add colourful visual layers on top of the image. It's already built a steady following from its New York launchpad, where it was first conceived by internet collage community to.be, which has close connections with other digital collectives like DIS and #BeenTrill#. "We were missing tools to gather pieces from our physical surroundings – like pencils and stones that you might have laying on your desk, or drawings that you make on paper," to.be co-founder Nick Dangerfield told us. The resulting GIFs aren't too dissimilar from a digital video artwork you might see in an art gallery – blending the real with the unreal, sea waves wash over suburban cul-de-sacs, and blissfully unaware babies glitter like gold.

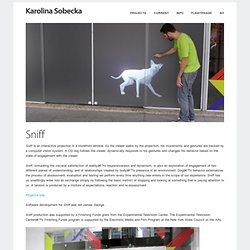

AR[t] 1 by AR Lab. ROOM RACERS: Mixed Reality Gaming (Nov 2010 version) Karolina Sobecka. Sniff is an interactive projection in a storefront window.

As the viewer walks by the projection, his movements and gestures are tracked by a computer vision system. A CG dog follows the viewer, dynamically responds to his gestures and changes his behavior based on the state of engagement with the viewer. Sniff, simulating the visceral satisfaction of reality’s responsiveness and dynamism, is also an exploration of engagement of two different planes of understanding, and of relationships created by the body’s presence in an environment. All the Universe is Full of the Lives of Perfect Creatures. In this interactive mirror, the viewer’s movement and expressions are mimicked by an animal head which is overlaid on the viewer’s reflection. The resulting effect invites inquiry into issues of self-awareness, empathy and non-verbal communication. A different animal appears every time a person walks in front of the mirror.

ColAR augmented reality colouring book. Apr 02, 2012. the ‘colAR’ application turns colouring book pages into custom 2D and 3D animations. left: blank colouring book page, © bruce mahalski. right: animation of coloured-in kiwi, © tech media network. developed by the human interface technology lab new zealand (HITlabNZ), ‘colAR’ is a computer program that transforms colouring book pages into animated 3D models. the project features a series of mini-applications, each dedicated to a particular colouring book whose printable pages are downloaded alongside the program. children and other users colour in the pages normally, but can then scan their work with a web camera. upon focusing in on a recognizable page, the program shows an animated version of the coloured-in characters onscreen. the 3D scene can be rotated to be viewed from different angles. the development team comprises adrian clark, andreas duenser, elwin lee, katy bang, and gabriel salas.

InFORM dynamic shape display augments physical interaction. Nov 13, 2013. all images courtesy tangible media group. five engineers from the tangible media group at MIT’s media lab have developed ‘inFORM’, a dynamic shape display that has the capability to render three-dimensional content physically, so users can interact with tangible digital information. the system has been created to communicate with the physical world around it, such as moving objects on a table’s surface. in the future, the technology could be used to mediate interaction through geospatial data, such as maps, terrain models and architectural models. Virtual gender swap with the oculus rift by beanotherlab.

Jan 21, 2014. all images courtesy of the machine to be another. interdisciplinary art collective beanotherlab asks ‘what would it be like to see through the eyes of the opposite sex?’, a question answered through their open source art investigation ‘the machine to be another’. using two immersive head-mounted displays (the oculus rift), the user partakes in a brain illusion, seeing a 3-dimensional video through the eyes of the person they face, who follows the former’s movements. in this interactive performance installation, the participants engage in an embodiment experience, seeing the other’s body as if it were their own. Glassified: An intelligent ruler with embedded transparent display.

EyeRing. Digital Airbrush. MAS S65: Science Fiction to Science Fabrication. Sensory fiction is about new ways of experiencing and creating stories. Traditionally, fiction creates and induces emotions and empathy through words and images. By using a combination of networked sensors and actuators, the Sensory Fiction author is provided with new means of conveying plot, mood, and emotion while still allowing space for the reader’s imagination. These tools can be wielded to create an immersive storytelling experience tailored to the reader. Pepsi brings packaging to life with augmented reality. Jaguar Concept Windshield Shows Off Augmented Reality in the Car. Land Rover's New Invention Lets You See Through Your Car's Hood. This Augmented-Reality Sandbox Turns Dirt Into a UI.