PyPI - the Python Package Index.

Python Comprehensions Tools and Concepts. IPython References. Amazon S3 — Boto 3 Docs 1.3.1 documentation. Boto 2.x contains a number of customizations to make working with Amazon S3 buckets and keys easy.

Boto 3 exposes these same objects through its resources interface in a unified and consistent way.

Creating the Connection

Boto 3 has both low-level clients and higher-level resources. For Amazon S3, the higher-level resources are the most similar to Boto 2.x's s3 module:

```python
# Boto 2.x
import boto
s3_connection = boto.connect_s3()

# Boto 3
import boto3
s3 = boto3.resource('s3')
```

Creating a Bucket

Creating a bucket in Boto 2 and Boto 3 is very similar, except that in Boto 3 all action parameters must be passed via keyword arguments and a bucket configuration must be specified manually.

Storing Data

Storing data from a file, stream, or string is easy.
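Here is a minimal sketch of creating a bucket and storing data with the Boto 3 resource interface. It assumes boto3 is installed and credentials are configured. The helper names `create_bucket_kwargs` and `create_and_store` are hypothetical, and the bucket name, key, and region are placeholders. The one S3 rule it encodes is that outside us-east-1 the region goes into `CreateBucketConfiguration` as a `LocationConstraint`, while for us-east-1 it is left out.

```python
from typing import Optional


def create_bucket_kwargs(bucket: str, region: Optional[str]) -> dict:
    # Boto 3 actions take keyword arguments only.
    kwargs = {"Bucket": bucket}
    # Outside us-east-1 the region must be given as a bucket configuration;
    # for us-east-1 (or no explicit region) it is omitted.
    if region and region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_and_store(bucket: str, key: str, data: bytes,
                     region: Optional[str] = None) -> None:
    # Imported here so create_bucket_kwargs works without boto3 installed.
    import boto3

    s3 = boto3.resource("s3", region_name=region)
    s3.create_bucket(**create_bucket_kwargs(bucket, region))
    # Store a bytes payload; Body also accepts an open binary file object.
    s3.Object(bucket, key).put(Body=data)
```

A call such as `create_and_store("my-example-bucket", "hello.txt", b"Hello, S3!", region="eu-west-1")` would talk to AWS directly, so run it only against an account where creating a bucket is acceptable.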

GitHub - awslabs/aws_lambda_sample_events_python: A Python module for creating sample events to test AWS Lambda functions. GitHub - FlyTrapMind/lambda-packages: Various popular python libraries, pre-compiled to be compatible with AWS Lambda. GitHub - FlyTrapMind/data-science-ipython-notebooks: Continually updated data science Python notebooks: Deep learning (TensorFlow, Theano, Caffe), scikit-learn, Kaggle, big data (Spark, Hadoop MapReduce, HDFS), matplotlib, pandas, NumPy, SciPy, Python ess.
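The idea of testing Lambda functions with sample events can be shown without the awslabs module itself. The sketch below uses a hypothetical handler and a hand-built S3 put event that contains only the fields the handler reads. The event shape (`Records` → `s3` → `bucket`/`object`) follows the S3 notification format. The function names are illustrative and are not that module's API.

```python
import json
from typing import Any, Dict, List
from urllib.parse import unquote_plus


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    # Collect "bucket/key" for every S3 record in the incoming event.
    objects: List[str] = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Object keys arrive URL-encoded in S3 notifications.
        key = unquote_plus(s3["object"]["key"])
        objects.append(f"{s3['bucket']['name']}/{key}")
    return {"statusCode": 200, "body": json.dumps({"objects": objects})}


def make_s3_put_event(bucket: str, key: str) -> Dict[str, Any]:
    # Hand-built sample event with only the fields the handler reads;
    # a real S3 notification carries many more fields.
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                "s3": {"bucket": {"name": bucket}, "object": {"key": key}},
            }
        ]
    }
```

Calling `lambda_handler(make_s3_put_event("my-bucket", "data/input.csv"), None)` exercises the handler locally. Passing `None` for the context works here only because this handler never touches it.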

GitHub - FlyTrapMind/gitsome: A supercharged Git/GitHub command line interface (CLI). GitHub - FlyTrapMind/saws: A supercharged AWS command line interface (CLI). Python Prompt Toolkit — prompt_toolkit 1.0.3 documentation. Windows. GitHub - FlyTrapMind/pyvim: Pure Python Vim clone. The xonsh shell — xonsh 0.3.4 documentation. GitHub - FlyTrapMind/python-prompt-toolkit: Library for building powerful interactive command lines in Python.

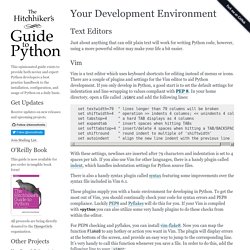

Your Development Environment.

Text Editors

Just about anything that can edit plain text will work for writing Python code; however, using a more powerful editor may make your life a bit easier.

Vim

Vim is a text editor which uses keyboard shortcuts for editing instead of menus or icons. There are a couple of plugins and settings for the Vim editor to aid Python development. If you only develop in Python, a good start is to set the default settings for indentation and line-wrapping to values compliant with PEP 8:

```vim
set textwidth=79  " lines longer than 79 columns will be broken
set shiftwidth=4  " operation >> indents 4 columns; << unindents 4 columns
set tabstop=4     " a hard TAB displays as 4 columns
set expandtab     " insert spaces when hitting TABs
set softtabstop=4 " insert/delete 4 spaces when hitting a TAB/BACKSPACE
set shiftround    " round indent to multiple of 'shiftwidth'
set autoindent    " align the new line indent with the previous line
```

Python - Pydoop on Amazon EMR. Elastic MapReduce Quickstart — mrjob v0.5.2 documentation.

Running an EMR Job

Running a job on EMR is just like running it locally or on your own Hadoop cluster, with the following changes:

- The job and related files are uploaded to S3 before being run.
- The job is run on EMR (of course).
- Output is written to S3 before mrjob streams it to stdout locally.
- The Hadoop version is specified by the EMR AMI version.

The output of this command should be identical to the output shown in Fundamentals, but it should take much longer.

Elastic Map Reduce with Amazon S3, AWS, EMR, Python, MrJob and Ubuntu 14.04. This tutorial is about setting up an environment with scripts to work via EMR, Amazon's Hadoop implementation, on huge datasets. By datasets I mean extremely large datasets, where a simple yet powerful grep no longer cuts it for you. What you need is Hadoop. Setting up Hadoop the first time, or scaling it, can be too much of an effort, which is why we switched to Amazon Elastic MapReduce, or EMR, Amazon's implementation of Yahoo!'s Hadoop, which itself is an implementation of Google's MapReduce paper. Amazon's EMR will take care of the Hadoop architecture and scalability, in the likely case that one cluster is not enough for you.

So let me outline the architecture of the tools and services I have in mind to get our environment going. You will need your Access Key ID, your Secret Access Key, and usually a private key file to access AWS programmatically.

Programmatic Deployment to Elastic Mapreduce with Boto and Bootstrap Action. A while back I wrote about How to combine Elastic Mapreduce/Hadoop with other Amazon Web Services.
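The mapper/reducer structure that mrjob runs locally or on EMR can be sketched in plain Python without mrjob. This is a simulation of the map, shuffle, and reduce phases over a list of lines, not mrjob's API. The counting job and the function names are illustrative assumptions. With mrjob itself, the same logic would live in the `mapper` and `reducer` methods of an `MRJob` subclass, and the `-r emr` runner option sends the job to EMR.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def mapper(line: str) -> Iterator[Tuple[str, int]]:
    # Emit one (key, value) pair per statistic for this input line.
    yield "chars", len(line)
    yield "words", len(line.split())
    yield "lines", 1


def reducer(key: str, values: Iterable[int]) -> Tuple[str, int]:
    # Combine every value that was emitted for the same key.
    return key, sum(values)


def run_locally(lines: Iterable[str]) -> Dict[str, int]:
    # Shuffle phase: group mapper output by key, as Hadoop does
    # between the map and reduce stages.
    groups: Dict[str, List[int]] = defaultdict(list)
    for line in lines:
        for key, value in mapper(line):
            groups[key].append(value)
    # Reduce phase: one reducer call per distinct key, in sorted key order.
    return dict(reducer(key, values) for key, values in sorted(groups.items()))


if __name__ == "__main__":
    print(run_locally(["hello world", "goodbye"]))
```

Keeping the mapper and reducer as pure functions makes them easy to check locally before paying for cluster time, which is the same motivation behind running an mrjob job locally first.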

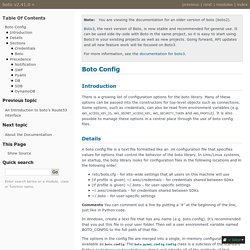

Flask (A Python Microframework) Python Developer Center. Boto Config — boto v2.41.0. The following sections and options are currently recognized within the boto config file.

Credentials

The Credentials section is used to specify the AWS credentials used for all boto requests. The order of precedence for authentication credentials is:

1. Credentials passed into the Connection class constructor.
2. Credentials specified by environment variables.
3. Credentials specified as named profiles in the shared credential file.
4. Credentials specified by default in the shared credential file.
5. Credentials specified as named profiles in the config file.
6. Credentials specified by default in the config file.

This section defines the following options: aws_access_key_id and aws_secret_access_key.

Command Line Tools — boto v2.41.0. Note: You are viewing the documentation for an older version of boto (boto2).

Boto3, the next version of Boto, is now stable and recommended for general use. It can be used side-by-side with Boto in the same project, so it is easy to start using Boto3 in your existing projects as well as new projects. Going forward, API updates and all new feature work will be focused on Boto3. For more information, see the documentation for boto3.

Boto ships with a number of command line utilities, which are installed when the package is installed. The included utilities are:

- asadmin: works with Autoscaling
- bundle_image: creates a bundled AMI in S3 based on an EC2 instance
- cfadmin: works with CloudFront & invalidations

Applications Built On Boto — boto v2.41.0.
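The six-step credential precedence from the Boto Config page can be sketched as a plain-Python resolver. This is not boto's implementation, and the function names are hypothetical. Several details are assumptions drawn from common AWS conventions: the section names (`default` in the shared credential file, `profile <name>` and `Credentials` in the boto config file) and the environment variable names `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`. File paths are passed in explicitly rather than guessed.

```python
import configparser
import os
from typing import Mapping, Optional, Tuple

Credentials = Tuple[str, str]


def _read_section(path: Optional[str], section: str) -> Optional[Credentials]:
    # Return the key pair from one INI section, or None if the file,
    # the section, or either option is missing.
    if not path or not os.path.exists(path):
        return None
    parser = configparser.ConfigParser(interpolation=None)
    parser.read(path)
    if not parser.has_section(section):
        return None
    key = parser.get(section, "aws_access_key_id", fallback=None)
    secret = parser.get(section, "aws_secret_access_key", fallback=None)
    return (key, secret) if key and secret else None


def resolve_credentials(
    access_key: Optional[str] = None,
    secret_key: Optional[str] = None,
    profile: Optional[str] = None,
    env: Optional[Mapping[str, str]] = None,
    shared_file: Optional[str] = None,
    config_file: Optional[str] = None,
) -> Optional[Credentials]:
    env = os.environ if env is None else env
    # 1. Credentials passed in directly (the "constructor" case).
    if access_key and secret_key:
        return access_key, secret_key
    # 2. Environment variables (names are an assumption).
    key = env.get("AWS_ACCESS_KEY_ID")
    secret = env.get("AWS_SECRET_ACCESS_KEY")
    if key and secret:
        return key, secret
    # 3-6. Files in the documented order; section names are assumptions.
    lookups = [
        (shared_file, profile),                                    # 3
        (shared_file, "default"),                                  # 4
        (config_file, f"profile {profile}" if profile else None),  # 5
        (config_file, "Credentials"),                              # 6
    ]
    for path, section in lookups:
        if section:
            found = _read_section(path, section)
            if found:
                return found
    return None
```

Each step is consulted only when every earlier step came up empty, so explicit arguments always win over the environment, and the environment always wins over any file.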

Boto: A Python interface to Amazon Web Services — boto v2.41.0. An Introduction to boto’s S3 interface — boto v2.41.0. This tutorial focuses on the boto interface to the Simple Storage Service from Amazon Web Services.

This tutorial assumes that you have already downloaded and installed boto.

Creating a Connection

The first step in accessing S3 is to create a connection to the service.