Transformers are Graph Neural Networks

Engineer friends often ask me: Graph Deep Learning sounds great, but are there any big commercial success stories?

Is it being deployed in practical applications? Besides the obvious ones (recommendation systems at Pinterest, Alibaba and Twitter), a slightly nuanced success story is the Transformer architecture, which has taken the NLP industry by storm. Let’s start by talking about the purpose of model architectures: representation learning.

Representation Learning for NLP

At a high level, all neural network architectures build representations of input data as vectors/embeddings, which encode useful statistical and semantic information about the data.

Transformers from scratch

I will assume a basic understanding of neural networks and backpropagation.

If you'd like to brush up, this lecture will give you the basics of neural networks and this one will explain how these principles are applied in modern deep learning systems. A working knowledge of PyTorch is required to understand the programming examples, but these can also be safely skipped.

Self-attention

The fundamental operation of any transformer architecture is the self-attention operation.

Natural Language Processing: the age of Transformers

This article is the first installment of a two-post series on Building a machine reading comprehension system using the latest advances in deep learning for NLP.

Stay tuned for the second part, where we'll introduce a pre-trained model called BERT that will take your NLP projects to the next level! In the recent past, if you specialized in natural language processing (NLP), there may have been times when you felt a little jealous of your colleagues working in computer vision. It seemed as if they had all the fun: the annual ImageNet classification challenge, Neural Style Transfer, Generative Adversarial Networks, to name a few.

At last, the dry spell is over, and the NLP revolution is well underway! It would be fair to say that the turning point was 2017, when the Transformer network was introduced in Google's "Attention Is All You Need" paper.

Next we shall take a moment to remember the fallen heroes, without whom we would not be where we are today.

New advances in natural language processing to better connect people

Natural language understanding (NLU) and language translation are key to a range of important applications, including identifying and removing harmful content at scale and connecting people across different languages worldwide.

Although deep learning–based methods have accelerated progress in language processing in recent years, current systems are still limited when it comes to tasks for which large volumes of labeled training data are not readily available. Recently, Facebook AI has achieved impressive breakthroughs in NLP using semi-supervised and self-supervised learning techniques, which leverage unlabeled data to improve performance beyond purely supervised systems. We took first place in several languages in the Fourth Conference on Machine Translation (WMT19) competition using a novel kind of semi-supervised training.

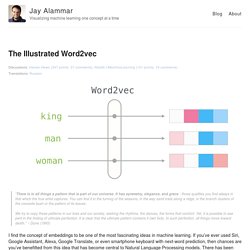

We’ve also introduced a new self-supervised pretraining approach, RoBERTa, that surpassed all existing NLU systems on several language comprehension tasks.

The Illustrated Word2vec – Jay Alammar – Visualizing machine learning one concept at a time

“There is in all things a pattern that is part of our universe.

It has symmetry, elegance, and grace - those qualities you find always in that which the true artist captures. You can find it in the turning of the seasons, in the way sand trails along a ridge, in the branch clusters of the creosote bush or the pattern of its leaves. We try to copy these patterns in our lives and our society, seeking the rhythms, the dances, the forms that comfort. Yet, it is possible to see peril in the finding of ultimate perfection.

The Illustrated BERT, ELMo, and co. (How NLP Cracked Transfer Learning) – Jay Alammar – Visualizing machine learning one concept at a time

The year 2018 has been an inflection point for machine learning models handling text (or more accurately, Natural Language Processing or NLP for short). Our conceptual understanding of how best to represent words and sentences in a way that best captures underlying meanings and relationships is rapidly evolving.

Moreover, the NLP community has been putting forward incredibly powerful components that you can freely download and use in your own models and pipelines. (It’s been referred to as NLP’s ImageNet moment, referencing how similar developments years ago accelerated the development of machine learning in computer vision tasks.) (ULM-FiT has nothing to do with Cookie Monster.

CS224n: Natural Language Processing with Deep Learning

Can I follow along from the outside?

We'd be happy if you join us! We plan to make the course materials widely available: the assignments, course notes and slides will be available online. However, we won't be able to give you course credit.

Are the lecture videos publicly available online?

Update as of July 2018: Though we hoped to make the lecture videos publicly available, unfortunately we were unable to secure permission to do so this year.

Can I take this course on a credit/no credit basis?

Yes.

A Review of the Neural History of Natural Language Processing - AYLIEN