Akeru beta 3.2. Capture Video from File or Camera. Capture Video From File You can download this OpenCV Visual C++ project from here.

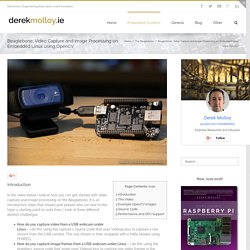

The downloaded file is a compressed .rar folder. Beaglebone: Video Capture and Image Processing on Embedded Linux using OpenCV. Introduction In the video below I look at how you can get started with video capture and image processing on the Beaglebone.

It is an introductory video that should give people who are new to this topic a starting point to work from. I look at three distinct challenges: (1) How do you capture video from a USB webcam under Linux? I do this using the capture.c source code, which uses Video4Linux to capture a raw stream from the USB camera. This raw stream is then wrapped with an H264 header using FFMPEG. (2) How do you capture image frames from a USB webcam under Linux? I do this using the grabber.c source code, which again uses Video4Linux to capture raw video frames in the uncompressed PPM format. (3) How do you use OpenCV to capture and process image frames so that you can build computer vision applications under Linux on the Beaglebone? I do this using the boneCV.cpp program as described below. Android - How can I calculate the distance to the target? Android - How can I update my UI in response to tracking events?

Android - How do I add another target to the Video Playback sample. Torcellite/imageComparator. Studio. Using Ultrasonics for Detection People. All of the MaxBotix® Inc. ultrasonic sensors are capable of detecting people.

The range at which the MaxSonar® family of sensors can detect people varies from sensor to sensor. When choosing a sensor for people detection, we recommend viewing the sensor's beam plots for the approximate range of people detection. People detection generally falls between grid pattern A and grid pattern B when the sensor is perpendicular to the person. Typically a person will reflect the same amount of ultrasonic energy as a 1-inch-diameter dowel. Even though people are physically large targets, a human is a soft target that absorbs a large amount of ultrasonic sound and reflects only a fraction of it. Because of this soft-target nature, occasional range readings have been observed to be incorrect.
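Since occasional range readings may be incorrect, one common way to cope is to take the median of the last few readings rather than trusting each one. The sketch below is only an illustration of that idea: the class name, the window size of 5, and the sample values are assumptions, not something taken from the MaxBotix material.

```python
from collections import deque
from statistics import median


class MedianRangeFilter:
    """Keep the last `window` range readings and report their median."""

    def __init__(self, window=5):
        # A bounded deque drops the oldest reading automatically.
        self.readings = deque(maxlen=window)

    def update(self, reading):
        self.readings.append(reading)
        return median(self.readings)


# Hypothetical readings in inches; 254 stands in for one stray reading.
samples = [60, 61, 254, 60, 62, 61, 60]
range_filter = MedianRangeFilter(window=5)
filtered = [range_filter.update(r) for r in samples]
print(filtered)
```

A single stray value never reaches the output as long as it is outnumbered by good readings inside the window, at the cost of a short delay when the true range changes.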

People detection is not perfect. Industries: LV-MaxSonar-EZ Ultrasonic sensor test. I've been thinking for some time about how I could keep the quadcopter "parked" at a height set by the user and how the quadcopter could detect and avoid obstacles.

After some research I decided to use an ultrasonic sensor. I could also have chosen infrared sensors, but ultrasonic sensors have a longer range, can be used both indoors and outdoors, and come in a variety of configurations. Infrared sensors, by contrast, depend on lighting conditions and on the color of the surfaces in front of them, so they are better suited to detecting objects than to measuring distances accurately. XL-MaxSonar-AE0(MB1300) The Arduino OBD-II Adapter works as a vehicle OBD-II data bridge for Arduino, with an open-source Arduino library provided.

Besides providing OBD-II data access, it also provides a power supply (converted and regulated from the OBD-II port) for the Arduino and its attached devices.

**Features**

- Directly pluggable into the vehicle's OBD-II port
- Serial data interface (UART or I2C, depending on the model)
- High-efficiency DC-DC module for 5V/3.3V DC output up to 2A
- Supports CAN bus (used by most modern cars), KWP2000 and ISO9141-2 protocols
- Access to all OBD-II PIDs provided by the vehicle ECU
- Embedded 3-axis accelerometer, 3-axis gyroscope and temperature sensors (some models only)
- Extendable and actively maintained Arduino library and example sketches provided

**Enhanced features of TEL0068**

TEL0068 has an additional MPU6050 module built in, which provides an accelerometer, a gyroscope and a temperature sensor, all accessible via I2C.

SENSOR: HC-SR04. LinkSprite Learning Center. Use Ultrasonic Sensor to Measure Distance on pcDuino. Www.mon-club-elec.fr/mes_downloads/doc_pcduino/4a.pcduino_personnalisation_du_systeme_de_base_v2ok.pdf. PcDuino pcDuino V2 board. The pcDuino V2 board is a high-performance mini PC at a very affordable price, equipped with a Wi-Fi module and supporting Ubuntu and Android ICS.

Simply connect the pcDuino V2 board to a 5 V DC power supply, a keyboard, a mouse and a display to be up and running. The pcDuino V2 has an HDMI video output and is compatible with any television or monitor equipped with an HDMI interface. Platform - Vuforia. Template Matching. Goal In this tutorial you will learn how to: use the OpenCV function matchTemplate to search for matches between an image patch and an input image; use the OpenCV function minMaxLoc to find the maximum and minimum values (as well as their positions) in a given array.
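To make the two steps in that goal concrete, here is a plain-Python sketch of the underlying idea: slide the patch over the image, score every position, then look up the extreme values and where they occur. It is not OpenCV code and does not call matchTemplate or minMaxLoc; the function names, the squared-difference score, and the tiny test image are assumptions chosen for illustration. With a squared-difference score, as with OpenCV's TM_SQDIFF method, the lowest value marks the best match.

```python
def match_template_sqdiff(image, patch):
    """Slide `patch` over `image` and return a grid of squared-difference scores.

    Both arguments are lists of rows of grayscale values.
    A lower score means a closer match at that position.
    """
    img_h, img_w = len(image), len(image[0])
    pat_h, pat_w = len(patch), len(patch[0])
    scores = []
    for y in range(img_h - pat_h + 1):
        row = []
        for x in range(img_w - pat_w + 1):
            ssd = sum(
                (image[y + dy][x + dx] - patch[dy][dx]) ** 2
                for dy in range(pat_h)
                for dx in range(pat_w)
            )
            row.append(ssd)
        scores.append(row)
    return scores


def min_max_loc(scores):
    """Return (min_val, max_val, min_loc, max_loc), with locations as (x, y)."""
    cells = [(v, (x, y)) for y, row in enumerate(scores) for x, v in enumerate(row)]
    min_val, min_loc = min(cells)
    max_val, max_loc = max(cells)
    return min_val, max_val, min_loc, max_loc


# Hypothetical 4x5 image containing the 2x2 patch at column 2, row 1.
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 8, 0],
    [0, 0, 7, 6, 0],
    [0, 0, 0, 0, 0],
]
patch = [[9, 8], [7, 6]]

scores = match_template_sqdiff(image, patch)
min_val, max_val, min_loc, max_loc = min_max_loc(scores)
print("best match at (x, y) =", min_loc, "with score", min_val)
```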

Theory What is template matching? The CImg Library - C++ Template Image Processing Toolkit. pHash.org: Home of pHash, the open source perceptual hash library. OpenCV. VXL - C++ Libraries for Computer Vision. Integrating Vision Toolkit. The Integrating Vision Toolkit (IVT) is a powerful and fast C++ computer vision library with an easy-to-use object-oriented architecture.

It offers its own multi-platform GUI toolkit. Availability The library is available as free software under a 3-clause BSD license. It is written in pure ANSI C++ and compiles using any available C++ compiler (e.g. any Visual Studio, any gcc, TI Code Composer). It is cross-platform and runs on basically any platform offering a C++ compiler, including Windows, Mac OS X and Linux. OpenCV. History Advance vision research by providing not only open but also optimized code for basic vision infrastructure.

No more reinventing the wheel. Disseminate vision knowledge by providing a common infrastructure that developers could build on, so that code would be more readily readable and transferable. Advance vision-based commercial applications by making portable, performance-optimized code available for free, with a license that did not require those applications to be open or free themselves. The first alpha version of OpenCV was released to the public at the IEEE Conference on Computer Vision and Pattern Recognition in 2000, and five betas were released between 2001 and 2005. The first 1.0 version was released in 2006. In mid-2008, OpenCV obtained corporate support from Willow Garage, and is now again under active development.

IN2AR - Flash Augmented Reality Engine. Free Open Source Augmented Reality Engine. Serving Raspberry Pi with Flask - Matt Richardson, Creative Technologist. The following is an adapted excerpt from Getting Started with Raspberry Pi.

I especially like this section of the book because it shows off one of the strengths of the Pi: its ability to combine modern web frameworks with hardware and electronics. Not only can you use the Raspberry Pi to get data from servers via the internet, but your Pi can also act as a server itself. There are many different web servers that you can install on the Raspberry Pi. Traditional web servers, like Apache or lighttpd, serve the files from your board to clients. Most of the time, servers like these are sending HTML files and images to make web pages, but they can also serve sound, video, executable programs, and much more. However, there's a new breed of tools that extend programming languages like Python, Ruby, and JavaScript to create web servers that dynamically generate the HTML when they receive HTTP requests from a web browser.
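As a rough illustration of a server that generates its HTML at request time, the sketch below builds a page containing the current time whenever a browser asks for it. It uses Python's standard-library http.server rather than Flask, so it shows the general idea only and is not the book's code; the function and class names and port 8000 are assumptions.

```python
from datetime import datetime
from http.server import BaseHTTPRequestHandler, HTTPServer


def render_page(now):
    """Build the HTML for one request; the content depends on when it is asked for."""
    stamp = now.strftime("%Y-%m-%d %H:%M:%S")
    return (
        "<!DOCTYPE html>"
        "<html><head><title>Hello from the Pi</title></head>"
        f"<body><h1>Hello!</h1><p>The server time is {stamp}.</p></body></html>"
    )


class DynamicPageHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # The page is generated fresh for every GET request, not read from a file.
        body = render_page(datetime.now()).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Serve pages until interrupted. Port 8000 is an arbitrary choice."""
    HTTPServer(("0.0.0.0", port), DynamicPageHandler).serve_forever()
```

Calling `serve()` on the board and opening its address on port 8000 from another machine should show a page whose timestamp changes on every reload.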

WiFiWebServer. WiFi Web Server In this example, you will use your WiFi Shield and your Arduino to create a simple Web server. Using the WiFi library, your device will be able to answer an HTTP request with your WiFi shield. After opening a browser and navigating to your WiFi shield's IP address, your Arduino will respond with just enough HTML for a browser to display the input values from all six analog pins. This example is written for a network using WPA encryption.

Hardware Required. Reading analog inputs on a Raspberry with an ADC chip: the MCP3008 - Slog. Analogue Sensors On The Raspberry Pi Using An MCP3008. The Raspberry Pi has no built-in analogue inputs, which means it is a bit of a pain to use many of the available sensors. I wanted to update my garage security system with the ability to use more sensors, so I decided to investigate an easy and cheap way to do it. The MCP3008 was the answer. URM37 V3.2 ultrasonic sensor [SEN0001] - 13,80€ The URM37 V3.2 sensor is ideal for applications that need to measure the distance between moving or stationary objects. Its robotics applications are very popular, but this sensor is also useful in security systems and wherever infrared cannot be used. Sharp GP2Y0A710K IR distance sensor (100-550cm) [SEN0085] - 30,80€ The Sharp GP2Y0A710K infrared distance sensor is a new version of the GP2Y0A700K sensor.
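For the MCP3008 mentioned above, the exchange with the chip is a three-byte SPI transfer that returns a 10-bit reading. The sketch below covers only the byte handling, so it runs without any hardware; the helper names and the 3.3 V reference voltage are assumptions, not something taken from the linked articles.

```python
def mcp3008_request(channel):
    """Return the 3 bytes to send for a single-ended read of `channel` (0-7)."""
    if not 0 <= channel <= 7:
        raise ValueError("MCP3008 has channels 0 to 7")
    # Byte 0: start bit. Byte 1: single-ended flag (bit 7) plus channel (bits 6-4).
    return [0x01, (0x08 | channel) << 4, 0x00]


def mcp3008_decode(response):
    """Extract the 10-bit result from the 3 bytes received during the transfer."""
    return ((response[1] & 0x03) << 8) | response[2]


def to_volts(raw, vref=3.3):
    """Scale a 10-bit reading to volts; vref=3.3 is an assumed reference voltage."""
    return raw * vref / 1024


# Hypothetical response bytes standing in for a real SPI transfer.
raw = mcp3008_decode([0x00, 0x02, 0x00])
print(mcp3008_request(0), raw, to_volts(raw))
```

On a Raspberry Pi with the py-spidev package installed (an assumption), the request would typically be sent with `spi.xfer2(mcp3008_request(0))` and the returned list passed to `mcp3008_decode`.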

The sensor measures distance continuously and returns it as an analog voltage, with a range of one to five and a half meters. "SRF10" ultrasonic rangefinder. LabFab Rennes - French-speaking fabrication laboratory project. BeagleBone Black. What is BeagleBone Black? BeagleBone Black is a $45 MSRP community-supported development platform for developers and hobbyists. Boot Linux in under 10 seconds and get started on development in less than 5 minutes with just a single USB cable. "BeagleBone Black" board (Rev. A6A) Texas Instruments Android Development Kit. Click on the links in the table below to download. This release package provides the Android Jelly Bean 4.1.2 distribution for TI's Sitara(TM) AM335x ARM Cortex(TM) A8 Processors.

The Development Kit provides pre-built images for the AM335x Starter Kit, AM335x EVM and BeagleBone that include Android default apps, multimedia files, and RowboPerf performance and benchmarking utilities to help with evaluation and prototyping. It also includes Android source code with pre-integrated POWERVR(TM) SGX 3D graphics accelerator drivers, TI hardware abstraction for Audio, WLAN & Bluetooth for the TI WL1271 chipset, USB mass storage and more. In addition, the package includes debug and development tools such as a prebuilt ARM GCC toolchain, TI CCSv5 and ADT plugins, to enable an Android developer to easily build custom Android solutions for non-phone market segments. Android on Texas Instruments Embedded Processors Wiki.