Fastmocap - Kinect Motion Capture - Mocap software for everyone. Kinect. Kinect (codenamed in development as Project Natal) is a line of motion sensing input devices by Microsoft for Xbox 360 and Xbox One video game consoles and Windows PCs.

Based around a webcam-style add-on peripheral, it enables users to control and interact with their console/computer without the need for a game controller, through a natural user interface using gestures and spoken commands.[9] The first-generation Kinect was first introduced in November 2010 in an attempt to broaden Xbox 360's audience beyond its typical gamer base.[10] A version for Windows was released on February 1, 2012.[6] Kinect competes with several motion controllers on other home consoles, such as Wii Remote Plus for Wii, PlayStation Move/PlayStation Eye for PlayStation 3, and PlayStation Camera for PlayStation 4. Technology. Programming for Kinect 3 – A simple Kinect App in Processing.

After (hopefully) successful installation of the OpenNI drivers, we will finally get our hands dirty on some code to create our first Kinect-enabled application.

To make things easier we’ll be using Processing.org and simple-openni. Processing.org is a Java-based programming environment that … Kinect Hacking using Processing. About Processing from Processing.org: Processing is an open source programming language and environment for people who want to create images, animations, and interactions.

Initially developed to serve as a software sketchbook and to teach fundamentals of computer programming within a visual context, Processing also has evolved into a tool for generating finished professional work. Today, there are tens of thousands of students, artists, designers, researchers, and hobbyists who use Processing for learning, prototyping, and production. Z Vector Takes Live Kinect Feeds To Create Stunning Video Art. This is a bit of a niche product, but it’s interesting nonetheless.

Artist Julius Tuomisto believes that VJs are the next DJs. In the same way that a DJ shepherds music lovers through a thoughtful selection of tracks, video artists can guide audiences through live visualizations that respond to music. He and his Helsinki-based firm Delicode have created a new software platform, called Z Vector, that can take data from a Kinect or the PrimeSense Carmine to create live video feeds and visualizations that rotate around 3D forms and people captured by the camera. It’s a tool for live performances, not for programming. You can see how it works in this music video he created with the band Phantom. Z Vector takes the raw 3D data from the Kinect and applies effects or filters to it, such as different geometric textures and lines (as in the Phantom video). It can also add distortion fields, as in the video below, or particle trails and gradients. An open source implementation of KinectFusion - Point Cloud Library.

Synapse for Kinect. SYNAPSE for Kinect Update: There’s some newer Kinect hardware out there, “Kinect for Windows”.

This hardware is slightly different, and doesn’t work with Synapse. Be careful when purchasing: Synapse only supports “Kinect for Xbox”. Update to the update: There appears to also be newer “Kinect for Xbox” hardware out there. Model 1414 Kinects work with Synapse, but I’m getting reports that the newer 1473 models do not work. Update the third: Synapse doesn’t work on Windows 8, sorry. Synapse is an app for Mac and Windows that allows you to easily use your Kinect to control Ableton Live, Quartz Composer, Max/MSP/Jitter, and any other application that can receive OSC events. Kinect Guide to Using Synapse with Quartz Composer. Ryan Challinor wrote an incredibly useful tool for speeding up the setup process involved with using your Kinect sensor with Apple's free visual programming tool Quartz Composer.

I was able to easily set up a quick demo where a particle system with a halo effect would follow my left hand along the X and Y axes. Incredibly easy to set up with a very rewarding end result. First you'll need to download Synapse. Since this guide uses Quartz Composer, you'll only need to download the Mac version of Synapse for Kinect. You can download it from the original source or from our resource section right here. You'll also need to download the Quartz Composer plugin qcOSC in order to send OSC joint messages to QC. Getting Started with Kinect and Processing. So, you want to use the Kinect in Processing.

Great. This page will serve to document the current state of my Processing Kinect library, with some tips and info. The current state of affairs: Since the Kinect launched in November 2010, there have been several models released. Here's a quick list of what is out there and what is supported in Processing for Mac OS X. Kinect 1414: This is the original Kinect and works with the library documented on this page in Processing 2.1. Kinect 1473: This looks identical to the 1414, but is an updated model.

Now, before you proceed, you could also consider using the SimpleOpenNI library and read Greg Borenstein’s Making Things See book. OpenKinect. Setting up Kinect on Mac — black label creative. Update 27/04/2013: Latest test of the OpenNI 2.1.0 beta and NITE2 was good but it’s not working with the SimpleOpenNI library yet.

I’ll keep watching for updates and let you know when it’s all running. Thanks to open source projects like OpenNI and OpenKinect, you can now use Microsoft’s Kinect on more than just Windows. This guide is for those running OS X 10.6.8 or newer but might also be applicable to anyone still running older versions or Linux. Some changes to the command line code might be needed though – if you get it going, let me know in the comments. The main parts involved here are OpenNI, SensorKinect, and NITE. Setup Microsoft Kinect on Mac OS X 10.9 (Mavericks). If you want to get the Microsoft Kinect set up and working on your Mac using OS X 10.9 Mavericks, then you’ve come to the right place.

Since posting the first tutorial, a number of new software updates have been released, so it’s a good idea to recap from the start. This tutorial will detail all the steps necessary to get the Kinect working in Mavericks, so buckle up and let’s get this party started. As always, if you have any questions, issues, or feedback, please feel free to post them in the comments section at the bottom, and to keep abreast of any new updates and posts you can follow me on Twitter, or subscribe using the new email form in the sidebar. Oh, and if you don’t own a Kinect yet, there are a few things you’ll need to know, so please check out the buyers guide or If you followed my earlier tutorial and/or had your Kinect running in Mac OS X 10.8 Mountain Lion, then you’ll want to complete this step before moving ahead. When it comes to hacking the Kinect, cleaner is better.

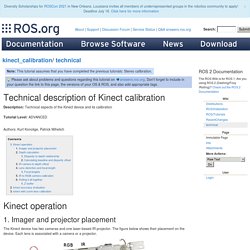

Kinect and Processing experiments. Kinect_calibration/technical. Description: Technical aspects of the Kinect device and its calibration. Tutorial Level: ADVANCED. Authors: Kurt Konolige, Patrick Mihelich. Imager and projector placement: The Kinect device has two cameras and one laser-based IR projector.

The figure below shows their placement on the device. This image is provided by iFixit. All the calibrations done below are based on IR and RGB images of chessboard patterns, using OpenCV's calibration routines. Depth calculation: The IR camera and the IR projector form a stereo pair with a baseline of approximately 7.5 cm. Depth is calculated by triangulation against a known pattern from the projector. Disparity to depth relationship: For a normal stereo system, the cameras are calibrated so that the rectified images are parallel and have corresponding horizontal lines. Z = b*f / d,
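
To make the triangulation relation Z = b*f / d concrete, here is a minimal Python sketch. It takes b as the baseline in meters (0.075 m, from the 7.5 cm figure above), f as the focal length in pixels, and d as an ordinary stereo disparity in pixels. The function name `depth_from_disparity` and the default focal length of 580 pixels are assumptions chosen for illustration, not calibrated values from the tutorial.

```python
def depth_from_disparity(disparity_px, baseline_m=0.075, focal_px=580.0):
    """Return depth Z in meters from Z = b * f / d.

    baseline_m: stereo baseline b in meters (about 7.5 cm per the text).
    focal_px:   focal length f in pixels (assumed value, not a calibration result).
    disparity_px: disparity d in pixels; must be positive.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return baseline_m * focal_px / disparity_px


if __name__ == "__main__":
    # Larger disparities correspond to closer objects.
    for d in (20.0, 43.5, 87.0):
        print(f"d = {d:5.1f} px  ->  Z = {depth_from_disparity(d):.3f} m")
```

Because depth is inversely proportional to disparity, halving the disparity doubles the estimated depth. The sketch applies only to a normalized stereo disparity; it does not model whatever raw encoding the device itself reports.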