Computer science. Computer science deals with the theoretical foundations of information and computation, together with practical techniques for the implementation and application of these foundations.

History

The earliest foundations of what would become computer science predate the invention of the modern digital computer.

Machines for calculating fixed numerical tasks, such as the abacus, have existed since antiquity, aiding in computations such as multiplication and division. Algorithms for performing computations have likewise existed since antiquity, even before sophisticated computing equipment was created. The ancient Sanskrit treatise Shulba Sutras, or "Rules of the Chord", is a book of algorithms written in 800 BCE for constructing geometric objects like altars using a peg and chord, an early precursor of the modern field of computational geometry.

Over time, the usability and effectiveness of computing technology have improved significantly.

Computer-aided software engineering. (Figure: Example of a CASE tool.)

Computer-aided software engineering (CASE) is the application of a set of tools and methods to a software system with the desired end result of high-quality, defect-free, and maintainable software products.[1] It also refers to methods for the development of information systems together with automated tools that can be used in the software development process.[2]

History

The Information System Design and Optimization System (ISDOS) project, started in 1968 at the University of Michigan, generated a great deal of interest in using computer systems to help analysts with the very difficult process of analysing requirements and developing systems. Several papers by Daniel Teichroew inspired a whole generation of enthusiasts with the potential of automated systems development.

His Problem Statement Language / Problem Statement Analyzer (PSL/PSA) tool was a CASE tool, although it predated the term. Under the direction of Albert F. [...]

IBM System i. (Figure: IBM System i 570 server, as of 2006.)

The IBM System i is IBM's previous generation of midrange computer systems for IBM i users. It was replaced by IBM Power Systems in April 2008.

The platform was first introduced as the AS/400 (Application System/400) on June 21, 1988, and was renamed the eServer iSeries in 2000. As part of IBM's Systems branding initiative in 2006, it was renamed again, to System i. The codename of the AS/400 project was "Silver Lake", named for the lake in downtown Rochester, Minnesota, where development of the system took place. In April 2008, IBM announced its integration with the System p platform.

Summary

The predecessor to the AS/400, the IBM System/38, was first made available in August 1979 and was marketed as a minicomputer for general business and departmental use. Realizing the importance of compatibility with the thousands of programs written in legacy code, IBM launched the AS/400 midrange computer line in 1988.

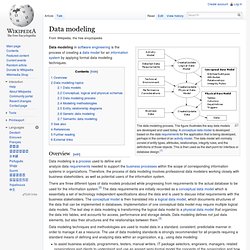

Data modeling. (Figure: The data modeling process.)

The figure illustrates the way data models are developed and used today. A conceptual data model is developed based on the data requirements for the application that is being developed, perhaps in the context of an activity model. The data model will normally consist of entity types, attributes, relationships, integrity rules, and the definitions of those objects. This is then used as the starting point for interface or database design.[1]

Data modeling in software engineering is the process of creating a data model for an information system by applying formal data modeling techniques.

Overview

Data modeling is a process used to define and analyze the data requirements needed to support the business processes within the scope of corresponding information systems in organizations.

Data modeling techniques and methodologies are used to model data in a standard, consistent, predictable manner in order to manage it as a resource.
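As a rough illustration of the components a conceptual data model is said to contain (entity types, attributes, relationships, integrity rules, and definitions), the following Python sketch represents them as plain data classes. All names here (`Attribute`, `EntityType`, `Relationship`, `DataModel`, `build_example_model`, and the Customer/Order example) are hypothetical and chosen only for illustration; they do not come from any particular modeling standard or tool, and the two integrity rules shown are only the simplest possible ones.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class Attribute:
    """A named property of an entity type (hypothetical structure)."""
    name: str
    type_name: str
    required: bool = True


@dataclass
class EntityType:
    """An entity type together with its definition and attributes."""
    name: str
    definition: str
    attributes: List[Attribute] = field(default_factory=list)


@dataclass(frozen=True)
class Relationship:
    """A named association between two entity types."""
    name: str
    source: str
    target: str
    cardinality: str  # free-form label such as "1:N"


@dataclass
class DataModel:
    entities: Dict[str, EntityType] = field(default_factory=dict)
    relationships: List[Relationship] = field(default_factory=list)

    def add_entity(self, entity: EntityType) -> None:
        self.entities[entity.name] = entity

    def add_relationship(self, rel: Relationship) -> None:
        # Integrity rule: both ends must refer to known entity types.
        for end in (rel.source, rel.target):
            if end not in self.entities:
                raise ValueError(f"unknown entity type: {end}")
        self.relationships.append(rel)

    def validate(self, entity_name: str, record: dict) -> List[str]:
        """Return the integrity-rule violations found in one record."""
        entity = self.entities[entity_name]
        problems = []
        # Integrity rule: required attributes must be present.
        for attr in entity.attributes:
            if attr.required and record.get(attr.name) is None:
                problems.append(f"missing required attribute: {attr.name}")
        # Integrity rule: no attributes outside the model.
        known = {attr.name for attr in entity.attributes}
        for key in record:
            if key not in known:
                problems.append(f"unknown attribute: {key}")
        return problems


def build_example_model() -> DataModel:
    """Build a two-entity example model (assumed, for illustration)."""
    model = DataModel()
    model.add_entity(EntityType(
        name="Customer",
        definition="A party that places orders.",
        attributes=[
            Attribute("customer_id", "integer"),
            Attribute("name", "string"),
            Attribute("email", "string", required=False),
        ],
    ))
    model.add_entity(EntityType(
        name="Order",
        definition="A request by a customer for goods.",
        attributes=[
            Attribute("order_id", "integer"),
            Attribute("customer_id", "integer"),
        ],
    ))
    model.add_relationship(Relationship("places", "Customer", "Order", "1:N"))
    return model
```

A model built this way could then serve as the starting point for database or interface design, for example by generating table definitions from each `EntityType`. That step is left out of the sketch.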