Exclusive: How Google's Algorithm Rules the Web | Wired Magazine

Want to know how Google is about to change your life? Stop by the Ouagadougou conference room on a Thursday morning. It is here, at the Mountain View, California, headquarters of the world’s most powerful Internet company, that a room of three dozen engineers, product managers, and executives figures out how to make their search engine even smarter. This year, Google will introduce 550 or so improvements to its fabled algorithm, and each will be determined at a gathering just like this one. You might think that after a solid decade of search-market dominance, Google could relax. But the biggest threat to Google can be found 850 miles to the north: Bing. Team Bing has been focusing on specific instances where Google’s algorithms don’t always satisfy. Even the Bingers confess that, when it comes to the simple task of taking a search term and returning relevant results, Google is still miles ahead. Google’s response can be summed up in four words: mike siwek lawyer mi.

Google PageRank Checker - Check Google page rank instantly

Check the PageRank of web site pages instantly. To check the PageRank of a single web site, web page, or domain name, submit its URL to the checker form and click the "Check PR" button. The free PR checker tool lets you check the current PageRank of your web site instantly; however, you may find it tedious to visit an online PageRank checking tool every time you want to check the Google PageRank of your web pages. So it may be a good idea to put a small PageRank icon on your site to check and display your Google rankings right on your web pages.

Add a free PageRank check tool to your site: to add this free PageRank checker tool to your web site and let your visitors check the ranking of any page directly from your site, just copy the following HTML code and put it into your HTML document where you want the PageRank check tool to appear:

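Google PageRank - Algorithm

The PageRank Algorithm

The original PageRank algorithm was described by Lawrence Page and Sergey Brin in several publications. It is given by

$$PR(A) = (1-d) + d\left(\frac{PR(T_1)}{C(T_1)} + \cdots + \frac{PR(T_n)}{C(T_n)}\right)$$

where $PR(A)$ is the PageRank of page $A$, $PR(T_i)$ is the PageRank of the pages $T_i$ that link to page $A$, $C(T_i)$ is the number of outbound links on page $T_i$, and $d$ is a damping factor.

So, first of all, we see that PageRank does not rank web sites as a whole, but is determined for each page individually. The PageRank of pages $T_i$ which link to page $A$ does not influence the PageRank of page $A$ uniformly: each $PR(T_i)$ is divided by $C(T_i)$, so the more outbound links page $T_i$ has, the less page $A$ benefits from a link on $T_i$. The weighted PageRanks of pages $T_i$ are then added up. Finally, the sum of the weighted PageRanks of all pages $T_i$ is multiplied by the damping factor $d$, which can be set between 0 and 1.

The Random Surfer Model

In their publications, Lawrence Page and Sergey Brin give a very simple intuitive justification for the PageRank algorithm. The random surfer visits a web page with a certain probability which derives from the page's PageRank. The probability that the random surfer clicks on any one link is given solely by the number of links on that page, which is why a page's PageRank is divided among its outbound links rather than passed on whole. So, the probability for the random surfer reaching one page is the sum of probabilities for the random surfer following links to this page. This probability is then reduced by the damping factor $d$: the surfer does not click on an infinite number of links, but sometimes gets bored and jumps to another page at random.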
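Because each page's PageRank depends on the PageRank of the pages linking to it, the formula is usually solved by iteration: start every page at some value and reapply the formula until the numbers stop changing. The sketch below does exactly that for the $(1-d)$ form given above. The function name `pagerank`, the dictionary-of-lists input, and the convergence tolerance are assumptions of this illustration, not anything described by Page and Brin.

```python
def pagerank(links, d=0.85, max_iter=1000, tol=1e-10):
    """Iterate PR(A) = (1 - d) + d * sum(PR(Ti) / C(Ti)) until it stabilises.

    links maps each page to the pages it links to. Duplicate links from the
    same page are counted once (an assumption of this sketch).
    """
    targets = {page: set(out) for page, out in links.items()}
    pages = set(targets) | {t for out in targets.values() for t in out}

    # inbound[A] lists the pages Ti that link to A; C(Ti) is len(targets[Ti]).
    inbound = {page: [] for page in pages}
    for source, out in targets.items():
        for target in out:
            inbound[target].append(source)

    # Starting values; the iteration converges from any start when d < 1.
    pr = {page: 1.0 for page in pages}
    for _ in range(max_iter):
        new_pr = {
            page: (1 - d) + d * sum(pr[t] / len(targets[t]) for t in inbound[page])
            for page in pages
        }
        change = max(abs(new_pr[p] - pr[p]) for p in pages)
        pr = new_pr
        if change < tol:
            break
    return pr


if __name__ == "__main__":
    # Three-page example: A links to B and C, B links to C, C links to A.
    ranks = pagerank({"A": ["B", "C"], "B": ["C"], "C": ["A"]}, d=0.5)
    for page in sorted(ranks):
        print(page, round(ranks[page], 5))
```

With $d = 0.5$ this three-page example settles at $PR(A) = 14/13$, $PR(B) = 10/13$, and $PR(C) = 15/13$ (about 1.07692, 0.76923, and 1.15385), which you can confirm by solving the three linear equations by hand. In this example the values sum to 3, the number of pages.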

PewDiePie quit plan prompts YouTube reply

YouTube has denied making changes to its algorithms, after its most popular star said he would delete his channel. Video gamer Felix Kjellberg, known as PewDiePie, suggested changes to YouTube's algorithms had affected the discoverability of creators' content. On Tuesday, a Forbes report named the Swedish gamer, who now lives in the UK, as the highest-earning YouTuber. YouTube told the BBC it had not made any changes to its "suggested videos" algorithms. However, other video-makers have reported the same problem, with new videos being viewed fewer times than old content.

Algorithms

The "suggested videos" feed appears when a video is being watched, and recommends more content to watch. Mr Kjellberg said the feed usually accounted for more than 30% of his video traffic, but that in recent weeks it had suddenly fallen to under 1%, which he took as a sign of an undisclosed algorithm change. Other criticisms aimed at YouTube included suggestions that:

How Google made the world go viral

The first thing ever searched on Google was the name Gerhard Casper, a former Stanford president. As the story goes, in 1998, Larry Page and Sergey Brin demoed Google for computer scientist John Hennessy. They searched Casper’s name on both AltaVista and Google. The former pulled up results for Casper the Friendly Ghost; the latter pulled up information on Gerhard Casper the person. What made Google’s results different from AltaVista’s was its algorithm, PageRank, which organized results based on the number of links between pages. Google officially went online later in 1998. This year, The Verge is exploring how Google Search has reshaped the web into a place for robots, and how the emergence of AI threatens Google itself. There is a growing chorus of complaints that Google is not as accurate, as competent, or as dedicated to search as it once was. For two decades, Google Search was the largely invisible force that determined the ebb and flow of online content. But it wasn’t an anomaly.

The Small-World Phenomenon: An Algorithmic Perspective

Jon Kleinberg

Abstract: Long a matter of folklore, the "small-world phenomenon," the principle that we are all linked by short chains of acquaintances, was inaugurated as an area of experimental study in the social sciences through the pioneering work of Stanley Milgram in the 1960s. This work was among the first to make the phenomenon quantitative, allowing people to speak of the "six degrees of separation" between any two people in the United States. Since then, a number of network models have been proposed as frameworks in which to study the problem analytically. But existing models are insufficient to explain the striking algorithmic component of Milgram's original findings: that individuals using local information are collectively very effective at actually constructing short paths between two points in a social network.

The Small-World Phenomenon

Milgram's basic small-world experiment remains one of the most compelling ways to think about the problem.
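The "algorithmic component" here is decentralized search: each person forwards the message to one of their own acquaintances, knowing only where that acquaintance is relative to the target. The excerpt stops before the paper's model is described, so the sketch below assumes a Kleinberg-style setup: nodes on an $n \times n$ grid, each knowing its grid neighbours plus one long-range contact chosen with probability proportional to $\text{distance}^{-r}$. The function names, the single long-range contact per node, and the default $r = 2$ are illustrative assumptions, not the paper's code.

```python
import random


def lattice_distance(u, v):
    """Manhattan (lattice) distance between two grid points."""
    return abs(u[0] - v[0]) + abs(u[1] - v[1])


def build_small_world_grid(n, r=2.0, seed=0):
    """Hypothetical Kleinberg-style network on an n x n grid (n >= 2).

    Each node knows its (up to) four grid neighbours plus one long-range
    contact v, drawn with probability proportional to distance(u, v) ** -r.
    """
    rng = random.Random(seed)
    nodes = [(i, j) for i in range(n) for j in range(n)]
    contacts = {}
    for u in nodes:
        i, j = u
        local = [(i + di, j + dj)
                 for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1))
                 if 0 <= i + di < n and 0 <= j + dj < n]
        others = [v for v in nodes if v != u]
        weights = [lattice_distance(u, v) ** -r for v in others]
        long_range = rng.choices(others, weights=weights, k=1)[0]
        contacts[u] = local + [long_range]
    return contacts


def greedy_route(contacts, source, target):
    """Decentralized routing: the current holder forwards the message to
    whichever of its own contacts is closest to the target."""
    path = [source]
    current = source
    while current != target:
        # A grid neighbour always gets one step closer, so this terminates.
        current = min(contacts[current],
                      key=lambda v: lattice_distance(v, target))
        path.append(current)
    return path


if __name__ == "__main__":
    grid = build_small_world_grid(30)
    route = greedy_route(grid, (0, 0), (29, 29))
    print(len(route) - 1, "hops; lattice distance is",
          lattice_distance((0, 0), (29, 29)))
```

Every hop strictly reduces the distance to the target, so the route is never longer than the plain lattice distance; the long-range contacts are what let it be much shorter. Varying `r` is a quick way to see how the shape of the long-range link distribution affects what purely local decisions can achieve.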

Google bomb

(Image caption: A Google bomb on March 31st, 2013. Despite Google's intervention, some of the first search results still refer to Bush.)

The terms Google bomb and Googlewashing refer to the practice of causing a web page to rank highly in search engine results for unrelated or off-topic search terms by linking to it heavily. In contrast, search engine optimization (SEO) is the practice of improving the search engine listings of web pages for relevant search terms. Google bombing is done for business, political, or comedic purposes (or some combination thereof).[1] Google's search-rank algorithm ranks pages higher for a particular search phrase if enough other pages linked to it use similar anchor text (linking text such as "miserable failure"). By January 2007, however, Google had tweaked its search algorithm to counter popular Google bombs such as "miserable failure" leading to George W. Bush.
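The mechanism behind a Google bomb is that anchor text describes the page it points to, so many pages using the same phrase can push an off-topic target to the top. The toy index below ranks target pages only by how many distinct pages link to them with a given anchor text. It is a deliberately simplified illustration, not Google's ranking algorithm; the function names and page names are hypothetical.

```python
from collections import defaultdict


def build_anchor_index(links):
    """Map anchor text -> target page -> set of distinct pages linking with that text.

    links is an iterable of (source_page, target_page, anchor_text) tuples.
    """
    index = defaultdict(lambda: defaultdict(set))
    for source, target, anchor in links:
        index[anchor.strip().lower()][target].add(source)
    return index


def rank_by_anchor_text(index, query):
    """Order targets by how many distinct pages link to them using the query as anchor text."""
    targets = index.get(query.strip().lower(), {})
    return sorted(targets, key=lambda page: len(targets[page]), reverse=True)


if __name__ == "__main__":
    links = [
        ("blog-1", "biography-page", "miserable failure"),
        ("blog-2", "biography-page", "miserable failure"),
        ("blog-3", "biography-page", "Miserable Failure"),
        ("dictionary", "failure-definition", "miserable failure"),
        ("blog-1", "failure-definition", "definition of failure"),
    ]
    index = build_anchor_index(links)
    print(rank_by_anchor_text(index, "miserable failure"))
    # prints ['biography-page', 'failure-definition']
```

Three coordinated blogs are enough to put `biography-page` first for a phrase that appears nowhere on it, which is the weakness Google bombs exploited; a real engine combines anchor text with many other signals.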

What is link juice | SEO Guide | GoUp

Controlling internal link juice

As discussed in Link Metrics, Link Juice is the amount of positive ranking factors that a link passes from one page to the next. A website has an overall Domain Rank. Link Juice should be spread as evenly as possible throughout a website. So, here are the Go Up tips on Link Juice distribution best practice:

1. This is a very common mistake. Remember: if a website has a balanced link juice distribution, every page on the website will have a good chance of ranking well. In your link building campaign, make a table of how many links you have requested to different pages.

Poor inbound link distribution:

| Page | Inbound links |
|---|---|
| Home Page | 20 |
| Page 2 | 6 |
| Page 3 | 1 |
| Page 4 | 1 |
| Page 5 | 0 |
| Page 6 | 2 |
| Page 7 | 0 |
| Page 8 | 0 |
| Page 9 | 0 |
| Page 10 | 0 |

Good inbound link distribution:

| Page | Inbound links |
|---|---|
| Home Page | 6 |
| Page 2 | 4 |
| Page 3 | 3 |
| Page 4 | 3 |
| Page 5 | 2 |
| Page 7 | 3 |
| Page 8 | 2 |
| Page 9 | 3 |
| Page 10 | 2 |

2. Link Juice can be passed internally.
3. About Page
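The "table of how many links you have requested" can be checked mechanically. The sketch below takes that table as a dictionary and reports the total, the share captured by the single best-linked page, and the pages with no inbound links at all. The function name and the choice of these two indicators are assumptions made for illustration; the guide itself does not define a metric.

```python
def audit_link_distribution(inbound_links):
    """Summarise how requested inbound links are spread across pages.

    inbound_links maps page name -> number of inbound links (must be non-empty).
    """
    total = sum(inbound_links.values())
    top_page = max(inbound_links, key=inbound_links.get)
    return {
        "total": total,
        "top_page": top_page,
        # Share of all links that the single best-linked page receives.
        "top_share": inbound_links[top_page] / total if total else 0.0,
        # Pages that receive no inbound links at all.
        "unlinked": [page for page, n in inbound_links.items() if n == 0],
    }


if __name__ == "__main__":
    poor = {"Home Page": 20, "Page 2": 6, "Page 3": 1, "Page 4": 1, "Page 5": 0,
            "Page 6": 2, "Page 7": 0, "Page 8": 0, "Page 9": 0, "Page 10": 0}
    good = {"Home Page": 6, "Page 2": 4, "Page 3": 3, "Page 4": 3, "Page 5": 2,
            "Page 7": 3, "Page 8": 2, "Page 9": 3, "Page 10": 2}
    for name, counts in (("poor", poor), ("good", good)):
        report = audit_link_distribution(counts)
        print(name, report["total"], round(report["top_share"], 2), report["unlinked"])
```

Run on the two tables above, the poor distribution sends 20 of its 30 links (about 67%) to the home page and leaves five pages unlinked, while the good distribution gives the home page 6 of 28 links (about 21%) and leaves no listed page without links.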

Ludwig Wittgenstein (Stanford Encyclopedia of Philosophy)

1. Biographical Sketch

Wittgenstein was born on April 26, 1889 in Vienna, Austria, to a wealthy industrial family, well-situated in intellectual and cultural Viennese circles. In 1908 he began his studies in aeronautical engineering at Manchester University, where his interest in the philosophy of pure mathematics led him to Frege. Upon Frege’s advice, in 1911 he went to Cambridge to study with Bertrand Russell. Russell wrote, upon meeting Wittgenstein: “An unknown German appeared … obstinate and perverse, but I think not stupid” (quoted by Monk 1990: 38f). During his years in Cambridge, from 1911 to 1913, Wittgenstein conducted several conversations on philosophy and the foundations of logic with Russell, with whom he had an emotional and intense relationship, as well as with Moore and Keynes. In the 1930s and 1940s Wittgenstein conducted seminars at Cambridge, developing most of the ideas that he intended to publish in his second book, Philosophical Investigations.

Howard Rheingold's insight:

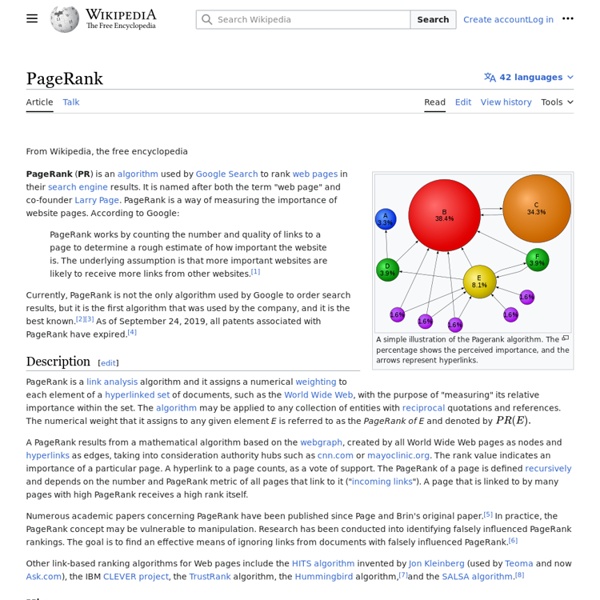

Every time you Google, you are using an augmented collective intelligence engine. PageRank is the algorithm that weights the inbound links to web pages as "votes" for each page's significance. Certainly no blogger thinks "I'm making Google more intelligent and contributing to its value" when adding a link to a website. More likely, they think "this is a valuable link for the attention of my public." By figuring out how to measure the informational value of websites through a mathematical manipulation of their inbound links, Google created a collective intelligence engine (and, to the benefit of Google's stockholders, an attention magnet for displaying advertising messages: a case of a public good that is also a concentrator of private wealth).

by noosquest, Mar 30