3D Renderings of Humans. Google's Latest Machine Vision Breakthrough. Researchers build one-pixel cameras that can take 3D pictures. A team at the University of Glasgow has developed a camera with only one pixel that can nevertheless make 3D models of objects, including ones featuring light beyond the visible spectrum.

Harvard School of Engineering and Applied Sciences. Cambridge, Mass. - August 5, 2013 - Researchers at the Harvard School of Engineering and Applied Sciences (SEAS) have developed a way for photographers and microscopists to create a 3D image through a single lens, without moving the camera.

Published in the journal Optics Letters, this improbable-sounding technology relies only on computation and mathematics—no unusual hardware or fancy lenses. The effect is the equivalent of seeing a stereo image with one eye closed. That's easier said than done, as principal investigator Kenneth B. Crozier, John L. Loeb Associate Professor of the Natural Sciences, explains.

"If you close one eye, depth perception becomes difficult.

A Seismic Shift in Object Detection. Object detection has undergone a dramatic and fundamental shift. I’m not talking about deep learning here – deep learning is really more about classification and specifically about feature learning. Feature learning has begun to play and will continue to play a critical role in machine vision. Arguably in a few years we’ll have a diversity of approaches for learning rich feature hierarchies from large amounts of data; it’s a fascinating topic. However, as I said, this post is not about deep learning.

CGI Dude - Very Lifelike. Solving the Tongue-Twisting Problems of Speech Animation. Motion capture is a widely used technique to record the movement of the human body.

Indeed, the technique has become ubiquitous in areas such as sports science, where it is used to analyze movement and gait, as well as in movie animation and gaming, where it is used to control computer-generated avatars. As a result, an entire industry exists for analyzing movement in this way, along with cheap, off-the-shelf equipment and software for capturing and handling the data.

Garry's Mod. Gameplay. Although Garry's Mod is usually considered to be a full game, it has no game objective, and players can use the game's set of tools for any purpose whatsoever. Multiplayer servers, however, sometimes run role-play or other types of game modes.

Garry's Mod allows players to manipulate items, furniture and "props" – various objects that players can place in-game. Props can be selected from any installed Source engine game or from a community-created collection. The game features two "guns", the Physics Gun and the Tool Gun, for manipulating objects. The Physics Gun allows objects to be picked up, adjusted, and frozen in place. Another popular Garry's Mod concept is ragdoll posing.

Multiplayer. Garry's Mod supports multiplayer gameplay on dedicated game servers. Garry's Mod servers run many game modes, most of which are user-created modifications.

Real Time CGI - Lucas Films. Nvidia Face Works Tech Demo; Renders Realistic Human Faces. Faces.

Uncanny valley. In an experiment involving the human lookalike robot Repliee Q2, the uncovered robotic structure underneath Repliee, and the actual human who was the model for Repliee, the human lookalike triggered the highest level of mirror neuron activity.[1]

Etymology. The concept was identified by the robotics professor Masahiro Mori as Bukimi no Tani Genshō (不気味の谷現象) in 1970.[5][6] The term "uncanny valley" first appeared in the 1978 book Robots: Fact, Fiction, and Prediction, written by Jasia Reichardt.[7] The hypothesis has been linked to Ernst Jentsch's concept of the "uncanny" identified in a 1906 essay "On the Psychology of the Uncanny".[8][9][10] Jentsch's conception was elaborated by Sigmund Freud in a 1919 essay entitled "The Uncanny" ("Das Unheimliche").[11]

Facial Asymmetry. Mapping the Stairs to Visual Excellence. Max Headroom and the Strange World of Pseudo-CGI. I’ve come across people who believe that Max Headroom, the Channel 4 character from the Eighties, was a genuine piece of computer animation.

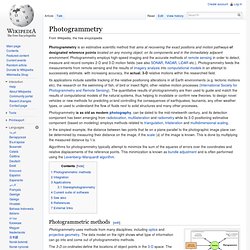

But although he was conceived by the animators Rocky Morton and Annabel Jankel (of Cucumber Films fame), Max himself was portrayed by actor Matt Frewer, placed into latex makeup and a shiny costume and set amidst a range of technological tricks.

Photogrammetry. Photogrammetry is an estimative scientific method that aims at recovering the exact positions and motion pathways of designated reference points located on any moving object, on its components and in the immediately adjacent environment.

Photogrammetry employs high-speed imaging and the accurate methods of remote sensing in order to detect, measure and record complex 2-D and 3-D motion fields (see also SONAR, RADAR, LiDAR etc.). Photogrammetry feeds the measurements from remote sensing and the results of imagery analysis into computational models in an attempt to successively estimate, with increasing accuracy, the actual, 3-D relative motions within the researched field.

Its applications include satellite tracking of relative positioning changes in all Earth environments (e.g. tectonic motions), research on the swimming of fish and on bird or insect flight, and other relative motion processes (International Society for Photogrammetry and Remote Sensing).

Integration