tuioZones EyePoke. Kivy: a crossplatform framework for creating NUI applications. Ideo-multitouch - Multitouch Package for Flash & Processing, by labs.ideo.com. Created to enable designers to explore multitouch interactions quickly and easily, this package includes all requisite software to build a lightweight multitouch system.

This package consists of two parts: Multitouch Client – A multitouch API for Flash including support for extensible touch actions, arbitrarily sized displays, and a limitless number of active fingers. Software-simulated touch events are also supported, so applications can be developed and tested on computers that lack multitouch hardware.

The Multitouch Client API is built in ActionScript 2 and communicates with the Multitouch Server via a network socket connection. Multitouch Server – There are currently two alternatives for the back-end server: A camera-based computer vision system that optically recognizes multitouch input and serializes it for transmission to the Multitouch Client. WiiMote Example Code (Client) import com.ideo.MultiTouch.

TUIO.

Software → touché. Nov 1, 2008. Version 1.0b3. MacOS 10.5.3 and later. Touché is a free, open-source tracking environment for optical multitouch tables. It is written for MacOS X Leopard and uses many of its core technologies, such as QuickTime, Core Animation, Core Image and the Accelerate framework, as well as high-quality open-source libraries such as libdc1394 and OpenCV, to achieve good tracking performance.

The Touché environment consists of two parts: a standalone tracking application written in Cocoa, which comes with many configuration options as well as calibration and test tools, and a Cocoa framework that can be embedded into custom applications to receive tracking data from the tracking application. This way, you can easily experiment with MacOS X technologies such as Core Animation or Quartz Composer on your multitouch table. Configuration: Setting up Touché is pretty straightforward.

Who-T. MPX: Multi-pointer X « [WCL] Wearable Computer Lab, University of South Australia. Welcome to OpenNI. SGDriver. Community Core Vision. SimProj : Built with Processing. MT4j - Multitouch For Java. Touchlib - Home.

Touchlib is a library for creating multi-touch interaction surfaces.

It tracks blobs of infrared light and sends your programs multi-touch events such as 'finger down', 'finger moved', and 'finger released'. It includes a configuration app and a few demos to get you started, and will interface with most types of webcams and video capture devices. It currently works only under Windows, but efforts are being made to port it to other platforms. Who Should Use Touchlib? Touchlib only comes with simple demo applications. As of the current version, Touchlib can broadcast events in the TUIO protocol (which uses OSC). If you don't like Touchlib and want to program your own system, the latest version of OpenCV has support for blob detection and tracking.

ReacTIVision.
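Since both Touchlib and reacTIVision can emit TUIO, a client can derive 'finger down', 'finger moved', and 'finger released' events from the TUIO 1.x cursor profile (`/tuio/2Dcur`), whose bundles carry `alive`, `set`, and `fseq` messages. The following is a minimal sketch, not Touchlib's own API: the `CursorTracker` class and its `handle` method are hypothetical names, and the sketch assumes an OSC library has already decoded each message into a plain argument list.

```python
class CursorTracker:
    """Hypothetical helper: turns decoded /tuio/2Dcur messages into touch events."""

    def __init__(self):
        self.positions = {}   # session id -> (x, y) for fingers currently down
        self._alive = set()   # ids listed by the latest 'alive' message
        self._updates = {}    # id -> (x, y) from 'set' messages in this frame

    def handle(self, args):
        """Process one message's arguments; events are returned on 'fseq'."""
        command, rest = args[0], args[1:]
        if command == "alive":
            self._alive = set(rest)
        elif command == "set":
            # set: session_id x y x_vel y_vel accel (only id, x, y are used here)
            self._updates[rest[0]] = (rest[1], rest[2])
        elif command == "fseq":
            return self._end_frame()
        return []

    def _end_frame(self):
        events = []
        # A finger that is no longer listed as alive has been lifted.
        for sid in sorted(set(self.positions) - self._alive):
            x, y = self.positions.pop(sid)
            events.append(("finger released", sid, x, y))
        # New ids are 'down'; known ids with a changed position are 'moved'.
        for sid, (x, y) in sorted(self._updates.items()):
            if sid not in self._alive:
                continue
            if sid not in self.positions:
                events.append(("finger down", sid, x, y))
            elif self.positions[sid] != (x, y):
                events.append(("finger moved", sid, x, y))
            self.positions[sid] = (x, y)
        self._updates = {}
        return events


if __name__ == "__main__":
    tracker = CursorTracker()
    frames = [
        [["alive", 1], ["set", 1, 0.5, 0.5, 0.0, 0.0, 0.0], ["fseq", 1]],
        [["alive", 1], ["set", 1, 0.6, 0.5, 0.1, 0.0, 0.0], ["fseq", 2]],
        [["alive"], ["fseq", 3]],
    ]
    for frame in frames:
        for message in frame:
            for event in tracker.handle(message):
                print(event)
```

Emitting events only on `fseq` keeps each frame consistent: the `alive` list and all `set` updates are seen together before any event is reported.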

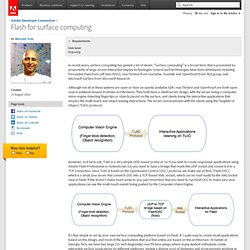

ENAC's Interactive Computing Laboratory - X.org multitouch. Flash for surface computing. It's that simple to set up your own surface computing platform based on Flash.

It's quite easy to create small applications based on this design, and most of the applications that you find online are based on this architecture. At Synlab at Georgia Tech, we have two large (55-inch diagonal) reacTIVision setups where many student enthusiasts create interactive surface applications on different platforms. With a diverse pool of designers and programmers working at our lab, we have seen certain UI and programming design guidelines evolve over the last year that may be useful for everyone. Our surface is quite large, and it can have multiple users working on it at once.

Also, since we primarily use reacTIVision at Synlab, our projects have a bias toward object sensing more than employing touch gestures. The code in this project can help you create interactions between the fiducial objects that are sensed on the surface.
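One simple kind of interaction between sensed objects is proximity: two markers trigger something when they are placed close together. The following is a minimal sketch of that idea, not the project's own code. The `Fiducial` type, the `nearby_pairs` function, and the threshold value are all hypothetical, and positions are assumed to be normalized to the 0..1 range, as TUIO reports them.

```python
import math
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Fiducial:
    """Hypothetical record for one tracked marker."""
    fiducial_id: int  # id of the printed marker
    x: float          # normalized horizontal position, 0..1
    y: float          # normalized vertical position, 0..1
    angle: float      # rotation in radians


def nearby_pairs(objects, max_distance):
    """Return (id_a, id_b, distance) for every pair within max_distance.

    Distances are measured in normalized units, so on a non-square
    surface they are only approximate unless x and y are rescaled first.
    """
    pairs = []
    for a, b in combinations(objects, 2):
        distance = math.hypot(a.x - b.x, a.y - b.y)
        if distance <= max_distance:
            pairs.append((a.fiducial_id, b.fiducial_id, distance))
    return pairs


if __name__ == "__main__":
    on_surface = [
        Fiducial(1, 0.10, 0.10, 0.0),
        Fiducial(2, 0.10, 0.30, 1.57),
        Fiducial(3, 0.90, 0.90, 0.0),
    ]
    for id_a, id_b, distance in nearby_pairs(on_surface, max_distance=0.25):
        print(f"markers {id_a} and {id_b} are {distance:.2f} apart")
```

Checking every pair is quadratic in the number of objects, which is reasonable for the handful of markers a tabletop typically holds at once.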