Azure Data Factory. Individually great, collectively unmatched: Announcing updates to 3 great Azure Data Services. As Julia White mentioned in her blog today, we’re pleased to announce the general availability of Azure Data Lake Storage Gen2 and Azure Data Explorer.

We also announced the preview of Azure Data Factory Mapping Data Flow. With these updates, Azure continues to be the best cloud for analytics with unmatched price-performance and security. In this blog post we’ll take a closer look at the technical capabilities of these new features.

Azure Data Lake Storage - The no compromise Data Lake

Azure Data Lake Storage (ADLS) combines the scalability, cost effectiveness, security model, and rich capabilities of Azure Blob Storage with a high-performance file system that is built for analytics and is compatible with the Hadoop Distributed File System. One of our key priorities was to ensure that ADLS is compatible with the Apache ecosystem.

abfs[s]://file_system@account_name.dfs.core.windows.net/<path>/<path>/<filename>

Next steps.

Azure Data Studio - Setting up your environment - SQL Server Blog.
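Looking back at the ABFS URI pattern quoted in the Azure Data Lake Storage excerpt, a minimal sketch of assembling such a URI might look like the following. The helper name `abfs_uri` and the file system, account, and path values are hypothetical and used only for illustration; the sketch assumes the `[s]` in the pattern selects the TLS-secured `abfss` scheme.

```python
def abfs_uri(file_system: str, account_name: str, path: str, secure: bool = True) -> str:
    """Assemble a URI following the abfs[s]://file_system@account_name... pattern."""
    # Assumption: "abfss" is the TLS-secured variant, "abfs" the plain one.
    scheme = "abfss" if secure else "abfs"
    # Drop leading/trailing slashes so the result has exactly one separator.
    clean_path = path.strip("/")
    return f"{scheme}://{file_system}@{account_name}.dfs.core.windows.net/{clean_path}"


# Hypothetical names, for illustration only.
example = abfs_uri("raw", "contosodata", "/sales/2019/orders.csv")
print(example)
```

Keeping the URI construction in one small function avoids hand-typed paths drifting apart across notebooks and pipeline definitions.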

This blog entry comes from Buck Woody, who recently rejoined the SQL Server team from the Machine Learning and AI team.

For those of you who haven’t met me or read any of my books or blog entries, it’s great to meet you! I’ve been a data professional for over 35 years, worked at a variety of places like NASA, various consulting firms, and here at Microsoft since 2006. I started on the SQL Server team, and then helped ship Microsoft Azure. After that I spent some time in Microsoft Consulting Services, then over to the Machine Learning team in Microsoft Research, and then the Machine Learning and AI team. I’ve rejoined the SQL Server team to help with the inclusion of Apache Spark™, Kubernetes, and the Machine Learning and AI features.

You may be thinking: wait, don’t we already have a lot of those? Well, yes.

Multi-Source

In Azure Data Studio, you can connect to multiple data systems beyond SQL Server, such as Apache Hadoop HDFS, Apache Spark™, and others.

CheatSheets/ADFDF-Cheat-Sheet-sqlplayer.pdf at master · SQLPlayer/CheatSheets.

The Necessary Extras That Aren’t Shown in Your Azure BI Architecture Diagram.

When we talk about Azure architectures for data warehousing or analytics, we usually show a diagram that looks like the one below.

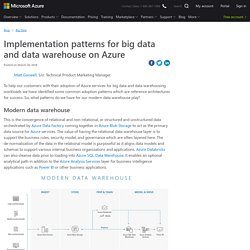

This diagram is a great start to explain what services will be used in Azure to build out a solution or platform. But many times, we add the specific resource names and stop there.

Learn Azure in a Month of Lunches.

Azure SQL Data Warehouse - MPP architecture.

Implementation patterns for big data and data warehouse on Azure.

To help our customers with their adoption of Azure services for big data and data warehousing workloads, we have identified some common adoption patterns which are reference architectures for success.

So, what patterns do we have for our modern data warehouse play?

Modern data warehouse

This is the convergence of relational and non-relational, or structured and unstructured, data orchestrated by Azure Data Factory, coming together in Azure Blob Storage to act as the primary data source for Azure services. The value of having the relational data warehouse layer is to support the business rules, security model, and governance which are often layered here. The de-normalization of the data in the relational model is purposeful as it aligns data models and schemas to support various internal business organizations and applications.

Monitoring Azure SQL Databases.

In this article we will discuss different ways of monitoring data solutions, including different types of Azure SQL databases such as Azure SQL DB, Azure SQL DW, and Azure SQL Managed Instance.

This can be done using 2 approaches:

- Single database monitoring using internal SQL Server features (SQL Server Query Data Store, SQL Server Dynamic Management Views, SQL Server Extended Events), DTU consumption in Azure portal, Query Performance Insight, SQL Database Advisor;
- Multiple database monitoring and reacting using Azure Monitor with Azure SQL Analytics, Event Hubs, Logic Apps, and Power BI.

Monitoring and troubleshooting single database performance:

- DTU consumption in Azure portal
- Query Performance Insight
- SQL Database Advisor
- Azure SQL Intelligent Insights
- Real-time monitoring: dynamic management views (DMVs), extended events, and the Query Store.

AzureSQL.

Microsoft SQL Server Integration Services: Start and stop Integration Runtime in ADF pipeline.

Case: You showed me how to schedule a pause and resume of the Integration Runtime (IR) in Azure Automation, but can you also start and stop IR in the Azure Data Factory (ADF) pipeline with one of the activities?

This will save the most money possible, especially when you only have one ETL job.

Solution: Yes you can, and even better, you can reuse the existing Runbook PowerShell script that pauses and resumes the IR. Instead of scheduling it, which is more appropriate when you have multiple projects to run, we will call the scripts via their webhooks.

Prerequisites.

Service Trust Portal.

Azure Data Catalog and Power BI.

ADC and Power BI mix to generate a Corporate BI Suite

For some time now, services have been emerging that are very interesting tools for achieving an organized, corporate-level Power BI deployment.

Lately I’ve been involved in large-scale Power BI deployment projects in which we have defined Roles (Readers, Contributors, Analysts, Power BI Champions, IT Administrators), App Workspace membership, Power BI Premium configuration with differentiated capabilities, Readers Groups for Premium Workspaces, and strategies for Governance and Deployment. With this in mind, we can align with the objectives of the whole organization and develop a real culture of Data Analytics, with the information set up and with process definitions that allow business analysts who generate data sources to implement reports in a timely manner for presentation at a corporate level.

Preview: SQL Transparent Data Encryption (TDE) with Bring Your Own Key support.

We’re glad to announce the preview of Transparent Data Encryption (TDE) with Bring Your Own Key (BYOK) support for Azure SQL Database and Azure SQL Data Warehouse!

Now you can have control of the keys used for encryption at rest with TDE by storing these master keys in Azure Key Vault. TDE with BYOK support gives you increased transparency and control over the TDE Protector, increased security with an HSM-backed external service, and promotion of separation of duties. When you use TDE, your data is encrypted at rest with a symmetric key (called the database encryption key) stored in the database or data warehouse distribution. To protect this data encryption key (DEK) in the past, you could only use a certificate that the Azure SQL Service managed.

Now, with BYOK support for TDE, you can protect the DEK with an asymmetric key that is stored in Key Vault. In the Azure Portal, we’ve kept the experience simple.

Enabling TDE. Setting a TDE Protector. Rotating Your Keys.

Always Encrypted with Azure Key Vault – Bradley Schacht.

I recently wrote a post about using Transparent Data Encryption (TDE) with Azure Key Vault as an alternative to managing certificates.

Today’s post will explore using SQL Server’s Always Encrypted functionality with Azure Key Vault. Always Encrypted, as with TDE, can use Windows certificates or an external storage location such as a Hardware Security Module (HSM) or Azure Key Vault.

Microsoft BI Tools: Schedule Pause/Resume of Azure Analysis Services.

Case: Azure Analysis Services (AAS) is a little bit too expensive to just let it run continuously if you are not using it all the time.

How can I pause and resume it automatically according to a fixed schedule to save some money in Azure?

Solution: If you are using AAS for testing purposes, or nobody is using the production environment outside office hours, then you can pause it with some PowerShell code in Azure Automation Runbooks. This could potentially save you a lot of money. If pausing is too rigorous for you and you are not already using the lowest tier, then you could also schedule a downscale and upscale.

Starting a runbook in Azure Automation.

The following table will help you determine the method to start a runbook in Azure Automation that is most suitable to your particular scenario. This article includes details on starting a runbook with the Azure portal and with Windows PowerShell. Details on the other methods are provided in other documentation that you can access from the links below. The following image illustrates the detailed step-by-step process in the life cycle of a runbook.

Azure Automation : Create and Invoke Azure Runbook using Webhooks from Client Applications - TechNet Articles - United States (English) - TechNet Wiki.
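Several of the entries above start a runbook through its webhook, which comes down to an HTTP POST against the webhook URL. The following is a minimal sketch of a client doing that with the Python standard library. The function names, the placeholder URL, and the `{"action": "resume"}` payload are hypothetical; the payload shape in particular is an assumption, since what a runbook expects in the request body depends entirely on how that runbook was written.

```python
import json
import urllib.request


def build_webhook_request(webhook_url: str, payload: dict) -> urllib.request.Request:
    """Prepare a POST request carrying a JSON body for a runbook webhook."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def start_runbook(webhook_url: str, payload: dict, timeout: float = 30.0) -> int:
    """Send the request and return the HTTP status code of the response."""
    request = build_webhook_request(webhook_url, payload)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


# Placeholder URL and hypothetical payload; nothing is sent here.
request = build_webhook_request(
    "https://example.invalid/webhooks?token=PLACEHOLDER",
    {"action": "resume"},
)
print(request.get_method(), request.full_url)
```

Because the webhook URL carries its own token, it should be treated like a secret and kept out of source control. Splitting request construction from sending also makes the client testable without network access.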