TextBlob: Simplified Text Processing — TextBlob 0.15.1 documentation. Translating English into Logic using the Linguist. The OpeNER Project. Kawu/nerf. SENNA. SENNA is software distributed under a non-commercial license, which outputs a host of Natural Language Processing (NLP) predictions: part-of-speech (POS) tags, chunking (CHK), named entity recognition (NER), semantic role labeling (SRL) and syntactic parsing (PSG).

SENNA is fast because it uses a simple architecture, self-contained because it does not rely on the output of existing NLP systems, and accurate because it offers state-of-the-art or near state-of-the-art performance. SENNA is written in ANSI C, with about 3500 lines of code. It requires about 200MB of RAM and should run on any IEEE floating point computer. Proceed to the download page. Read the compilation section if you want to compile SENNA yourself. Slyrz/hase. The Validator.nu HTML Parser. The Validator.nu HTML Parser is an implementation of the HTML parsing algorithm in Java.

The parser is designed to work as a drop-in replacement for the XML parser in applications that already support XHTML 1.x content with an XML parser and use SAX, DOM or XOM to interface with the parser. Low-level functionality is provided for applications that wish to perform their own IO and support document.write() with scripting. The parser core compiles on Google Web Toolkit and can be automatically translated into C++. (The C++ translation capability is currently used for porting the parser for use in Gecko.) Supported APIs: SAX, DOM and XOM are supported. When running under Google Web Toolkit, the browser’s DOM is used (createElementNS required). Get the Source: The code is available from a Mercurial repo: hg clone Download Release: You really should prefer getting the source (see above), since the latest release is over two years out of date. Culturomics. Bookworm. Statistical NLP / corpus-based computational linguistics resources.

So, you need to understand language data? Open-source NLP software can help! Understanding language is not easy, even for us humans, but computers are slowly getting better at it. 50 years ago, the psychiatrist chatbot ELIZA could successfully initiate a therapy session, but you very soon realized that she was responding using simple pattern analysis.

Now, IBM’s supercomputer Watson defeats human champions in a quiz show live on TV. The software pieces required to understand language, like the ones used by Watson, are complex. PDFMiner. Python PDF parser and analyzer. Last Modified: Mon Mar 24 12:02:47 UTC 2014.

Tlevine/data-wranglers-dc-pdfs. Stanford Named Entity Recognizer (NER) - Inventory of FLOSS in the Cultural Heritage Domain. TextBlob: Simplified Text Processing — TextBlob 0.9.0-dev documentation. Release v0.8.4.

Getting Started with TextBlob. Natural Language Processing. Google image result for. Natural Language Processing. This is a book about Natural Language Processing.

By natural language we mean a language that is used for everyday communication by humans; languages like English, Hindi or Portuguese. In contrast to artificial languages such as programming languages and logical formalisms, natural languages have evolved as they pass from generation to generation, and are hard to pin down with explicit rules. We will take Natural Language Processing (or NLP for short) in a wide sense to cover any kind of computer manipulation of natural language. At one extreme, it could be as simple as counting the number of times the letter t occurs in a paragraph of text. At the other extreme, NLP might involve "understanding" complete human utterances, at least to the extent of being able to give useful responses to them.
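The simple end of that spectrum, counting how often the letter t occurs in a paragraph, can be sketched in a few lines of plain Python. This is only an illustration: the function name `count_letter` and the sample paragraph are assumptions made for this example, not code from the book.

```python
from collections import Counter


def count_letter(text: str, letter: str = "t") -> int:
    """Count case-insensitive occurrences of one letter in a text."""
    return text.lower().count(letter.lower())


# Sample sentence chosen for illustration only.
paragraph = "Natural languages are hard to pin down with explicit rules."

# Number of times "t" (or "T") appears in the paragraph.
t_count = count_letter(paragraph)

# The same idea for every letter at once: a frequency table.
letter_freq = Counter(ch for ch in paragraph.lower() if ch.isalpha())

print(t_count)
print(letter_freq.most_common(3))
```

Lowercasing first means "T" and "t" are treated as the same letter; drop the `lower()` calls if case should matter.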

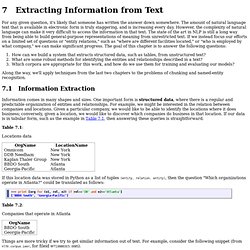

Book. Ch07. For any given question, it's likely that someone has written the answer down somewhere.

The amount of natural language text that is available in electronic form is truly staggering, and is increasing every day. However, the complexity of natural language can make it very difficult to access the information in that text. The state of the art in NLP is still a long way from being able to build general-purpose representations of meaning from unrestricted text. If we instead focus our efforts on a limited set of questions or "entity relations," such as "where are different facilities located," or "who is employed by what company," we can make significant progress. The goal of this chapter is to answer the following questions: How can we build a system that extracts structured data, such as tables, from unstructured text?
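To make the idea of a limited "entity relation" concrete, here is a minimal sketch that turns sentences of the form "X is located in Y" into table-like rows. It uses a plain regular expression over raw text rather than the chunking and named-entity techniques the chapter goes on to describe, and the pattern, the `extract_locations` helper, the `located_in` label, and the company names are all made up for illustration.

```python
import re
from typing import List, Tuple

# Hypothetical pattern: a run of capitalized words, the literal phrase
# "is located in", then another run of capitalized words.
LOCATED_IN = re.compile(
    r"\b([A-Z][\w&]*(?: [A-Z][\w&]*)*) is located in ([A-Z]\w*(?: [A-Z]\w*)*)"
)


def extract_locations(text: str) -> List[Tuple[str, str, str]]:
    """Return (organization, relation, place) rows found in the text."""
    return [(org, "located_in", place) for org, place in LOCATED_IN.findall(text)]


# Invented sample text; the middle sentence contains no relation.
sample = (
    "Acme Widgets is located in Springfield. "
    "The weather was fine. "
    "Globex Corporation is located in New York."
)

rows = extract_locations(sample)
for row in rows:
    print(row)
```

A pattern this rigid misses paraphrases such as "Springfield-based Acme Widgets", which is one reason to work with chunks and recognized entities instead of surface strings.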

Along the way, we'll apply techniques from the last two chapters to the problems of chunking and named-entity recognition. 7.1 Information Extraction. Natural Language Toolkit — NLTK 2.0 documentation. Text Mining. Applications and Theory. Text Mining: Applications and Theory presents the state-of-the-art algorithms for text mining from both the academic and industrial perspectives.

The contributors come from universities, industrial corporations, and government laboratories across several countries and scientific domains. They demonstrate the use of techniques from machine learning, knowledge discovery, natural language processing and information retrieval to design computational models for automated text analysis and mining. This volume demonstrates how advancements in the fields of applied mathematics, computer science, machine learning, and natural language processing can collectively capture, classify, and interpret words and their contexts. As suggested in the preface, text mining is needed when “words are not enough.” Applied mathematicians, statisticians, practitioners and students in computer science, bioinformatics and engineering will find this book extremely useful.