Amazon Web Services: How to use awscli inside a Python script? Submit a Custom JAR Step (Amazon EMR). Parse.ly's Raw Data Pipeline is accessed using two core AWS services, S3 and Kinesis, as described in Getting Access.

This page contains some code examples for accessing this data with common open source programming tools. AWS maintains a command-line client, awscli, that has a fully featured S3 command-line interface, and AWS maintains full documentation for this client. Python: spark-submit EMR step failing when submitted using the boto3 client. Pig_test.py. Amazon Web Services: Boto3 EMR Hive step.
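One common answer to "How to use awscli inside a Python script?" is to build the command as an argument list and run it with the standard-library subprocess module, so no shell quoting is involved. This is a minimal sketch, assuming awscli is installed and already configured; `build_s3_sync_command` and `run_awscli` are hypothetical helper names, and the bucket and prefix are placeholders, not real Parse.ly paths.

```python
import shlex
import subprocess


def build_s3_sync_command(source, destination, dryrun=False):
    """Build an argument list for `aws s3 sync` (run without a shell)."""
    cmd = ["aws", "s3", "sync", source, destination]
    if dryrun:
        # --dryrun makes awscli report what it would copy without copying anything.
        cmd.append("--dryrun")
    return cmd


def run_awscli(cmd):
    """Run an awscli command and return its stdout; raises CalledProcessError on failure."""
    result = subprocess.run(cmd, check=True, capture_output=True, text=True)
    return result.stdout


if __name__ == "__main__":
    # Hypothetical bucket/prefix: substitute the S3 location from your own access details.
    cmd = build_s3_sync_command("s3://example-bucket/events/", "./events/", dryrun=True)
    print(shlex.join(cmd))
    # print(run_awscli(cmd))  # uncomment once awscli is installed and configured
```

Passing a list rather than a single string keeps bucket names and paths with special characters from being reinterpreted by a shell.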

Python-Lambda-to-Lambda Tools/Techniques. S3 Boto Examples. Packaging Python Lambda Tools. Replacements for the switch statement in Python? Python: How to find all positions of the maximum value in a list? Finding the index of an item given a list containing it in Python. Python: Find the index of a dict within a list by matching the dict's value. Python: Lookup for a key in a dictionary with regular expressions? operator: Standard operators as functions. The operator module exports a set of efficient functions corresponding to the intrinsic operators of Python.

For example, operator.add(x, y) is equivalent to the expression x+y. The function names are those used for special class methods; variants without leading and trailing __ are also provided for convenience. The functions fall into categories that perform object comparisons, logical operations, mathematical operations, sequence operations, and abstract type tests. The object comparison functions are useful for all objects, and are named after the rich comparison operators they support: operator.lt(a, b), operator.le(a, b), operator.eq(a, b), operator.ne(a, b), operator.ge(a, b), and operator.gt(a, b), along with their double-underscore forms such as operator.__lt__(a, b). They perform “rich comparisons” between a and b. The logical operations are also generally applicable to all objects, and support truth tests, identity tests, and boolean operations such as operator.not_(obj), operator.truth(obj), operator.is_(a, b), and operator.is_not(a, b).
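The operator functions pair naturally with one of the usual "replacements for the switch statement in Python": a dict that maps each case to a function, with `dict.get` supplying the default branch. A minimal sketch; `OPERATIONS` and `evaluate` are hypothetical names. (Python 3.10 and later also offer the `match` statement as an alternative.)

```python
import operator

# Each "case" of the switch is a dict key; each value is an operator-module function.
OPERATIONS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "<": operator.lt,
    "<=": operator.le,
    "==": operator.eq,
    "!=": operator.ne,
    ">=": operator.ge,
    ">": operator.gt,
}


def evaluate(symbol, a, b, default=None):
    """Evaluate `a <symbol> b` without eval(); return `default` for unknown symbols."""
    func = OPERATIONS.get(symbol)  # .get() plays the role of the switch's default case
    return default if func is None else func(a, b)


if __name__ == "__main__":
    print(operator.add(2, 3), operator.__add__(2, 3))      # 5 5
    print(evaluate("*", 4, 5), evaluate("<", 3, 5))        # 20 True
    print(evaluate("%", 4, 5, default="unsupported"))      # unsupported
    print(operator.truth([]), operator.is_(None, None))    # False True
```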

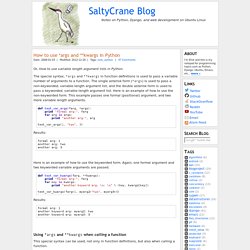

How to search if a dictionary value contains a certain string with Python. Accessing nested dictionary items in Python. Printing: How to print a dictionary line by line in Python? How to use *args and **kwargs in Python, or, how to use variable-length argument lists in Python.
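Most of the list and dictionary questions collected on this page have short standard-library answers. The sketch below gathers one possible answer for each; all function names and sample data are hypothetical, and `list.index` is the built-in way to find the index of an item.

```python
import re


def positions_of_max(values):
    """All indices at which the maximum value occurs (empty list for empty input)."""
    if not values:
        return []
    top = max(values)
    return [i for i, v in enumerate(values) if v == top]


def index_of_dict(dicts, key, value):
    """Index of the first dict whose `key` equals `value`, or -1 if none matches."""
    return next((i for i, d in enumerate(dicts) if d.get(key) == value), -1)


def lookup_by_regex(mapping, pattern):
    """Sub-dict of entries whose string key fully matches the regex `pattern`."""
    rx = re.compile(pattern)
    return {k: v for k, v in mapping.items() if isinstance(k, str) and rx.fullmatch(k)}


def keys_with_substring(mapping, needle):
    """Keys whose string value contains `needle`."""
    return [k for k, v in mapping.items() if isinstance(v, str) and needle in v]


def get_nested(data, *keys, default=None):
    """Follow `keys` through nested dicts; return `default` if any step is missing."""
    current = data
    for key in keys:
        if not isinstance(current, dict) or key not in current:
            return default
        current = current[key]
    return current


def print_dict(mapping):
    """Print a dictionary one `key: value` pair per line."""
    for key, value in mapping.items():
        print(f"{key}: {value}")


if __name__ == "__main__":
    print(positions_of_max([3, 7, 1, 7]))                              # [1, 3]
    print([10, 20, 30].index(20))                                       # 1
    print(index_of_dict([{"id": 1}, {"id": 2}], "id", 2))               # 1
    print(lookup_by_regex({"user_1": "a", "admin": "b"}, r"user_\d+"))  # {'user_1': 'a'}
    fruits = {"a": "apple pie", "b": "banana", "c": "pineapple"}
    print(keys_with_substring(fruits, "apple"))                         # ['a', 'c']
    config = {"db": {"host": "localhost", "port": 5432}}
    print(get_nested(config, "db", "port"), get_nested(config, "db", "user"))  # 5432 None
    print_dict(config["db"])
```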

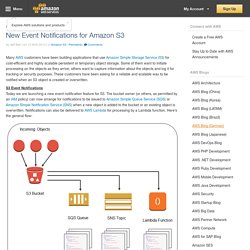

The special syntax *args and **kwargs in function definitions is used to pass a variable number of arguments to a function. The single-asterisk form (*args) is used to pass a non-keyworded, variable-length argument list, and the double-asterisk form (**kwargs) is used to pass a keyworded, variable-length argument list. Here is an example of the non-keyworded form. It passes one formal (positional) argument and two more variable-length arguments:

```python
def test_var_args(farg, *args):
    print("formal arg:", farg)
    for arg in args:
        print("another arg:", arg)

test_var_args(1, "two", 3)
# Results:
# formal arg: 1
# another arg: two
# another arg: 3
```

Boto 3 Documentation (Boto 3 Docs 1.3.1). New Event Notifications for Amazon S3. Many AWS customers have been building applications that use Amazon Simple Storage Service (S3) for cost-efficient and highly scalable persistent or temporary object storage.

Some of them want to initiate processing on the objects as they arrive; others want to capture information about the objects and log it for tracking or security purposes. These customers have been asking for a reliable and scalable way to be notified when an S3 object is created or overwritten. S3 Event Notifications: Today we are launching a new event notification feature for S3. The bucket owner (or others, as permitted by an IAM policy) can now arrange for notifications to be issued to Amazon Simple Queue Service (SQS) or Amazon Simple Notification Service (SNS) when a new object is added to the bucket or an existing object is overwritten.
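A consumer of these notifications (for example, code reading the body of an SQS message) receives JSON containing a `Records` list, where each record carries an `eventName` such as `ObjectCreated:Put` plus the bucket name and the URL-encoded object key. A minimal parsing sketch; `created_objects` is a hypothetical name and the sample bucket and key are made up.

```python
import json
from urllib.parse import unquote_plus


def created_objects(event):
    """Yield (bucket, key, size) for each ObjectCreated record in an S3 event notification."""
    # .get() tolerates messages without Records, such as the s3:TestEvent S3 sends on setup.
    for record in event.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        s3 = record["s3"]
        # Object keys arrive URL-encoded (a space becomes "+"), so decode them.
        key = unquote_plus(s3["object"]["key"])
        yield s3["bucket"]["name"], key, s3["object"].get("size")


if __name__ == "__main__":
    # Hand-written sample message body (hypothetical bucket and key).
    body = json.dumps({
        "Records": [{
            "eventSource": "aws:s3",
            "eventName": "ObjectCreated:Put",
            "s3": {"bucket": {"name": "example-bucket"},
                   "object": {"key": "uploads/my+report.csv", "size": 1024}},
        }]
    })
    for bucket, key, size in created_objects(json.loads(body)):
        print(bucket, key, size)  # example-bucket uploads/my report.csv 1024
```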

Build a simple distributed system using AWS Lambda, Python, and DynamoDB (AdRoll). AWS inventory details in CSV using Lambda. Here at Powerupcloud, we deal with a wide variety of environments and customers of varying sizes: we manage infrastructure hosted on AWS for everyone from a one-man startup to huge enterprises.

So it is necessary for our support engineers to get familiar with new features and also continue to hone their skills on existing AWS technologies. We have a dedicated AWS account for R&D, and all of our support engineers have access to it. To avoid bill shock at the end of the month, we wanted to collect an inventory of resources every day in CSV format, store it in S3, and trigger an email.
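The original implementation is not reproduced here, so this is only a sketch of the same idea, limited to EC2 instances: flatten a `describe_instances` response into CSV, store it in S3, and publish a notice to an SNS topic (an email subscription on that topic is one way to "trigger an email"; the post may use a different mechanism). The environment variables `INVENTORY_BUCKET` and `REPORT_TOPIC_ARN` are hypothetical, and boto3 is imported inside the handler so the CSV logic can be tested without it.

```python
import csv
import io


def instances_to_csv(response):
    """Flatten an EC2 describe_instances() response into CSV text."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["InstanceId", "InstanceType", "State", "Name"])
    for reservation in response.get("Reservations", []):
        for inst in reservation.get("Instances", []):
            tags = {t["Key"]: t["Value"] for t in inst.get("Tags", [])}
            writer.writerow([
                inst["InstanceId"],
                inst.get("InstanceType", ""),
                inst.get("State", {}).get("Name", ""),
                tags.get("Name", ""),
            ])
    return out.getvalue()


def lambda_handler(event, context):
    """Hypothetical daily handler: inventory EC2, store the CSV in S3, notify via SNS."""
    import datetime
    import os

    import boto3

    ec2 = boto3.client("ec2")
    pages = ec2.get_paginator("describe_instances").paginate()
    merged = {"Reservations": [r for page in pages for r in page["Reservations"]]}
    body = instances_to_csv(merged)

    bucket = os.environ["INVENTORY_BUCKET"]  # assumed environment variable
    key = f"inventory/{datetime.date.today().isoformat()}.csv"
    boto3.client("s3").put_object(Bucket=bucket, Key=key, Body=body.encode("utf-8"))
    boto3.client("sns").publish(
        TopicArn=os.environ["REPORT_TOPIC_ARN"],  # assumed environment variable
        Subject="Daily AWS inventory",
        Message=f"Inventory written to s3://{bucket}/{key}",
    )
    return {"key": key}
```

Scheduling the function daily (for example with a CloudWatch Events rule) and covering other resource types would follow the same pattern.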

We implemented it using a Lambda function.

The Context Object (Python): AWS Lambda. While a Lambda function is executing, it can interact with the AWS Lambda service to get useful runtime information such as:

- How much time remains before AWS Lambda terminates your Lambda function (timeout is one of the Lambda function configuration properties).
- The CloudWatch log group and log stream associated with the executing Lambda function.
- The AWS request ID returned to the client that invoked the Lambda function.
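In Python, these details are attributes and methods of the context object passed to the handler: `aws_request_id`, `function_name`, `log_group_name`, `log_stream_name`, and `get_remaining_time_in_millis()`. A minimal sketch; `describe_invocation`, the 5-second cutoff, and `FakeContext` (a local stand-in for testing, since the real object is supplied by AWS Lambda) are all hypothetical.

```python
def describe_invocation(context):
    """Collect the runtime details the Lambda context object exposes."""
    return {
        "request_id": context.aws_request_id,
        "function": context.function_name,
        "log_group": context.log_group_name,
        "log_stream": context.log_stream_name,
        "remaining_ms": context.get_remaining_time_in_millis(),
    }


def lambda_handler(event, context):
    info = describe_invocation(context)
    print(info)  # print output ends up in the function's CloudWatch log stream
    # Stop picking up new work when less than ~5 s remain before the timeout.
    if info["remaining_ms"] < 5000:
        return {"status": "deferred", "request_id": info["request_id"]}
    return {"status": "ok", "request_id": info["request_id"]}


class FakeContext:
    """Local stand-in only; in AWS the real context object is passed in by the service."""
    aws_request_id = "local-test-request"
    function_name = "example-function"
    log_group_name = "/aws/lambda/example-function"
    log_stream_name = "local"

    def get_remaining_time_in_millis(self):
        return 30000


if __name__ == "__main__":
    print(lambda_handler({}, FakeContext()))
```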

You can use the request ID for any follow-up inquiry with AWS Support. If the Lambda function is invoked through the AWS Mobile SDK, you can learn more about the mobile application calling the Lambda function. AWS Lambda provides this information via the context object that the service passes as the second parameter to your Lambda function handler. AWS Lambda Functions in Go: There’s no place like. Update 1: Thanks @miksago for the much more robust node.js wrapper.

I guess you can now see the reason why I wanted to avoid node :-) I’m a big fan of AWS, and every year I get super excited about a new technology/service they release. Last year, I spent too much time marvelling about the simplicity and beauty of AWS Kinesis. Hacking with AWS Lambda and Python. Ever since AWS announced the addition of Lambda last year, it has captured the imagination of developers and operations folks alike.

Lambda paints a future where we can deploy serverless (or near-serverless) applications, focusing only on writing functions that respond to events. It’s an event-driven architecture applied to the AWS cloud, and Jeff Barr describes AWS Lambda quite well here, so I’ll dispense with all the introductory material. What I will do is document a simple AWS Lambda function written in Python that’s easy to understand but does something more than a “Hello World” function.

One of the reasons it’s taken me so long to explore AWS Lambda is that it was only offered with Java or Node.js. Example of Python code to submit a Spark process as an EMR step to an AWS EMR cluster from an AWS Lambda function. FlyTrapMind/saws: A supercharged AWS command line interface (CLI). Python: How to launch and configure an EMR cluster using boto. Elastic MapReduce with Amazon S3, AWS, EMR, Python, MrJob and Ubuntu 14.04.
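Launching an EMR cluster and submitting a Spark step can both be expressed as boto3 EMR client calls: `run_job_flow` starts the cluster, and `add_job_flow_steps` queues a step that runs `spark-submit` through `command-runner.jar` (available on EMR 4.x and later release labels). This is a sketch, not the code from the posts listed above: it uses boto3 rather than the older boto, requests Hive and Spark rather than Impala, and the helper names, event fields (`cluster_id`, `script_uri`, `args`), instance types, and Spot bid price are all assumptions.

```python
def cluster_request(name, release_label, log_uri, instance_type="m5.xlarge",
                    core_count=2, core_bid_price="0.10"):
    """Keyword arguments for emr.run_job_flow(): on-demand master, Spot core nodes."""
    return {
        "Name": name,
        "ReleaseLabel": release_label,  # choose a current EMR release label
        "LogUri": log_uri,
        "Applications": [{"Name": "Hadoop"}, {"Name": "Hive"}, {"Name": "Spark"}],
        "Instances": {
            "InstanceGroups": [
                {"Name": "master", "InstanceRole": "MASTER", "Market": "ON_DEMAND",
                 "InstanceType": instance_type, "InstanceCount": 1},
                {"Name": "core", "InstanceRole": "CORE", "Market": "SPOT",
                 "BidPrice": core_bid_price,  # USD per hour, passed as a string
                 "InstanceType": instance_type, "InstanceCount": core_count},
            ],
            # Keep the cluster running so later steps (e.g. from Lambda) can be added.
            "KeepJobFlowAliveWhenNoSteps": True,
        },
        "JobFlowRole": "EMR_EC2_DefaultRole",  # default EMR roles, assumed to exist
        "ServiceRole": "EMR_DefaultRole",
    }


def spark_submit_step(name, script_uri, script_args=(), action_on_failure="CONTINUE"):
    """An EMR step that runs spark-submit through command-runner.jar."""
    return {
        "Name": name,
        "ActionOnFailure": action_on_failure,
        "HadoopJarStep": {
            "Jar": "command-runner.jar",
            "Args": ["spark-submit", "--deploy-mode", "cluster", script_uri, *script_args],
        },
    }


def lambda_handler(event, context):
    """Hypothetical Lambda: submit a Spark step to an already-running cluster."""
    import boto3

    emr = boto3.client("emr")
    step = spark_submit_step("spark-job", event["script_uri"], event.get("args", []))
    response = emr.add_job_flow_steps(JobFlowId=event["cluster_id"], Steps=[step])
    return {"step_ids": response["StepIds"]}
```

Starting the cluster itself would be `boto3.client("emr").run_job_flow(**cluster_request(...))`, whose response contains the `JobFlowId` to pass as `cluster_id`.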

This tutorial is about setting up an environment with scripts to work via EMR, Amazon's Hadoop implementation, on huge datasets. By huge I mean extremely large datasets, where a simple yet powerful grep no longer cuts it. What you need is Hadoop. Setting up Hadoop the first time, or scaling it, can be too much effort, which is why we switched to Amazon Elastic MapReduce, or EMR: Amazon's hosted version of Hadoop (the Apache project, developed largely at Yahoo!), which itself is an implementation of Google's MapReduce paper. Amazon's EMR will take care of the Hadoop architecture and of scaling, in the likely case that one cluster is not enough for you. So let me outline the architecture of the tools and services I have in mind to get our environment going.
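To make the MapReduce model concrete without a cluster, here is a single-process sketch of a "distributed grep": the map phase emits a (match, 1) pair per regex hit, the shuffle groups pairs by key (the step Hadoop performs between mappers and reducers), and the reduce phase sums each group. On EMR, a tool such as mrjob would run equivalent mapper and reducer functions across the cluster; the function names and sample log lines here are hypothetical.

```python
import re
from collections import defaultdict


def map_phase(lines, pattern):
    """Mapper: emit (match, 1) for every regex match in every line."""
    rx = re.compile(pattern)
    for line in lines:
        for match in rx.findall(line):
            yield match, 1


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reducer: sum the counts for each key."""
    return {key: sum(values) for key, values in groups.items()}


if __name__ == "__main__":
    log = ["GET /index 200", "GET /login 500", "POST /login 500"]
    # Count 4xx/5xx status codes, as a grep | sort | uniq -c pipeline would.
    print(reduce_phase(shuffle_phase(map_phase(log, r"\b[45]\d\d\b"))))  # {'500': 2}
```

Because mappers only see their own lines and reducers only see one key's values, each phase can be split across machines, which is what lets Hadoop scale where a single grep cannot.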

You will need your access key ID, your secret access key, and usually a private key file (EC2 key pair) to access AWS programmatically. cntlm (an NTLM-authenticating proxy, needed only behind such a proxy): run `sudo apt-get install cntlm`, then edit /etc/cntlm.conf. Scientific Computing Blog: Use Boto to Start an Elastic Map-Reduce Cluster with Hive and Impala Installed. I spent all of yesterday beating my head against the Boto documentation (or lack thereof).

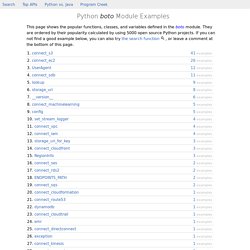

Boto is a popular (the?) tool for using Amazon Web Services (AWS) with Python. The parts of AWS that are used quite a bit have good documentation, while the rest suffer from a lack of explanation. The tasks I wanted to accomplish were:

- Use Boto to start an Elastic MapReduce cluster of machines.
- Install Hive and Impala on the machines.
- Use Spot instances for the core nodes.

The blog post provides sample code to accomplish these tasks. (From Gallamine's Scientific Computing Blog.)

Programcreek: Python boto Examples. This page shows the popular functions, classes, and variables defined in the boto module.

They are ordered by popularity, calculated across 5,000 open source Python projects. Python boto.lookup Examples: the following are 9 code examples showing how to use boto.lookup, extracted from open source Python projects. Python S3 Examples (Ceph Documentation). Listing Owned Buckets: This gets a list of buckets that you own.

This also prints out the bucket name and creation date of each bucket:

```python
# `conn` is assumed to be a boto (boto2) S3 connection object created beforehand.
for bucket in conn.get_all_buckets():
    print("{name}\t{created}".format(
        name=bucket.name,
        created=bucket.creation_date,
    ))
```

The output will look something like this:

```
mahbuckat1	2011-04-21T18:05:39.000Z
mahbuckat2	2011-04-21T18:05:48.000Z
mahbuckat3	2011-04-21T18:07:18.000Z
```

Aws-python-sample/s3_sample.py at master · FlyTrapMind/aws-python-sample. Boto: A Python interface to Amazon Web Services (boto v2.41.0). Getting Started with Boto (boto v2.41.0).
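For comparison, a boto3 version of the bucket listing: `list_buckets()` returns a dict whose `Buckets` entries carry `Name` and a `datetime` `CreationDate`. A minimal sketch; `format_buckets` and `print_my_buckets` are hypothetical names, and the optional `endpoint_url` is how boto3 can be pointed at an S3-compatible service such as Ceph instead of AWS.

```python
from datetime import datetime, timezone


def format_buckets(response):
    """Turn a boto3 list_buckets() response into 'name<TAB>creation-date' lines."""
    return [
        "{name}\t{created}".format(name=b["Name"], created=b["CreationDate"].isoformat())
        for b in response.get("Buckets", [])
    ]


def print_my_buckets(endpoint_url=None):
    """List buckets for the configured credentials (needs boto3 and network access)."""
    import boto3

    # endpoint_url=None targets AWS S3; pass a URL to target an S3-compatible service.
    s3 = boto3.client("s3", endpoint_url=endpoint_url)
    for line in format_buckets(s3.list_buckets()):
        print(line)


if __name__ == "__main__":
    # Offline demo with a hand-built response shaped like boto3's output.
    fake = {"Buckets": [{"Name": "mahbuckat1",
                         "CreationDate": datetime(2011, 4, 21, 18, 5, 39, tzinfo=timezone.utc)}]}
    print("\n".join(format_buckets(fake)))
```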

Note: You are viewing the documentation for an older version of boto (boto2). Boto3, the next version of Boto, is now stable and recommended for general use. It can be used side-by-side with Boto in the same project, so it is easy to start using Boto3 in your existing projects as well as new projects.

If you don't have pip already installed, follow the instructions on the pip installation page before running `pip install boto`. Depending on the environment, you may need to run `sudo pip install boto` instead if the plain command fails due to insufficient permissions. Create your credentials file at ~/.aws/credentials (C:\Users\USER_NAME\.aws\credentials for Windows users) and save the following lines after replacing the placeholder values with your own:

```
[default]
aws_access_key_id = YOUR_ACCESS_KEY_ID
aws_secret_access_key = YOUR_SECRET_ACCESS_KEY
```
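boto and boto3 read this file on their own (in boto3, for example, `boto3.Session(profile_name="default")` picks up that profile), so no parsing code is required. The sketch below only illustrates the file's INI layout by reading it with the standard-library configparser, for instance to check that a profile exists before a script runs; `parse_profile` and `read_profile` are hypothetical helpers, and the sample values are placeholders.

```python
import configparser
from pathlib import Path

DEFAULT_CREDENTIALS = Path.home() / ".aws" / "credentials"


def parse_profile(text, profile="default"):
    """Return (access_key_id, secret_access_key) for one profile in credentials-file text."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    if not parser.has_section(profile):
        raise KeyError(f"profile {profile!r} not found")
    section = parser[profile]
    return section["aws_access_key_id"], section["aws_secret_access_key"]


def read_profile(path=DEFAULT_CREDENTIALS, profile="default"):
    """Same as parse_profile, but reads the file from disk (~/.aws/credentials by default)."""
    return parse_profile(Path(path).read_text(), profile)


if __name__ == "__main__":
    sample = (
        "[default]\n"
        "aws_access_key_id = YOUR_ACCESS_KEY_ID\n"
        "aws_secret_access_key = YOUR_SECRET_ACCESS_KEY\n"
    )
    print(parse_profile(sample))  # ('YOUR_ACCESS_KEY_ID', 'YOUR_SECRET_ACCESS_KEY')
```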

Getting Started with AWS and Python (Articles & Tutorials, Amazon Web Services).