Checking robots.txt - Webmasters/Site owners Help. Die robots.txt-Generierung wird in Kürze deaktiviert.

Sie können manuell eine robots.txt-Datei erstellen oder eines der vielen robots.txt-Erstellungstools im Web verwenden. Eine robots.txt-Datei schränkt den Zugriff auf Ihre Website für Suchmaschinenrobots ein, die das Web crawlen. Robots sind automatisierte Systeme, die vor dem Zugriff auf die Seiten einer Website prüfen, ob der Zugriff auf bestimmte Seiten möglicherweise durch die Datei "robots.txt" gesperrt ist. Die in der "robots.txt"-Datei festgelegten Anweisungen werden von allen seriösen Robots unterstützt. Einige können sie allerdings unterschiedlich interpretieren.

Wenn Sie sehen möchten, welche URLs für das Crawlen durch Google blockiert wurden, öffnen Sie in den Webmaster-Tools im Abschnitt Crawling die Seite Blockierte URLs. Sie benötigen die Datei "robots.txt" nur, wenn Ihre Website Inhalte aufweist, die nicht von den Suchmaschinen indexiert werden sollen. Die einfachste robots.txt-Datei enthält nur zwei Regeln: User-agent: * Drupal's Tracker Module and Search Engine Optimization. The Tracker Module creates "track" pages for each user.

For example, a page that tracks user #234 would have a tracker page located at Those pages should be blocked from search engines with the following rule: Disallow: /*/track$ That robots.txt syntax is recognized by Google Search, Yahoo Search, and MSN Live Search. The tracker module also keeps track of recent posts on the site at URLs like and on large sites creates thousands of tracker pages like My recommendation is to leave the first page of the Recent Posts ( exposed to search engines, while blocking the paginated tracker pages like Leaving the just the first page of /tracker exposed to search engines allows search engines to rapidly find and index your latest content as it is posted.

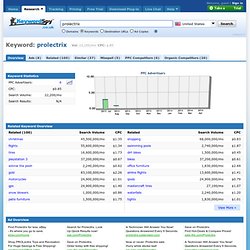

The following rule blocks all but the first of your site-wide tracker pages: Disallow: /tracker? Prolectrix. Overview Ads (8) Related (100) Similar (37) Misspell (5) PPC Competitors (6) Organic Competitors (20) Keyword Statistics Related Keyword Overview Ad Overview Competitors Overview Categories And Or United States Sometimes you don’t know exactly what you are looking for in a Research data.

The Domain Search This search allows you to enter the domain name of the site you want to analyze. The Destination URL Search This search allows you to enter the destination URL of the site that you want to analyze. Category Custom Search Loading... Keyword Software Tool | Become an Affiliate! AdWords: Keyword Tool. Drupal SEO: How Duplicate Content Hurts Drupal Sites. Drupal's clean URLs give it a good reputation when it comes to SEO, but there's a lot more you can do under the hood to improve Drupal's search engine friendliness.

Today I will show you some Drupal SEO tips to help you avoid duplicate content and boost your search engine ranking. Proper search engine optimization allows you to tap into a significant source of new visitors. If you rank well for your keywords, you may find that search engine hits account for more traffic than all your other referrals combined. Unfortunately, most Drupal sites aren't performing as well as they could due to duplicate content. Drupal's Duplicate Content Problem Let's take a look at two URLs: On a normal Drupal site, with clean URLs enabled, these two addresses are basically interchangeable.

But, when it comes to getting a good search engine ranking, having two pages with the exact same content can hurt you. At the very least, duplicate content can decrease your ranking. Disallow: /node/