Enthalpy

Enthalpy is a defined thermodynamic potential, designated by the letter "H", that consists of the internal energy of the system (U) plus the product of the pressure (P) and volume (V) of the system:[1]

$$H = U + PV$$

Since U, P, and V are all functions of the state of the thermodynamic system, enthalpy is a state function. The SI unit of enthalpy is the joule, but other historical, conventional units are still in use, such as the British thermal unit and the calorie. Enthalpy is the preferred expression of system energy changes in many chemical, biological, and physical measurements, because it simplifies certain descriptions of energy transfer.
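As a small illustration of the definition, the sketch below computes H = U + PV in SI units (a pascal times a cubic metre is a joule) and converts an enthalpy change into the two non-SI units named above. It assumes the thermochemical calorie (4.184 J) and the International Table British thermal unit (1055.05585262 J); other variants of both units exist. The function names and the example states are hypothetical, chosen only for illustration.

```python
# Conversion factors (standard definitions; other variants of both units exist).
JOULES_PER_CALORIE = 4.184        # thermochemical calorie, exact by definition
JOULES_PER_BTU = 1055.05585262    # International Table British thermal unit


def enthalpy(u_j: float, p_pa: float, v_m3: float) -> float:
    """Enthalpy H = U + PV in joules (U in J, P in Pa, V in m^3; Pa * m^3 = J)."""
    return u_j + p_pa * v_m3


def enthalpy_change(state1: tuple, state2: tuple) -> float:
    """Delta H between two states, each given as a (U, P, V) tuple in SI units.

    H is a state function, so only the two end states enter the result,
    not the path taken between them.
    """
    return enthalpy(*state2) - enthalpy(*state1)


def joules_to_calories(e_j: float) -> float:
    """Convert joules to thermochemical calories."""
    return e_j / JOULES_PER_CALORIE


def joules_to_btu(e_j: float) -> float:
    """Convert joules to International Table BTU."""
    return e_j / JOULES_PER_BTU


if __name__ == "__main__":
    # Hypothetical states at constant atmospheric pressure (made-up numbers).
    before = (5_000.0, 101_325.0, 0.0224)
    after = (5_600.0, 101_325.0, 0.0250)
    dh = enthalpy_change(before, after)
    print(f"Delta H = {dh:.1f} J = {joules_to_calories(dh):.1f} cal = {joules_to_btu(dh):.3f} BTU")
```

Because absolute values of U are not measurable in practice, the function that matters in real work is `enthalpy_change`; the absolute `enthalpy` helper is there only to mirror the definition.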

Enthalpy change accounts for energy transferred to the environment at constant pressure through expansion or heating. The total enthalpy, H, of a system cannot be measured directly; the enthalpy change, ΔH, is measured instead.

Laws of thermodynamics

The four laws of thermodynamics define fundamental physical quantities (temperature, energy, and entropy) that characterize thermodynamic systems. The laws describe how these quantities behave under various circumstances and forbid certain phenomena (such as perpetual motion). The four laws of thermodynamics are:[1][2][3][4][5][6]

Zeroth law of thermodynamics: If two systems are each in thermal equilibrium with a third system, they are in thermal equilibrium with each other. This law helps define the notion of temperature.

First law of thermodynamics: Because energy is conserved, the internal energy of a system changes as heat flows into or out of it and as work is done on or by it.
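Applied to a process at constant pressure, the first law shows why enthalpy change accounts for the energy transferred through expansion or heating. The short derivation below assumes the sign convention in which W is the work done by the system, and assumes that pressure-volume work is the only work involved; Q_P denotes the heat exchanged at constant pressure.

```latex
\begin{aligned}
\Delta U &= Q_P - W = Q_P - P\,\Delta V
  && \text{(first law, constant } P\text{)} \\
\Delta H &= \Delta U + \Delta(PV) = \Delta U + P\,\Delta V
  && \text{(from } H = U + PV\text{)} \\
         &= (Q_P - P\,\Delta V) + P\,\Delta V = Q_P
\end{aligned}
```

Under these assumptions the enthalpy change equals the heat exchanged, which is what makes enthalpy convenient for describing processes at constant pressure.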

The first law also rules out perpetual motion machines of the first kind, which would produce work with no energy input. There have been suggestions of additional laws, but none of them achieves the generality of the four accepted laws, and they are not mentioned in standard textbooks.[1][2][3][4][5][8][9]

Quasistatic process

In thermodynamics, a quasistatic process is a thermodynamic process that happens infinitely slowly.

No real process is quasistatic, but such processes can be approximated by performing them very slowly. Some ambiguity exists in the literature concerning the distinction between quasistatic and reversible processes, as the two terms are sometimes taken as synonyms. The confusion arises because every reversible process is necessarily quasistatic, even though the converse does not hold: a process carried out infinitely slowly can still be irreversible if it involves friction or other dissipation. In practice, the distinction rarely matters, because engineers account for friction explicitly when calculating dissipative entropy generation.
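The claim that a quasistatic process can be approximated by carrying out a real process slowly can be checked numerically for the isothermal expansion of an ideal gas. The sketch assumes an ideal gas held at constant temperature T by a heat bath, expanding from V1 to V2 in N sudden stages of equal volume; in each stage the gas pushes against a constant external pressure equal to its own equilibrium pressure at the end of that stage. As N grows, the work done by the gas should approach the quasistatic value nRT ln(V2/V1). The staging scheme and the function names are illustrative assumptions, not taken from the text.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol*K)


def quasistatic_work(n: float, T: float, V1: float, V2: float) -> float:
    """Work done by an ideal gas in a quasistatic isothermal expansion: nRT ln(V2/V1)."""
    return n * R * T * math.log(V2 / V1)


def stepwise_work(n: float, T: float, V1: float, V2: float, steps: int) -> float:
    """Work done by the gas when the expansion is performed in `steps` sudden stages.

    Assumed scheme: equal volume increments; in stage i the external pressure is
    dropped to P_i = nRT / V_i (the equilibrium pressure at the stage's final
    volume V_i) and the gas expands against that constant pressure, then
    re-equilibrates with the bath at temperature T.
    """
    dV = (V2 - V1) / steps
    work = 0.0
    for i in range(1, steps + 1):
        V_i = V1 + i * dV
        p_ext = n * R * T / V_i   # constant opposing pressure during stage i
        work += p_ext * dV
    return work


if __name__ == "__main__":
    # Illustrative case: 1 mol at 300 K doubling its volume from 10 L to 20 L.
    n, T, V1, V2 = 1.0, 300.0, 0.010, 0.020
    exact = quasistatic_work(n, T, V1, V2)
    for steps in (1, 10, 100, 10_000):
        approx = stepwise_work(n, T, V1, V2, steps)
        print(f"{steps:>6} stages: {approx:8.2f} J (quasistatic limit {exact:.2f} J)")
```

Every staged value falls short of the quasistatic limit, because during each stage the gas expands against a lower pressure than its own; the shortfall shrinks as the number of stages grows, which is the sense in which a slow process approximates a quasistatic one.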

The definition of a quasistatic process given above is closer to the intuitive understanding of the word “quasi-” (almost) “static”, while remaining technically different from that of a reversible process.

Maxwell's demon

In the philosophy of thermal and statistical physics, Maxwell's demon is a thought experiment created by the physicist James Clerk Maxwell to "show that the Second Law of Thermodynamics has only a statistical certainty".[1] It makes Maxwell's point by describing a hypothetical way to violate the Second Law: a container of gas molecules at equilibrium is divided into two parts by an insulated wall, with a door that can be opened and closed by what came to be called "Maxwell's demon".

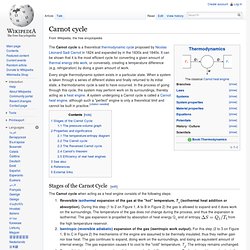
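The demon's sorting rule, letting faster-than-average molecules through to a favored side and slower ones to the other, can be illustrated with a toy simulation. It assumes molecules of equal mass whose velocity components are independent Gaussians (which gives a Maxwell-Boltzmann speed distribution), uses the mean kinetic energy per molecule as a proxy for temperature, and sorts all molecules at once rather than one at a time through the door. It deliberately ignores any thermodynamic cost of the demon's own measurements. All function names are hypothetical.

```python
import random
import statistics


def sample_speed(rng: random.Random, sigma: float = 1.0) -> float:
    """Speed of one molecule with independent Gaussian velocity components.

    Gaussian components give a Maxwell-Boltzmann speed distribution;
    sigma plays the role of the temperature scale (arbitrary units).
    """
    vx, vy, vz = (rng.gauss(0.0, sigma) for _ in range(3))
    return (vx * vx + vy * vy + vz * vz) ** 0.5


def demon_sort(speeds: list) -> tuple:
    """Send faster-than-average molecules to the favored side, the rest to the other side."""
    mean_speed = statistics.fmean(speeds)
    fast = [s for s in speeds if s > mean_speed]
    slow = [s for s in speeds if s <= mean_speed]
    return fast, slow


def mean_kinetic_energy(speeds: list, mass: float = 1.0) -> float:
    """Average kinetic energy per molecule, a temperature proxy for an ideal gas."""
    return statistics.fmean(0.5 * mass * s * s for s in speeds)


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so runs are repeatable
    gas = [sample_speed(rng) for _ in range(100_000)]
    fast, slow = demon_sort(gas)
    print(f"whole gas:     {mean_kinetic_energy(gas):.3f}")
    print(f"favored side:  {mean_kinetic_energy(fast):.3f}")
    print(f"other side:    {mean_kinetic_energy(slow):.3f}")
```

The favored side ends up with a higher mean kinetic energy than the original gas and the other side with a lower one, which is the temperature difference the thought experiment describes.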

The demon opens the door to allow only faster-than-average molecules to pass to a favored side of the chamber, and only slower-than-average molecules to pass to the other side. The favored side gradually heats up while the other side cools down, thus decreasing the entropy of the gas.

The thought experiment first appeared in a letter Maxwell wrote to Peter Guthrie Tait on 11 December 1867.

Schematic figure of Maxwell's demon.

Carnot cycle

Every thermodynamic system exists in a particular state.

When a system is taken through a series of different states and finally returned to its initial state, a thermodynamic cycle is said to have occurred. Over the course of the cycle, the system may perform work on its surroundings, thereby acting as a heat engine. A system undergoing a Carnot cycle is called a Carnot heat engine, although such a "perfect" engine is only a theoretical limit and cannot be built in practice.[citation needed]
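The theoretical limit mentioned above is usually quantified by the Carnot efficiency, a standard result not stated in the text: an engine running between a hot reservoir at absolute temperature T_H and a cold reservoir at T_C converts at most a fraction 1 − T_C/T_H of the heat it draws into work. The minimal sketch below assumes temperatures in kelvin; the function names and the example reservoirs are illustrative.

```python
def carnot_efficiency(t_hot_k: float, t_cold_k: float) -> float:
    """Carnot efficiency 1 - T_C / T_H for reservoirs at absolute temperatures (kelvin)."""
    if not (t_hot_k > t_cold_k > 0.0):
        raise ValueError("need t_hot_k > t_cold_k > 0 (temperatures in kelvin)")
    return 1.0 - t_cold_k / t_hot_k


def max_work(q_hot_j: float, t_hot_k: float, t_cold_k: float) -> float:
    """Upper bound on the work (J) obtainable from heat q_hot_j drawn from the hot reservoir."""
    return q_hot_j * carnot_efficiency(t_hot_k, t_cold_k)


if __name__ == "__main__":
    # Illustrative reservoirs at 500 K and 300 K.
    eta = carnot_efficiency(500.0, 300.0)
    print(f"Carnot efficiency: {eta:.2%}")
    print(f"Upper bound on work from 1000 J of heat: {max_work(1000.0, 500.0, 300.0):.1f} J")
```

Absolute temperatures are essential here: plugging in Celsius values would give a meaningless ratio, which is why the function rejects non-positive inputs.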