IDE - Overview. NetBeans IDE lets you quickly and easily develop Java desktop, mobile, and web applications, as well as HTML5 applications with HTML, JavaScript, and CSS.

The IDE also provides a great set of tools for PHP and C/C++ developers. It is free and open source and has a large community of users and developers around the world. Best Support for Latest Java Technologies. Screen_scraping. Pyquery: a jquery-like library for python - pyquery 1.2.4 documentation. Pyquery allows you to make jquery queries on xml documents.

The API is as similar to jquery as possible. pyquery uses lxml for fast xml and html manipulation. Ironmacro - GUI Automation for .NET. Pyscraper - simple python based HTTP screen scraper. Xkcd-viewer - A small test project using screen scraping. Juicedpyshell - This Python Firefox Shell Mashup lets you automate Firefox browser using python scripts. The Juiced Python Firefox Shell lets you automate a browser using python scripts.

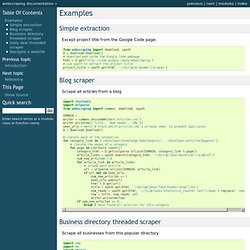

It requires that the pyxpcomext extension be installed. It is useful for browser automation, including automated testing of web sites. It makes it easy to do screen scraping and html manipulation using Python.

Examples - webscraping documentation.

Simple extraction. Extract the project title from the Google Code page:

```python
from webscraping import download, xpath

D = download.Download()
# download and cache the Google Code webpage
html = D.get('...')
# use xpath to extract the project title
project_title = xpath.get(html, '//div[@id="pname"]/a/span')
```

Blog scraper. Scrape all articles from a blog:

```python
import itertools
import urlparse
from webscraping import common, download, xpath

DOMAIN = ...
writer = common.UnicodeWriter('articles.csv')
writer.writerow(['Title', 'Num reads', 'URL'])
seen_urls = set()  # track which article URLs were already seen, to prevent duplicates
D = download.Download()

# iterate each of the categories
for category_link in ('/developer/knowledge-base?
```
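The simple-extraction example above pulls one element out of a page with an XPath expression. As a rough illustration of the same idea without the webscraping package, the sketch below uses the standard library's `xml.etree.ElementTree`, which supports only a limited XPath subset and requires well-formed markup. The sample markup, the project name in it, and the function name `extract_project_title` are all hypothetical stand-ins, not part of the webscraping documentation.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed stand-in for a downloaded page (not real page content).
SAMPLE_HTML = """
<html>
  <body>
    <div id="pname"><a href="/p/example/"><span>example-project</span></a></div>
    <div id="other"><a href="/x"><span>not the title</span></a></div>
  </body>
</html>
"""


def extract_project_title(markup):
    """Return the text of the span inside the div with id "pname", or None."""
    root = ET.fromstring(markup)
    # './/' searches all descendants; the predicate filters on the id attribute.
    node = root.find('.//div[@id="pname"]/a/span')
    return node.text if node is not None else None


if __name__ == "__main__":
    print(extract_project_title(SAMPLE_HTML))
```

Real pages are rarely well-formed XML, so in practice a lenient HTML parser is usually needed before any XPath-style query can run; this sketch sidesteps that by using a clean sample.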

Business directory threaded scraper. Scrapemark - Documentation. 12.2 Parsing HTML documents. This section only applies to user agents, data mining tools, and conformance checkers.

The rules for parsing XML documents into DOM trees are covered by the next section, entitled "The XHTML syntax". User agents must use the parsing rules described in this section to generate the DOM trees from text/html resources. Together, these rules define what is referred to as the HTML parser. While the HTML syntax described in this specification bears a close resemblance to SGML and XML, it is a separate language with its own parsing rules. Mechanize. Scrapemark - Easy Python Scraping Library. How to get along with an ASP webpage. Fingal County Council of Ireland recently published a number of sets of Open Data, in nice clean CSV, XML and KML formats.

Unfortunately, the one set of Open Data that was difficult to obtain was the list of sets of open data. Mechanize. Screen_scraping. Using A Gui To Build Packages. Not everyone is a command line junkie.

Some folks actually prefer the comfort of a Windows GUI application for performing tasks such as package creation. The NuGet Package Explorer click-once application makes creating packages very easy. C# - Scraping Content From Webpage?

Web scraping with Python. What are good Perl or Python starting points for a site scraping library. Webscraping with Python. Scrape.py. Scrape.py is a Python module for scraping content from webpages.

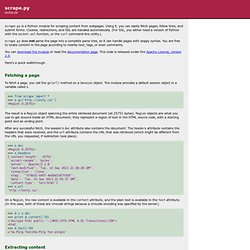

Using it, you can easily fetch pages, follow links, and submit forms. Cookies, redirections, and SSL are handled automatically. (For SSL, you either need a version of Python with the socket.ssl function, or the curl command-line utility.) scrape.py does not parse the page into a complete parse tree, so it can handle pages with sloppy syntax. You are free to locate content in the page according to nearby text, tags, or even comments. Julian_Todd / Python mechanize cheat sheet. Mechanize — Documentation. Full API documentation is in the docstrings and the documentation of urllib2.
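The scrape.py description above mentions locating content by nearby text, tags, or comments instead of building a complete parse tree, which is why sloppy markup is not a problem. A minimal sketch of that idea follows, using only the standard library's `re` module. It is not scrape.py's API; the function `text_after` and the sample page are hypothetical, chosen only to illustrate the approach.

```python
import re

# Hypothetical page with sloppy markup: unclosed <p> and <b> tags, unquoted attribute.
SLOPPY_PAGE = """
<html><body>
<p>Welcome
<p><b>Price: <span class=amount>19.99</span> USD
<!-- stock -->12 left
</body>
"""


def text_after(page, marker):
    """Return the first run of text following `marker`, skipping any tags.

    Returns None if the marker is absent or no text follows it.
    """
    start = page.find(marker)
    if start == -1:
        return None
    rest = page[start + len(marker):]
    # Skip whitespace and any number of tags, then capture text up to the next tag.
    match = re.match(r"\s*(?:<[^>]*>\s*)*([^<]+)", rest)
    return match.group(1).strip() if match else None


if __name__ == "__main__":
    print(text_after(SLOPPY_PAGE, "Price:"))
    print(text_after(SLOPPY_PAGE, "<!-- stock -->"))
```

Because the search works on the raw text, unclosed tags never matter; the trade-off is that the result depends on the marker text staying stable across page revisions.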

The documentation in these web pages is in need of reorganisation at the moment, after the merge of ClientCookie and ClientForm into mechanize. Tests and examples Examples. Screen Scraping. Probabilistic Graphical Models. About the Course What are Probabilistic Graphical Models? Uncertainty is unavoidable in real-world applications: we can almost never predict with certainty what will happen in the future, and even in the present and the past, many important aspects of the world are not observed with certainty. Probability theory gives us the basic foundation to model our beliefs about the different possible states of the world, and to update these beliefs as new evidence is obtained.

These beliefs can be combined with individual preferences to help guide our actions, and even in selecting which observations to make. While probability theory has existed since the 17th century, our ability to use it effectively on large problems involving many inter-related variables is fairly recent, and is due largely to the development of a framework known as Probabilistic Graphical Models (PGMs). Course Syllabus Topics covered include: Cryptography. Game Theory. Machine Learning. Khan Academy. Computer Science 101. UPDATE: we're doing a live, updated MOOC of this course at stanford-online July-2014 (not this Coursera version).

See here: CS101 teaches the essential ideas of Computer Science for a zero-prior-experience audience. Computers can appear very complicated, but in reality, computers work within just a few, simple patterns. CS101 demystifies and brings those patterns to life, which is useful for anyone using computers today. In CS101, students play and experiment with short bits of "computer code" to bring to life the power and limitations of computers. Noticeboard for all MSc in Computing Students - DIT.