Comparison of the Most Useful Text Processing APIs – ActiveWizards: machine learning company.

Nowadays, text processing is developing rapidly, and several big companies offer products that help to deal successfully with diverse text processing tasks.

If you need to do text processing, there are two options. The first is to develop the entire system on your own from scratch, which is very time- and resource-consuming. Alternatively, you can use existing solutions developed by well-known companies, which is usually faster and simpler.

APIs for text processing are very popular and useful. When working with text processing, the data analyst faces the following tasks:

100 Times Faster Natural Language Processing in Python. So, how can we speed up these loops?
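The Cython item that follows sets up the loop in question. It iterates over a list of rectangle objects and counts how many have an area above a threshold. Below is a minimal pure-Python baseline of that loop. It assumes a hypothetical `Rectangle` class with `w` and `h` attributes. It is the kind of slow, interpreter-bound loop the Cython approach tries to speed up, not the article's Cython code.

```python
import random
from dataclasses import dataclass


@dataclass
class Rectangle:
    """Hypothetical rectangle stored as a plain Python object."""
    w: float
    h: float

    def area(self) -> float:
        return self.w * self.h


def count_large(rectangles: list, threshold: float) -> int:
    """Count rectangles whose area is strictly larger than `threshold`.

    Every iteration does Python-level attribute lookups and a method call,
    which is what makes this loop slow on very large lists.
    """
    n_out = 0
    for rect in rectangles:
        if rect.area() > threshold:
            n_out += 1
    return n_out


if __name__ == "__main__":
    random.seed(0)
    rects = [Rectangle(random.random(), random.random()) for _ in range(100_000)]
    print(count_large(rects, threshold=0.25))
```

A Cython version would move this same loop into typed, compiled code. That step needs a Cython build and is not reproduced here.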

Fast Loops in Python with a bit of Cython. Let’s work this out on a simple example. Say we have a large set of rectangles that we store as a list of Python objects, e.g. instances of a Rectangle class. The main job of our module is to iterate over this list and count how many rectangles have an area larger than a specific threshold.

Build your own Knowledge Graph – VectrConsulting. Do you have a lot of text documents stored on hard disks or in the cloud, but you don't use their textual information directly in your business?

Then this article is for you. Learn how you can leverage artificial intelligence to use that dark data and turn it into valuable business insights using a Knowledge Graph. Many organisations have large amounts of information contained in free-text documents. Processing these documents often entails categorising the information they contain: humans read the documents and label them, and the labels and metadata are then stored in a database together with a link to the original document. Over time, as the business changes, these documents cannot be used in a new context unless they are relabelled and reprocessed, which is cumbersome to do manually.
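The manual workflow described above can be sketched with Python's built-in `sqlite3` module. In that workflow, humans label documents, and the labels and metadata go into a database alongside a link to the original file. The table layout, column names, and example URIs below are all hypothetical. They illustrate one point: each stored label is tied to a fixed labelling scheme chosen at reading time.

```python
import sqlite3


def create_store(conn: sqlite3.Connection) -> None:
    # Hypothetical schema: one row per document, one row per (document, label) pair.
    conn.executescript(
        """
        CREATE TABLE documents (
            id INTEGER PRIMARY KEY,
            uri TEXT NOT NULL,   -- link to the original document
            department TEXT      -- example metadata field
        );
        CREATE TABLE labels (
            doc_id INTEGER REFERENCES documents(id),
            label TEXT NOT NULL  -- label assigned by a human reader
        );
        """
    )


def add_labelled_document(conn, uri, department, labels):
    """Store one document link, its metadata, and its human-assigned labels."""
    cur = conn.execute(
        "INSERT INTO documents (uri, department) VALUES (?, ?)", (uri, department)
    )
    doc_id = cur.lastrowid
    conn.executemany(
        "INSERT INTO labels (doc_id, label) VALUES (?, ?)",
        [(doc_id, label) for label in labels],
    )
    return doc_id


def documents_with_label(conn, label):
    """Return the URIs of all documents a human tagged with `label`."""
    rows = conn.execute(
        "SELECT d.uri FROM documents d JOIN labels l ON l.doc_id = d.id "
        "WHERE l.label = ? ORDER BY d.id",
        (label,),
    )
    return [uri for (uri,) in rows]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_store(conn)
    add_labelled_document(conn, "file:///archive/contract-001.pdf", "legal", ["contract"])
    add_labelled_document(conn, "file:///archive/memo-017.docx", "sales", ["memo"])
    print(documents_with_label(conn, "contract"))
```

A query like `documents_with_label(conn, "contract")` only finds what a human has already tagged. A new business question that needs a different label set means re-reading and relabelling the documents.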

Automation to the rescue. The list of topics found automatically needs to be labeled to have any meaning. A human with domain knowledge is needed to do this labeling properly and to create an ontology.

GluonNLP – Deep Learning Toolkit for Natural Language Processing. Original author: Sheng Zha; translated by Hao Jin and Thomas Delteil. Why are the results of the latest models so difficult to reproduce?

Why is the code that worked fine last year not compatible with the latest release of my deep learning framework? Why is a baseline benchmark meant to be straightforward so difficult to set up? In today’s world, these are the challenges faced by Natural Language Processing (NLP) researchers. Let’s take the case of a hypothetical PhD student. Soon, our friend found out that the official implementation’s hyperparameters differed widely from the ones mentioned in the paper. Fast-forward three days… All the GPUs in the lab cluster are starting to smoke after being run at 100% capacity continuously. Half a month passed… Finally, the project maintainer appeared and replied that he would look into it.

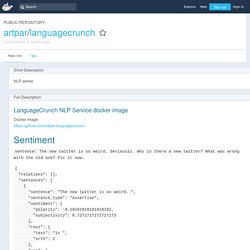

Topic: options-trading.
Topic: semantic-role-labeling.
Luheng He: Deep Semantic Role Labeling: What Works and What’s Next.
UKPLab/eacl2017-oodFrameNetSRL: Implementation of a simple frame identification approach (SimpleFrameId) described in the paper "Out-of-domain FrameNet Semantic Role Labeling".
Gildea cl02.
Text Analysis, Text Mining, and Information Retrieval Software.
Udibr/headlines: Automatically generate headlines to short articles.
Languagecrunch/main.py at master · artpar/languagecrunch.
Hub.docker.
Docker Sentiment sentence: "The new twitter is so weird. Seriously."
Pycorenlp.StanfordCoreNLP Python Example.
Open Information Extraction.

Information extraction. Information extraction (IE) is the task of automatically extracting structured information from unstructured and/or semi-structured machine-readable documents.

In most cases, this activity concerns processing human-language texts by means of natural language processing (NLP). Recent activities in multimedia document processing, such as automatic annotation and content extraction from images, audio, and video, could also be seen as information extraction. Due to the difficulty of the problem, current approaches to IE focus on narrowly restricted domains. An example is the extraction of corporate mergers from newswire reports, as denoted by the formal relation:

Open information extraction. In natural language processing, open information extraction (OIE) is the task of generating a structured, machine-readable representation of the information in text, usually in the form of triples or n-ary propositions.

A proposition can be understood as a truth-bearer, a textual expression of a potential fact (e.g., "Dante wrote the Divine Comedy"), represented in a structure amenable to computers [e.g., ("Dante", "wrote", "Divine Comedy")]. An OIE extraction normally consists of a relation and a set of arguments. For instance, ("Dante", "passed away in", "Ravenna") is a proposition formed by the relation "passed away in" and the arguments "Dante" and "Ravenna".
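The relation-plus-arguments structure described above can be represented as a small Python data class. The `Extraction` class and the `naive_extract` helper are hypothetical. The helper uses the simplest possible heuristic: it takes the text linking two already-identified arguments as the relation. It is a toy illustration, not how any real OIE system works internally.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Extraction:
    """A textual proposition: one relation plus an ordered tuple of arguments."""
    relation: str
    arguments: tuple[str, ...]

    @property
    def subject(self) -> str:
        # By convention the first argument is treated as the subject.
        return self.arguments[0]

    @property
    def obj(self) -> Optional[str]:
        # The second argument, if present, is treated as the object.
        return self.arguments[1] if len(self.arguments) > 1 else None


def naive_extract(sentence: str, arg1: str, arg2: str) -> Optional[Extraction]:
    """Toy heuristic: the relation is the text between two known arguments."""
    start1 = sentence.find(arg1)
    if start1 == -1:
        return None
    end1 = start1 + len(arg1)
    start2 = sentence.find(arg2, end1)  # second argument must follow the first
    if start2 == -1:
        return None
    relation = sentence[end1:start2].strip(" ,")
    if not relation:
        return None
    return Extraction(relation=relation, arguments=(arg1, arg2))


if __name__ == "__main__":
    e = naive_extract("Dante passed away in Ravenna.", "Dante", "Ravenna")
    print(e, e.subject, e.obj)
```

On "Dante wrote the Divine Comedy" with arguments "Dante" and "Divine Comedy", this heuristic returns the relation "wrote the" rather than "wrote". The failure shows why practical OIE needs linguistic analysis rather than plain string slicing.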

The first argument is usually referred to as the subject, while the second is considered to be the object.[1] The extraction is said to be a textual representation of a potential fact because its elements are not linked to a knowledge base.

Cloud Natural Language: Insightful text analysis. Natural Language uses machine learning to reveal the structure and meaning of text.

You can extract information about people, places, and events, and better understand social media sentiment and customer conversations. Natural Language enables you to analyze text and also integrate it with your document storage on Google Cloud Storage.

AutoML Natural Language.
Apache UIMA - Apache UIMA.
Natural Language Processing (NLP) Techniques for Extracting Information.

Xtext - Language Engineering Made Easy! Build the Language You Want!

Xtext can build full-featured text editors for both general-purpose and domain-specific languages. In the background it uses the LL(*) parser generator of ANTLR, which allows it to cover a wide range of syntaxes. Xtext editors have already been implemented for JavaScript, VHDL, Xtend, and many other languages. Compile to Whatever You Want! You define the target format to which your language is compiled. Highly Customizable: the default behavior of Xtext is optimized to cover a wide range of languages and use cases. Single Sourcing: the grammar definition language of Xtext is not just for the parser.

Igordejanovic/textX: Domain-Specific Languages in Python made easy.

Orange – Getting Started.

Download and Install: download the Orange distribution package and run the installation file on your local computer.

Here is a step-by-step installation guide that we recommend you follow. Run: locate the Orange program icon.

Gensim: Tutorials. The tutorials are organized as a series of examples that highlight various features of gensim. It is assumed that the reader is familiar with the Python language, has installed gensim, and has read the introduction. Preliminaries: all the examples can be directly copied to your Python interpreter shell. IPython’s cpaste command is especially handy for copy-pasting code fragments, including the leading >>> characters.

Gensim – Text Mining Online. I launched WordSimilarity in April; it focuses on computing the similarity between two words with a word2vec model trained on Wikipedia data. The website has the English Word2Vec Model for English Word Similarity: Exploiting Wikipedia Word Similarity by … After “Training a Chinese Wikipedia Word2Vec Model by Gensim and Jieba”, we continue with “Training a Japanese Wikipedia Word2Vec Model by Gensim and Mecab” using the “Wikipedia_Word2vec” related scripts.
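The WordSimilarity site described above scores a pair of words by comparing their word2vec vectors. The standard measure for this is cosine similarity, which is what gensim's `KeyedVectors.similarity` computes. The sketch below implements it with the standard library only. The three-dimensional vectors are made-up toy values, not real Wikipedia-trained embeddings, which usually have a few hundred dimensions.

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: dot(u, v) / (|u| * |v|)."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


# Toy, hand-made "embeddings" (hypothetical values for illustration only).
vectors = {
    "king": [0.8, 0.3, 0.1],
    "queen": [0.7, 0.4, 0.1],
    "banana": [0.0, 0.1, 0.9],
}

if __name__ == "__main__":
    print(round(cosine_similarity(vectors["king"], vectors["queen"]), 3))
    print(round(cosine_similarity(vectors["king"], vectors["banana"]), 3))
```

With a trained gensim model, the equivalent call is `model.wv.similarity("king", "queen")`.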

As before, first download the latest Japanese Wikipedia dump data: You can use …

Graus.co.
Explosion/spaCy: Industrial-strength Natural Language Processing (NLP) with Python and Cython.
RaRe-Technologies/gensim: Topic Modelling for Humans.
Topic: natural-language-processing.
Maximedb/nlp_papers: NLP papers applicable to financial markets.
Open Information Extraction.
Dair-iitd/OpenIE-standalone.
Scrapinghub/python-crfsuite: A python binding for crfsuite.

The Stanford Natural Language Processing Group. Open information extraction (open IE) refers to the extraction of relation tuples, typically binary relations, from plain text.

The central difference from other information extraction is that the schema for these relations does not need to be specified in advance; typically, the relation name is just the text linking two arguments.

Pycorenlp.

Python Programming Tutorials. Guest post by Chuck Dishmon. An alternative to NLTK's named entity recognition (NER) classifier is provided by the Stanford NER tagger. This tagger is widely seen as the standard in named entity recognition, but because it uses an advanced statistical learning algorithm, it is more computationally expensive than the option provided by NLTK. A big benefit of the Stanford NER tagger is that it provides a few different models for pulling out named entities.

We can use any of the following: a 3-class model for recognizing locations, persons, and organizations; a 4-class model for recognizing locations, persons, organizations, and miscellaneous entities; and a 7-class model for recognizing locations, persons, organizations, times, money, percents, and dates. To move forward, we'll need to download the models and a jar file, since the NER classifier is written in Java. The parameters passed to the StanfordNERTagger class include:

cTAKES. Apache cTAKES (clinical Text Analysis and Knowledge Extraction System) is an open-source natural language processing system for extracting information from the clinical free text in electronic health records.

It processes clinical notes, identifying types of clinical named entities: drugs, diseases/disorders, signs/symptoms, anatomical sites, and procedures. Each named entity has attributes for the text span, the ontology mapping code, context (family history of, current, unrelated to patient), and negated/not negated.[1] cTAKES was built using the UIMA (Unstructured Information Management Architecture) framework and the OpenNLP natural language processing toolkit.[2][3] Its components are specifically trained for the clinical domain and create rich linguistic and semantic annotations that can be used by clinical decision support systems and clinical research.
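The per-entity attributes listed above can be pictured as a simple record: text span, ontology mapping code, context, and negation status. The Python class below is a hypothetical illustration only. cTAKES itself is a Java/UIMA system with its own type system. The trigger-word negation check is a deliberately naive stand-in, not cTAKES's negation algorithm, and the concept code in the example is a placeholder.

```python
from dataclasses import dataclass

# Hypothetical negation cues; real clinical negation detection is far richer.
NEGATION_CUES = ("no ", "denies ", "without ", "negative for ")


@dataclass
class ClinicalMention:
    text: str           # surface text of the mention
    begin: int          # character offset where the span starts
    end: int            # character offset where the span ends (exclusive)
    entity_type: str    # illustrative type name, e.g. "SignSymptom"
    ontology_code: str  # concept code from a terminology (placeholder here)
    context: str        # e.g. "current", "family_history", "unrelated"
    negated: bool


def is_negated(note: str, begin: int, window: int = 30) -> bool:
    """Naive check: does a negation cue occur in the `window` chars before the span?"""
    preceding = note[max(0, begin - window):begin].lower()
    return any(cue in preceding for cue in NEGATION_CUES)


def make_mention(note, surface, entity_type, code, context="current"):
    """Locate `surface` in the note and build a mention record for it."""
    begin = note.find(surface)
    if begin == -1:
        raise ValueError(f"{surface!r} not found in note")
    end = begin + len(surface)
    return ClinicalMention(surface, begin, end, entity_type, code, context,
                           negated=is_negated(note, begin))


if __name__ == "__main__":
    note = "Patient denies chest pain. Reports mild headache."
    for surface in ("chest pain", "headache"):
        print(make_mention(note, surface, "SignSymptom", "CODE-PLACEHOLDER"))
```

Limiting the cue search to a short window keeps "denies" from also negating "headache" in the next sentence. A fixed character window is still only a rough proxy for negation scope.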

Apache OpenNLP - Welcome to Apache OpenNLP.
Enterprise Search and Big Data Analytics White Papers.