Pdf/1312.5602v1.pdf. Nbviewer.ipython.org/github/jakevdp/ESAC-stats-2014/blob/master/notebooks/Index.ipynb. An Introduction to Feature Selection. Which features should you use to create a predictive model?

This is a difficult question that may require deep knowledge of the problem domain. It is possible to automatically select those features in your data that are most useful or most relevant for the problem you are working on. This is a process called feature selection. In this post you will discover feature selection, the types of methods that you can use and a handy checklist that you can follow the next time that you need to select features for a machine learning model. An Introduction to Feature SelectionPhoto by John Tann, some rights reserved What is Feature Selection Feature selection is also called variable selection or attribute selection.

It is the automatic selection of attributes in your data (such as columns in tabular data) that are most relevant to the predictive modeling problem you are working on. Take Control By Creating Targeted Lists of Machine Learning Algorithms. Any book on machine learning will list and describe dozens of machine learning algorithms.

Once you start using tools and libraries you will discover dozens more. This can really wear you down, if you think you need to know about every possible algorithm out there. A simple trick to tackle this feeling and take some control back is to make lists of machine learning algorithms. This ridiculously simple tactic can give you a lot of power. You can use it to give you a list of methods to try when tackling a whole new class of problem. Hacker's guide to Neural Networks. Hi there, I’m a CS PhD student at Stanford.

I’ve worked on Deep Learning for a few years as part of my research and among several of my related pet projects is ConvNetJS - a Javascript library for training Neural Networks. Javascript allows one to nicely visualize what’s going on and to play around with the various hyperparameter settings, but I still regularly hear from people who ask for a more thorough treatment of the topic. This article (which I plan to slowly expand out to lengths of a few book chapters) is my humble attempt. It’s on web instead of PDF because all books should be, and eventually it will hopefully include animations/demos etc. My personal experience with Neural Networks is that everything became much clearer when I started ignoring full-page, dense derivations of backpropagation equations and just started writing code.

What are some time-series classification methods? Обзор наиболее интересных материалов по анализу данных и машинному обучению №19 (20 — 26 октября 2014) Tutorial: How to detect spurious correlations, and how to find the real ones. Specifically designed in the context of big data in our research lab, the new and simple strong correlation synthetic metric proposed in this article should be used, whenever you want to check if there is a real association between two variables, especially in large-scale automated data science or machine learning projects.

Use this new metric now, to avoid being accused of reckless data science and even being sued for wrongful analytic practice. In this paper, the traditional correlation is referred to as the weak correlation, as it captures only a small part of the association between two variables: weak correlation results in capturing spurious correlations and predictive modeling deficiencies, even with as few as 100 variables.

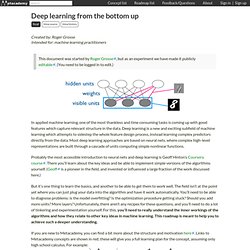

In short, even nowadays, what makes two variables X and Y seem related in most scientific articles and pretty much all articles written by journalists, is based on ordinary (weak) regression. 1. So you wanna try Deep Learning? - Exchangeable random experiments. I’m keeping this post quick and dirty, but at least it’s out there.

The gist of this post is that I put out a one file gist that does all the basics, so that you can play around with it yourself. [repost ] Machine learning start here. Index of /yks/documents/classes/mlbook/pdf. Selection: Machine Learning. Learn about the most effective machine learning techniques, and gain practice implementing them and getting them to work for yourself.

Machine learning is the science of getting computers to act without being explicitly programmed. In the past decade, machine learning has given us self-driving cars,... 3 March 2014, 10 weeks. Machine learning textbook. Elements of Statistical Learning: data mining, inference, and prediction. 2nd Edition. Neural Networks and Deep Learning, free online book (draft) A free online book explaining the core ideas behind artificial neural networks and deep learning (draft), with new chapters added every 2-3 months.

By Gregory Piatetsky, @kdnuggets, Sep 20, 2014. Here is a Machine Learning gem I found on the web: a free online book on Neural Networks and Deep Learning , written by Michael Nielsen, a scientist, writer, and programmer. So you wanna try Deep Learning? I’m keeping this post quick and dirty, but at least it’s out there.

The gist of this post is that I put out a one file gist that does all the basics, so that you can play around with it yourself. First of all, I would say that deep learning is simply kernel machines whose kernel we learn. That’s gross but that’s not totally false. Second of all, there is nothing magical about deep learning, just that we can efficiently train (GPUs, clusters) large models (millions of weights, billions if you want to make a Wired headline) on large datasets (millions of images, thousands of hours of speech, more if you’re GOOG/FB/AAPL/MSFT/NSA). I think a good part of the success of deep learning comes from the fact that practitionners are not affraid to go around beautiful mathematical principles to have their model work on whatever dataset and whatever task.

Deep learning from the bottom up. This document was started by Roger Grosse, but as an experiment we have made it publicly editable.

(You need to be logged in to edit.) In applied machine learning, one of the most thankless and time consuming tasks is coming up with good features which capture relevant structure in the data. Deep learning is a new and exciting subfield of machine learning which attempts to sidestep the whole feature design process, instead learning complex predictors directly from the data. Most deep learning approaches are based on neural nets, where complex high-level representations are built through a cascade of units computing simple nonlinear functions.

Probably the most accessible introduction to neural nets and deep learning is Geoff Hinton’s Coursera course. Nbviewer.ipython.org/github/twiecki/financial-analysis-python-tutorial/blob/master/1. Pandas Basics.ipynb. R&D Blog: Data Characters in Search of An Author. My last post on Implied Stories was about how we fill in the blanks to create story contexts in even very short works, like Hemingway's example of "the shortest story every told": "For sale: Baby shoes, never worn.

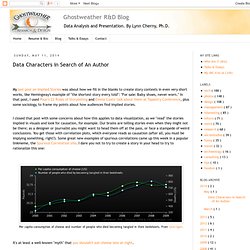

" In that post, I used Pixar's 22 Rules of Storytelling and Emma Coats' talk about them at Tapestry Conference, plus some sociology, to frame my points about how audiences find implied stories. I closed that post with some concerns about how this applies to data visualization, as we "read" the stories implied in visuals and look for causation, for example. Our brains are telling stories even when they might not be there; as a designer or journalist you might want to head them off at the pass, or face a stampede of weird conclusions.

You get those with correlation plots, which everyone reads as causation (after all, you must be implying something, right?). Some great new examples of spurious correlations came up this week in a popular linkmeme, the Spurious Correlation site. Principal Component Analysis step by step. In this article I want to explain how a Principal Component Analysis (PCA) works by implementing it in Python step by step.

At the end we will compare the results to the more convenient Python PCA()classes that are available through the popular matplotlib and scipy libraries and discuss how they differ. The main purposes of a principal component analysis are the analysis of data to identify patterns and finding patterns to reduce the dimensions of the dataset with minimal loss of information. Here, our desired outcome of the principal component analysis is to project a feature space (our dataset consisting of n x d-dimensional samples) onto a smaller subspace that represents our data "well".

A possible application would be a pattern classification task, where we want to reduce the computational costs and the error of parameter estimation by reducing the number of dimensions of our feature space by extracting a subspace that describes our data "best". Pdf/1206.5538.pdf. Unsupervised Kernel Regression (UKR) Ukr.pdf. Detecting Novel Associations in Large Datasets. Learning more like a human: 18 free eBooks on Machine Learning.

Обзор наиболее интересных материалов по анализу данных и машинному обучению №9 (11 — 18 августа 2014) Nbviewer.ipython.org/github/GaelVaroquaux/sklearn_pandas_tutorial/blob/master/rendered_notebooks/01_introduction.ipynb. 100 Most Popular Machine Learning Talks at VideoLectures.Net. Infinite Mixture Models with Nonparametric Bayes and the Dirichlet Process. Imagine you’re a budding chef. A data-curious one, of course, so you start by taking a set of foods (pizza, salad, spaghetti, etc.) and ask 10 friends how much of each they ate in the past day. Your goal: to find natural groups of foodies, so that you can better cater to each cluster’s tastes. For example, your fratboy friends might love wings and beer, your anime friends might love soba and sushi, your hipster friends probably dig tofu, and so on. IPython Notebooks for StatLearning Exercises.

Earlier this year, I attended the StatLearning: Statistical Learning course, a free online course taught by Stanford University professors Trevor Hastie and Rob Tibshirani. They are also the authors of The Elements of Statistical Learning (ESL) and co-authors of its less math-heavy sibling: An Introduction to Statistical Learning (ISL). The course was based on the ISL book. Each week's videos were accompanied by some hands-on exercises in R. I personally find it easier to work with Python than R. R seems to have grown organically with very little central oversight, so function and package names are often non-intuitive, and often have duplicate or overlapping functionality.

At the time, I had worked a bit with scikit-learn and NumPy. So I decided to apply my newly acquired skills to do this rewrite. There are 9 notebooks listed below, corresponding to the exercises for Chapters 2-10 of the course. Java Machine Learning. Are you a Java programmer and looking to get started or practice machine learning? Writing programs that make use of machine learning is the best way to learn machine learning. You can write the algorithms yourself from scratch, but you can make a lot more progress if you leverage an existing open source library. UFLDL Tutorial - Ufldl.

Recommending music on Spotify with deep learning – Sander Dieleman. This summer, I’m interning at Spotify in New York City, where I’m working on content-based music recommendation using convolutional neural networks. In this post, I’ll explain my approach and show some preliminary results. Overview This is going to be a long post, so here’s an overview of the different sections. If you want to skip ahead, just click the section title to go there. Collaborative filteringA very brief introduction, its virtues and its flaws. Tutorials. Neural networks and deep learning.

Learning more like a human: 18 free eBooks on Machine Learning « Big Data Made Simple. IPython Notebooks for StatLearning Exercises. Deep Learning of Representations. Deep Learning Research Groups « Deep Learning. Machine Learning Tutorial: High Performance Text Processing - Open Source. A Primer on Deep Learning. CS 229: Machine Learning Final Projects, Autumn 2013. A Tour of Machine Learning Algorithms. In this post, we take a tour of the most popular machine learning algorithms. It is useful to tour the main algorithms in the field to get a feeling of what methods are available. There are so many algorithms available that it can feel overwhelming when algorithm names are thrown around and you are expected to just know what they are and where they fit. Machine Learning Group: Research - University of Toronto. 4-Steps to Get Started in Machine Learning: The Top-Down Strategy for Beginners to Start and Practice.

Getting started is much easier than you think. [] Research.microsoft.com/pubs/69588/tr-95-06.pdf. Neural Networks, Manifolds, and Topology. Posted on April 6, 2014. ConvNetJS demo: Classify toy 2D data. - Best NLP books. Do you know any Natural Language Processing best-sellers? Natural Language Processing (NLP) is a vast field and what is more important - today it’s a fast-moving area of research.

In this post we propose you to have a look at our review of the most interesting books about NLP. We know that every researcher and scientist must have a good theoretical foundation. That’s why we are recommending these books for your consideration and discussion. Neural Networks, Manifolds, and Topology. Topic Modeling In R. Editor's note: This is the first in a series of posts from rOpenSci's recent hackathon. I recently had the pleasure of participating in rOpenSci's hackathon.

To be honest, I was quite nervous to work among such notables, but I immediately felt welcome thanks to a warm and personable group. Alyssa Frazee has a great post summarizing the event, so check that out if you haven't already. Once again, many thanks to rOpenSci for making it possible! In addition to learning and socializing at the hackathon, I wanted to ensure my time was productive, so I worked on a mini-project related to my research in text mining. rOpenSci has plethora of R packages for extracting literary content off the web, including elife, which is a lightweight interface to the elife API.

Machine Learning: What are currently the hot topics in Machine Learning research and in real applications. Machine Learning. Курс от Яндекса для тех, кто хочет провести новогодние каникулы с пользой / Блог компании Яндекс. Метод Виолы-Джонса (Viola-Jones) как основа для распознавания лиц. What are the advantages of different classification algorithms. Template for Working through Machine Learning Problems in Weka. When you are getting started in Weka, you may feel overwhelmed. There are so many datasets, so many filters and so many algorithms to choose from. There is too much choice. There are too many things you could be doing.

Structured process is key. How to Define Your Machine Learning Problem. The first step in any project is defining your problem. You can use the most powerful and shiniest algorithms available, but the results will be meaningless if you are solving the wrong problem. In this post you will learn the process for thinking deeply about your problem before you get started. Data Hacking by ClickSecurity. [] Improve Machine Learning Results with Boosting, Bagging and Blending Ensemble Methods in Weka. Machine Learning Library (MLlib) - Spark 0.9.0 Documentation. Info.sumologic.com/rs/sumologic/images/Andrzejewski-ML-for-Machine-Data.pdf. Anaconda — Continuum documentation. Курс «Машинное обучение» — Школа анализа данных. Selection: Data Science. Selection: Machine Learning. Lecture 01 - The Learning Problem. Machine learning - introduction. Infolab.stanford.edu/~ullman/mmds/ch1. [1402.4306] Student-t Processes as Alternatives to Gaussian Processes.

Itc.ktu.lt/itc351/Karbaus351.pdf. An Introduction To Mahout’s Logistic Regression SGD Classifier. Conference.scipy.org/scipy2011/slides/mckinney_time_series.pdf. Www8.cs.umu.se/education/examina/Rapporter/WajidAli.pdf. Machine learning. Machine Learning. Comp.utm.my/pars/files/2013/04/Comparison-of-Canny-and-Sobel-Edge-Detection-in-MRI-Images.pdf. Интерактивный анализ данных. Фазовый портрет. Калман, Матлаб, и State Space Models. Анализ временных рядов с помощью python. Методика сравнения алгоритмов и для чего она ещё может пригодиться. Sergey Karayev. Деревья принятия решений на JavaScript. Scikit-learn. Machine learning. Введение в оптимизацию. Имитация отжига. Introduction to Machine Learning.

Stanford Statistical Learning. A List of Data Science and Machine Learning Resources. Machine Learning. Comparing machine learning classifiers based on their hyperplanes for "package users" - Data Scientist in Ginza, Tokyo. An Introduction to Decision Trees with Julia - BenSadeghi. Machine learning - linear prediction. Chemoton § Vitorino Ramos' research notebook. Machine Learning Day 2013 - Clustering: Probably Approximately Useless; Geometry Preserving Non-Linear Dimension Reduction. Topological Data Analysis: A Framework for Machine Learning. The Learning and Intelligent OptimizatioN solver. Johnmyleswhite/ML_for_Hackers.