http://www.cpan.org/index.html

Models Collecting Dust? How to Transform Your Results from Interesting to Impactful
Data science, machine learning, and analytics have redefined how we look at the world. The R community plays a vital role in that transformation, and the R language continues to be the de facto choice for statistical computing, data analysis, and many machine learning scenarios. The SQL Server team first recognized the importance of R back in 2016 with the launch of SQL ML Services and R Server. We added Python to SQL ML Services in 2017 and Java support through our language extensions in 2019. Earlier this year we also announced the general availability of SQL ML Services in Azure SQL Managed Instance. SparkR, sparklyr, and PySpark are also available as part of SQL Server Big Data Clusters.

CSS Query (I've been out of practice)
James Robert Gardiner
Hi guys, I've been majorly out of practice with website development, and I'm currently developing a website for a friend of mine. Essentially he wants a scrolling website with anchor links, which is fine. I have whipped up a pretty awesome background for him, and have even got it to fit the page by setting background-size to "cover".

Perl Training - Perl Guide: Getting Started and Progressing in Perl
Introduction
This Perl guide serves as course material for the Perl training. It is an introduction to the language, originally written for Linux Magazine France and published in the issues from July 2002 to February 2003, then republished in spring 2004 in Dossiers Linux 2.

Facebook Graph API Explorer with R (on Windows) « Consistently Infrequent
library(RCurl)
library(RJSONIO)
Facebook_Graph_API_Explorer <- function() {

Eclipse IDE for R
Background: Eclipse is an open source Integrated Development Environment (IDE). As with Microsoft's Visual Studio product, Eclipse is programming language-agnostic and supports any language that has a suitable plugin for the IDE platform. For Eclipse, the R language plugin is StatET. Figure 1 (above): Eclipse, StatET with R, and the R debugger (bottom window) at work.

R: Web Scraping R-bloggers Facebook Page « Consistently Infrequent
Introduction
R-bloggers.com is a blog aggregator maintained by Tal Galili. It is a great website for both learning about R and keeping up to date with the latest developments (because someone will probably, and very kindly, post about the status of some R-related feature).

Extracting comments from a Blogger.com blog post with R
Note #1: Check out this very useful post by Najko Jahn describing how to extract links to blogs via Google Blog Search. Note #2: I’ll update the code below, using Najko’s cleaner XPath-based solution, once I find the time. Recently I’ve been working with comments as part of the project on science blogging we’re doing at the Junior Researchers Group “Science and the Internet”. I wrote the script below to quickly extract comments from Atom feeds, such as those generated by Blogger.com.
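The script itself is not reproduced in this excerpt. As a rough illustration of the idea (pulling comment entries out of an Atom feed), here is a minimal Python sketch using only the standard library. It is not the author's R script: the sample feed, the `extract_comments` name, and the choice of fields are assumptions made for illustration, and a real Blogger.com feed may carry additional elements.

```python
import xml.etree.ElementTree as ET

# Standard Atom namespace (RFC 4287).
ATOM_NS = {"atom": "http://www.w3.org/2005/Atom"}

# Hypothetical sample feed, standing in for a downloaded comment feed.
SAMPLE_FEED = """<feed xmlns="http://www.w3.org/2005/Atom">
  <title>Comments on: Example post</title>
  <entry>
    <author><name>Alice</name></author>
    <published>2011-01-05T10:00:00Z</published>
    <content type="html">First comment</content>
  </entry>
  <entry>
    <author><name>Bob</name></author>
    <published>2011-01-06T12:30:00Z</published>
    <content type="html">Second comment</content>
  </entry>
</feed>"""


def extract_comments(feed_xml):
    """Return one dict per Atom <entry>, with author, date, and text."""
    root = ET.fromstring(feed_xml)
    comments = []
    for entry in root.findall("atom:entry", ATOM_NS):
        comments.append({
            "author": entry.findtext("atom:author/atom:name", default="", namespaces=ATOM_NS),
            "published": entry.findtext("atom:published", default="", namespaces=ATOM_NS),
            "text": entry.findtext("atom:content", default="", namespaces=ATOM_NS),
        })
    return comments


if __name__ == "__main__":
    for comment in extract_comments(SAMPLE_FEED):
        print(comment["published"], comment["author"], comment["text"])
```

Each entry becomes one record, so the result maps naturally onto a data frame or table with one row per comment.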

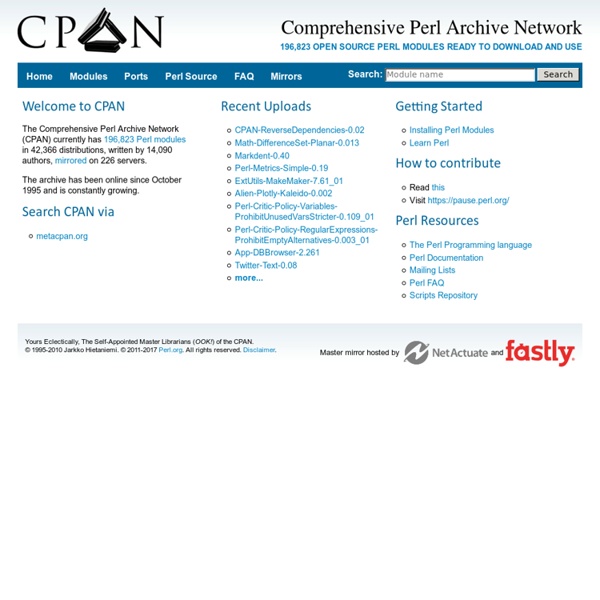

Web scraping
You are encouraged to solve this task according to the task description, using any language you may know. Create a program that downloads the time from this URL: and then prints the current UTC time by extracting just the UTC time from the web page's HTML. If possible, only use libraries that come at no extra monetary cost with the programming language and that are widely available and popular such as CPAN for Perl or Boost for C++.
Ada
AutoHotkey
UrlDownloadToFile, time.html
FileRead, timefile, time.html
pos := InStr(timefile, "UTC")
msgbox % time := SubStr(timefile, pos - 9, 8)

Web Scraping Google Scholar (Partial Success) « Consistently Infrequent
library(XML)
library(RCurl)
get_google_scholar_df <- function(u, omit.citation = TRUE) {
html <- getURL(u)
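For the Rosetta Code task quoted above (download a page, then print only the UTC time found in its HTML), the extraction step might be sketched in Python as follows. The task's URL is missing from this excerpt, so the sketch works on a hypothetical sample page instead of fetching anything; the `SAMPLE_HTML` content, the `extract_utc_time` name, and the assumed `HH:MM:SS UTC` layout are illustrative assumptions, not taken from the task page.

```python
import re

# Hypothetical page content; the real page's markup may differ.
SAMPLE_HTML = """<html><body>
<br>Jan. 10, 17:21:53 UTC
<br>Jan. 10, 12:21:53 PM EST
</body></html>"""

# Assumes the time appears as HH:MM:SS directly before the word "UTC".
UTC_TIME = re.compile(r"(\d{2}:\d{2}:\d{2})\s+UTC")


def extract_utc_time(html):
    """Return the first HH:MM:SS that precedes 'UTC', or None if absent."""
    match = UTC_TIME.search(html)
    return match.group(1) if match else None


if __name__ == "__main__":
    print(extract_utc_time(SAMPLE_HTML))
```

In a complete program the HTML would come from the standard library, for example `urllib.request.urlopen(url).read().decode()`, with `url` set to the address named in the task. Matching on a pattern rather than a fixed character offset from "UTC" tolerates small changes in the surrounding markup.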

Web Scraping Google Scholar: Part 2 (Complete Success) « Consistently Infrequent
library(RCurl)
library(XML)
get_google_scholar_df <- function(u) {
html <- getURL(u)