Hacking VR Interactivity: Preview "Focal Point" - a VR Design Framework. Laser-based tracking for real-time gesture acquisition. Tracking Principle. Experimental Setup. Beam-steering mechanism: The beam-steering mechanism is the critical part of the system, determining both the ultimate tracking performance and the compactness of the whole setup.

In our proof-of-principle experiment, a pair of high-performance closed-loop galvano-mirrors with axes perpendicular to each other (GSI Lumonics, Model V500) is used both for generating the saccade and for performing the actual tracking. The galvano-mirrors have a typical clear aperture of 5 mm and a maximum optical scan angle of ±50°. Decoupling the mechanism responsible for the saccade generation from the mechanism used to actually keep track of the object position can lead to optimal tracking speeds (for instance, acousto-optic deflectors or resonant micro-mirrors can be used to generate Lissajous-like saccade patterns, while slower but precise micro-mirrors are used to position the center of the saccade).
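The decoupling idea can be illustrated with a minimal sketch: a fast element traces a small Lissajous figure, while a slower element only sets the center of that figure. The function name, the frequency ratio, the phase, and the use of degrees below are assumptions chosen for illustration; they are not taken from the experiment described above.

```python
import math

def lissajous_saccade(center, amplitude, freq_x=3, freq_y=2,
                      phase=math.pi / 2, samples=64):
    """Return (x, y) deflection angles for one period of a Lissajous pattern.

    center    -- (x, y) angles set by the slow, precise mirrors (hypothetical units: degrees)
    amplitude -- half-width of the fast saccade around that center
    freq_x, freq_y, phase -- illustrative pattern parameters, not from the source
    """
    cx, cy = center
    points = []
    for i in range(samples):
        t = 2 * math.pi * i / samples
        # Fast deflector contribution, added on top of the slow center position.
        x = cx + amplitude * math.sin(freq_x * t + phase)
        y = cy + amplitude * math.sin(freq_y * t)
        points.append((x, y))
    return points

# The slow mirrors point at (10, -5); the fast pattern scans +/-1 around it.
pattern = lissajous_saccade(center=(10.0, -5.0), amplitude=1.0)
```

Moving the tracked position then only requires updating `center`, while the pattern itself can run at whatever rate the fast element allows.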

Demonstration and Results. Tracking precision. Dynamic Performances. Scanning LiDAR by Scanse. Chi15 chan. Fisheye camera calibration. MRL + Kinect + InMoov - the quest for accuracy. To display IK I've been trying to decide between Java3D (old and no longer supported or developed), JavaFX (new engine from Oracle), and WebGL + Three.js. Looking around I found this!

(Wow, very nice!) More interesting WebGL IK information, another one from the same author. Step 2: Get the data. We need detailed information regarding InMoov so we can use it in inverse kinematics and forward kinematics. Hopefully Gael will be able to provide us with information too. Once the mathematical model is working and behaving correctly, we can continue to add software to visualize the model. In trying to solve the problem, the first step is defining the problem. Step 1: The Problem. The Kinect shoulder joint is a single joint which can pivot in any direction. Oculus Rift + Kinect + KickR = Our Homage to Paperboy. Ironman demo - Oculus Rift - Kinect. Kinect a Oculus Rift. Vertigo: Kinect body-tracking and Oculus Rift DK2. VicoVR - Developers.
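For the shoulder problem stated in Step 1, one possible starting point is to split the free-pivoting Kinect shoulder into two sequential rotations that separate actuators could follow. This is only a sketch: the coordinate frame (x sideways, y up, z forward), the angle names, and the function itself are assumptions, and the real InMoov joint layout and limits would have to come from the data mentioned in Step 2.

```python
import math

def shoulder_angles(shoulder, elbow):
    """Split the upper-arm direction into (flexion, abduction) in degrees.

    Assumed frame: x to the side, y up, z forward.
    An arm hanging straight down gives (0, 0).
    """
    dx = elbow[0] - shoulder[0]
    dy = elbow[1] - shoulder[1]
    dz = elbow[2] - shoulder[2]
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0:
        raise ValueError("shoulder and elbow positions coincide")
    dx, dy, dz = dx / length, dy / length, dz / length

    # Abduction: sideways lift, the angle between the arm and the y-z plane.
    abduction = math.degrees(math.asin(max(-1.0, min(1.0, dx))))

    # Flexion: forward swing within the y-z plane, measured from straight down.
    if math.hypot(dy, dz) < 1e-9:
        flexion = 0.0  # arm points fully sideways; flexion is undefined there
    else:
        flexion = math.degrees(math.atan2(dz, -dy))
    return flexion, abduction

# Arm raised 45 degrees forward from the hanging position.
forward_45 = shoulder_angles((0.0, 0.0, 0.0), (0.0, -1.0, 1.0))
```

Two angles describe only the direction of the upper arm; rotation about the arm's own axis would need a third angle, typically derived from the elbow-to-wrist vector.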

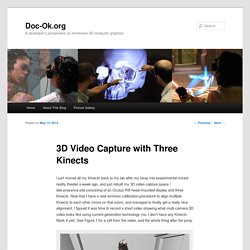

VicoVR. 3D Video Capture with Three Kinects. I just moved all my Kinects back to my lab after my foray into experimental mixed-reality theater a week ago, and just rebuilt my 3D video capture space / tele-presence site consisting of an Oculus Rift head-mounted display and three Kinects.

Now that I have a new extrinsic calibration procedure to align multiple Kinects to each other (more on that soon), and managed to finally get a really nice alignment, I figured it was time to record a short video showing what multi-camera 3D video looks like using current-generation technology (no, I don’t have any Kinects Mark II yet). See Figure 1 for a still from the video, and the whole thing after the jump.

Figure 1: A still frame from the video, showing the user’s real-time “holographic” avatar from the outside, providing a literal kind of out-of-body experience to the user. Now, one of the things that always echoes right back when I bring up Kinect and VR is latency, or rather, that the Kinect’s latency is too high to be useful for VR. Human body motion tracking with FreeIMU. Last updated on Wed, 2011-11-16 16:48.

Originally submitted by fabio on 2011-11-12 22:31. John Patillo, one of the very first people to start a "child" project based on my FreeIMU hardware and library, just posted a comment sharing the latest progress on his own Smart Skeleton project. John, who's a Biology and Human Anatomy & Physiology college teacher by day and an Arduino/electronics hacker by night, is working on using the inertial sensors in FreeIMU to track human body motion. Hi Fabio, I hope all is going well with your studies.

I just wanted to give you an update on what I've been doing with my modification of your FreeIMU circuit. Below is a picture of the "Skeleton IMU" John designed based on FreeIMU, built especially for daisy-chaining using the PCA9509 I2C repeater to allow long wires on the SDA and SCL I2C bus lines. I'm really looking forward to having access to more details on this project. True 3D video from Kinect 2 in Oculus Rift DK2. Casa Paganini - InfoMus. EyesWeb is an open software research platform for the design and development of real-time multimodal systems and interfaces.

EyesWeb Week 2014 - The 4rd Tutorial on the EyesWeb Open Platform (9-11 March 2014). EyesWeb is an open platform to support the design and development of real-time multimodal systems and interfaces. It supports a wide range of input devices, including motion capture systems, various types of professional and low-cost video cameras, game interfaces (e.g., Kinect, Wii), multichannel audio input (e.g., microphones), and analog inputs (e.g., for physiological signals). Supported outputs include multichannel audio, video, analog devices, and robotic platforms. Various standards are supported, including OSC, MIDI, FreeFrame and VST plugins, ASIO, Motion Capture standards and systems (Qualisys), and Matlab.