Probabilistic logic. The aim of a probabilistic logic (also probability logic and probabilistic reasoning) is to combine the capacity of probability theory to handle uncertainty with the capacity of deductive logic to exploit structure.

The result is a richer and more expressive formalism with a broad range of possible application areas. Probabilistic logics attempt to find a natural extension of traditional logic truth tables: the results they define are derived through probabilistic expressions instead. A difficulty with probabilistic logics is that they tend to multiply the computational complexities of their probabilistic and logical components. Other difficulties include the possibility of counter-intuitive results, such as those of Dempster-Shafer theory. The need to deal with a broad variety of contexts and issues has led to many different proposals.

Probability interpretations. The word probability has been used in a variety of ways since it was first applied to the mathematical study of games of chance.

Does probability measure the real, physical tendency of something to occur, or is it a measure of how strongly one believes it will occur, or does it draw on both these elements? In answering such questions, we interpret the probability values of probability theory. There are two broad categories[1][2] of probability interpretations, which can be called "physical" and "evidential" probabilities.

Bayesian probability. Bayesian probability is one of the different interpretations of the concept of probability and belongs to the category of evidential probabilities.

The Bayesian interpretation of probability can be seen as an extension of propositional logic that enables reasoning with propositions whose truth or falsity is uncertain. To evaluate the probability of a hypothesis, the Bayesian probabilist specifies some prior probability, which is then updated in the light of new, relevant data.[1] The Bayesian interpretation provides a standard set of procedures and formulae to perform this calculation.
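The prior-to-posterior update described above can be sketched in a few lines. This is a minimal illustration, not a standard library routine: the function name `bayes_update` and all the numbers are hypothetical, chosen only to show Bayes' theorem applied to a single hypothesis and a single piece of evidence.

```python
from fractions import Fraction


def bayes_update(prior, likelihood_if_true, likelihood_if_false):
    """Return P(H | E) given P(H), P(E | H) and P(E | not H)."""
    # Total probability of the evidence, summed over both cases of H.
    evidence = likelihood_if_true * prior + likelihood_if_false * (1 - prior)
    # Bayes' theorem: posterior = likelihood * prior / evidence.
    return likelihood_if_true * prior / evidence


# Hypothetical numbers: a hypothesis held with prior probability 1/100,
# and evidence that is nine times more likely if the hypothesis is true.
prior = Fraction(1, 100)
posterior = bayes_update(prior, Fraction(9, 10), Fraction(1, 10))

# The posterior serves as the prior when a second, independent
# observation of the same kind arrives.
posterior_after_two = bayes_update(posterior, Fraction(9, 10), Fraction(1, 10))

print(posterior, posterior_after_two)
```

Exact fractions are used so that the arithmetic can be checked by hand; floating-point numbers would work equally well.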

Frequentist probability. John Venn.

Propensity probability. The propensity theory of probability is one interpretation of the concept of probability.

Theorists who adopt this interpretation think of probability as a physical propensity, or disposition, or tendency of a given type of physical situation to yield an outcome of a certain kind, or to yield a long run relative frequency of such an outcome.[1] Propensities are not relative frequencies, but purported causes of the observed stable relative frequencies. Propensities are invoked to explain why repeating a certain kind of experiment will generate a given outcome type at a persistent rate.

Graphical model. An example of a graphical model.

Each arrow indicates a dependency. In this example, D depends on A, B, and C, and C depends on B and D.

A Brief Introduction to Graphical Models and Bayesian Networks. By Kevin Murphy, 1998.

"Graphical models are a marriage between probability theory and graph theory. They provide a natural tool for dealing with two problems that occur throughout applied mathematics and engineering -- uncertainty and complexity -- and in particular they are playing an increasingly important role in the design and analysis of machine learning algorithms. Fundamental to the idea of a graphical model is the notion of modularity -- a complex system is built by combining simpler parts. Probability theory provides the glue whereby the parts are combined, ensuring that the system as a whole is consistent, and providing ways to interface models to data."

Markov logic network. The goal of inference in a Markov logic network is to find the stationary distribution of the system, or one that is close to it; that this may be difficult or not always possible is illustrated by the richness of behaviour seen in the Ising model.

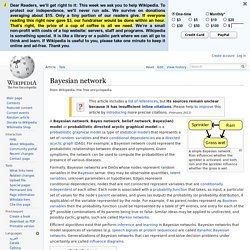

As in a Markov network, the stationary distribution finds the most likely assignment of probabilities to the vertices of the graph; in this case, the vertices are the ground atoms of an interpretation.

Bayesian network. A simple Bayesian network.

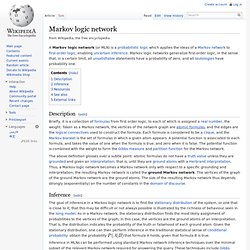

Rain influences whether the sprinkler is activated, and both rain and the sprinkler influence whether the grass is wet. A Bayesian network, Bayes network, belief network, Bayes(ian) model or probabilistic directed acyclic graphical model is a probabilistic graphical model (a type of statistical model) that represents a set of random variables and their conditional dependencies via a directed acyclic graph (DAG).
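The rain, sprinkler, and wet-grass network can be queried by brute-force enumeration of its joint distribution. The sketch below assumes illustrative conditional probability tables; the numeric values and the names `P_RAIN`, `P_SPRINKLER_GIVEN_RAIN`, and `P_WET_GIVEN` are not taken from the text above. The joint probability factorises along the DAG as P(rain) · P(sprinkler | rain) · P(wet | sprinkler, rain).

```python
from itertools import product

# Illustrative (assumed) conditional probability tables.
P_RAIN = 0.2
P_SPRINKLER_GIVEN_RAIN = {True: 0.01, False: 0.4}
# Keys are (sprinkler, rain); values are P(grass wet = True | sprinkler, rain).
P_WET_GIVEN = {
    (True, True): 0.99,
    (True, False): 0.9,
    (False, True): 0.8,
    (False, False): 0.0,
}


def bernoulli(p_true, value):
    """Probability that a binary variable with P(True) = p_true takes `value`."""
    return p_true if value else 1.0 - p_true


def joint(rain, sprinkler, wet):
    """Joint probability, factorised according to the directed acyclic graph."""
    return (
        bernoulli(P_RAIN, rain)
        * bernoulli(P_SPRINKLER_GIVEN_RAIN[rain], sprinkler)
        * bernoulli(P_WET_GIVEN[(sprinkler, rain)], wet)
    )


def prob_rain_given_wet():
    """P(rain = True | grass wet = True), summing out the sprinkler."""
    numerator = sum(joint(True, s, True) for s in (True, False))
    denominator = sum(
        joint(r, s, True) for r, s in product((True, False), repeat=2)
    )
    return numerator / denominator


print(round(prob_rain_given_wet(), 4))
```

Enumeration is exponential in the number of variables, so it is only practical for toy networks like this one; larger networks need the structure-exploiting inference algorithms discussed elsewhere on this page.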

For example, a Bayesian network could represent the probabilistic relationships between diseases and symptoms.

Bayesian inference. Bayesian inference is a method of statistical inference in which Bayes' theorem is used to update the probability for a hypothesis as more evidence or information becomes available.

Bayesian inference is an important technique in statistics, and especially in mathematical statistics. Bayesian updating is particularly important in the dynamic analysis of a sequence of data. Bayesian inference has found application in a wide range of activities, including science, engineering, philosophy, medicine, sport, and law. In the philosophy of decision theory, Bayesian inference is closely related to subjective probability, often called "Bayesian probability".

Belief propagation. Belief propagation, also known as sum-product message passing, is a message-passing algorithm for performing inference on graphical models, such as Bayesian networks and Markov random fields.

It calculates the marginal distribution for each unobserved node, conditional on any observed nodes. Belief propagation is commonly used in artificial intelligence and information theory and has demonstrated empirical success in numerous applications including low-density parity-check codes, turbo codes, free energy approximation, and satisfiability.[1]
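As a rough illustration of sum-product message passing, the sketch below runs it on the simplest possible graph, a chain-structured Markov random field, where the algorithm is exact. The function names, the potentials, and the choice to encode an observed node by clamping its unary potential to an indicator vector are all assumptions made for this example. A brute-force enumeration is included only as a cross-check.

```python
from itertools import product


def chain_marginals(unary, pairwise):
    """Sum-product on a chain: unary[i][x] and pairwise[i][x][y] link node i to i+1."""
    n, k = len(unary), len(unary[0])
    fwd = [[1.0] * k for _ in range(n)]  # messages flowing left to right
    bwd = [[1.0] * k for _ in range(n)]  # messages flowing right to left
    for i in range(n - 1):
        for y in range(k):
            fwd[i + 1][y] = sum(
                fwd[i][x] * unary[i][x] * pairwise[i][x][y] for x in range(k)
            )
    for i in range(n - 2, -1, -1):
        for x in range(k):
            bwd[i][x] = sum(
                pairwise[i][x][y] * unary[i + 1][y] * bwd[i + 1][y] for y in range(k)
            )
    marginals = []
    for i in range(n):
        # A node's belief is its local potential times both incoming messages.
        belief = [fwd[i][x] * unary[i][x] * bwd[i][x] for x in range(k)]
        total = sum(belief)
        marginals.append([b / total for b in belief])
    return marginals


def brute_force_marginals(unary, pairwise):
    """Reference answer obtained by enumerating every joint assignment."""
    n, k = len(unary), len(unary[0])
    totals = [[0.0] * k for _ in range(n)]
    for states in product(range(k), repeat=n):
        weight = 1.0
        for i, x in enumerate(states):
            weight *= unary[i][x]
        for i in range(n - 1):
            weight *= pairwise[i][states[i]][states[i + 1]]
        for i, x in enumerate(states):
            totals[i][x] += weight
    return [[t / sum(row) for t in row] for row in totals]


# Three binary nodes; the last one is observed in state 1 (indicator potential).
unary = [[0.6, 0.4], [1.0, 1.0], [0.0, 1.0]]
pairwise = [
    [[0.9, 0.1], [0.2, 0.8]],
    [[0.7, 0.3], [0.3, 0.7]],
]
marginals = chain_marginals(unary, pairwise)
print(marginals)
```

On graphs with cycles the same message updates are iterated until they settle, and the resulting beliefs are in general only approximate.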

Subjective logic. Subjective logic is a type of probabilistic logic that explicitly takes uncertainty and belief ownership into account. In general, subjective logic is suitable for modeling and analysing situations involving uncertainty and incomplete knowledge.[1][2] For example, it can be used for modeling trust networks and for analysing Bayesian networks. Arguments in subjective logic are subjective opinions about propositions. A binomial opinion applies to a single proposition, and can be represented as a Beta distribution. A multinomial opinion applies to a collection of propositions, and can be represented as a Dirichlet distribution. Through the correspondence between opinions and Beta/Dirichlet distributions, subjective logic provides an algebra for these functions.
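The correspondence between a binomial opinion and a Beta distribution can be sketched as follows. The text above does not spell out the parameterisation, so this example assumes the commonly used one: an opinion is a tuple of belief, disbelief, uncertainty, and base rate with belief + disbelief + uncertainty = 1, and the mapping uses a non-informative prior weight of 2. The class and method names are hypothetical.

```python
from dataclasses import dataclass

PRIOR_WEIGHT = 2.0  # assumed non-informative prior weight


@dataclass(frozen=True)
class BinomialOpinion:
    belief: float
    disbelief: float
    uncertainty: float
    base_rate: float

    def __post_init__(self):
        # The three masses must form a partition of unity.
        if abs(self.belief + self.disbelief + self.uncertainty - 1.0) > 1e-9:
            raise ValueError("belief + disbelief + uncertainty must equal 1")

    def projected_probability(self):
        """Belief plus the share of uncertainty allotted by the base rate."""
        return self.belief + self.base_rate * self.uncertainty

    def to_beta(self):
        """Return (alpha, beta) of the corresponding Beta distribution."""
        if self.uncertainty == 0:
            raise ValueError("an opinion with zero uncertainty has no finite Beta parameters")
        alpha = PRIOR_WEIGHT * self.belief / self.uncertainty + PRIOR_WEIGHT * self.base_rate
        beta = PRIOR_WEIGHT * self.disbelief / self.uncertainty + PRIOR_WEIGHT * (1.0 - self.base_rate)
        return alpha, beta


opinion = BinomialOpinion(belief=0.6, disbelief=0.2, uncertainty=0.2, base_rate=0.5)
alpha, beta = opinion.to_beta()
beta_mean = alpha / (alpha + beta)
print(alpha, beta, beta_mean, opinion.projected_probability())
```

Under these assumptions the mean of the Beta distribution coincides with the opinion's projected probability, which is one way to sanity-check the mapping.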

A fundamental aspect of the human condition is that nobody can ever determine with absolute certainty whether a proposition about the world is true or false.

Statistical relational learning. As is evident from the characterization above, the field is not strictly limited to learning aspects; it is equally concerned with reasoning (specifically probabilistic inference) and knowledge representation. Therefore, alternative terms that reflect the main foci of the field include statistical relational learning and reasoning (emphasizing the importance of reasoning) and first-order probabilistic languages (emphasizing the key properties of the languages with which models are represented).

Machine learning. Machine learning is a subfield of computer science[1] that evolved from the study of pattern recognition and computational learning theory in artificial intelligence.[1] Machine learning explores the construction and study of algorithms that can learn from and make predictions on data.[2] Such algorithms operate by building a model from example inputs in order to make data-driven predictions or decisions,[3]:2 rather than following strictly static program instructions. Machine learning is closely related to and often overlaps with computational statistics, a discipline that also specializes in prediction-making.

It has strong ties to mathematical optimization, which delivers methods, theory and application domains to the field. Machine learning is employed in a range of computing tasks where designing and programming explicit, rule-based algorithms is infeasible. Example applications include spam filtering, optical character recognition (OCR),[4] search engines and computer vision.

Abductive reasoning. Abductive reasoning (also called abduction,[1] abductive inference[2] or retroduction[3]) is a form of logical inference that goes from an observation to a hypothesis that accounts for the observation, ideally seeking to find the simplest and most likely explanation. In abductive reasoning, unlike in deductive reasoning, the premises do not guarantee the conclusion. One can understand abductive reasoning as "inference to the best explanation".[4]

Probabilistic logics and the synthesis of reliable organisms from unreliable components.