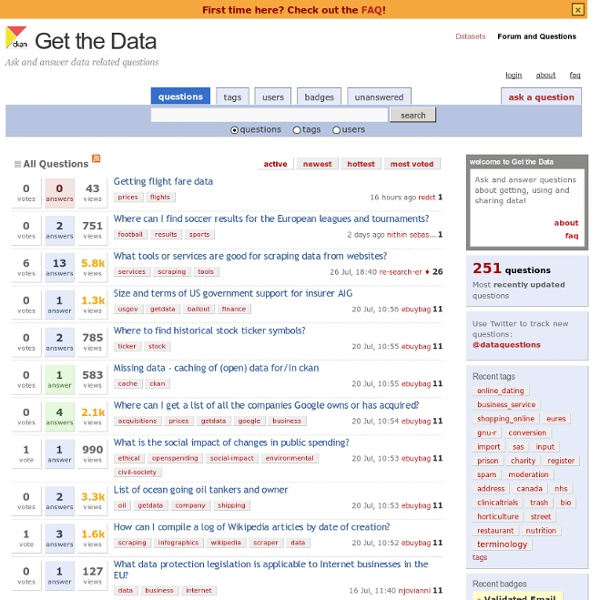


Behind the Scenes at the Guardian Datablog

Figure 17. The Guardian Datablog production process visualized (The Guardian)

When we launched the Datablog, we had no idea who would be interested in raw data, statistics and visualizations. The Guardian Datablog, which I edit, was to be a small blog offering the full datasets behind our news stories. For me, as a news editor and journalist working with graphics, it was a logical extension of work I was already doing: accumulating new datasets and wrangling with them to try to make sense of the news stories of the day.

The question I was asked has been answered for us. We’ve had the MPs’ expenses scandal, Britain’s most unexpected piece of data journalism, and the resulting fallout has meant Westminster is now committed to releasing huge amounts of data every year. We had a general election where each of the main political parties was committed to data transparency, opening our own data vaults to the world. It may seem new, but really it’s not.

