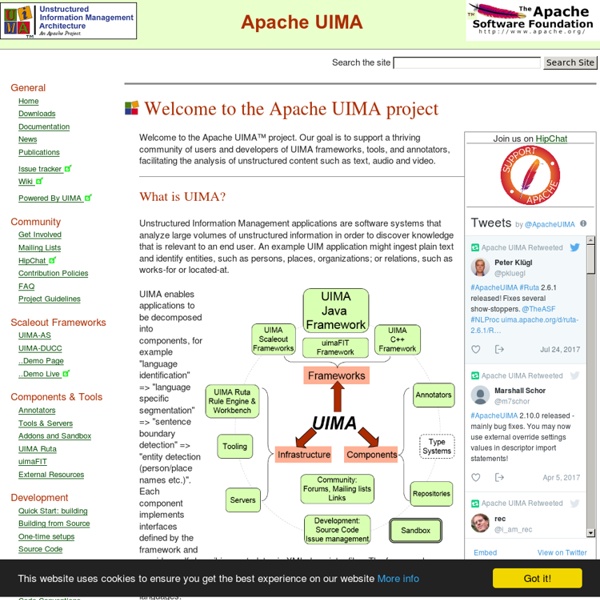

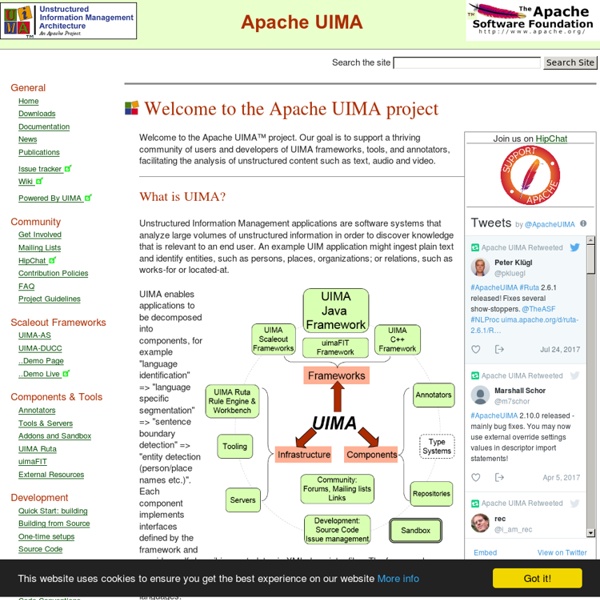

Apache UIMA


The Stanford NLP (Natural Language Processing) Group

A Suite of Core NLP Tools

About

Stanford CoreNLP provides a set of natural language analysis tools that take raw text as input and give the base forms of words, their parts of speech, and whether they are names of companies, people, etc. The tools also normalize dates, times, and numeric quantities; mark up the structure of sentences in terms of phrases and word dependencies; indicate which noun phrases refer to the same entities; and indicate sentiment. Stanford CoreNLP is an integrated framework. Its goal is to make it very easy to apply a bunch of linguistic analysis tools to a piece of text.

Citing Stanford CoreNLP

If you're just running the CoreNLP pipeline, please cite this CoreNLP demo paper.

Download

Download Stanford CoreNLP version 3.5.2.

GitHub: Here is the Stanford CoreNLP GitHub site.

Usage

Javadoc
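Each analysis listed in the About paragraph is selected in CoreNLP by naming an annotator in the `annotators` property. The sketch below only builds that configuration with the standard `java.util.Properties` class, so it runs without the CoreNLP jars. The class name `PipelineConfig` and its helper methods are hypothetical, and the mapping from analyses to annotator names is my reading of the 3.5.x annotator set, not something the page itself spells out.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Properties;

// Hypothetical helper: builds the configuration a CoreNLP pipeline would read.
public class PipelineConfig {

    // Annotator name -> the analysis from the About text it is assumed to cover.
    // Insertion order matters: later annotators depend on earlier ones.
    static Map<String, String> annotatorsByAnalysis() {
        Map<String, String> m = new LinkedHashMap<>();
        m.put("tokenize", "split raw text into tokens");
        m.put("ssplit", "group tokens into sentences");
        m.put("pos", "parts of speech");
        m.put("lemma", "base forms of words");
        m.put("ner", "names of companies, people; normalized dates, times, numbers");
        m.put("parse", "phrase structure and word dependencies");
        m.put("dcoref", "noun phrases that refer to the same entity");
        m.put("sentiment", "sentiment");
        return m;
    }

    // Joins the annotator names into the comma-separated "annotators" property.
    static Properties buildProps() {
        Properties props = new Properties();
        props.setProperty("annotators",
                String.join(",", annotatorsByAnalysis().keySet()));
        return props;
    }

    public static void main(String[] args) {
        // Print each annotator next to the analysis it is assumed to provide.
        annotatorsByAnalysis().forEach(
                (name, analysis) -> System.out.println(name + ": " + analysis));
        System.out.println("annotators=" + buildProps().getProperty("annotators"));
    }
}
```

With the CoreNLP jars on the classpath, a `Properties` object like this would typically be passed to the `StanfordCoreNLP` constructor; dropping an annotator from the map removes that analysis, provided no later annotator depends on it.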

Related:
